Denis Trystram

Handling Delayed Feedback in Distributed Online Optimization: A Projection-Free Approach

Feb 03, 2024

Abstract: Learning at the edges has become increasingly important as large quantities of data are continually generated locally. Among other things, this paradigm requires algorithms that are simple (so that they can be executed by local devices), robust (against uncertainty, as data are continually generated), and reliable in a distributed manner under network issues, especially delays. In this study, we investigate the problem of online convex optimization (OCO) under adversarial delayed feedback. We propose two projection-free algorithms, one for the centralized setting and one for the distributed setting, each carefully designed to achieve a regret bound of O(\sqrt{B}), where B is the sum of delays. This bound is optimal for the OCO problem in the delay setting, and the algorithms attain it while remaining projection-free. We provide an extensive theoretical study and experimentally validate the performance of our algorithms by comparing them with existing ones on real-world problems.

One Gradient Frank-Wolfe for Decentralized Online Convex and Submodular Optimization

Oct 30, 2022

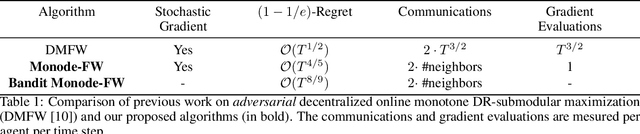

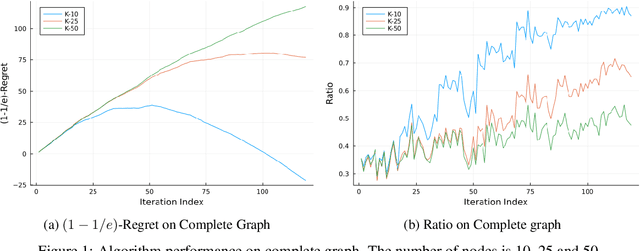

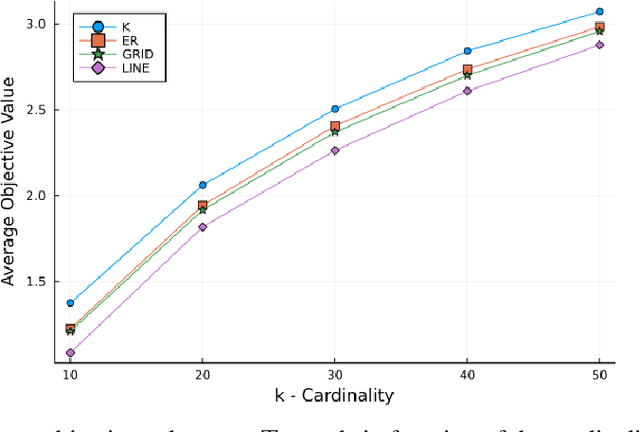

Abstract: Decentralized learning has been studied intensively in recent years, motivated by its wide applications in the context of federated learning. The majority of previous research focuses on the offline setting, in which the objective function is static. However, the offline setting becomes unrealistic in numerous machine learning applications in which massive amounts of data change over time. In this paper, we propose decentralized online algorithms for convex and continuous DR-submodular optimization, two classes of functions that are present in a variety of machine learning problems. Our algorithms achieve performance guarantees comparable to those in the centralized offline setting. Moreover, on average, each participant performs only a single gradient computation per time step. Subsequently, we extend our algorithms to the bandit setting. Finally, we illustrate the competitive performance of our algorithms in real-world experiments.

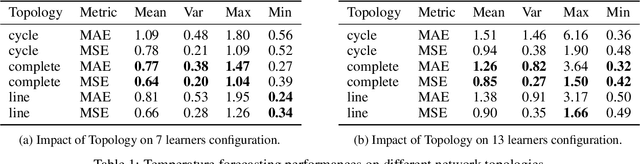

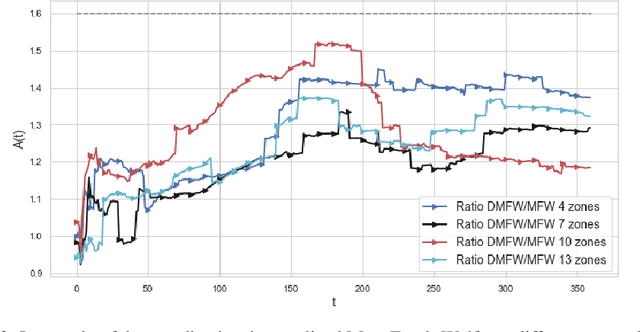

Online Decentralized Frank-Wolfe: From theoretical bound to applications in smart-building

Jul 31, 2022

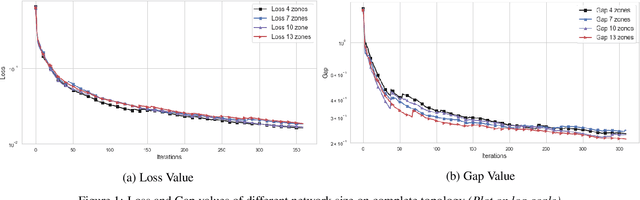

Abstract: The design of decentralized learning algorithms is important in a fast-growing world in which data are distributed over participants with limited local computation resources and communication. In this direction, we propose an online algorithm that minimizes non-convex loss functions aggregated from individual data/models distributed over a network. We provide a theoretical performance guarantee for our algorithm and demonstrate its utility on a real-life smart building.
