Deepak Mishra

Generalizable IoT Traffic Representations for Cross-Network Device Identification

Jan 27, 2026

Abstract:Machine learning models have demonstrated strong performance in classifying network traffic and identifying Internet-of-Things (IoT) devices, enabling operators to discover and manage IoT assets at scale. However, many existing approaches rely on end-to-end supervised pipelines or task-specific fine-tuning, resulting in traffic representations that are tightly coupled to labeled datasets and deployment environments, which can limit generalizability. In this paper, we study the problem of learning generalizable traffic representations for IoT device identification. We design compact encoder architectures that learn per-flow embeddings from unlabeled IoT traffic and evaluate them using a frozen-encoder protocol with a simple supervised classifier. Our specific contributions are threefold. (1) We develop unsupervised encoder-decoder models that learn compact traffic representations from unlabeled IoT network flows and assess their quality through reconstruction-based analysis. (2) We show that these learned representations can be used effectively for IoT device-type classification using simple, lightweight classifiers trained on frozen embeddings. (3) We provide a systematic benchmarking study against the state-of-the-art pretrained traffic encoders, showing that larger models do not necessarily yield more robust representations for IoT traffic. Using more than 18 million real IoT traffic flows collected across multiple years and deployment environments, we learn traffic representations from unlabeled data and evaluate device-type classification on disjoint labeled subsets, achieving macro F1-scores exceeding 0.9 for device-type classification and demonstrating robustness under cross-environment deployment.

Robust and Secure Blockage-Aware Pinching Antenna-assisted Wireless Communication

Jan 10, 2026

Abstract:In this work, we investigate a blockage-aware pinching antenna (PA) system designed for secure and robust wireless communication. The considered system comprises a base station equipped with multiple waveguides, each hosting multiple PAs, and serves multiple single-antenna legitimate users in the presence of multi-antenna eavesdroppers under imperfect channel state information (CSI). To safeguard confidential transmissions, artificial noise (AN) is deliberately injected to degrade the eavesdropping channels. Recognizing that conventional linear CSI-error bounds become overly conservative for spatially distributed PA architectures, we develop new geometry-aware uncertainty sets that jointly characterize eavesdroppers' position and array-orientation errors. Building upon these sets, we formulate a robust joint optimization problem that determines per-waveguide beamforming and AN covariance, individual PA power-ratio allocation, and PA positions to maximize the system sum rate subject to secrecy constraints. The highly non-convex design problem is efficiently addressed via a low-complexity iterative algorithm that capitalizes on block coordinate descent, penalty-based methods, majorization-minimization, the S-procedure, and Lipschitz-based surrogate functions. Simulation results demonstrate that the sum rate of the proposed algorithm exceeds that of conventional fixed-antenna systems by 4.7 dB, offering substantially improved rate and secrecy performance. In particular, (i) adaptive PA positioning preserves LoS to legitimate users while effectively exploiting waveguide geometry to disrupt eavesdropper channels, and (ii) neglecting blockage effects in the PA system significantly impacts the system design, leading to performance degradation and inadequate secrecy guarantees.

RobSurv: Vector Quantization-Based Multi-Modal Learning for Robust Cancer Survival Prediction

May 05, 2025

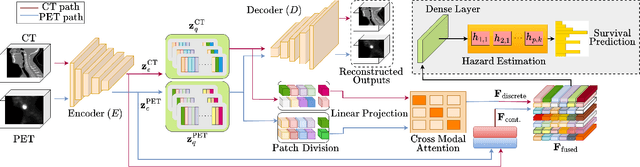

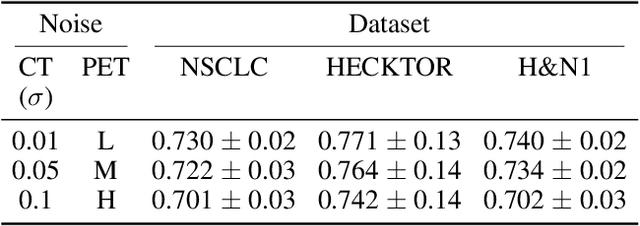

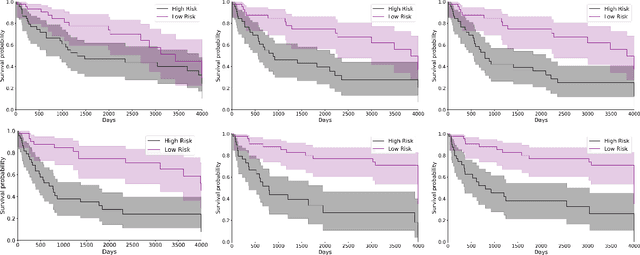

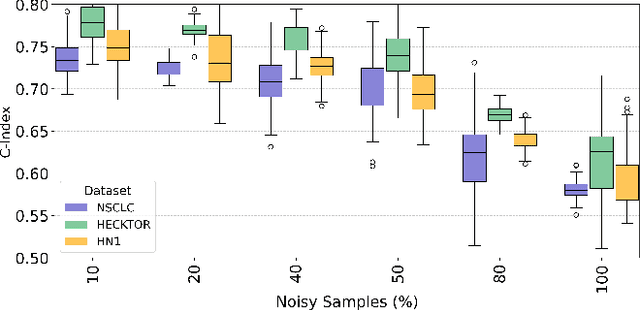

Abstract:Cancer survival prediction using multi-modal medical imaging presents a critical challenge in oncology, mainly due to the vulnerability of deep learning models to noise and protocol variations across imaging centers. Current approaches struggle to extract consistent features from heterogeneous CT and PET images, limiting their clinical applicability. We address these challenges by introducing RobSurv, a robust deep-learning framework that leverages vector quantization for resilient multi-modal feature learning. The key innovation of our approach lies in its dual-path architecture: one path maps continuous imaging features to learned discrete codebooks for noise-resistant representation, while the parallel path preserves fine-grained details through continuous feature processing. This dual representation is integrated through a novel patch-wise fusion mechanism that maintains local spatial relationships while capturing global context via Transformer-based processing. In extensive evaluations across three diverse datasets (HECKTOR, H&N1, and NSCLC Radiogenomics), RobSurv demonstrates superior performance, achieving concordance indices of 0.771, 0.742, and 0.734, respectively, significantly outperforming existing methods. Most notably, our model maintains robust performance even under severe noise conditions, with performance degradation of only 3.8-4.5% compared to 8-12% in baseline methods. These results, combined with strong generalization across different cancer types and imaging protocols, establish RobSurv as a promising solution for reliable clinical prognosis that can enhance treatment planning and patient care.
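
The discrete path described in this abstract rests on vector quantization: each continuous feature vector is replaced by its nearest entry in a codebook. The sketch below is only an illustration of that general lookup, not the RobSurv implementation. The function name `quantize`, the toy two-dimensional codebook, and the squared Euclidean metric are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def quantize(
    features: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> Tuple[List[int], List[List[float]]]:
    """Map each feature vector to its nearest codebook entry.

    Nearest is measured by squared Euclidean distance (an assumption;
    the abstract does not state the metric).
    """
    indices: List[int] = []
    quantized: List[List[float]] = []
    for vec in features:
        # Squared distance from this vector to every codebook entry.
        dists = [sum((a - b) ** 2 for a, b in zip(vec, code)) for code in codebook]
        idx = min(range(len(codebook)), key=dists.__getitem__)
        indices.append(idx)
        quantized.append(list(codebook[idx]))
    return indices, quantized


# Hypothetical toy codebook with three entries.
codebook = [[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]]
clean = [[0.9, 1.1], [3.8, 0.2]]
noisy = [[1.2, 0.8], [4.3, -0.1]]  # the same features with small perturbations

clean_idx, clean_q = quantize(clean, codebook)
noisy_idx, noisy_q = quantize(noisy, codebook)
```

In this toy case the clean and perturbed inputs select the same codebook entries, which is the intuition behind calling a quantized representation noise-resistant: small perturbations that do not cross a cell boundary leave the discrete code unchanged.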

Fine-Grained Rib Fracture Diagnosis with Hyperbolic Embeddings: A Detailed Annotation Framework and Multi-Label Classification Model

Apr 16, 2025

Abstract:Accurate rib fracture identification and classification are essential for treatment planning. However, existing datasets often lack fine-grained annotations, particularly regarding rib fracture characterization, type, and precise anatomical location on individual ribs. To address this, we introduce a novel rib fracture annotation protocol tailored for fracture classification. Further, we enhance fracture classification by leveraging cross-modal embeddings that bridge radiological images and clinical descriptions. Our approach employs hyperbolic embeddings to capture the hierarchical nature of fractures, mapping visual features and textual descriptions into a shared non-Euclidean manifold. This framework enables more nuanced similarity computations between imaging characteristics and clinical descriptions, accounting for the inherent hierarchical relationships in fracture taxonomy. Experimental results demonstrate that our approach outperforms existing methods across multiple classification tasks, with average recall improvements of 6% on the AirRib dataset and 17.5% on the public RibFrac dataset.
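
To make the idea of similarity in a hyperbolic space concrete, the sketch below computes the geodesic distance in the Poincaré ball, one common model of hyperbolic geometry. Whether the paper uses this model is an assumption; the abstract only says "hyperbolic embeddings". The function name `poincare_distance` and the sample points are hypothetical.

```python
import math
from typing import Sequence


def poincare_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Geodesic distance between two points inside the unit Poincare ball.

    d(u, v) = arcosh(1 + 2 * ||u - v||^2 / ((1 - ||u||^2) * (1 - ||v||^2)))
    """
    sq_u = sum(a * a for a in u)
    sq_v = sum(b * b for b in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))


# The same Euclidean gap (0.1) is a much larger hyperbolic distance
# near the boundary than near the origin.
near_origin = poincare_distance([0.0, 0.0], [0.1, 0.0])
near_boundary = poincare_distance([0.8, 0.0], [0.9, 0.0])
```

Because distances grow rapidly toward the boundary, tree-like hierarchies can be embedded with general categories near the origin and specific ones near the edge, which is the usual motivation for hyperbolic embeddings of taxonomies.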

U-WNO: U-Net-enhanced Wavelet Neural Operator for fetal head segmentation

Nov 25, 2024

Abstract:This article describes the development of a novel U-Net-enhanced Wavelet Neural Operator (U-WNO), which combines wavelet decomposition, operator learning, and an encoder-decoder mechanism. This approach harnesses the strength of wavelets in time-frequency localization of functions and combines down-sampling and up-sampling operations to generate the segmentation map, enabling accurate tracking of patterns in the spatial domain and effective learning of the functional mappings needed for regional segmentation. By bridging the gap between theoretical advancements and practical applications, the U-WNO holds potential for significant impact in multiple scientific and industrial fields, facilitating more accurate decision-making and improved operational efficiencies. The operator is demonstrated for different pregnancy trimesters, utilizing two-dimensional ultrasound images.

RibCageImp: A Deep Learning Framework for 3D Ribcage Implant Generation

Nov 14, 2024

Abstract:The recovery of damaged or resected ribcage structures requires precise, custom-designed implants to restore the integrity and functionality of the thoracic cavity. Traditional implant design methods rely mainly on manual processes, making them time-consuming and susceptible to variability. In this work, we explore the feasibility of automated ribcage implant generation using deep learning. We present a framework based on a 3D U-Net architecture that processes CT scans to generate patient-specific implant designs. To the best of our knowledge, this is the first investigation into automated thoracic implant generation using deep learning approaches. Our preliminary results, while moderate, highlight both the potential and the significant challenges in this complex domain. These findings establish a foundation for future research in automated ribcage reconstruction and identify key technical challenges that need to be addressed for practical implementation.

Leveraging Auxiliary Classification for Rib Fracture Segmentation

Nov 14, 2024

Abstract:Thoracic trauma often results in rib fractures, which demand swift and accurate diagnosis for effective treatment. However, detecting these fractures on rib CT scans poses considerable challenges, involving the analysis of many image slices in sequence. Despite notable advancements in algorithms for automated fracture segmentation, the persisting challenges stem from the diverse shapes and sizes of these fractures. To address these issues, this study introduces a sophisticated deep-learning model with an auxiliary classification task designed to enhance the accuracy of rib fracture segmentation. The auxiliary classification task is crucial in distinguishing between fractured ribs and negative regions, encompassing non-fractured ribs and surrounding tissues, from the patches obtained from CT scans. By leveraging this auxiliary task, the model aims to improve feature representation at the bottleneck layer by highlighting the regions of interest. Experimental results on the RibFrac dataset demonstrate significant improvement in segmentation performance.

Client Contribution Normalization for Enhanced Federated Learning

Nov 10, 2024

Abstract:Mobile devices, including smartphones and laptops, generate decentralized and heterogeneous data, presenting significant challenges for traditional centralized machine learning models due to substantial communication costs and privacy risks. Federated Learning (FL) offers a promising alternative by enabling collaborative training of a global model across decentralized devices without data sharing. However, FL faces challenges due to statistical heterogeneity among clients, where non-independent and identically distributed (non-IID) data impedes model convergence and performance. This paper focuses on data-dependent heterogeneity in FL and proposes a novel approach leveraging mean latent representations extracted from locally trained models. The proposed method normalizes client contributions based on these representations, allowing the central server to estimate and adjust for heterogeneity during aggregation. This normalization enhances the global model's generalization and mitigates the limitations of conventional federated averaging methods. The main contributions include introducing a normalization scheme using mean latent representations to handle statistical heterogeneity in FL, demonstrating the seamless integration with existing FL algorithms to improve performance in non-IID settings, and validating the approach through extensive experiments on diverse datasets. Results show significant improvements in model accuracy and consistency across skewed distributions. Our experiments with six FL schemes: FedAvg, FedProx, FedBABU, FedNova, SCAFFOLD, and SGDM highlight the robustness of our approach. This research advances FL by providing a practical and computationally efficient solution for statistical heterogeneity, contributing to the development of more reliable and generalized machine learning models.

Enhanced Survival Prediction in Head and Neck Cancer Using Convolutional Block Attention and Multimodal Data Fusion

Oct 29, 2024

Abstract:Accurate survival prediction in head and neck cancer (HNC) is essential for guiding clinical decision-making and optimizing treatment strategies. Traditional models, such as Cox proportional hazards, have been widely used but are limited in their ability to handle complex multi-modal data. This paper proposes a deep learning-based approach leveraging CT and PET imaging modalities to predict survival outcomes in HNC patients. Our method integrates feature extraction with a Convolutional Block Attention Module (CBAM) and a multi-modal data fusion layer that combines imaging data to generate a compact feature representation. The final prediction is achieved through a fully parametric discrete-time survival model, allowing for flexible hazard functions that overcome the limitations of traditional survival models. We evaluated our approach using the HECKTOR and HEAD-NECK-RADIOMICS-HN1 datasets, demonstrating its superior performance compared to conventional statistical and machine learning models. The results indicate that our deep learning model significantly improves survival prediction accuracy, offering a robust tool for personalized treatment planning in HNC.
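
A discrete-time survival model of the kind mentioned in this abstract typically predicts one conditional hazard per time interval and derives the survival curve as a running product. The sketch below shows that general construction and the matching log-likelihood. It is not the paper's model: the function names, the example hazards, and the censoring convention (a censored patient is treated as having survived the whole interval of last follow-up) are assumptions.

```python
import math
from typing import List, Sequence


def survival_curve(hazards: Sequence[float]) -> List[float]:
    """S(t_k) = prod_{j <= k} (1 - h_j): probability of surviving past interval k."""
    curve: List[float] = []
    running = 1.0
    for h in hazards:
        running *= 1.0 - h
        curve.append(running)
    return curve


def log_likelihood(hazards: Sequence[float], interval: int, event: bool) -> float:
    """Log-likelihood of one patient, given the interval index of the last observation.

    event=True:  survived all earlier intervals, then the event occurred in `interval`.
    event=False: censored; assumed to have survived through the end of `interval`.
    """
    ll = sum(math.log(1.0 - h) for h in hazards[:interval])
    if event:
        ll += math.log(hazards[interval])
    else:
        ll += math.log(1.0 - hazards[interval])
    return ll


# Hypothetical per-interval hazards, e.g. as produced by a network's sigmoid outputs.
hazards = [0.1, 0.2, 0.5]
curve = survival_curve(hazards)
ll_event = log_likelihood(hazards, interval=1, event=True)
ll_censored = log_likelihood(hazards, interval=1, event=False)
```

Since each interval has its own hazard, the hazard function can take any shape over time, in contrast to the proportional-hazards assumption of the Cox model.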

Survival Prediction in Lung Cancer through Multi-Modal Representation Learning

Sep 30, 2024

Abstract:Survival prediction is a crucial task associated with cancer diagnosis and treatment planning. This paper presents a novel approach to survival prediction by harnessing comprehensive information from CT and PET scans, along with associated genomic data. Current methods rely on either a single modality or the integration of multiple modalities for prediction without adequately addressing associations across patients or modalities. We aim to develop a robust predictive model for survival outcomes by integrating multi-modal imaging data with genetic information while accounting for associations across patients and modalities. We learn representations for each modality via a self-supervised module and harness the semantic similarities across the patients to ensure the embeddings are aligned closely. However, optimizing solely for global relevance is inadequate, as many pairs sharing similar high-level semantics, such as tumor type, are inadvertently pushed apart in the embedding space. To address this issue, we use a cross-patient module (CPM) designed to harness inter-subject correspondences. The CPM aims to bring together embeddings from patients with similar disease characteristics. Our experimental evaluation on a dataset of Non-Small Cell Lung Cancer (NSCLC) patients demonstrates the effectiveness of our approach in predicting survival outcomes, outperforming state-of-the-art methods.
