Dawsin Blanchard

Adaptive County Level COVID-19 Forecast Models: Analysis and Improvement

Jul 01, 2020

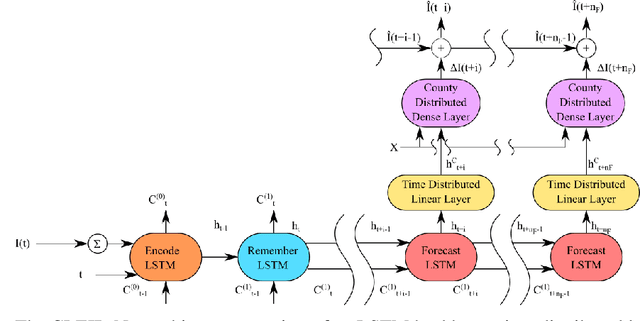

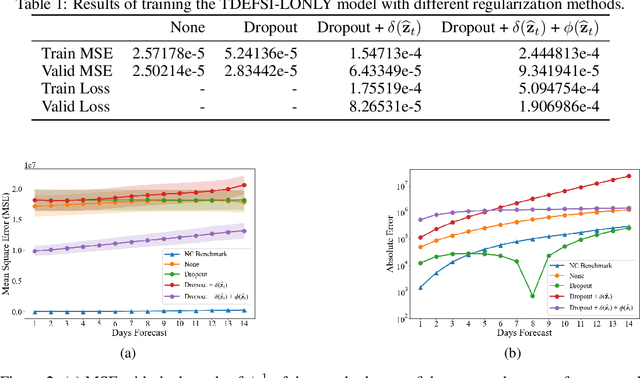

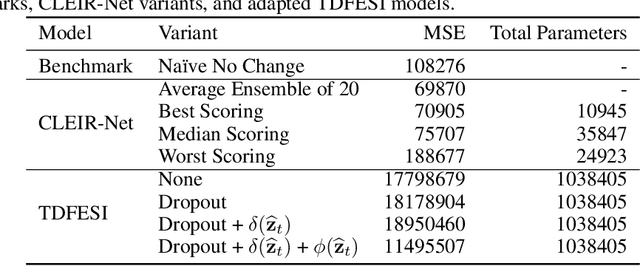

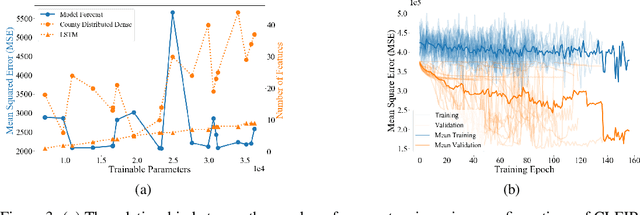

Abstract: Accurately forecasting county level COVID-19 confirmed cases is crucial to optimizing medical resources. Forecasting emerging outbreaks poses a particular challenge because many existing forecasting techniques learn from historical seasonal trends. Recurrent neural networks (RNNs) with LSTM-based cells are a logical choice of model due to their ability to learn temporal dynamics. In this paper, we adapt the state and county level influenza model, TDEFSI-LONLY, proposed in Wang et al. [2020], to national and county level COVID-19 data. We show that this model forecasts the current pandemic poorly. We analyze the two-week-ahead forecasting capabilities of the TDEFSI-LONLY model with combinations of regularization techniques. Effective training of the TDEFSI-LONLY model requires data augmentation; to overcome this challenge, we utilize an SEIR model and present an inter-county mixing extension to this model to simulate sufficient training data. Further, we propose an alternate forecast model, County Level Epidemiological Inference Recurrent Network (CLEIR-Net), that trains an LSTM backbone on national confirmed cases to learn a low dimensional time pattern and utilizes a time distributed dense layer to learn individual county confirmed case changes each day for a two-week forecast. We show that the best, worst, and median state forecasts made using the CLEIR-Net model are, respectively, New York, South Carolina, and Montana.
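The abstract mentions an SEIR model extended with inter-county mixing to simulate training data, but it does not give the equations. The sketch below is one common way such an extension could look, not the paper's formulation: a discrete-time SEIR model in which each county's force of infection is a mixing-weighted average of prevalence across counties. The function name `simulate_seir`, the `mixing` matrix convention, and all parameter values are assumptions made for illustration.

```python
def simulate_seir(populations, initial_infected, mixing,
                  beta=0.5, sigma=0.2, gamma=0.1, days=100):
    """Discrete-time SEIR with inter-county mixing (illustrative assumption).

    mixing[i][j] is the assumed fraction of county i's contacts that are
    made with residents of county j; each row should sum to 1.
    Returns a list with one entry per day (day 0 included); each entry is
    a list of cumulative cases per county.
    """
    n = len(populations)
    S = [float(populations[i] - initial_infected[i]) for i in range(n)]
    E = [0.0] * n
    I = [float(x) for x in initial_infected]
    R = [0.0] * n
    cumulative = [float(x) for x in initial_infected]
    history = [list(cumulative)]

    for _ in range(days):
        # Prevalence is computed once per day so all counties update
        # synchronously from the same snapshot.
        prevalence = [I[j] / populations[j] for j in range(n)]
        for i in range(n):
            # Force of infection: local and imported exposure combined.
            force = beta * sum(mixing[i][j] * prevalence[j] for j in range(n))
            new_exposed = min(S[i], force * S[i])
            new_infectious = sigma * E[i]
            new_recovered = gamma * I[i]
            S[i] -= new_exposed
            E[i] += new_exposed - new_infectious
            I[i] += new_infectious - new_recovered
            R[i] += new_recovered
            # Count a case when an exposed person becomes infectious.
            cumulative[i] += new_infectious
        history.append(list(cumulative))
    return history


# Two hypothetical counties; only the first starts with infections.
isolated = simulate_seir([1000, 1000], [10, 0], [[1.0, 0.0], [0.0, 1.0]])
mixed = simulate_seir([1000, 1000], [10, 0], [[0.9, 0.1], [0.1, 0.9]])
```

With the identity mixing matrix the second county never records a case, while even a small off-diagonal mixing weight seeds an outbreak there. Curves generated this way under varied parameters are the kind of synthetic series that could augment scarce real data.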

Slimming Neural Networks using Adaptive Connectivity Scores

Jun 26, 2020

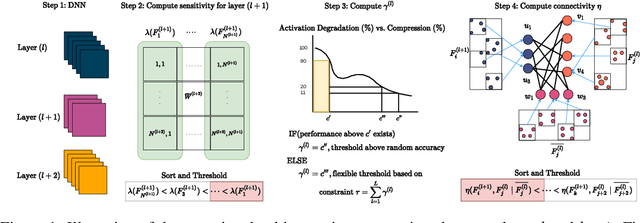

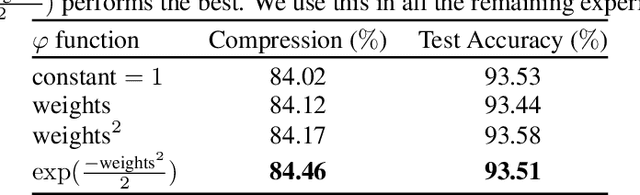

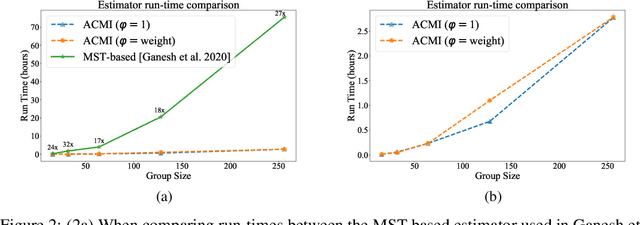

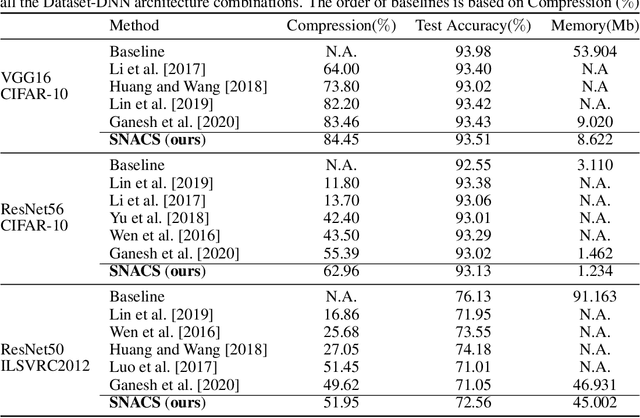

Abstract: There are two broad approaches to deep neural network (DNN) pruning: 1) applying a deterministic constraint on the weight matrices, which takes advantage of their ease of implementation and the learned structures of the weight matrix, and 2) using a probabilistic framework aimed at maintaining the flow of information between layers, which leverages the connections between filters and their downstream impact. Each approach's advantage supplements the missing portions of the alternate approach, yet no one has combined and fully capitalized on both of them. Further, there are some common practical issues that affect both, e.g., intense manual effort to analyze sensitivity and set the upper pruning limits of layers. In this work, we propose Slimming Neural networks using Adaptive Connectivity Measures (SNACS), an algorithm that uses a probabilistic framework for compression while incorporating weight-based constraints at multiple levels to capitalize on both their strengths and overcome previous issues. We propose a hash-based estimator of Adaptive Conditional Mutual Information (ACMI) to evaluate the connectivity between filters of different layers, which includes a magnitude-based scaling criterion that leverages weight matrices. To reduce the manual effort required to set the upper pruning limit of different layers in a DNN, we propose a set of operating constraints to help set them automatically. Further, we take extended advantage of weight matrices by defining a sensitivity criterion for filters that measures the strength of their contributions to the following layer and highlights critical filters that need to be protected from pruning. We show that our proposed approach is over 17x faster than the nearest comparable method and outperforms all existing pruning approaches on three standard dataset-DNN benchmarks: CIFAR10-VGG16, CIFAR10-ResNet56 and ILSVRC2012-ResNet50.
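The abstract describes a sensitivity criterion that measures how strongly a filter contributes to the following layer, without stating its formula. As a rough illustration only, and not the SNACS definition, the sketch below scores each filter by the total absolute weight that the next layer places on its output channel and then marks the top-scoring filters as protected. The names `filter_sensitivity` and `protected_filters`, the nested-list weight layout, and the `keep_fraction` parameter are all hypothetical.

```python
def filter_sensitivity(next_layer_weights):
    """Score each filter by the next layer's total absolute weight on it.

    next_layer_weights[k][c] is the (flattened) kernel connecting input
    channel c, i.e. the output of filter c in the current layer, to output
    filter k of the following layer. This layout is an assumption.
    """
    num_inputs = len(next_layer_weights[0])
    scores = [0.0] * num_inputs
    for out_filter in next_layer_weights:
        for c, kernel in enumerate(out_filter):
            scores[c] += sum(abs(w) for w in kernel)
    return scores


def protected_filters(scores, keep_fraction=0.25):
    """Return sorted indices of the highest-scoring filters.

    keep_fraction is a hypothetical knob; at least one filter is kept.
    """
    k = max(1, round(keep_fraction * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


# Toy following layer: 2 output filters, 3 input channels, 2 weights each.
weights = [
    [[1.0, -1.0], [0.1, 0.0], [2.0, 2.0]],
    [[0.5, 0.5], [0.0, 0.1], [-3.0, 1.0]],
]
scores = filter_sensitivity(weights)
critical = protected_filters(scores, keep_fraction=0.34)
```

In this toy layer the third channel carries by far the most downstream weight, so it is the one a pruning pass would leave untouched. A full method would combine a score like this with the connectivity estimate rather than use it alone.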
