Davide Domini

Sparse Self-Federated Learning for Energy Efficient Cooperative Intelligence in Society 5.0

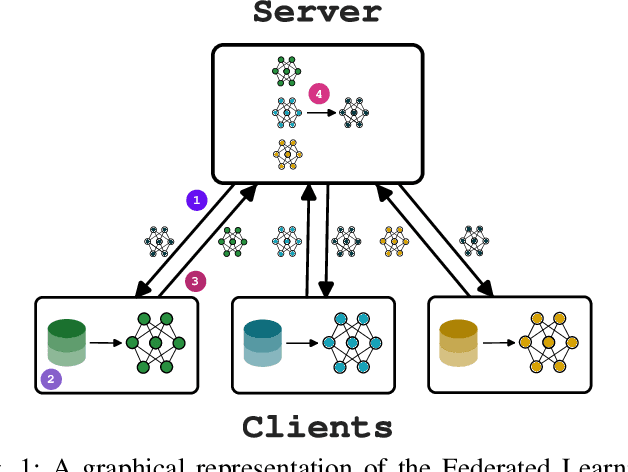

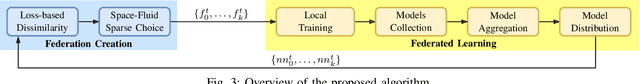

Jul 10, 2025

Abstract: Federated Learning (FL) offers privacy-preserving collaborative intelligence but struggles to meet the sustainability demands of the emerging IoT ecosystems necessary for Society 5.0, a human-centered technological future that balances social advancement with environmental responsibility. The excessive communication bandwidth and computational resources required by traditional FL approaches make them environmentally unsustainable at scale, creating a fundamental conflict with green AI principles as billions of resource-constrained devices attempt to participate. To this end, we introduce Sparse Proximity-based Self-Federated Learning (SParSeFuL), a resource-aware approach that bridges this gap by combining aggregate computing for self-organization with neural network sparsification to reduce energy and bandwidth consumption.
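The abstract does not say which sparsification scheme SParSeFuL uses. As a rough illustration of why sparsification reduces bandwidth, the following sketch assumes simple magnitude-based top-k pruning of a flat weight vector. The function names `sparsify_top_k` and `to_sparse_message` are hypothetical and are not taken from the paper.

```python
def sparsify_top_k(weights, keep_fraction):
    """Zero all but the largest-magnitude entries of a flat weight list.

    Assumption: magnitude pruning stands in for whatever scheme the paper uses.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = max(1, round(len(weights) * keep_fraction))
    # Indices of the k entries with the largest absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def to_sparse_message(weights):
    """Encode only the non-zero entries as (index, value) pairs for transmission."""
    return [(i, w) for i, w in enumerate(weights) if w != 0.0]


weights = [0.05, -1.2, 0.3, 0.0, 2.5, -0.01, 0.7, -0.4]
sparse = sparsify_top_k(weights, keep_fraction=0.25)
message = to_sparse_message(sparse)
print(message)  # two (index, value) pairs instead of eight dense values
```

With `keep_fraction=0.25`, a device would send two index-value pairs instead of eight dense values. The accuracy cost of such pruning is not modelled here.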

ProFed: a Benchmark for Proximity-based non-IID Federated Learning

Mar 26, 2025

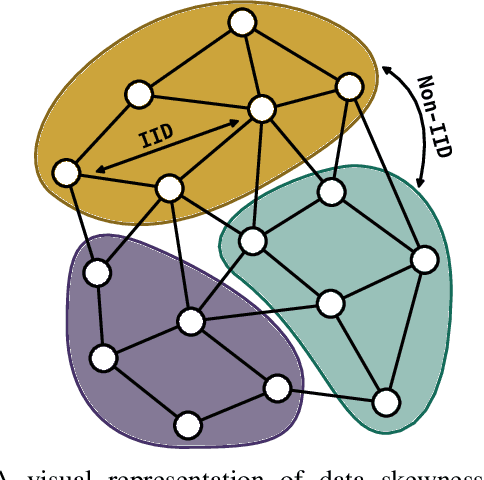

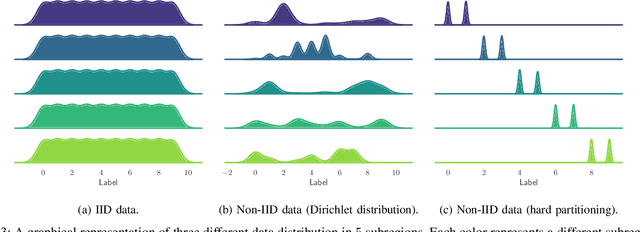

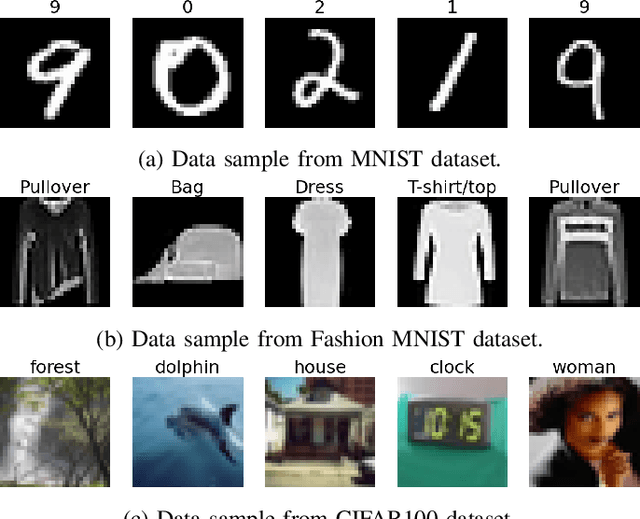

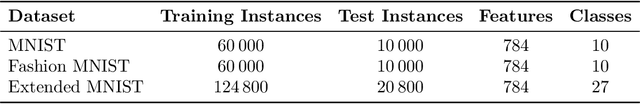

Abstract: In recent years, federated learning (FL) has gained significant attention within the machine learning community. Although various FL algorithms have been proposed in the literature, their performance often degrades when data across clients is non-independently and identically distributed (non-IID). This skewness in data distribution often emerges from geographic patterns, with notable examples including regional linguistic variations in text data or localized traffic patterns in urban environments. Such scenarios result in IID data within specific regions but non-IID data across regions. However, existing FL algorithms are typically evaluated by randomly splitting non-IID data across devices, disregarding their spatial distribution. To address this gap, we introduce ProFed, a benchmark that simulates data splits with varying degrees of skewness across different regions. We incorporate several skewness methods from the literature and apply them to well-known datasets, including MNIST, FashionMNIST, CIFAR-10, and CIFAR-100. Our goal is to provide researchers with a standardized framework to evaluate FL algorithms more effectively and consistently against established baselines.
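To make "IID within a region, non-IID across regions" concrete, here is a minimal sketch of a hard label partition, the most extreme form of label skew. It is not the ProFed API: the function `split_by_region`, its parameters, and the region names are assumptions made for illustration.

```python
import random


def split_by_region(labels, region_classes, devices_per_region, seed=0):
    """Assign sample indices to (region, device) pairs.

    Each region only sees its own classes (non-IID across regions); within a
    region, samples are shuffled and dealt evenly to devices (IID within it).
    """
    rng = random.Random(seed)
    partition = {}
    for region, classes in region_classes.items():
        indices = [i for i, y in enumerate(labels) if y in classes]
        rng.shuffle(indices)
        for d in range(devices_per_region):
            # Every devices_per_region-th sample goes to device d.
            partition[(region, d)] = indices[d::devices_per_region]
    return partition


# Toy dataset: 40 samples with labels 0..3 (hypothetical stand-in for MNIST labels).
labels = [i % 4 for i in range(40)]
region_classes = {"north": {0, 1}, "south": {2, 3}}
partition = split_by_region(labels, region_classes, devices_per_region=2)
for key, idx in partition.items():
    print(key, sorted({labels[i] for i in idx}))
```

Softer skews, where every region sees all classes in different proportions, would replace the hard class filter with a per-region sampling distribution.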

FBFL: A Field-Based Coordination Approach for Data Heterogeneity in Federated Learning

Feb 12, 2025

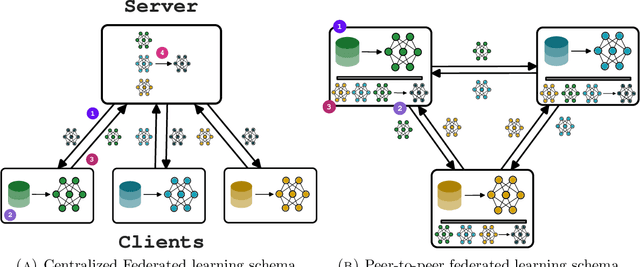

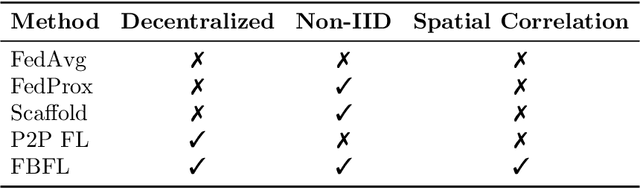

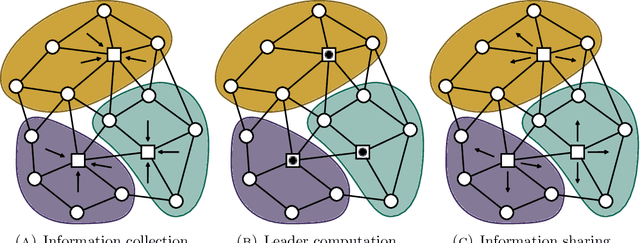

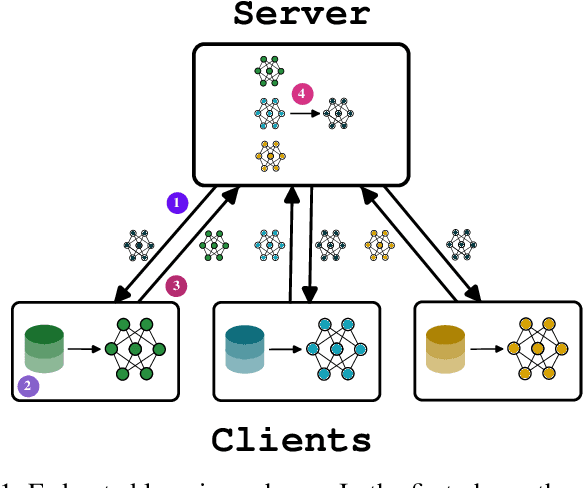

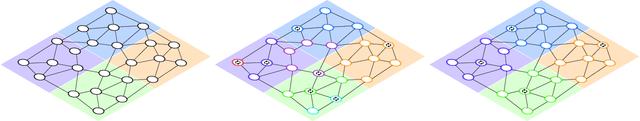

Abstract: In recent years, federated learning (FL) has become a popular solution for training machine learning models in domains with high privacy concerns. However, FL scalability and performance face significant challenges in real-world deployments where data across devices are non-independently and identically distributed (non-IID). This heterogeneity in data distribution frequently arises from the spatial distribution of devices and degrades model performance when it is not handled properly. Additionally, FL's typical reliance on centralized architectures introduces bottlenecks and single-point-of-failure risks, which are particularly problematic at scale or in dynamic environments. To close this gap, we propose Field-Based Federated Learning (FBFL), a novel approach leveraging macroprogramming and field coordination to address these limitations through: (i) distributed spatial-based leader election for personalization to mitigate non-IID data challenges; and (ii) construction of a self-organizing, hierarchical architecture using advanced macroprogramming patterns. Moreover, FBFL not only overcomes the aforementioned limitations, but also enables the development of more specialized models tailored to the specific data distribution in each subregion. This paper formalizes FBFL and evaluates it extensively using the MNIST, FashionMNIST, and Extended MNIST datasets. We demonstrate that, when operating under IID data conditions, FBFL performs comparably to the widely used FedAvg algorithm. Furthermore, in challenging non-IID scenarios, FBFL not only outperforms FedAvg but also surpasses other state-of-the-art methods, namely FedProx and Scaffold, which have been specifically designed to address non-IID data distributions. Additionally, we showcase the resilience of FBFL's self-organizing hierarchical architecture against server failures.
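For readers unfamiliar with the FedAvg baseline named above, its aggregation step is a weighted average of client parameters, with weights proportional to each client's number of training samples. The sketch below shows only that step on flat parameter lists. The function name `fed_avg` is hypothetical, and the code does not reproduce FBFL's leader election or hierarchy.

```python
def fed_avg(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for j, w in enumerate(weights):
            averaged[j] += w * size / total
    return averaged


# Two clients: the second holds three times as much data as the first.
global_model = fed_avg([[1.0, 2.0], [3.0, 4.0]], client_sizes=[1, 3])
print(global_model)
```

In a region-based scheme, one plausible reading is that the same averaging would run per region at each elected leader rather than once at a central server; that reading is an assumption, not a statement of the paper's formalization.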

Proximity-based Self-Federated Learning

Jul 17, 2024

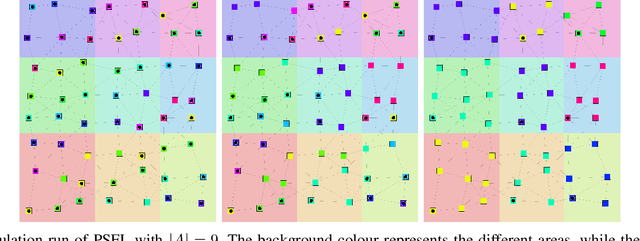

Abstract: Among recent advancements in machine learning, federated learning allows a network of distributed clients to collaboratively develop a global model without needing to share their local data. This technique aims to safeguard privacy, countering the vulnerabilities of conventional centralized learning methods. Traditional federated learning approaches often rely on a central server to coordinate model training across clients, aiming to replicate the same model uniformly across all nodes. However, these methods overlook the significance of geographical and local data variances in vast networks, potentially affecting model effectiveness and applicability. Moreover, relying on a central server might become a bottleneck in large networks, such as the ones promoted by edge computing. Our paper introduces a novel, fully-distributed federated learning strategy called proximity-based self-federated learning that enables the self-organised creation of multiple federations of clients based on their geographic proximity and data distribution without exchanging raw data. Unlike traditional algorithms, our approach encourages clients to share and adjust their models with neighbouring nodes based on geographic proximity and model accuracy. This method not only addresses the limitations posed by diverse data distributions but also enhances the model's adaptability to different regional characteristics, creating specialized models for each federation. We demonstrate the efficacy of our approach through simulations on well-known datasets, showcasing its effectiveness over the conventional centralized federated learning framework.
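The abstract describes federations forming from geographic proximity and model accuracy but gives no algorithm. The following sketch covers the proximity half only, under a strong simplification: clients within a fixed radius are neighbours, and each connected group of neighbours is treated as one federation. The names `neighbours_within` and `group_connected`, the radius, and the coordinates are all hypothetical, and the accuracy criterion is ignored.

```python
import math


def neighbours_within(positions, radius):
    """Map each client id to the ids of clients at most `radius` away."""
    ids = list(positions)
    return {
        a: [b for b in ids if b != a and math.dist(positions[a], positions[b]) <= radius]
        for a in ids
    }


def group_connected(neighbours):
    """Group clients into connected components of the neighbour graph."""
    seen, groups = set(), []
    for start in neighbours:
        if start in seen:
            continue
        group, frontier = [], [start]
        seen.add(start)
        while frontier:
            node = frontier.pop()
            group.append(node)
            for other in neighbours[node]:
                if other not in seen:
                    seen.add(other)
                    frontier.append(other)
        groups.append(sorted(group))
    return groups


positions = {"a": (0.0, 0.0), "b": (1.0, 0.0), "c": (10.0, 10.0), "d": (10.5, 10.0)}
federations = group_connected(neighbours_within(positions, radius=2.0))
print(federations)
```

This version computes the grouping from a global view for clarity. A fully distributed variant would have each client learn its federation only through exchanges with its own neighbours.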
