Davide Ciucci

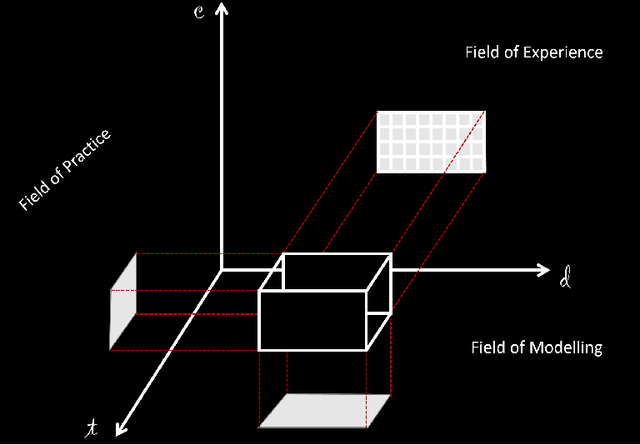

Three-way Decisions with Evaluative Linguistic Expressions

Jul 15, 2023

Abstract: We propose a linguistic interpretation of three-way decisions, where the regions of acceptance, rejection, and non-commitment are constructed by using the so-called evaluative linguistic expressions, which are expressions of natural language such as small, medium, very short, quite roughly strong, extremely good, etc. Our results highlight new connections between two different research areas: three-way decisions and the theory of evaluative linguistic expressions.
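
As a rough illustration of the three regions named in the abstract, the following minimal sketch assigns an object to acceptance, rejection, or non-commitment from a numeric evaluation in [0, 1]. The function name `three_way_decision` and the thresholds `alpha` and `beta` are assumptions made for illustration only; they stand in for, and do not reproduce, the paper's construction based on evaluative linguistic expressions.

```python
def three_way_decision(score, alpha=0.7, beta=0.3):
    """Map an evaluation in [0, 1] to one of three regions.

    alpha and beta are hypothetical thresholds (not taken from the paper):
    scores at or above alpha are accepted, scores at or below beta are
    rejected, and everything in between is left undecided.
    """
    if not 0.0 <= beta < alpha <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= beta < alpha <= 1")
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if score >= alpha:
        return "acceptance"
    if score <= beta:
        return "rejection"
    return "non-commitment"


if __name__ == "__main__":
    for s in (0.9, 0.5, 0.1):
        print(s, three_way_decision(s))
```

In a linguistic reading, the fixed numeric thresholds would presumably be replaced by expressions such as "very small" or "extremely good" evaluated in context, which is the part this sketch leaves out.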

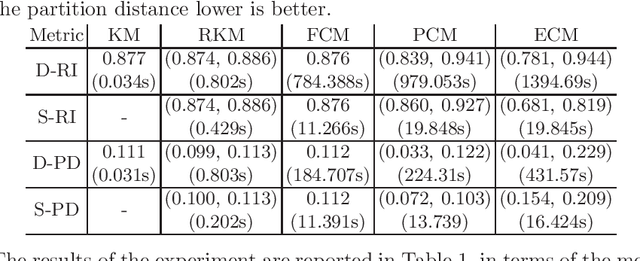

A Distributional Approach for Soft Clustering Comparison and Evaluation

Jun 20, 2022

Abstract: The development of external evaluation criteria for soft clustering (SC) has received limited attention: existing methods do not provide a general approach to extend comparison measures to SC, and are unable to account for the uncertainty represented in the results of SC algorithms. In this article, we propose a general method to address these limitations, which we call *distributional measures*, grounded in a novel interpretation of SC as distributions over hard clusterings. We provide an in-depth study of complexity- and metric-theoretic properties of the proposed approach, and we describe approximation techniques that can make the calculations tractable. Finally, we demonstrate our approach through a simple illustrative experiment.
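
The idea of reading a soft clustering as a distribution over hard clusterings can be sketched with a simple Monte Carlo approximation. The sketch below makes two assumptions that are not taken from the paper: each object's membership row is treated as a probability vector, and objects are assigned to clusters independently. It then samples hard clusterings and collects the Rand index of each sample against a reference clustering. All function names are hypothetical.

```python
import random
from itertools import combinations


def sample_hard_clustering(memberships, rng):
    """Draw one hard clustering: each object picks a cluster index
    with probability proportional to its membership row (assumption)."""
    return [rng.choices(range(len(row)), weights=row, k=1)[0]
            for row in memberships]


def rand_index(a, b):
    """Fraction of object pairs on which two hard clusterings agree
    (both put the pair together, or both put it apart)."""
    pairs = list(combinations(range(len(a)), 2))
    agree = sum((a[i] == a[j]) == (b[i] == b[j]) for i, j in pairs)
    return agree / len(pairs)


def sampled_rand_indices(memberships, reference, n_samples=1000, seed=0):
    """Approximate the distribution of Rand index values induced by
    the soft clustering, by sampling hard clusterings from it."""
    rng = random.Random(seed)
    return [rand_index(sample_hard_clustering(memberships, rng), reference)
            for _ in range(n_samples)]


if __name__ == "__main__":
    soft = [[0.9, 0.1], [0.8, 0.2], [0.5, 0.5], [0.1, 0.9]]
    reference = [0, 0, 1, 1]
    values = sampled_rand_indices(soft, reference)
    print("mean Rand index:", sum(values) / len(values))
    print("min, max:", min(values), max(values))
```

Keeping the whole list of sampled values, rather than a single number, is what lets the comparison reflect the uncertainty in the soft clustering; a summary such as the mean or a range can be derived from it afterwards.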

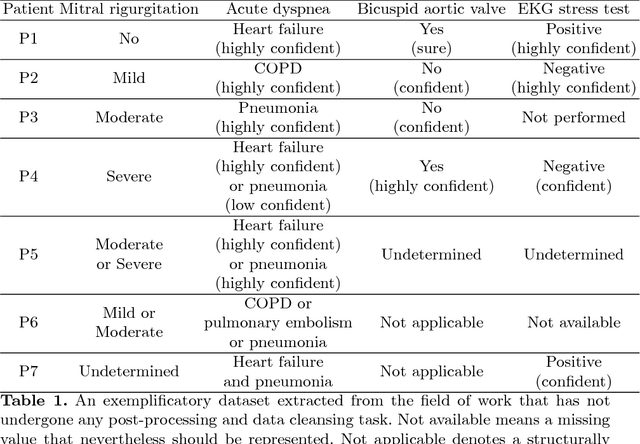

Responsible AI in Healthcare

Feb 19, 2022

Abstract: This article discusses open problems, implemented solutions, and future research in the area of responsible AI in healthcare. In particular, we illustrate two main research themes related to the work of two laboratories within the Department of Informatics, Systems, and Communication at the University of Milano-Bicocca. The problems addressed concern uncertainty in medical data and machine advice, and online health information disorder.

A giant with feet of clay: on the validity of the data that feed machine learning in medicine

May 14, 2018

Abstract: This paper considers the use of Machine Learning (ML) in medicine by focusing on the main problem that this computational approach has been aimed at solving or at least minimizing: uncertainty. To this aim, we point out how uncertainty is so ingrained in medicine that it also biases the representation of clinical phenomena, that is, the very input of ML models, thus undermining the clinical significance of their output. Recognizing this can motivate both medical doctors to take more responsibility in the development and use of these decision aids, and researchers to pursue different ways to assess the value of these systems. In so doing, both designers and users could take this intrinsic characteristic of medicine more seriously and consider alternative approaches that do not "sweep uncertainty under the rug" within an objectivist fiction that everyone can end up believing to be true.
