David Newton

ConvDTW-ACS: Audio Segmentation for Track Type Detection During Car Manufacturing

Feb 28, 2024

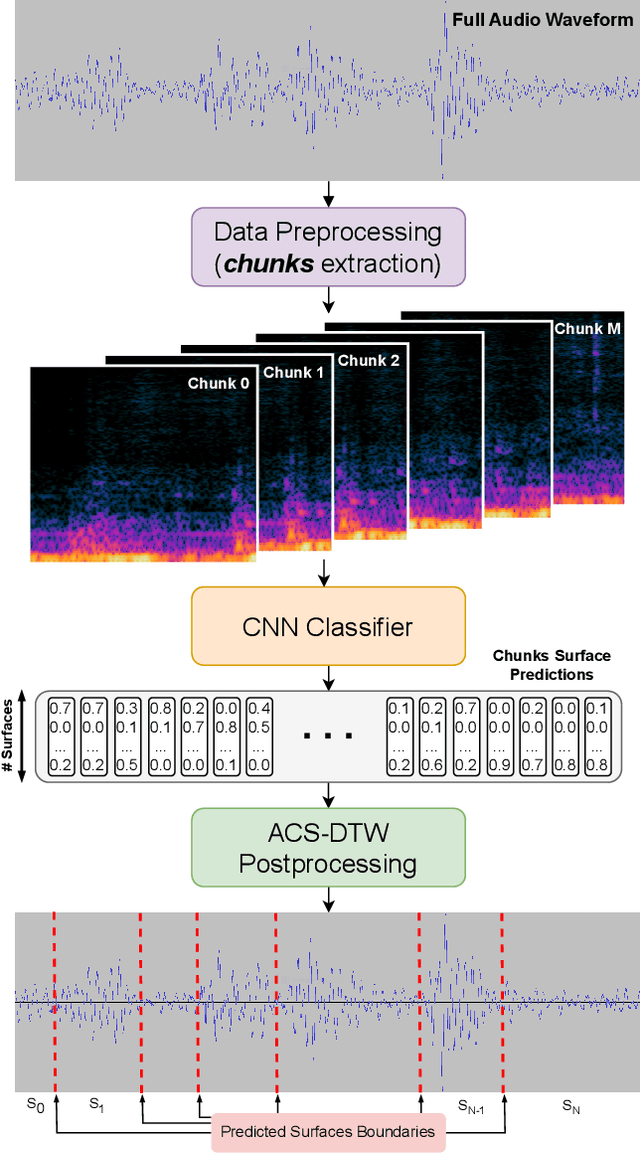

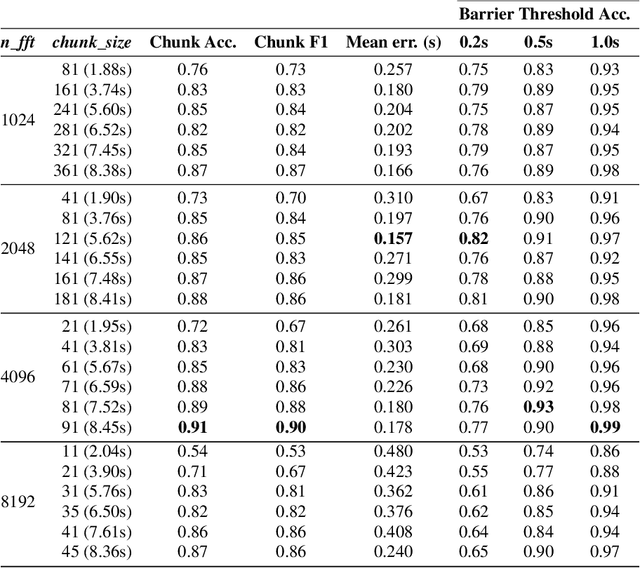

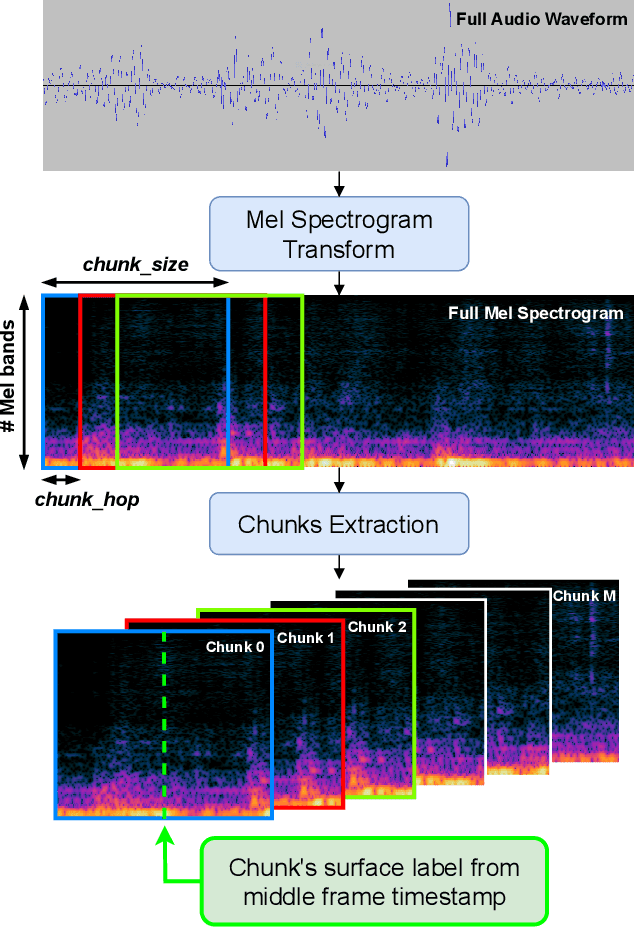

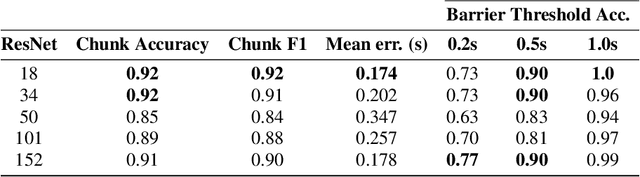

Abstract: This paper proposes a method for Acoustic Constrained Segmentation (ACS) in audio recordings of vehicles driven through a production test track, delimiting the boundaries of surface types in the track. ACS is a variant of classical acoustic segmentation where the sequence of labels is known, contiguous and invariable, which is especially useful in this work as the test track has a standard configuration of surface types. The proposed ConvDTW-ACS method utilizes a Convolutional Neural Network for classifying overlapping image chunks extracted from the full audio spectrogram. Then, our custom Dynamic Time Warping algorithm aligns the sequence of predicted probabilities to the sequence of surface types in the track, from which timestamps of the surface type boundaries can be extracted. The method was evaluated on a real-world dataset collected from the Ford Manufacturing Plant in Valencia (Spain), achieving a mean error of 166 milliseconds when delimiting, within the audio, the boundaries of the surfaces in the track. The results demonstrate the effectiveness of the proposed method in accurately segmenting different surface types, which could enable the development of more specialized AI systems to improve the quality inspection process.
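The alignment step described in the abstract can be illustrated with a small sketch. It is not the paper's custom DTW algorithm. It is a generic monotonic dynamic-programming alignment, assuming each chunk has a vector of class probabilities and that the surface order is known in advance. The function name `align_to_known_sequence`, the toy probabilities, and the 0.5 s chunk hop are all hypothetical.

```python
import math

def align_to_known_sequence(probs, label_order):
    """Assign each chunk to one segment of a fixed, ordered label sequence.

    probs[t][c] is the (assumed) classifier probability of class c for chunk t.
    Returns the index of the first chunk of every segment.
    """
    n_frames, n_segments = len(probs), len(label_order)
    if n_frames < n_segments:
        raise ValueError("need at least one chunk per segment")

    # Local cost: negative log-probability of the label each segment expects.
    local = [[-math.log(probs[t][c] + 1e-12) for c in label_order]
             for t in range(n_frames)]

    cost = [[math.inf] * n_segments for _ in range(n_frames)]
    advanced = [[False] * n_segments for _ in range(n_frames)]
    cost[0][0] = local[0][0]  # the path must start in the first segment

    for t in range(1, n_frames):
        for s in range(n_segments):
            stay = cost[t - 1][s]                               # same segment
            move = cost[t - 1][s - 1] if s > 0 else math.inf    # next segment
            if move < stay:
                cost[t][s] = move + local[t][s]
                advanced[t][s] = True
            else:
                cost[t][s] = stay + local[t][s]

    # Backtrack from the last chunk, which must lie in the last segment.
    starts = [0] * n_segments
    s = n_segments - 1
    for t in range(n_frames - 1, 0, -1):
        if advanced[t][s]:
            starts[s] = t
            s -= 1
    return starts

# Toy example: 6 chunks, 3 surface classes visited in the order 0 -> 1 -> 2.
toy_probs = [
    [0.90, 0.05, 0.05],
    [0.80, 0.10, 0.10],
    [0.10, 0.80, 0.10],
    [0.20, 0.70, 0.10],
    [0.10, 0.10, 0.80],
    [0.05, 0.05, 0.90],
]
starts = align_to_known_sequence(toy_probs, [0, 1, 2])
hop_seconds = 0.5  # hypothetical chunk hop, not taken from the paper
boundaries_seconds = [i * hop_seconds for i in starts]
print(starts, boundaries_seconds)  # [0, 2, 4] [0.0, 1.0, 2.0]
```

Because the path may only stay in the current segment or advance to the next one, an isolated misclassified chunk cannot create a spurious extra segment, which is the practical benefit of knowing the label sequence beforehand.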

Retrospective Approximation for Smooth Stochastic Optimization

Mar 07, 2021

Abstract: We consider stochastic optimization problems where a smooth (and potentially nonconvex) objective is to be minimized using a stochastic first-order oracle. These types of problems arise in many settings, from simulation optimization to deep learning. We present Retrospective Approximation (RA) as a universal sequential sample-average approximation (SAA) paradigm where, during each iteration $k$, a sample-path approximation problem is implicitly generated using an adapted sample size $M_k$, and solved (with prior solutions as "warm start") to an adapted error tolerance $\epsilon_k$, using a "deterministic method" such as the line search quasi-Newton method. The principal advantage of RA is that it decouples optimization from stochastic approximation, allowing the direct adoption of existing deterministic algorithms without modification, thus mitigating the need to redesign algorithms for the stochastic context. A second advantage is the obvious manner in which RA lends itself to parallelization. We identify conditions on $\{M_k, k \geq 1\}$ and $\{\epsilon_k, k\geq 1\}$ that ensure almost sure convergence and convergence in $L_1$-norm, along with optimal iteration and work complexity rates. We illustrate the performance of RA with line-search quasi-Newton on an ill-conditioned least squares problem, as well as an image classification problem using a deep convolutional neural net.
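The outer loop described in the abstract can be sketched roughly as follows. This is only an illustration under stated assumptions: the inner deterministic solver is plain gradient descent with Armijo backtracking rather than the line-search quasi-Newton method the abstract mentions, the geometric schedule for $M_k$ and the $\epsilon_k \propto 1/\sqrt{M_k}$ rule are illustrative choices rather than the paper's conditions, and the toy regression problem and all function names are hypothetical.

```python
import math
import random

def saa_loss_and_grad(w, sample):
    """Sample-average loss and gradient for fitting y ~ w[0]*u + w[1]."""
    n = len(sample)
    loss = g0 = g1 = 0.0
    for u, y in sample:
        r = w[0] * u + w[1] - y
        loss += 0.5 * r * r
        g0 += r * u
        g1 += r
    return loss / n, (g0 / n, g1 / n)

def solve_deterministic(w, sample, tol, max_iter=10_000):
    """Gradient descent with Armijo backtracking on a FIXED sample.

    Stops once the gradient norm of the sample-path problem is <= tol.
    """
    for _ in range(max_iter):
        loss, g = saa_loss_and_grad(w, sample)
        gnorm2 = g[0] ** 2 + g[1] ** 2
        if math.sqrt(gnorm2) <= tol:
            break
        step = 1.0
        while True:
            cand = (w[0] - step * g[0], w[1] - step * g[1])
            if saa_loss_and_grad(cand, sample)[0] <= loss - 1e-4 * step * gnorm2:
                break
            step *= 0.5
        w = cand
    return w

def retrospective_approximation(draw, w0, n_outer=8, m0=8, growth=2.0, eps0=0.5):
    """Outer RA loop: bigger samples, tighter tolerances, warm starts."""
    w = w0
    for k in range(n_outer):
        m_k = int(m0 * growth ** k)          # assumed sample-size schedule
        eps_k = eps0 / math.sqrt(m_k)        # assumed tolerance schedule
        sample = [draw() for _ in range(m_k)]
        w = solve_deterministic(w, sample, eps_k)  # warm start from previous w
    return w

rng = random.Random(0)

def draw():
    """One noisy observation of the hypothetical model y = 2u - 1 + noise."""
    u = rng.uniform(-1.0, 1.0)
    return u, 2.0 * u - 1.0 + rng.gauss(0.0, 0.1)

w_hat = retrospective_approximation(draw, (0.0, 0.0))
print(w_hat)  # expected to be close to (2.0, -1.0)
```

Note that `solve_deterministic` never sees randomness: within each outer iteration the sample is fixed, so any off-the-shelf deterministic solver could be substituted, which is the decoupling the abstract highlights.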
