David Moloney

Tree Annotations in LiDAR Data Using Point Densities and Convolutional Neural Networks

Jun 09, 2020

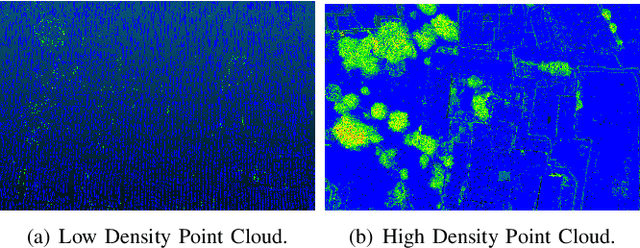

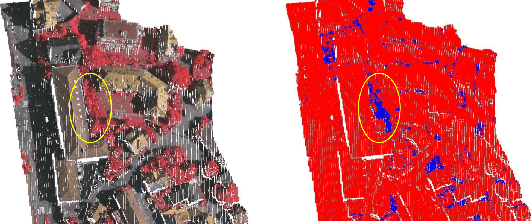

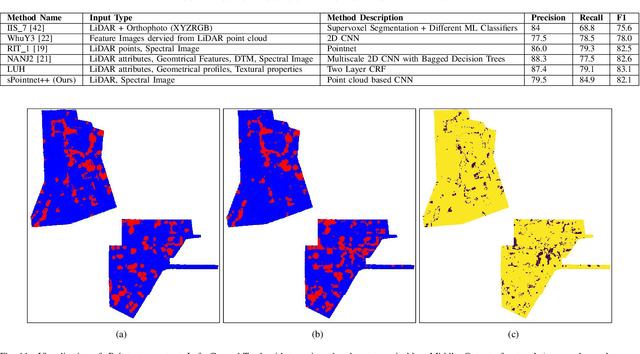

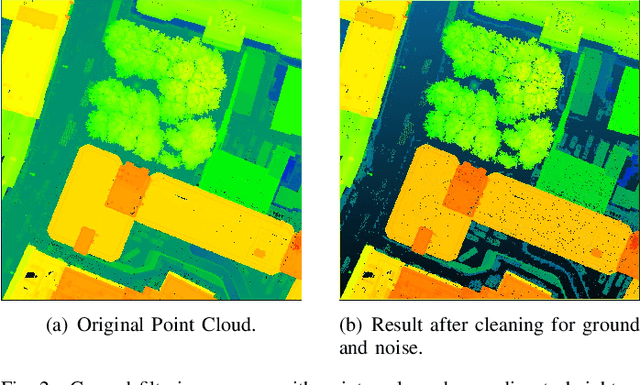

Abstract: LiDAR provides highly accurate 3D point clouds. However, the data must be manually labelled before it can yield useful information. Manual annotation of such data is time-consuming, tedious and error-prone, so in this paper we present three automatic methods for annotating trees in LiDAR data. The first method requires high-density point clouds and uses certain LiDAR data attributes to identify trees, achieving almost 90% accuracy. The second method uses a voxel-based 3D Convolutional Neural Network on low-density LiDAR datasets; it identifies most large trees accurately but struggles with smaller ones because of the voxelisation process. The third method is a scaled version of PointNet++ that works directly on outdoor point clouds and achieves an F-score of 82.1% on the ISPRS benchmark dataset, comparable to state-of-the-art methods but with increased efficiency.
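The abstract attributes the second method's difficulty with smaller trees to voxelisation. The sketch below is only an illustration of that general effect, not the paper's pipeline: the function name `voxelise`, the toy points and the voxel sizes are all hypothetical. It bins points into a regular grid and shows how a coarse grid can collapse a small object into a single occupied voxel, leaving a 3D CNN with very little shape information.

```python
from collections import Counter
from math import floor


def voxelise(points, voxel_size):
    """Count points per voxel on a regular grid (illustrative helper, not from the paper)."""
    counts = Counter()
    for x, y, z in points:
        # Integer grid index of the voxel that contains the point.
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        counts[key] += 1
    return counts


# Hypothetical toy data: four returns from a small tree under 2 m tall.
small_tree = [(0.2, 0.2, 0.5), (0.4, 0.3, 1.0), (0.3, 0.6, 1.5), (0.6, 0.4, 1.8)]

coarse = voxelise(small_tree, voxel_size=2.0)  # whole tree falls into one voxel
fine = voxelise(small_tree, voxel_size=0.5)    # each point lands in its own voxel

print(len(coarse), len(fine))
```

A finer grid preserves more structure but, in three dimensions, the number of voxels grows cubically as the voxel size shrinks, which is one reason methods that operate directly on points can be attractive.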

It All Matters: Reporting Accuracy, Inference Time and Power Consumption for Face Emotion Recognition on Embedded Systems

Jun 29, 2018

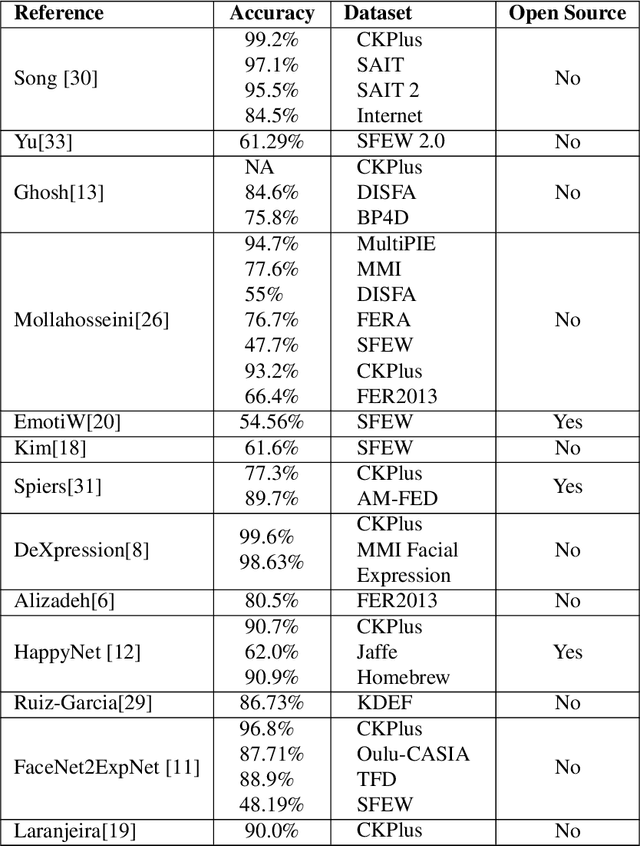

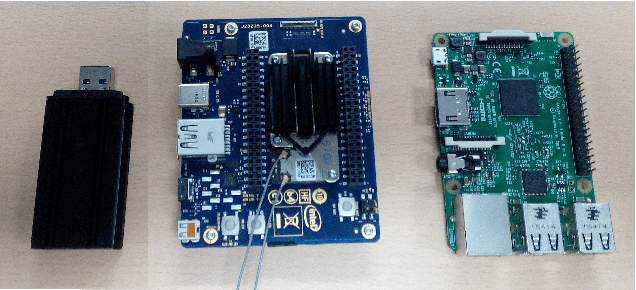

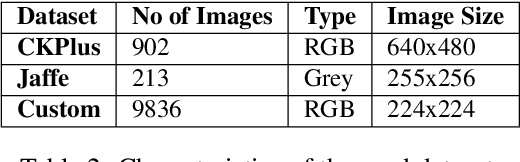

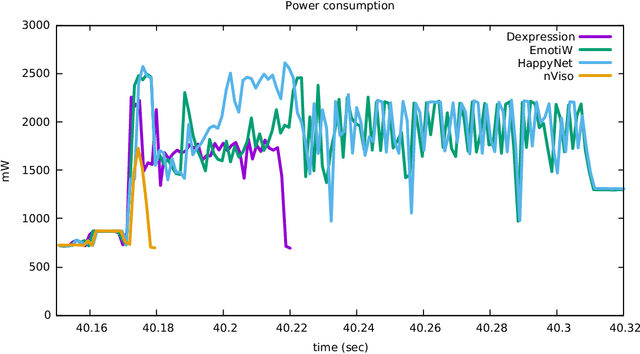

Abstract: While several approaches to the face emotion recognition task have been proposed in the literature, none of them reports the power consumption or inference time required to run the system in an embedded environment. Without adequate knowledge of these factors, it is not clear whether accurate face emotion recognition is currently achievable in an embedded environment and, if not, how far we are from making it feasible and what the biggest bottlenecks are. The main goal of this paper is to answer these questions and to convey the message that power consumption and inference time should be reported alongside detection accuracy, since the real usability of the proposed systems and their adoption in human-computer interaction strongly depend on them. In this paper, we identify the state-of-the-art face emotion recognition methods that are potentially suitable for an embedded environment and the most frequently used datasets for this task. Our study shows that most of the reported experiments use datasets with posed expressions or a particular experimental setup with special conditions for image collection. Since our goal is to evaluate the performance of the identified promising methods in a realistic scenario, we collect a new dataset with non-exaggerated emotions and use it, in addition to the publicly available datasets, to evaluate detection accuracy, power consumption and inference time on three frequently used embedded devices with different computational capabilities. Our results show that gray images are still more suitable for embedded environments than color ones and that, for most of the analyzed systems, inference time, energy consumption or both are the limiting factor for their adoption in real-life embedded applications.
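To make the reporting recommendation concrete, here is a minimal sketch of how inference time and energy per inference might be measured alongside accuracy. It is not the paper's measurement protocol: `measure_latency`, `energy_per_inference` and `dummy_infer` are hypothetical names, and the mean power draw is assumed to come from an external power meter on the device.

```python
import statistics
import time


def measure_latency(infer, sample, warmup=3, runs=20):
    """Return (mean, standard deviation) of wall-clock latency in seconds."""
    for _ in range(warmup):
        infer(sample)  # warm-up calls are not timed
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings), statistics.stdev(timings)


def energy_per_inference(mean_power_watts, mean_latency_s):
    """Energy in joules, assuming roughly constant power draw during inference."""
    return mean_power_watts * mean_latency_s


def dummy_infer(image):
    """Stand-in for a real model call; replace with the system under test."""
    return sum(image) % 7


mean_s, std_s = measure_latency(dummy_infer, list(range(1000)), runs=5)
# 2.0 W is an assumed reading from an external power meter, not a measured value.
print(mean_s, std_s, energy_per_inference(2.0, mean_s))
```

Reporting the spread as well as the mean helps readers judge whether a system meets a latency budget consistently on a given device.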
