David Benhaim

Orient Anything

Oct 02, 2024

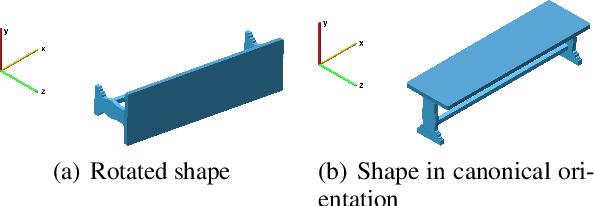

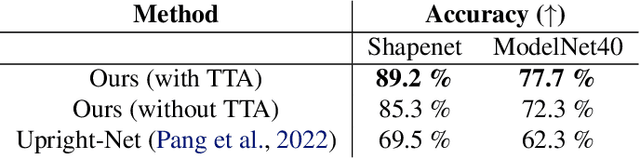

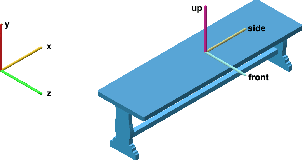

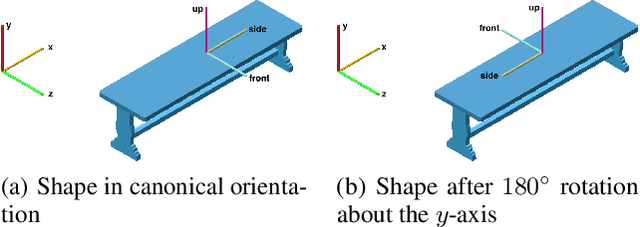

Abstract: Orientation estimation is a fundamental task in 3D shape analysis which consists of estimating a shape's orientation axes: its side-, up-, and front-axes. Given these axes, one can rotate a shape into canonical orientation, where its orientation axes are aligned with the coordinate axes. Developing an orientation algorithm that reliably estimates complete orientations of general shapes remains an open problem. We introduce a two-stage orientation pipeline that achieves state-of-the-art performance on up-axis estimation and further demonstrate its efficacy on full-orientation estimation, where one seeks all three orientation axes. Unlike previous work, we train and evaluate our method on all of ShapeNet rather than a subset of classes. We motivate our engineering contributions by theory describing fundamental obstacles to orientation estimation for rotationally-symmetric shapes, and show how our method avoids these obstacles.
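To illustrate the canonicalization step the abstract mentions, here is a minimal sketch of how estimated axes could be turned into a rotation that aligns a shape with the coordinate axes. It is not the paper's pipeline: the function names are hypothetical, and the convention that side, up, and front map to x, y, and z (right-handed) is an assumption made only for this example.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def canonical_rotation(up, front):
    """Return a 3x3 rotation (as rows) mapping side->x, up->y, front->z.

    Assumed convention, not taken from the paper. `front` need not be
    exactly orthogonal to `up`; it is orthogonalized with Gram-Schmidt.
    """
    u = normalize(up)
    d = dot(front, u)
    f = normalize(tuple(fi - d * ui for fi, ui in zip(front, u)))
    s = cross(u, f)  # side axis completes a right-handed frame
    return (s, u, f)

def apply_rotation(R, points):
    """Rotate each 3D point by the matrix R given as three rows."""
    return [tuple(dot(row, p) for row in R) for p in points]

# Usage: a shape whose estimated up-axis is +z and front-axis is roughly +x.
R = canonical_rotation(up=(0.0, 0.0, 2.0), front=(3.0, 0.0, 1.0))
rotated = apply_rotation(R, [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)])
```

Because the rows of `R` are the orthonormal side-, up-, and front-axes, multiplying a point by `R` expresses it in that frame, which is what sends the estimated up-axis to the y-axis under the assumed convention.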

Segment Any Mesh: Zero-shot Mesh Part Segmentation via Lifting Segment Anything 2 to 3D

Aug 24, 2024

Abstract: We propose Segment Any Mesh (SAMesh), a novel zero-shot method for mesh part segmentation that overcomes the limitations of shape analysis-based, learning-based, and current zero-shot approaches. SAMesh operates in two phases: multimodal rendering and 2D-to-3D lifting. In the first phase, multiview renders of the mesh are individually processed through Segment Anything 2 (SAM2) to generate 2D masks. These masks are then lifted into a mesh part segmentation by associating masks that refer to the same mesh part across the multiview renders. We find that applying SAM2 to multimodal feature renders of normals and shape diameter scalars achieves better results than using only untextured renders of meshes. By building our method on top of SAM2, we seamlessly inherit any future improvements made to 2D segmentation. We compare our method with a robust, well-evaluated shape analysis method, Shape Diameter Function (ShapeDiam), and show that our method matches or exceeds its performance. Since current benchmarks contain limited object diversity, we also curate and release a dataset of generated meshes and use it to demonstrate our method's improved generalization over ShapeDiam via human evaluation. We release the code and dataset at https://github.com/gtangg12/samesh
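The lifting phase described above, associating 2D masks that refer to the same mesh part across views, can be sketched roughly as follows. This is an illustrative simplification, not the SAMesh implementation: it assumes each 2D mask has already been converted to the set of mesh face ids visible under it, and the function name `lift_masks`, the overlap criterion, and the `min_overlap` threshold are all hypothetical choices for this example.

```python
from collections import Counter

def lift_masks(view_masks, min_overlap=0.5):
    """Merge per-view masks into per-face part labels.

    view_masks: list of views, each a list of sets of face ids
                (assumed input format, one set per 2D mask).
    Returns a dict mapping face id -> part label.
    """
    masks = [faces for view in view_masks for faces in view if faces]
    parent = list(range(len(masks)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Link two masks when they share enough faces relative to the smaller one.
    for i in range(len(masks)):
        for j in range(i + 1, len(masks)):
            shared = len(masks[i] & masks[j])
            if shared / min(len(masks[i]), len(masks[j])) >= min_overlap:
                parent[find(i)] = find(j)

    # Each face takes the merged-group label it was assigned most often.
    votes = {}
    for i, faces in enumerate(masks):
        root = find(i)
        for face in faces:
            votes.setdefault(face, Counter())[root] += 1
    return {face: c.most_common(1)[0][0] for face, c in votes.items()}

# Usage: two views, each with two masks over face ids.
labels = lift_masks([[{0, 1, 2}, {5, 6}], [{1, 2, 3}, {6, 7}]])
```

The pairwise comparison is quadratic in the number of masks, which is acceptable for a sketch; a practical system would likely need a more careful matching criterion and a way to handle faces that no view covers.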
