David Abensur

Billion-Scale Graph Foundation Models

Feb 04, 2026

Abstract: Graph-structured data underpins many critical applications. While foundation models have transformed language and vision via large-scale pretraining and lightweight adaptation, extending this paradigm to general, real-world graphs is challenging. In this work, we present Graph Billion-Foundation-Fusion (GraphBFF): the first end-to-end recipe for building billion-parameter Graph Foundation Models (GFMs) for arbitrary heterogeneous, billion-scale graphs. Central to the recipe is the GraphBFF Transformer, a flexible and scalable architecture designed for practical billion-scale GFMs. Using the GraphBFF, we present the first neural scaling laws for general graphs and show that loss decreases predictably as either model capacity or training data scales, depending on which factor is the bottleneck. The GraphBFF framework provides concrete methodologies for data batching, pretraining, and fine-tuning for building GFMs at scale. We demonstrate the effectiveness of the framework with an evaluation of a 1.4 billion-parameter GraphBFF Transformer pretrained on one billion samples. Across ten diverse, real-world downstream tasks on graphs unseen during training, spanning node- and link-level classification and regression, GraphBFF achieves remarkable zero-shot and probing performance, including in few-shot settings, with large margins of up to 31 PRAUC points. Finally, we discuss key challenges and open opportunities for making GFMs a practical and principled foundation for graph learning at industrial scale.

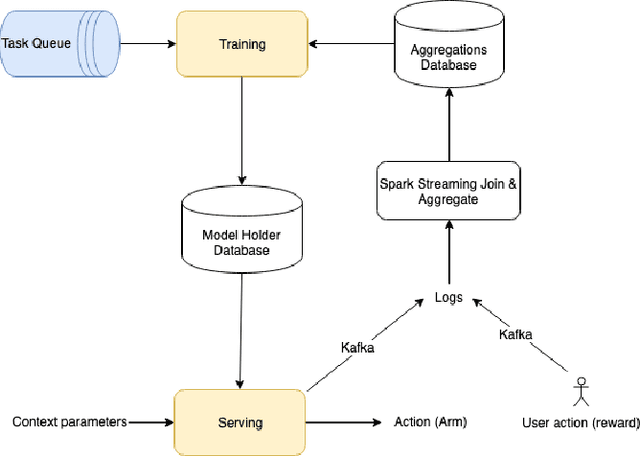

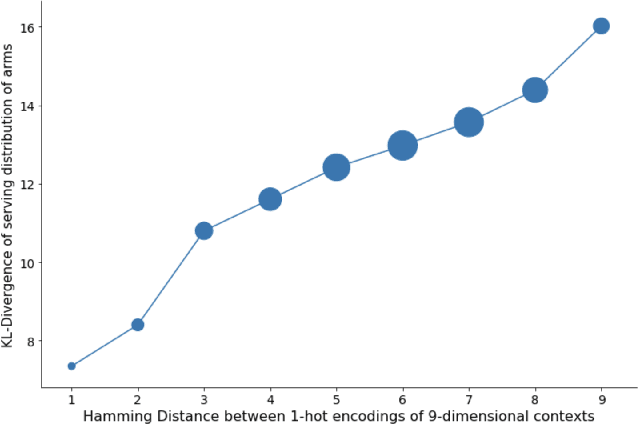

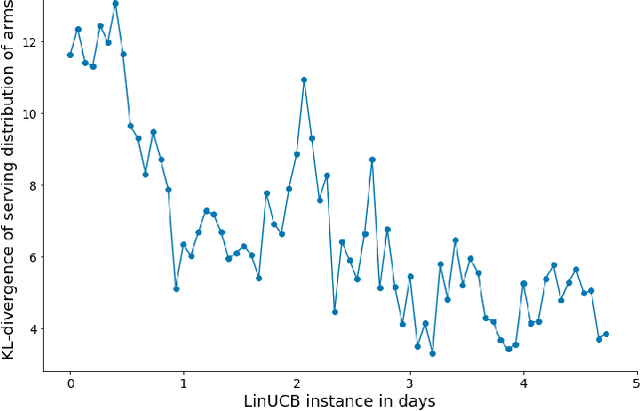

Productization Challenges of Contextual Multi-Armed Bandits

Jul 10, 2019

Abstract: Contextual Multi-Armed Bandits is a well-known and accepted online optimization algorithm that is used in many Web experiences to tailor content or presentation to users' traffic. Much has been published on theoretical guarantees (e.g. regret bounds) of proposed algorithmic variants, but relatively little attention has been devoted to the challenges encountered while productizing contextual bandit schemes in large-scale settings. This work enumerates several productization challenges we encountered while leveraging contextual bandits for two concrete use cases at scale. We discuss how to (1) determine the context (engineer the features) that models the bandit arms; (2) sanity check the health of the optimization process; (3) evaluate the process in an offline manner; (4) add potential actions (arms) on the fly to a running process; (5) subject the decision process to constraints; and (6) iteratively improve the online learning algorithm. For each such challenge, we explain the issue, provide our approach, and relate to prior art where applicable.
