Dattaraj Rao

Bayesian inference to improve quality of Retrieval Augmented Generation

Aug 12, 2024

Abstract:Retrieval Augmented Generation (RAG) is the most popular pattern for modern Large Language Model (LLM) applications. RAG involves taking a user query and finding relevant paragraphs of context in a large corpus, typically captured in a vector database. Once the first level of search happens over the vector database, the top n chunks of relevant text are included directly in the context and sent as a prompt to the LLM. The problem with this approach is that the quality of the text chunks depends on the effectiveness of the search. There is no strong post-processing after the search to determine whether a chunk holds enough information to include in the prompt. Also, chunks often contain conflicting information on the same subject, and the model has no prior experience to tell it which chunk to prioritize when making a decision. Often, this leads the model to state that there are conflicting statements and that it cannot produce an answer. In this research we propose a Bayesian approach to verify the quality of text chunks from the search results. Bayes' theorem relates the conditional probability of a hypothesis given evidence to the likelihood of the evidence and the prior probability of the hypothesis. We propose that finding the likelihood of a text chunk giving a quality answer, and combining it with a prior probability of the chunk's quality, can help improve the overall quality of responses from RAG systems. We can use the LLM itself to get a likelihood of relevance for a context paragraph. For the prior probability of a text chunk, we use the page number in the parsed documents. The assumption is that paragraphs on earlier pages have a higher probability of being findings and of being relevant to generalizing an answer.
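
The re-ranking idea in this abstract can be sketched as posterior ∝ likelihood × prior. The snippet below is a minimal illustration, not the paper's implementation: the `Chunk` type, the geometric `page_prior`, and its `decay` parameter are assumptions made for this example, and the likelihood is assumed to be a relevance score in [0, 1] already obtained from an LLM.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Chunk:
    text: str
    page: int          # 1-based page number of the source document
    likelihood: float  # relevance score in [0, 1], assumed to come from an LLM


def page_prior(page: int, decay: float = 0.9) -> float:
    """Hypothetical prior: earlier pages get more weight (geometric decay)."""
    return decay ** (page - 1)


def rerank(chunks: List[Chunk], decay: float = 0.9) -> List[Tuple[Chunk, float]]:
    """Return (chunk, normalized posterior weight) pairs, best first."""
    weights = [c.likelihood * page_prior(c.page, decay) for c in chunks]
    total = sum(weights)
    # Normalize so the weights sum to 1; fall back to 0.0 if nothing is relevant.
    posteriors = [w / total if total > 0 else 0.0 for w in weights]
    return sorted(zip(chunks, posteriors), key=lambda pair: pair[1], reverse=True)
```

With this choice of prior, a chunk from page 1 with likelihood 0.6 outranks a chunk from page 10 with likelihood 0.7, because the page-10 prior shrinks its weight. Any other monotonically decreasing prior would serve the same illustrative purpose.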

Zero-shot learning approach to adaptive Cybersecurity using Explainable AI

Jun 21, 2021

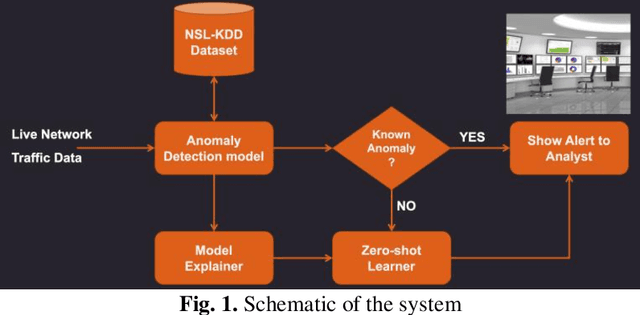

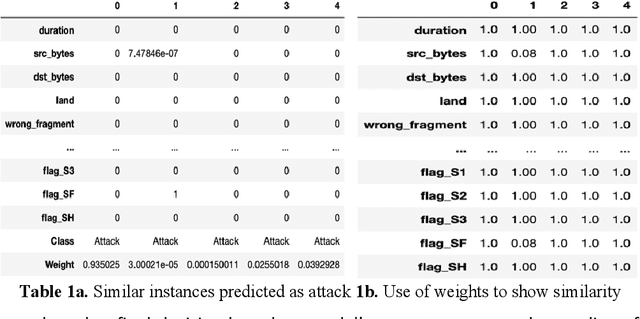

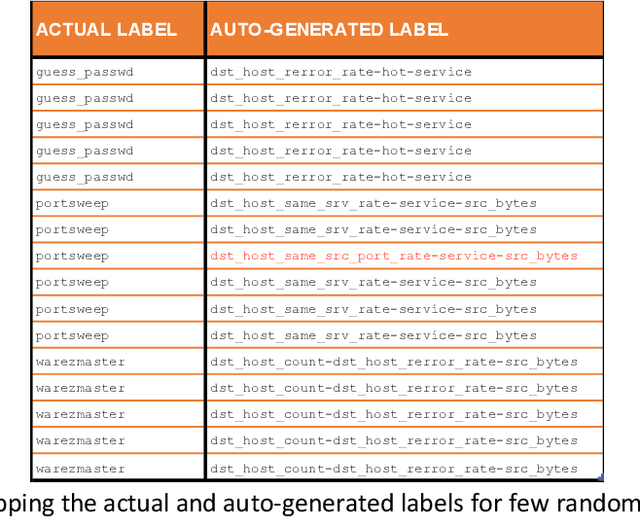

Abstract:Cybersecurity is a domain where there is constant change in patterns of attack, and we need ways to make our Cybersecurity systems more adaptive to handle new attacks and categorize them for appropriate action. We present a novel approach to handle the alarm flooding problem faced by Cybersecurity systems like security information and event management (SIEM) and intrusion detection systems (IDS). We apply a zero-shot learning method to machine learning (ML) by leveraging explanations for predictions of anomalies generated by an ML model. This approach has huge potential to auto-detect alarm labels generated in SIEM and associate them with specific attack types. In this approach, without any prior knowledge of the attack, we try to identify it, decipher the features that contribute to its classification, and bucketize the attack into a specific category using explainable AI. Explanations give us measurable factors as to what features influence the prediction of a cyber-attack and to what degree. These explanations, generated based on game theory, are used to allocate credit to specific features based on their influence on a specific prediction. Using this allocation of credit, we propose a novel zero-shot approach to categorize novel attacks into specific new classes based on feature influence. The system we demonstrate learns to separate attack traffic from normal flow and auto-generates a label for each attack based on the features that contribute to it. These auto-generated labels can be presented to a SIEM analyst and are intuitive enough to figure out the nature of the attack. We apply this approach to a network flow dataset and demonstrate results for specific attack types like ip sweep, denial of service, remote to local, etc. The paper was presented at the first Conference on Deployable AI at IIT-Madras in June 2021.
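
As a rough illustration of labelling an attack from feature influence, the sketch below assumes per-feature attribution scores for a single prediction are already available (for example, from a game-theoretic explainer). The function names, the label format, and the feature names in the usage note are hypothetical and are not taken from the paper.

```python
from typing import Dict, List


def top_features(attributions: Dict[str, float], k: int = 3) -> List[str]:
    """Names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return ranked[:k]


def auto_label(attributions: Dict[str, float], k: int = 3) -> str:
    """Build a readable label from the most influential features."""
    return "attack driven by " + " + ".join(top_features(attributions, k))
```

For instance, `auto_label({"dst_host_count": 0.42, "duration": -0.05, "serror_rate": -0.61}, k=2)` produces a label naming `serror_rate` and `dst_host_count`, which an analyst could read as a hint about the nature of the attack.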

Explaining Network Intrusion Detection System Using Explainable AI Framework

Mar 12, 2021

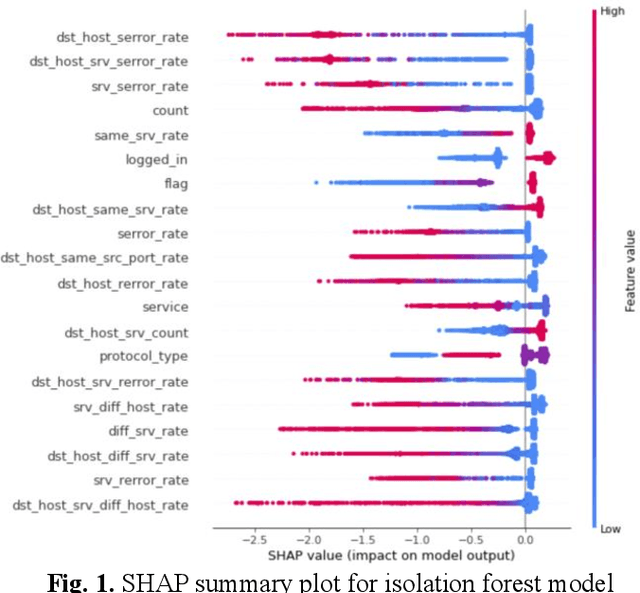

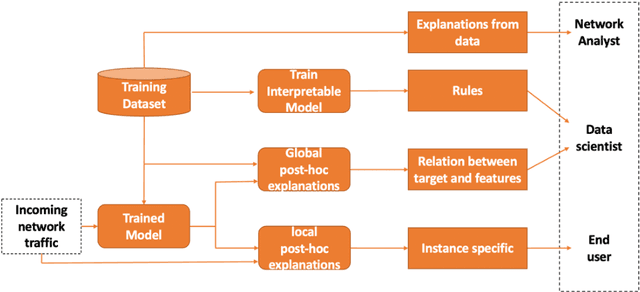

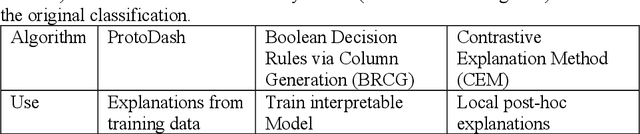

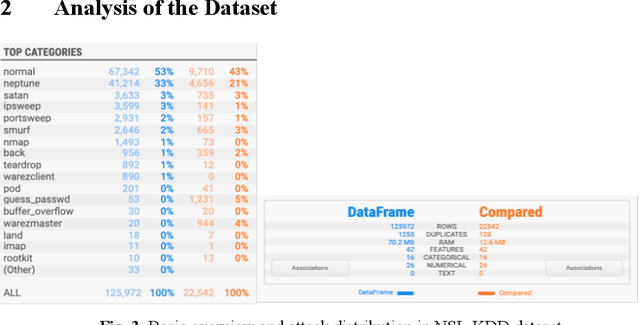

Abstract:Cybersecurity is a domain where the data distribution is constantly changing, with attackers exploring newer patterns to attack cyber infrastructure. An intrusion detection system is one of the important layers of cyber safety in today's world. Machine learning based network intrusion detection systems have started showing effective results in recent years. With deep learning models, the detection rates of network intrusion detection systems have improved. The more accurate the model, the greater its complexity and hence the lower its interpretability. Deep neural networks are complex and hard to interpret, which makes them difficult to use in production because the reasons behind their decisions are unknown. In this paper, we use a deep neural network for network intrusion detection and propose an explainable AI framework to add transparency at every stage of the machine learning pipeline. This is done by leveraging Explainable AI algorithms, which focus on making ML models less of a black box by providing explanations as to why a prediction is made. Explanations give us measurable factors as to what features influence the prediction of a cyberattack and to what degree. These explanations are generated from SHAP, LIME, Contrastive Explanations Method, ProtoDash and Boolean Decision Rules via Column Generation. We apply these approaches to the NSL-KDD dataset for intrusion detection systems and demonstrate results.

Contextual Bandits for adapting to changing User preferences over time

Sep 23, 2020

Abstract:Contextual bandits provide an effective way to model the dynamic data problem in ML by leveraging online (incremental) learning to continuously adjust predictions based on a changing environment. We explore details on contextual bandits, an extension to the traditional reinforcement learning (RL) problem, and build a novel algorithm to solve this problem using an array of action-based learners. We apply this approach to model an article recommendation system using an array of stochastic gradient descent (SGD) learners to make predictions on rewards based on actions taken. We then extend the approach to the publicly available MovieLens dataset and explore the findings. First, we make available a simplified simulated dataset showing varying user preferences over time and how this can be evaluated with static and dynamic learning algorithms. This dataset, made available as part of this research, is intentionally simulated with a limited number of features and can be used to evaluate different problem-solving strategies. We build a classifier using a static dataset and evaluate its performance on this dataset. We show the limitations of a static learner due to fixed context at a point in time and how changing that context brings down the accuracy. Next, we develop a novel algorithm for solving the contextual bandit problem. Similar to linear bandits, this algorithm maps the reward as a function of the context vector but uses an array of learners to capture variation between actions/arms. We develop a bandit algorithm using an array of stochastic gradient descent (SGD) learners, with a separate learner per arm. Finally, we apply this contextual bandit algorithm to predicting movie ratings over time by different users from the standard MovieLens dataset and demonstrate the results.
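
The "one SGD learner per arm" idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's algorithm: the class names `SGDLearner` and `ArrayBandit` are hypothetical, each learner is a plain linear model trained on squared error, and epsilon-greedy exploration is an assumption added here to make the example complete.

```python
import random
from typing import List, Optional


class SGDLearner:
    """Linear reward model for one arm, trained by plain SGD on squared error."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features
        self.lr = lr

    def predict(self, x: List[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x: List[float], reward: float) -> None:
        # One incremental gradient step toward the observed reward.
        error = reward - self.predict(x)
        self.w = [wi + self.lr * error * xi for wi, xi in zip(self.w, x)]


class ArrayBandit:
    """Contextual bandit that keeps a separate learner for every arm."""

    def __init__(self, n_arms: int, n_features: int,
                 epsilon: float = 0.1, seed: Optional[int] = None):
        self.learners = [SGDLearner(n_features) for _ in range(n_arms)]
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def select(self, x: List[float], explore: bool = True) -> int:
        # Assumed exploration rule: pick a random arm with probability epsilon.
        if explore and self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.learners))
        predictions = [learner.predict(x) for learner in self.learners]
        return predictions.index(max(predictions))

    def update(self, arm: int, x: List[float], reward: float) -> None:
        # Only the learner of the arm that was played sees the reward.
        self.learners[arm].update(x, reward)
```

A typical loop would call `select` on each incoming context, observe the reward for the chosen arm, and pass it to `update`, so the per-arm models keep adapting as preferences drift.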

NER Models Using Pre-training and Transfer Learning for Healthcare

Oct 23, 2019

Abstract:In this paper, we present our approach to extract structured information from unstructured Electronic Health Records (EHR) [2] to study adverse drug reactions on patients, due to chemicals in their products. Our solution uses a combination of Natural Language Processing (NLP) techniques and a web-based annotation tool to optimize the performance of a custom Named Entity Recognition (NER) [1] model trained on a limited amount of EHR training data. We showcase a combination of tools and techniques leveraging the recent advancements in NLP aimed at targeting domain shifts by applying transfer learning and language model pre-training techniques [3]. We present a comparison of our technique to the base models available and show the effective increase in performance of the NER model and the reduction in time to annotate data. A key observation from the results is that the model trained with our approach on just 50% of the available training data achieves an F1 score of 0.734, outperforming the blank spaCy model trained on 100% of the available training data, which achieves an F1 score of 0.704. We also demonstrate an annotation tool to minimize domain expert time and the manual effort required to generate such a training dataset. Further, we plan to release the annotated dataset as well as the pre-trained model to the community to further research in medical health records.

Leveraging human Domain Knowledge to model an empirical Reward function for a Reinforcement Learning problem

Sep 16, 2019

Abstract:Traditional Reinforcement Learning (RL) problems depend on an exhaustive simulation environment that models the real-world physics of the problem and trains the RL agent by observing this environment. In this paper, we present a novel approach to creating an environment by modeling the reward function based on empirical rules extracted from human domain knowledge of the system under study. Using this empirical reward function, we build an environment and train the agent. We first create an environment that emulates the effect of setting cabin temperature through a thermostat. This is typically done in RL problems by creating an exhaustive model of the system with a detailed thermodynamic study. Instead, we propose an empirical approach to model the reward function based on human domain knowledge. We document some rules of thumb that we usually exercise as humans while setting the thermostat temperature and model these into our reward function. This modeling of empirical human domain rules into a reward function for RL is the unique aspect of this paper. This is a continuous action space problem, and we solve for maximizing the reward function using the deep deterministic policy gradient (DDPG) method. We create a policy network that predicts the optimal temperature setpoint given external temperature and humidity.
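
To make the idea of a rule-of-thumb reward concrete, here is a minimal sketch. The abstract does not list the actual rules, so every threshold and adjustment below is an invented placeholder (including the 22 °C baseline and the function names `comfort_target` and `reward`); only the overall shape, a reward computed from human heuristics instead of a thermodynamic model, follows the abstract.

```python
def comfort_target(outside_temp_c: float, humidity_pct: float) -> float:
    """Hypothetical rule of thumb for a comfortable setpoint in Celsius."""
    target = 22.0
    if outside_temp_c > 30.0:    # hot day: tolerate a slightly warmer cabin
        target += 1.0
    elif outside_temp_c < 5.0:   # cold day: tolerate a slightly cooler cabin
        target -= 1.0
    if humidity_pct > 60.0:      # humid air feels warmer, so aim a bit lower
        target -= 1.0
    return target


def reward(setpoint_c: float, outside_temp_c: float, humidity_pct: float) -> float:
    """Higher is better; the maximum of 0.0 is reached at the comfort target."""
    return -abs(setpoint_c - comfort_target(outside_temp_c, humidity_pct))
```

An agent with a continuous action (the setpoint) could be trained against a reward of this form; the policy would then map external temperature and humidity to the setpoint that the encoded rules favour.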

Face recognition for monitoring operator shift in railways

May 21, 2018

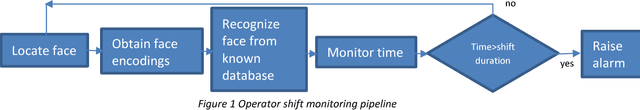

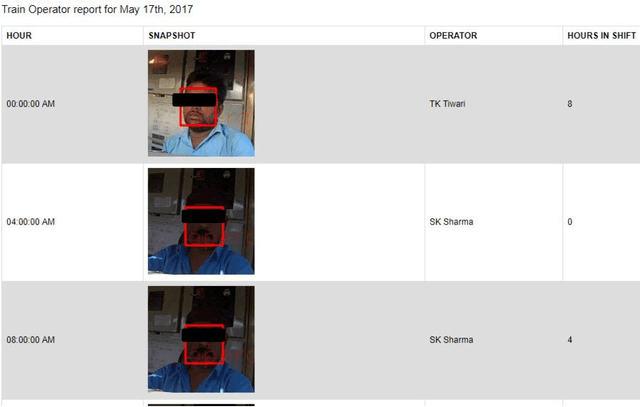

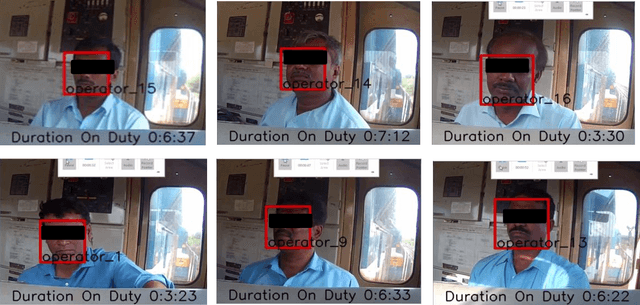

Abstract:Being a train pilot is a very tedious and stressful job. Pilots must be vigilant at all times, and it is easy for them to lose track of their shift time. In countries like the USA, pilots are mandated by law to adhere to 8-hour shifts. If they exceed 8 hours, the railroads may be penalized for over-tiring their drivers. A problem arises when the 8-hour shift ends in the middle of a journey. In such a case, new drivers must be moved to the location where the locomotive is operating for the shift change. Hence, accurately monitoring drivers during their shift and making sure the shifts are scheduled correctly is very important for railroads. Here we propose an automated system that uses a camera mounted inside the locomotive cab to continuously record video feeds. These feeds are analyzed in real time to detect the face of the driver and recognize the driver using state-of-the-art deep learning techniques. The outcome is increased safety for train pilots. Cameras continuously capture video from inside the cab, which is stored on an on-board data acquisition device. Using advanced computer vision and deep learning techniques, the videos are analyzed at regular intervals to detect the presence of the pilot and identify the pilot. Using a time-based analysis, the system determines how long the shift has been active. If this time exceeds the allocated shift time, an alert is sent to dispatch to adjust shift hours.
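
The time-based analysis at the end of this abstract can be sketched as below. This is a simplified illustration that assumes face recognition has already produced a list of timestamped driver identities; the `Sighting` type and both function names are hypothetical, and shift length is approximated as the span between the driver's first and last sighting.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# (timestamp, recognized driver id, or None if no face was recognized)
Sighting = Tuple[datetime, Optional[str]]


def active_shift_duration(sightings: List[Sighting], driver_id: str) -> timedelta:
    """Span between the first and last time the given driver was recognized."""
    times = [t for t, who in sightings if who == driver_id]
    if not times:
        return timedelta(0)
    return max(times) - min(times)


def shift_exceeded(sightings: List[Sighting], driver_id: str,
                   limit: timedelta = timedelta(hours=8)) -> bool:
    """True when the driver's active shift is longer than the allowed limit."""
    return active_shift_duration(sightings, driver_id) > limit
```

In a deployed system the result of `shift_exceeded` would trigger the alert to dispatch; a production version would also need to handle gaps, breaks, and misidentifications, which this sketch ignores.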

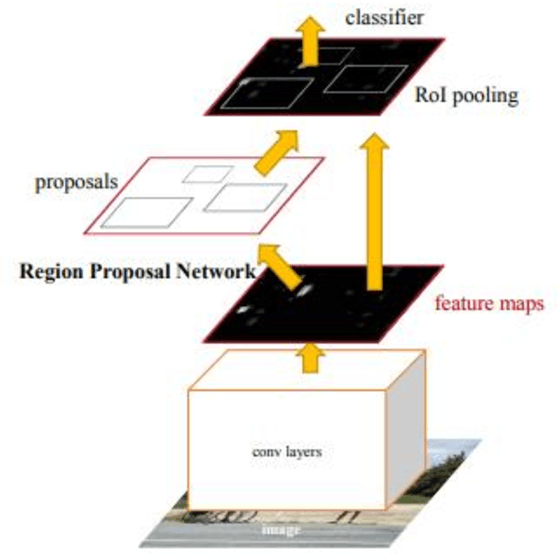

Regional Priority Based Anomaly Detection using Autoencoders

Apr 02, 2018

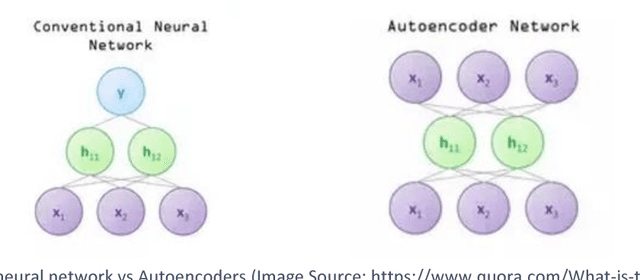

Abstract:In recent times, autoencoders, besides being used for compression, have proven quite useful for regenerating similar images and for image denoising. They have also been explored for anomaly detection in a few cases. However, due to the location invariance property of convolutional neural networks, autoencoders tend to learn from, or search for learned features in, the complete image. This creates issues when the items in the image are not all equally important and their location matters. For such cases, a semi-supervised solution, the regional priority based autoencoder (RPAE), has been proposed. In this model, similar to object detection models, a region proposal network identifies the relevant areas in the images as belonging to one of the predefined categories, and those bounding boxes are then fed into the appropriate decoder based on the category they belong to. Finally, the error scores from all the decoders are combined based on their importance to provide the total reconstruction error.
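
The final combination step can be illustrated with a weighted average of per-category errors. The abstract only says the scores are "combined based on their importance", so the weighted-average form, the function name, and the category names in the usage note are assumptions made for this sketch.

```python
from typing import Dict


def total_reconstruction_error(region_errors: Dict[str, float],
                               importance: Dict[str, float]) -> float:
    """Importance-weighted average of per-category reconstruction errors."""
    weight_sum = sum(importance[category] for category in region_errors)
    if weight_sum == 0:
        return 0.0
    weighted = sum(importance[category] * error
                   for category, error in region_errors.items())
    return weighted / weight_sum
```

For example, with errors `{"signal": 0.8, "background": 0.2}` and importance weights `{"signal": 3.0, "background": 1.0}`, the high-priority region dominates the total score, so an anomaly there raises the overall error more than the same anomaly in the background.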

Data Augmentation of Railway Images for Track Inspection

Feb 05, 2018

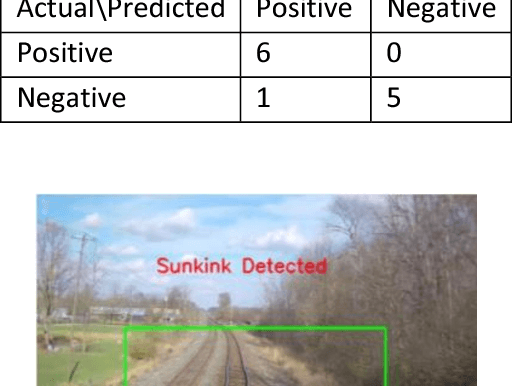

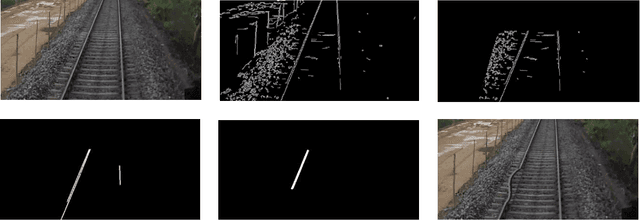

Abstract:Regular maintenance of all assets is pivotal for the proper functioning of a railway. Manual maintenance can be very cumbersome and leaves room for errors. Track anomalies like vegetation overgrowth and sun kinks affect the track structure and result in unequal load transfer and imbalanced lateral forces on the tracks, which cause further deterioration of the tracks and can ultimately result in derailment of the locomotive. Hence there is a need to continuously monitor rail track health. Track anomalies are rare, with a skew as high as one anomaly in millions of good images. We propose a method to build training data that will make our algorithms more robust and help us detect real-world track issues. The data augmentation directly improves our ability to detect anomalies and hence reduces the time railroads spend on manual inspection. This paper describes a real-world use case of detecting railway track defects from a camera mounted on a moving locomotive and tracking their locations. The camera is engineered to withstand the environmental factors on a moving train and provide a consistent, steady image at around 30 frames per second. An image simulation pipeline of track detection, region of interest selection, and image augmentation for anomalies is implemented. Training images are simulated for sun kink and vegetation overgrowth. An Inception V3 model pretrained on the ImageNet dataset is fine-tuned for two-class classification. For the case of vegetation overgrowth, the model generalizes well on actual vegetation images, though it was trained and validated solely on simulated images, which might have a different distribution than the actual vegetation. The sun kink classifier can classify professionally simulated sun kink videos with a precision of 97.5%.

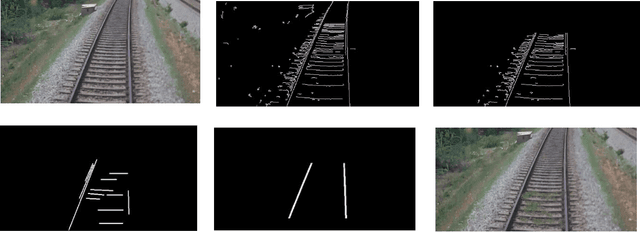

Railway Track Specific Traffic Signal Selection Using Deep Learning

Dec 17, 2017

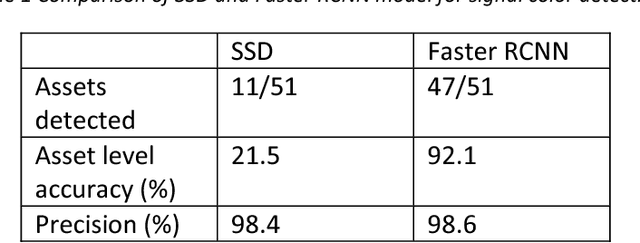

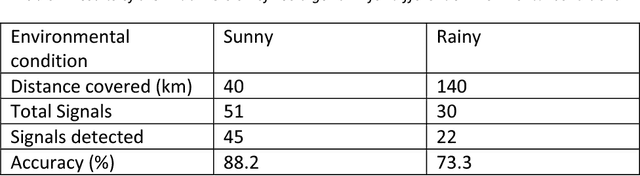

Abstract:With the railway transportation industry moving actively towards automation, accurate location and inventory of wayside track assets like traffic signals, crossings, switches, mileposts, etc. is of extreme importance. With the new Positive Train Control (PTC) regulation coming into effect, many railway safety rules will be tied directly to the location of assets like mileposts and signals. Newer speed regulations will be enforced based on the location of the train with respect to a wayside asset. Hence it is essential for the railroads to have an accurate database of the types and locations of these assets. This paper describes a real-world use case of detecting railway signals from a camera mounted on a moving locomotive and tracking their locations. The camera is engineered to withstand the environmental factors on a moving train and provide a consistent, steady image at around 30 frames per second. Using advanced image analysis and deep learning techniques, signals are detected in these camera images and a database of their locations is created. Railway signals differ a lot from road signals in terms of shapes and rules for placement with respect to the track. Due to space constraints and traffic densities in urban areas, signals are not placed on the same side of the track, and multiple lines can run in parallel. Hence there is a need to associate each detected signal with the track on which the train runs. We present a method to associate the signals with the specific track they belong to using a video feed from the front-facing camera mounted on the lead locomotive. A pipeline of track detection, region of interest selection, and signal detection has been implemented, which gives an overall accuracy of 94.7% on a route covering 150 km with 247 signals.
