Darshana Saravanan

Investigating Mechanisms for In-Context Vision Language Binding

May 28, 2025

Abstract: To understand a prompt, Vision-Language Models (VLMs) must perceive the image, comprehend the text, and build associations within and across both modalities. For instance, given an 'image of a red toy car', the model should associate this image with phrases like 'car', 'red toy', 'red object', etc. Feng and Steinhardt propose the Binding ID mechanism in LLMs, suggesting that an entity and its corresponding attribute tokens share a Binding ID in the model activations. We investigate this for image-text binding in VLMs using a synthetic dataset and task that requires models to associate 3D objects in an image with their descriptions in the text. Our experiments demonstrate that VLMs assign a distinct Binding ID to an object's image tokens and its textual references, enabling in-context association.

VELOCITI: Can Video-Language Models Bind Semantic Concepts through Time?

Jun 16, 2024

Abstract: Compositionality is a fundamental aspect of vision-language understanding and is especially required for videos since they contain multiple entities (e.g. persons, actions, and scenes) interacting dynamically over time. Existing benchmarks focus primarily on perception capabilities. However, they do not study binding, the ability of a model to associate entities through appropriate relationships. To this end, we propose VELOCITI, a new benchmark building on complex movie clips and dense semantic role label annotations to test perception and binding in video-language models (contrastive and Video-LLMs). Our perception-based tests require discriminating video-caption pairs that share similar entities, and the binding tests require models to associate the correct entity with a given situation while ignoring the different yet plausible entities that also appear in the same video. While current state-of-the-art models perform moderately well on perception tests, accuracy is near random when both entities are present in the same video, indicating that they fail at binding tests. Even the powerful Gemini 1.5 Flash has a substantial gap (16-28%) with respect to human accuracy in such binding tests.

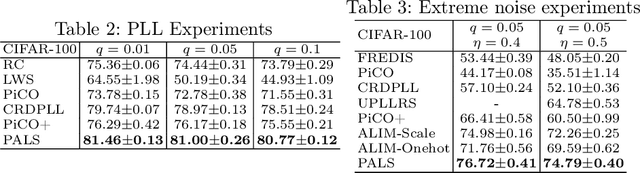

SARI: Simplistic Average and Robust Identification based Noisy Partial Label Learning

Feb 07, 2024

Abstract: Partial label learning (PLL) is a weakly-supervised learning paradigm where each training instance is paired with a set of candidate labels (partial label), one of which is the true label. Noisy PLL (NPLL) relaxes this constraint by allowing some partial labels to not contain the true label, enhancing the practicality of the problem. Our work centers on NPLL and presents a minimalistic framework called SARI that initially assigns pseudo-labels to images by exploiting the noisy partial labels through a weighted nearest neighbour algorithm. These pseudo-label and image pairs are then used to train a deep neural network classifier with label smoothing and standard regularization techniques. The classifier's features and predictions are subsequently employed to refine and enhance the accuracy of pseudo-labels. SARI combines the strengths of Average Based Strategies (in pseudo-labelling) and Identification Based Strategies (in classifier training) from the literature. We perform thorough experiments on seven datasets and compare SARI against nine NPLL and PLL methods from the prior art. SARI achieves state-of-the-art results in almost all studied settings, obtaining substantial gains in fine-grained classification and extreme noise settings.
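The pseudo-labelling step described in the abstract can be illustrated with a minimal sketch. This is not the SARI implementation: the abstract does not specify the weighting scheme, so the cosine-similarity weights, the value of `k`, and the function name `knn_pseudo_labels` are all assumptions made for illustration. Each instance receives votes from its nearest neighbours' candidate sets, and the highest-scoring class becomes its pseudo-label, even when that class is missing from the instance's own (noisy) partial label.

```python
import numpy as np


def knn_pseudo_labels(features, candidate_mask, k=3):
    """Assign one pseudo-label per instance by similarity-weighted voting.

    Hypothetical helper, not the paper's code.
    features:       (n, d) array of instance embeddings (assumed given).
    candidate_mask: (n, c) binary array; 1 marks a label in the partial label.
    k:              number of neighbours that vote (assumed, must be < n).
    """
    features = np.asarray(features, dtype=float)
    candidate_mask = np.asarray(candidate_mask, dtype=float)

    # Cosine similarity between all pairs of instances.
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = normed @ normed.T
    np.fill_diagonal(sim, -np.inf)  # an instance does not vote for itself

    # Indices of the k most similar neighbours of each instance.
    neighbours = np.argsort(-sim, axis=1)[:, :k]

    n, c = candidate_mask.shape
    votes = np.zeros((n, c))
    for i in range(n):
        for j in neighbours[i]:
            # Assumed weighting: non-negative cosine similarity, added to
            # every label in the neighbour's candidate set.
            votes[i] += max(sim[i, j], 0.0) * candidate_mask[j]

    # The class with the largest accumulated vote is the pseudo-label.
    return votes.argmax(axis=1)
```

Because votes come only from neighbours, an instance whose partial label omits the true class can still be assigned that class when its neighbourhood agrees on it. In the full pipeline described above, these pseudo-labels would then feed classifier training, whose features and predictions are used to refine them.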
