Darshan Gera

S2IL: Structurally Stable Incremental Learning

Mar 15, 2025

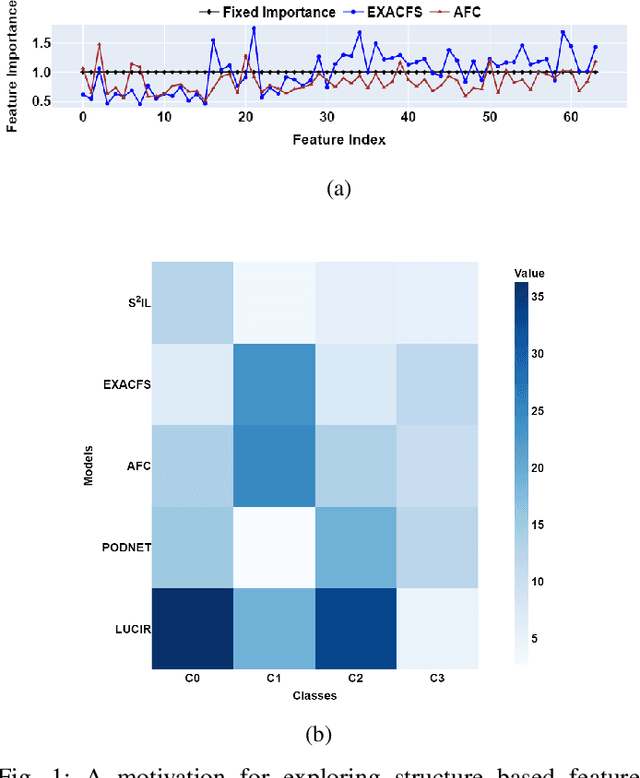

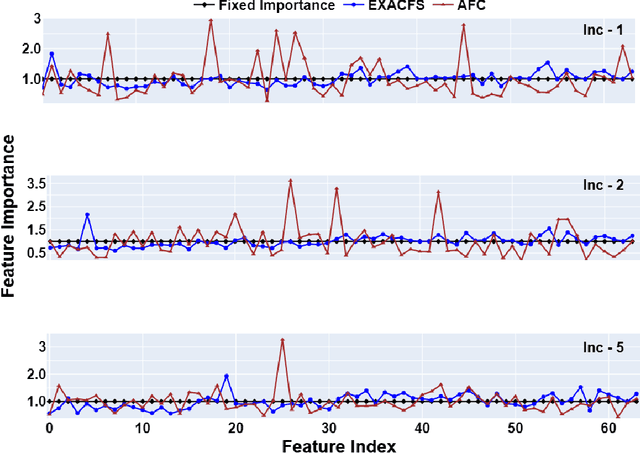

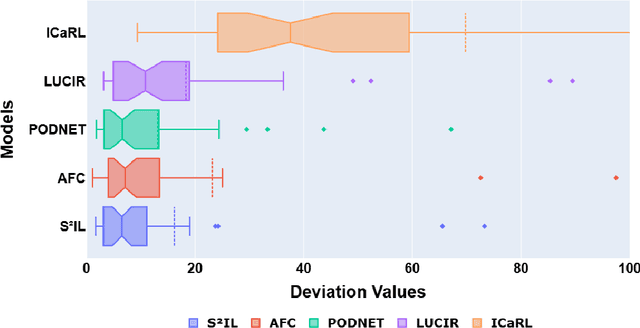

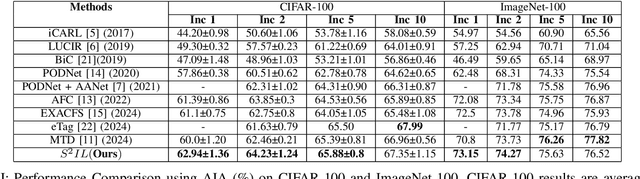

Abstract:Feature Distillation (FD) strategies are proven to be effective in mitigating Catastrophic Forgetting (CF) seen in Class Incremental Learning (CIL). However, current FD approaches enforce strict alignment of feature magnitudes and directions across incremental steps, limiting the model's ability to adapt to new knowledge. In this paper we propose Structurally Stable Incremental Learning(S22IL), a FD method for CIL that mitigates CF by focusing on preserving the overall spatial patterns of features which promote flexible (plasticity) yet stable representations that preserve old knowledge (stability). We also demonstrate that our proposed method S2IL achieves strong incremental accuracy and outperforms other FD methods on SOTA benchmark datasets CIFAR-100, ImageNet-100 and ImageNet-1K. Notably, S2IL outperforms other methods by a significant margin in scenarios that have a large number of incremental tasks.

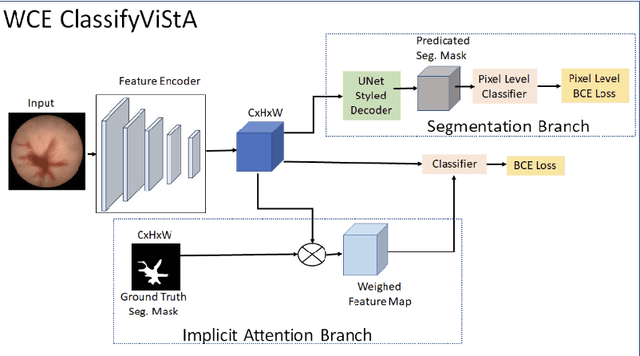

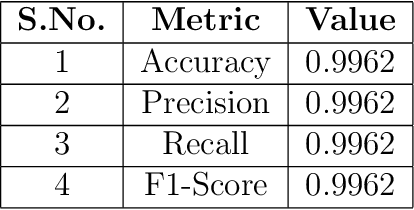

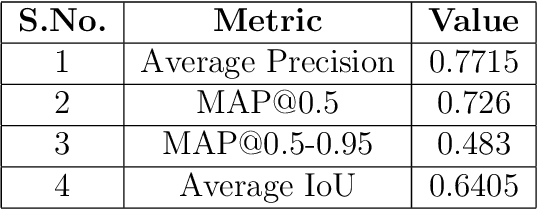

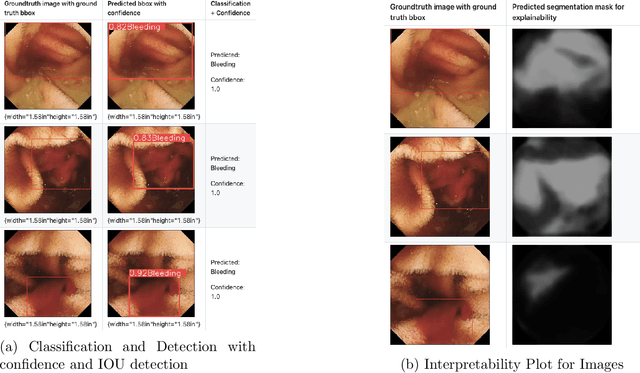

ClassifyViStA:WCE Classification with Visual understanding through Segmentation and Attention

Dec 24, 2024

Abstract:Gastrointestinal (GI) bleeding is a serious medical condition that presents significant diagnostic challenges, particularly in settings with limited access to healthcare resources. Wireless Capsule Endoscopy (WCE) has emerged as a powerful diagnostic tool for visualizing the GI tract, but it requires time-consuming manual analysis by experienced gastroenterologists, which is prone to human error and inefficient given the increasing number of patients.To address this challenge, we propose ClassifyViStA, an AI-based framework designed for the automated detection and classification of bleeding and non-bleeding frames from WCE videos. The model consists of a standard classification path, augmented by two specialized branches: an implicit attention branch and a segmentation branch.The attention branch focuses on the bleeding regions, while the segmentation branch generates accurate segmentation masks, which are used for classification and interpretability. The model is built upon an ensemble of ResNet18 and VGG16 architectures to enhance classification performance. For the bleeding region detection, we implement a Soft Non-Maximum Suppression (Soft NMS) approach with YOLOv8, which improves the handling of overlapping bounding boxes, resulting in more accurate and nuanced detections.The system's interpretability is enhanced by using the segmentation masks to explain the classification results, offering insights into the decision-making process similar to the way a gastroenterologist identifies bleeding regions. Our approach not only automates the detection of GI bleeding but also provides an interpretable solution that can ease the burden on healthcare professionals and improve diagnostic efficiency. Our code is available at ClassifyViStA.

EXACFS -- A CIL Method to mitigate Catastrophic Forgetting

Oct 31, 2024Abstract:Deep neural networks (DNNS) excel at learning from static datasets but struggle with continual learning, where data arrives sequentially. Catastrophic forgetting, the phenomenon of forgetting previously learned knowledge, is a primary challenge. This paper introduces EXponentially Averaged Class-wise Feature Significance (EXACFS) to mitigate this issue in the class incremental learning (CIL) setting. By estimating the significance of model features for each learned class using loss gradients, gradually aging the significance through the incremental tasks and preserving the significant features through a distillation loss, EXACFS effectively balances remembering old knowledge (stability) and learning new knowledge (plasticity). Extensive experiments on CIFAR-100 and ImageNet-100 demonstrate EXACFS's superior performance in preserving stability while acquiring plasticity.

Predictive Analysis of Tuberculosis Treatment Outcomes Using Machine Learning: A Karnataka TB Data Study at a Scale

Mar 13, 2024

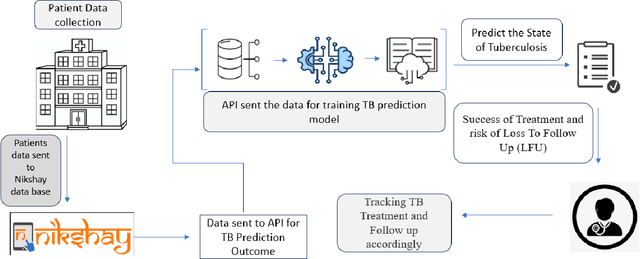

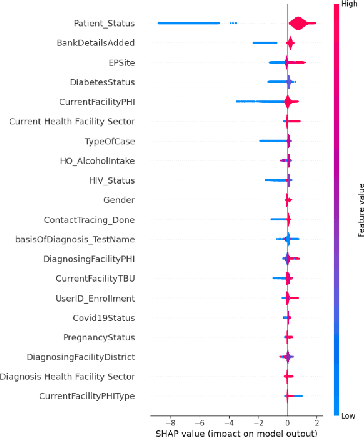

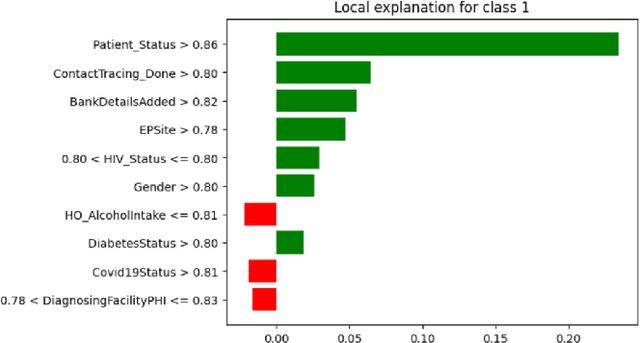

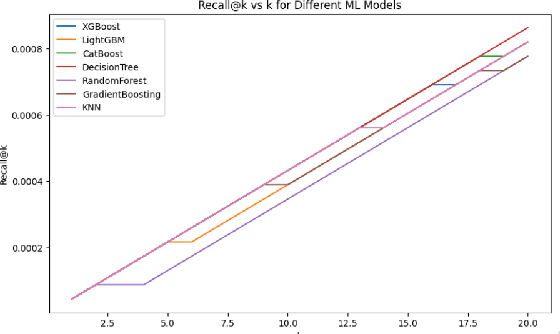

Abstract:Tuberculosis (TB) remains a global health threat, ranking among the leading causes of mortality worldwide. In this context, machine learning (ML) has emerged as a transformative force, providing innovative solutions to the complexities associated with TB treatment.This study explores how machine learning, especially with tabular data, can be used to predict Tuberculosis (TB) treatment outcomes more accurately. It transforms this prediction task into a binary classification problem, generating risk scores from patient data sourced from NIKSHAY, India's national TB control program, which includes over 500,000 patient records. Data preprocessing is a critical component of the study, and the model achieved an recall of 98% and an AUC-ROC score of 0.95 on the validation set, which includes 20,000 patient records.We also explore the use of Natural Language Processing (NLP) for improved model learning. Our results, corroborated by various metrics and ablation studies, validate the effectiveness of our approach. The study concludes by discussing the potential ramifications of our research on TB eradication efforts and proposing potential avenues for future work. This study marks a significant stride in the battle against TB, showcasing the potential of machine learning in healthcare.

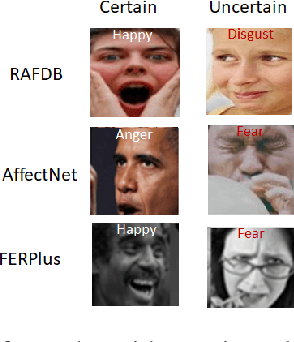

Class adaptive threshold and negative class guided noisy annotation robust Facial Expression Recognition

May 03, 2023

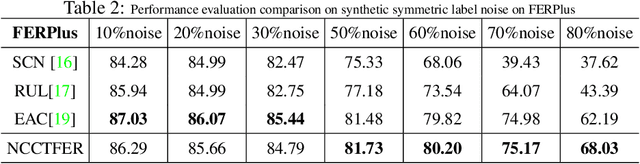

Abstract:The hindering problem in facial expression recognition (FER) is the presence of inaccurate annotations referred to as noisy annotations in the datasets. These noisy annotations are present in the datasets inherently because the labeling is subjective to the annotator, clarity of the image, etc. Recent works use sample selection methods to solve this noisy annotation problem in FER. In our work, we use a dynamic adaptive threshold to separate confident samples from non-confident ones so that our learning won't be hampered due to non-confident samples. Instead of discarding the non-confident samples, we impose consistency in the negative classes of those non-confident samples to guide the model to learn better in the positive class. Since FER datasets usually come with 7 or 8 classes, we can correctly guess a negative class by 85% probability even by choosing randomly. By learning "which class a sample doesn't belong to", the model can learn "which class it belongs to" in a better manner. We demonstrate proposed framework's effectiveness using quantitative as well as qualitative results. Our method performs better than the baseline by a margin of 4% to 28% on RAFDB and 3.3% to 31.4% on FERPlus for various levels of synthetic noisy labels in the aforementioned datasets.

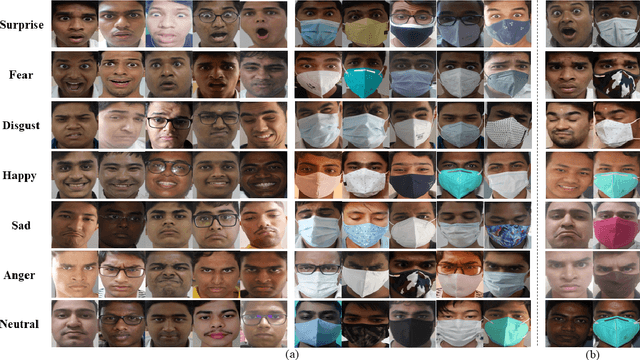

Masked Student Dataset of Expressions

Apr 07, 2023

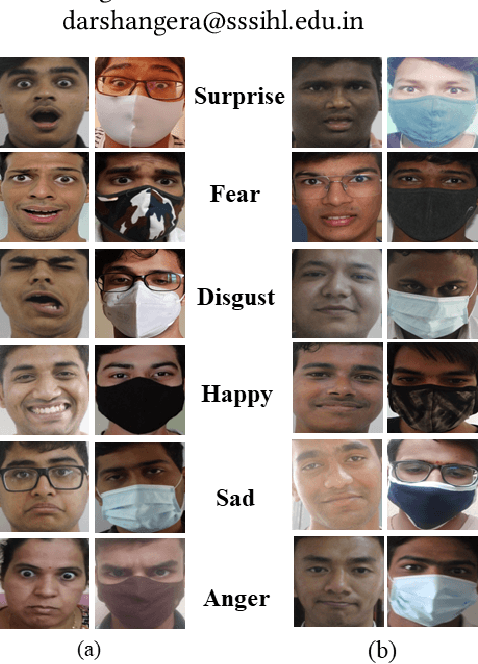

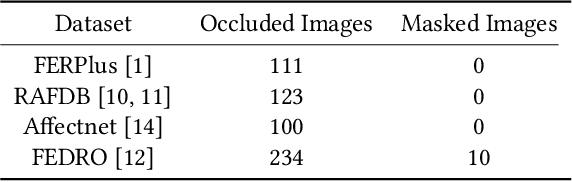

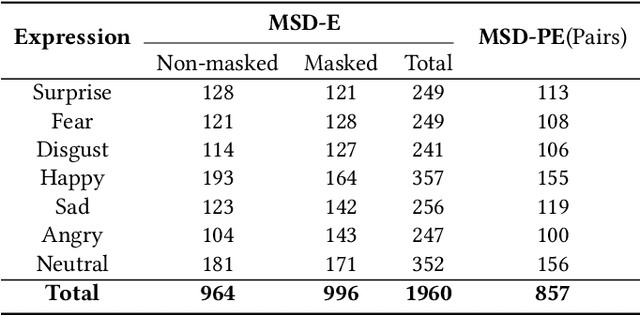

Abstract:Facial expression recognition (FER) algorithms work well in constrained environments with little or no occlusion of the face. However, real-world face occlusion is prevalent, most notably with the need to use a face mask in the current Covid-19 scenario. While there are works on the problem of occlusion in FER, little has been done before on the particular face mask scenario. Moreover, the few works in this area largely use synthetically created masked FER datasets. Motivated by these challenges posed by the pandemic to FER, we present a novel dataset, the Masked Student Dataset of Expressions or MSD-E, consisting of 1,960 real-world non-masked and masked facial expression images collected from 142 individuals. Along with the issue of obfuscated facial features, we illustrate how other subtler issues in masked FER are represented in our dataset. We then provide baseline results using ResNet-18, finding that its performance dips in the non-masked case when trained for FER in the presence of masks. To tackle this, we test two training paradigms: contrastive learning and knowledge distillation, and find that they increase the model's performance in the masked scenario while maintaining its non-masked performance. We further visualise our results using t-SNE plots and Grad-CAM, demonstrating that these paradigms capitalise on the limited features available in the masked scenario. Finally, we benchmark SOTA methods on MSD-E.

ABAW : Facial Expression Recognition in the wild

Mar 17, 2023Abstract:The fifth Affective Behavior Analysis in-the-wild (ABAW) competition has multiple challenges such as Valence-Arousal Estimation Challenge, Expression Classification Challenge, Action Unit Detection Challenge, Emotional Reaction Intensity Estimation Challenge. In this paper we have dealt only expression classification challenge using multiple approaches such as fully supervised, semi-supervised and noisy label approach. Our approach using noise aware model has performed better than baseline model by 10.46% and semi supervised model has performed better than baseline model by 9.38% and the fully supervised model has performed better than the baseline by 9.34%

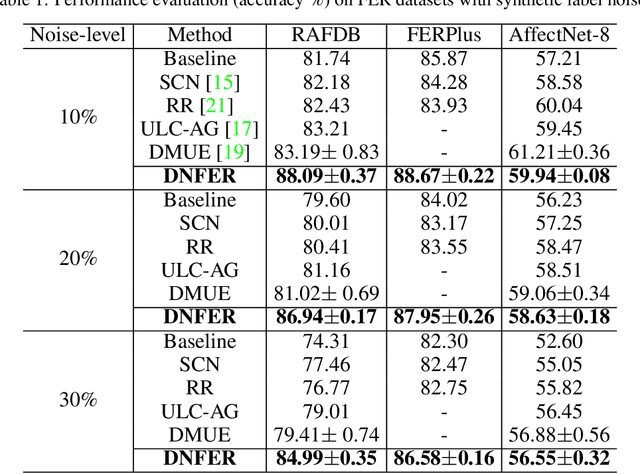

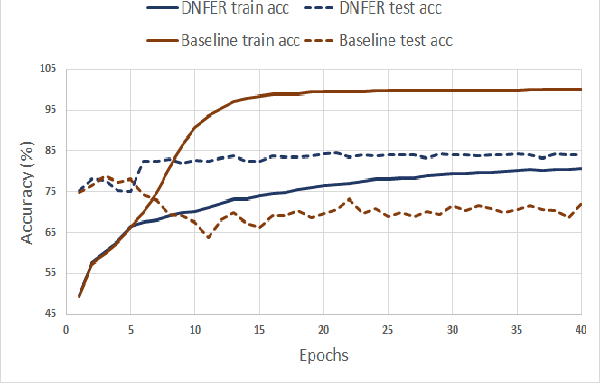

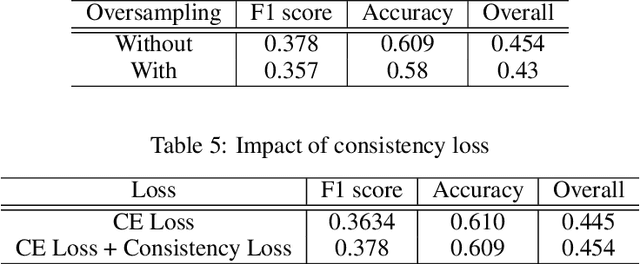

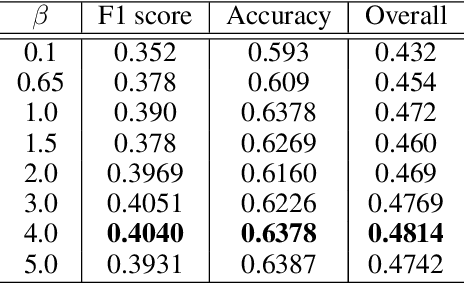

Dynamic Adaptive Threshold based Learning for Noisy Annotations Robust Facial Expression Recognition

Aug 22, 2022

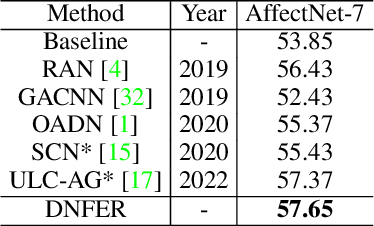

Abstract:The real-world facial expression recognition (FER) datasets suffer from noisy annotations due to crowd-sourcing, ambiguity in expressions, the subjectivity of annotators and inter-class similarity. However, the recent deep networks have strong capacity to memorize the noisy annotations leading to corrupted feature embedding and poor generalization. To handle noisy annotations, we propose a dynamic FER learning framework (DNFER) in which clean samples are selected based on dynamic class specific threshold during training. Specifically, DNFER is based on supervised training using selected clean samples and unsupervised consistent training using all the samples. During training, the mean posterior class probabilities of each mini-batch is used as dynamic class-specific threshold to select the clean samples for supervised training. This threshold is independent of noise rate and does not need any clean data unlike other methods. In addition, to learn from all samples, the posterior distributions between weakly-augmented image and strongly-augmented image are aligned using an unsupervised consistency loss. We demonstrate the robustness of DNFER on both synthetic as well as on real noisy annotated FER datasets like RAFDB, FERPlus, SFEW and AffectNet.

SS-MFAR : Semi-supervised Multi-task Facial Affect Recognition

Jul 19, 2022

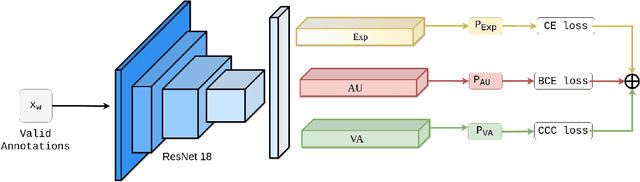

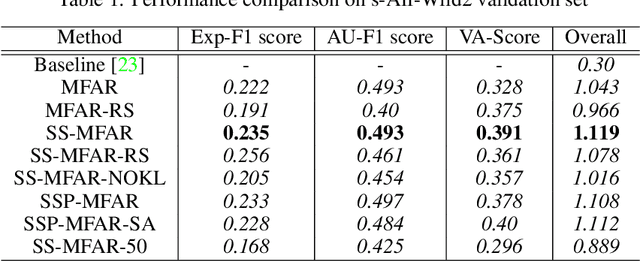

Abstract:Automatic affect recognition has applications in many areas such as education, gaming, software development, automotives, medical care, etc. but it is non trivial task to achieve appreciable performance on in-the-wild data sets. In-the-wild data sets though represent real-world scenarios better than synthetic data sets, the former ones suffer from the problem of incomplete labels. Inspired by semi-supervised learning, in this paper, we introduce our submission to the Multi-Task-Learning Challenge at the 4th Affective Behavior Analysis in-the-wild (ABAW) 2022 Competition. The three tasks that are considered in this challenge are valence-arousal(VA) estimation, classification of expressions into 6 basic (anger, disgust, fear, happiness, sadness, surprise), neutral, and the 'other' category and 12 action units(AU) numbered AU-\{1,2,4,6,7,10,12,15,23,24,25,26\}. Our method Semi-supervised Multi-task Facial Affect Recognition titled \textbf{SS-MFAR} uses a deep residual network with task specific classifiers for each of the tasks along with adaptive thresholds for each expression class and semi-supervised learning for the incomplete labels. Source code is available at https://github.com/1980x/ABAW2022DMACS.

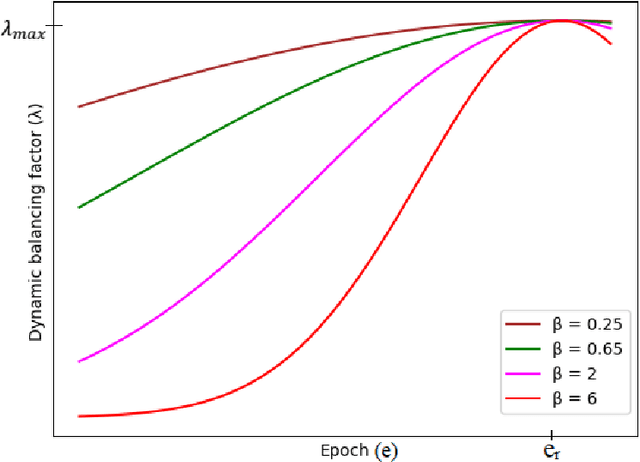

Affect Expression Behaviour Analysis in the Wild using Consensual Collaborative Training

Jul 24, 2021

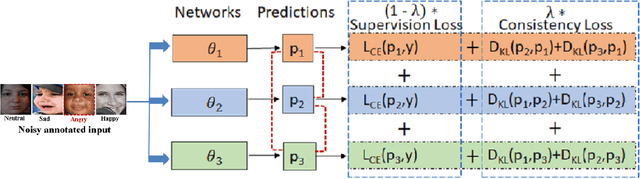

Abstract:Facial expression recognition (FER) in the wild is crucial for building reliable human-computer interactive systems. However, annotations of large scale datasets in FER has been a key challenge as these datasets suffer from noise due to various factors like crowd sourcing, subjectivity of annotators, poor quality of images, automatic labelling based on key word search etc. Such noisy annotations impede the performance of FER due to the memorization ability of deep networks. During early learning stage, deep networks fit on clean data. Then, eventually, they start overfitting on noisy labels due to their memorization ability, which limits FER performance. This report presents Consensual Collaborative Training (CCT) framework used in our submission to expression recognition track of the Affective Behaviour Analysis in-the-wild (ABAW) 2021 competition. CCT co-trains three networks jointly using a convex combination of supervision loss and consistency loss, without making any assumption about the noise distribution. A dynamic transition mechanism is used to move from supervision loss in early learning to consistency loss for consensus of predictions among networks in the later stage. Co-training reduces overall error, and consistency loss prevents overfitting to noisy samples. The performance of the model is validated on challenging Aff-Wild2 dataset for categorical expression classification. Our code is made publicly available at https://github.com/1980x/ABAW2021DMACS.

* 7 pages, 2 figures. arXiv admin note: substantial text overlap with arXiv:2009.14440. substantial text overlap with arXiv:2107.04746

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge