Darko Zibar

Subspace tracking: a novel measurement method to test the standard phase noise model of optical frequency combs

Dec 18, 2025

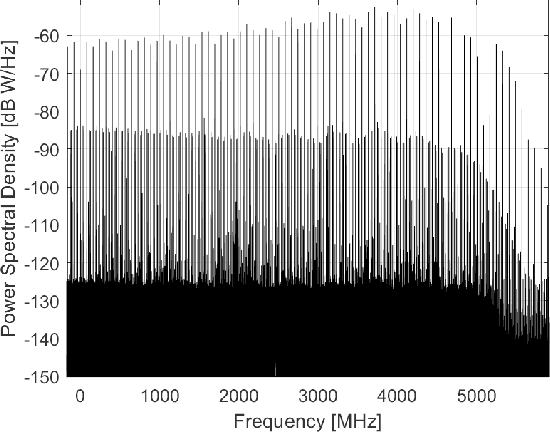

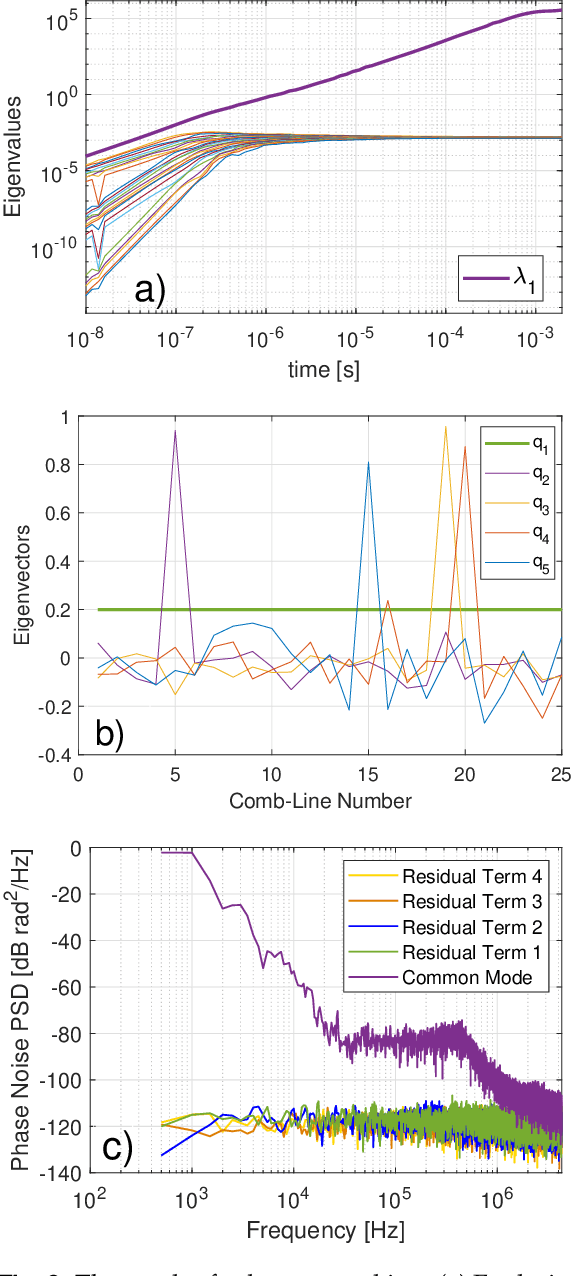

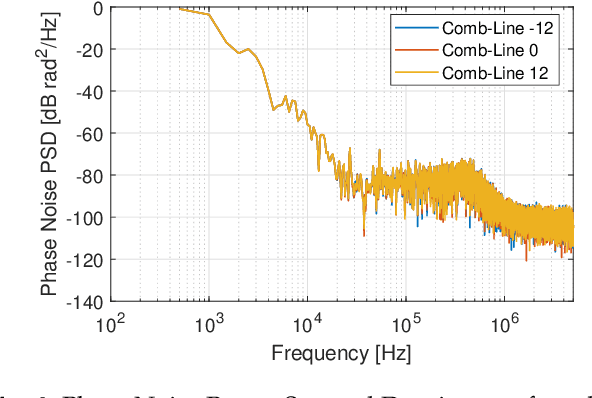

Abstract: The introduction of digital signal processing (DSP) assisted coherent detection has been a cornerstone of modern fiber-optic communication systems. The ability to compensate digitally, i.e. after the analog-to-digital converter, for chromatic dispersion, polarization mode dispersion, and phase noise has rendered traditional analog feedback loops largely obsolete. While analog techniques remain prevalent for phase noise characterization of single-frequency lasers, the phase noise characterization of optical frequency combs presents a greater challenge. This complexity arises from the number of distinct phase noise sources affecting an optical frequency comb. Here, we show how a phase noise measurement technique based on multi-heterodyne coherent detection and DSP-based subspace tracking can be used to identify, measure and quantify various phase noise sources associated with an optical frequency comb.

Experimental End-to-End Optimization of Directly Modulated Laser-based IM/DD Transmission

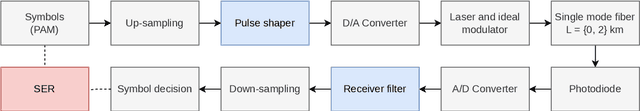

Aug 27, 2025

Abstract: Directly modulated lasers (DMLs) are an attractive technology for short-reach intensity modulation and direct detection communication systems. However, their complex nonlinear dynamics make the modeling and optimization of DML-based systems challenging. In this paper, we study the end-to-end optimization of DML-based systems based on a data-driven surrogate model trained on experimental data. The end-to-end optimization includes the pulse shaping and equalizer filters, the bias current and the modulation radio-frequency (RF) power applied to the laser. The performance of the end-to-end optimization scheme is tested on the experimental setup and compared to 4 different benchmark schemes based on linear and nonlinear receiver-side equalization. The results show that the proposed end-to-end scheme is able to deliver better performance throughout the studied symbol rates and transmission distances while employing lower modulation RF power, fewer filter taps and utilizing a smaller signal bandwidth.

Quantum Noise Limited Temperature-Change Estimation for Phase-OTDR Employing Coherent Detection

May 09, 2025

Abstract: The quantum limit is a fundamental lower bound on the uncertainty when estimating a parameter in a system dominated by the minimum amount of noise (quantum noise). For the first time, we derive and demonstrate a quantum limit for temperature-change estimation for coherent phase-OTDR sensing systems.

Low-Complexity Event Detection and Identification in Coherent Correlation OTDR Measurements

Jan 29, 2025

Abstract: Pairing coherent correlation OTDR with low-complexity analysis methods, we investigate the detection of fast temperature changes and vibrations in optical fibers. A localization accuracy of ~2 m and the extraction of vibration amplitudes and frequencies are demonstrated.

Experimental Demonstration of End-to-End Optimization for Directly Modulated Laser-based IM/DD Systems

Oct 23, 2024

Abstract: We experimentally demonstrate the joint optimization of transmitter and receiver parameters in directly modulated laser systems, showing superior performance compared to nonlinear receiver-only equalization while using fewer memory taps, less bandwidth, and lower radio-frequency power.

Blind Equalization using a Variational Autoencoder with Second Order Volterra Channel Model

Oct 21, 2024

Abstract: Existing communication hardware is being pushed to its limits to accommodate the ever-increasing internet usage globally. This leads to non-linear distortion in the communication link that requires non-linear equalization techniques to operate the link at a reasonable bit error rate. This paper addresses the challenge of blind non-linear equalization using a variational autoencoder (VAE) with a second-order Volterra channel model. The VAE framework's cost function, the evidence lower bound (ELBO), is derived for real-valued constellations and can be evaluated analytically without resorting to sampling techniques. We demonstrate the effectiveness of our approach through simulations on a synthetic Wiener-Hammerstein channel and a simulated intensity modulated direct detection (IM/DD) optical link. The results show significant improvements in equalization performance, compared to a VAE with linear channel assumptions, highlighting the importance of appropriate channel modeling in unsupervised VAE equalizer frameworks.
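For readers unfamiliar with the channel model named in this abstract, the following is a minimal sketch of a generic second-order Volterra filter applied to a real-valued 4-PAM sequence. It only illustrates the forward model y[k] = sum_i h1[i] x[k-i] + sum_{i,j} h2[i,j] x[k-i] x[k-j]; it is not the paper's VAE equalizer, and the function name `volterra2`, the kernel values, and the memory length are hypothetical choices made for illustration.

```python
import numpy as np


def volterra2(x, h1, h2):
    """Apply a second-order Volterra filter with zero initial conditions.

    y[k] = sum_i h1[i] * x[k-i] + sum_{i,j} h2[i, j] * x[k-i] * x[k-j]
    """
    x = np.asarray(x, dtype=float)
    h1 = np.asarray(h1, dtype=float)
    h2 = np.asarray(h2, dtype=float)
    m = len(h1)
    if h2.shape != (m, m):
        raise ValueError("h2 must be an (m, m) matrix with m = len(h1)")
    # Prepend m-1 zeros so that samples before the start of x count as zero.
    padded = np.concatenate([np.zeros(m - 1), x])
    # Row k holds [x[k-m+1], ..., x[k]]; reverse it to [x[k], ..., x[k-m+1]].
    windows = np.lib.stride_tricks.sliding_window_view(padded, m)[:, ::-1]
    linear = windows @ h1
    quadratic = np.einsum("ki,ij,kj->k", windows, h2, windows)
    return linear + quadratic


# Hypothetical kernels with a memory of three symbols (illustrative values only).
h1_demo = np.array([1.0, 0.4, 0.1])
h2_demo = 0.02 * np.outer(h1_demo, h1_demo)

# Real-valued 4-PAM symbols passed through the nonlinear channel.
rng = np.random.default_rng(0)
symbols = rng.choice([-3.0, -1.0, 1.0, 3.0], size=1000)
received = volterra2(symbols, h1_demo, h2_demo)
```

Setting `h2` to zero reduces the model to an ordinary FIR channel, which is the linear assumption the abstract compares against.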

End-to-End Learning of Transmitter and Receiver Filters in Bandwidth Limited Fiber Optic Communication Systems

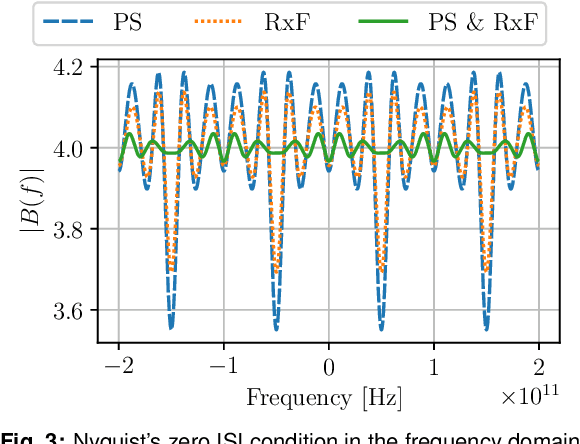

Sep 18, 2024

Abstract: This paper investigates the application of end-to-end (E2E) learning for joint optimization of pulse-shaper and receiver filter to reduce intersymbol interference (ISI) in bandwidth-limited communication systems. We investigate this in two numerical simulation models: 1) an additive white Gaussian noise (AWGN) channel with bandwidth limitation and 2) an intensity modulated direct detection (IM/DD) link employing an electro-absorption modulator. For both simulation models, we implement a wavelength division multiplexing (WDM) scheme to ensure that the learned filters adhere to the bandwidth constraints of the WDM channels. Our findings reveal that E2E learning greatly surpasses traditional single-sided transmitter pulse-shaper or receiver filter optimization methods, achieving significant performance gains in terms of symbol error rate with shorter filter lengths. These results suggest that E2E learning can decrease the complexity and enhance the performance of future high-speed optical communication systems.

Phase Noise Characterization of Cr:ZnS Frequency Comb using Subspace Tracking

Sep 03, 2024

Abstract: We present a comprehensive phase noise characterization of a mid-IR Cr:ZnS frequency comb. Despite their emergence as a platform for high-resolution dual-comb spectroscopy, detailed investigations into the phase noise of Cr:ZnS combs have been lacking. To address this, we use a recently proposed phase noise measurement technique that employs multi-heterodyne detection and subspace tracking. This allows for the measurement of the common mode, repetition-rate and high-order phase noise terms, and their corresponding scaling as a function of comb-line number, using a single measurement set-up. We demonstrate that the comb under test is dominated by the common mode phase noise, while all the other phase noise terms are below the measurement noise floor (~ -120 dB rad^2/Hz), and are thereby not identifiable.
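The decomposition described in this abstract can be illustrated with a small simulation. The sketch below assumes the commonly used two-term comb model, in which the phase of line n is phi_n(t) = phi_cm(t) + n * phi_rep(t), and uses a batch eigendecomposition of the sample covariance matrix as a simplified stand-in for subspace tracking. It is not the authors' DSP chain; the function names, noise levels, and line count are hypothetical.

```python
import numpy as np


def simulate_comb_phases(n_lines=11, n_samples=20000, sigma_cm=1e-2,
                         sigma_rep=1e-3, sigma_meas=1e-4, seed=0):
    """Simulate per-line phases under the assumed two-term model.

    phi_n(t) = phi_cm(t) + n * phi_rep(t) + measurement noise,
    with both phase terms modeled as random walks (an assumption).
    """
    rng = np.random.default_rng(seed)
    # Comb-line indices centered on zero, e.g. -5 ... 5 for 11 lines.
    n = np.arange(n_lines) - n_lines // 2
    phi_cm = np.cumsum(sigma_cm * rng.standard_normal(n_samples))
    phi_rep = np.cumsum(sigma_rep * rng.standard_normal(n_samples))
    phases = phi_cm[None, :] + n[:, None] * phi_rep[None, :]
    phases = phases + sigma_meas * rng.standard_normal(phases.shape)
    return n, phases


def phase_noise_subspace(phases):
    """Eigendecompose the sample covariance of the line phases.

    Returns eigenvalues in descending order and the matching eigenvectors
    (one per column). Each eigenvector shows how one independent phase
    noise source is distributed across the comb lines.
    """
    centered = phases - phases.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / centered.shape[1]
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]


line_index, phases = simulate_comb_phases()
eigvals, eigvecs = phase_noise_subspace(phases)

# Fraction of the total phase variance captured by the two largest eigenvalues.
explained_by_two = eigvals[:2].sum() / eigvals.sum()
```

In this toy setting only two eigenvalues rise above the measurement noise level, mirroring the idea that sources below the noise floor cannot be identified. A recursive tracker would update the subspace sample by sample instead of using the full record at once.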

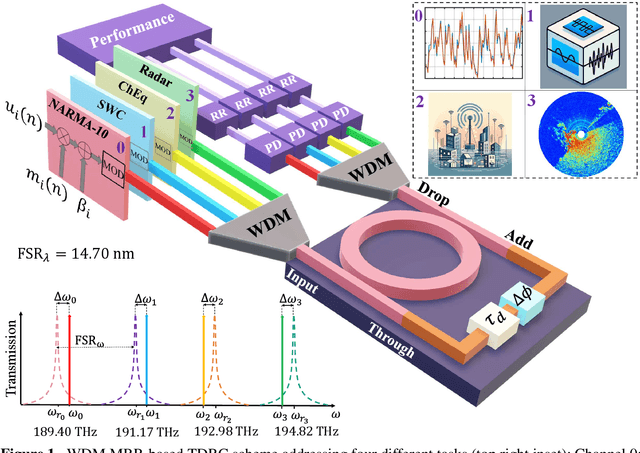

Multi-task Photonic Reservoir Computing: Wavelength Division Multiplexing for Parallel Computing with a Silicon Microring Resonator

Jul 30, 2024

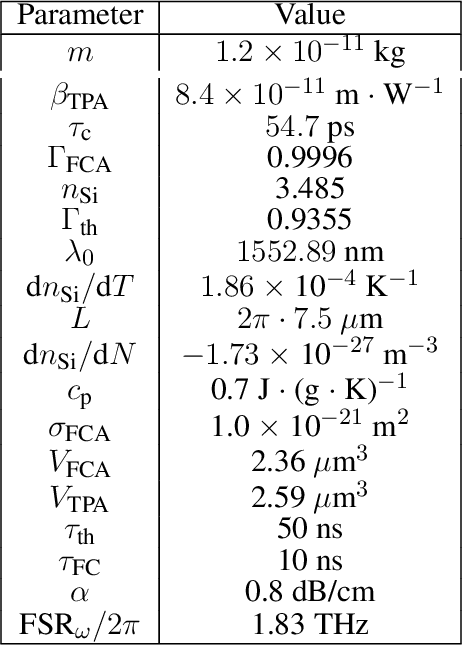

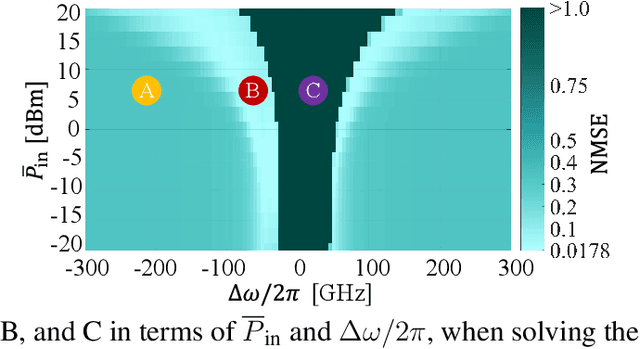

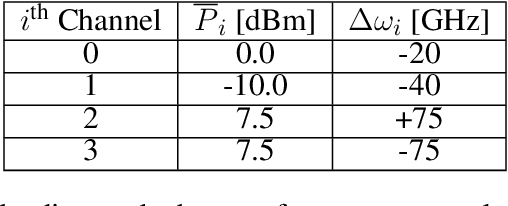

Abstract: Nowadays, as the demand for more powerful computing resources continues to grow, alternative advanced computing paradigms are under extensive investigation. Significant effort has been made to deviate from conventional Von Neumann architectures. In-memory computing has emerged in the field of electronics as a possible solution to the infamous bottleneck between memory and computing processors, which reduces the effective throughput of data. In photonics, novel schemes attempt to collocate the computing processor and memory in a single device. Photonics offers the flexibility of multiplexing streams of data not only spatially and in time, but also in frequency or, equivalently, in wavelength, which makes it highly suitable for parallel computing. Here, we numerically show the use of time and wavelength division multiplexing (WDM) to solve four independent tasks at the same time in a single photonic chip, serving as a proof of concept for our proposal. The system is a time-delay reservoir computing (TDRC) scheme based on a microring resonator (MRR). The addressed tasks cover different applications: time-series prediction, waveform signal classification, wireless channel equalization, and radar signal prediction. The system is also tested for simultaneous computing of up to 10 instances of the same task, exhibiting excellent performance. The footprint of the system is reduced by using time-division multiplexing of the nodes that act as the neurons of the studied neural network scheme. WDM is used for the parallelization of wavelength channels, each addressing a single task. By adjusting the input power and frequency of each optical channel, we can achieve levels of performance for each of the tasks that are comparable to those quoted in state-of-the-art reports focusing on single-task operation...

End-to-End Learning of Pulse-Shaper and Receiver Filter in the Presence of Strong Intersymbol Interference

May 22, 2024

Abstract: We numerically demonstrate that joint optimization of an FIR-based pulse-shaper and receiver filter results in improved system performance and shorter filter lengths (lower complexity) for 4-PAM 100 GBd IM/DD systems.
