Darko Anicic

On-Device Continual Learning for Unsupervised Visual Anomaly Detection in Dynamic Manufacturing

Dec 15, 2025

Abstract: In modern manufacturing, Visual Anomaly Detection (VAD) is essential for automated inspection and consistent product quality. Yet increasingly dynamic and flexible production environments introduce three key challenges. First, frequent product changes in small-batch and on-demand manufacturing require rapid model updates. Second, legacy edge hardware lacks the resources to train and run large AI models. Finally, both anomalous and normal training data are often scarce, particularly for newly introduced product variations. We investigate on-device continual learning for unsupervised VAD with localization, extending PatchCore to incorporate online learning for real-world industrial scenarios. The proposed method leverages a lightweight feature extractor and an incremental coreset update mechanism based on k-center selection, enabling rapid, memory-efficient adaptation from limited data while eliminating costly cloud retraining. Evaluations on an industrial use case are conducted using a testbed designed to emulate flexible production with frequent variant changes in a controlled environment. Our method achieves a 12% AUROC improvement over the baseline, an 80% reduction in memory usage, and faster training compared to batch retraining. These results confirm that our method delivers accurate, resource-efficient, and adaptive VAD suitable for dynamic and smart manufacturing.
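The abstract names an incremental coreset update based on k-center selection but gives no details. The following is a minimal sketch of greedy k-center selection over feature vectors, with one possible incremental update that re-selects from the union of the old coreset and the new features. The function names, the re-selection strategy, and the toy 1-D features are assumptions for illustration, not the paper's implementation.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_center_greedy(points, k):
    """Greedy k-center: repeatedly add the point farthest from the chosen set."""
    candidates = list(points)
    if not candidates or k <= 0:
        return []
    selected = [candidates[0]]  # arbitrary first center
    # Distance from every candidate to its nearest selected center.
    min_d = [sq_dist(p, selected[0]) for p in candidates]
    while len(selected) < k and max(min_d) > 0:
        i = max(range(len(candidates)), key=min_d.__getitem__)
        center = candidates[i]
        selected.append(center)
        min_d = [min(d, sq_dist(p, center)) for p, d in zip(candidates, min_d)]
    return selected


def update_coreset(coreset, new_features, budget):
    """Assumed update rule: merge new features, then re-select within the budget."""
    return k_center_greedy(list(coreset) + list(new_features), budget)


# Toy 1-D features (hypothetical values).
coreset = k_center_greedy([(0,), (1,), (10,)], k=2)
print(coreset)  # [(0,), (10,)]
coreset = update_coreset(coreset, [(20,), (11,)], budget=3)
print(coreset)  # [(0,), (20,), (10,)]
```

Because the memory bank never grows beyond `budget` entries, an update of this kind keeps storage bounded as new product variants arrive, which is the property the abstract emphasizes.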

On-device Online Learning and Semantic Management of TinyML Systems

May 15, 2024

Abstract: Recent advances in Tiny Machine Learning (TinyML) empower low-footprint embedded devices for real-time on-device Machine Learning. While many acknowledge the potential benefits of TinyML, its practical implementation presents unique challenges. This study aims to bridge the gap between prototyping single TinyML models and developing reliable TinyML systems in production: (1) Embedded devices operate in dynamically changing conditions. Existing TinyML solutions primarily focus on inference, with models trained offline on powerful machines and deployed as static objects. However, static models may underperform in the real world due to evolving input data distributions. We propose online learning to enable training on constrained devices, adapting local models towards the latest field conditions. (2) Nevertheless, current on-device learning methods struggle with heterogeneous deployment conditions and the scarcity of labeled data when applied across numerous devices. We introduce federated meta-learning incorporating online learning to enhance model generalization, facilitating rapid learning. This approach ensures optimal performance among distributed devices through knowledge sharing. (3) Moreover, TinyML's pivotal advantage is widespread adoption. Embedded devices and TinyML models prioritize extreme efficiency, leading to diverse characteristics ranging from memory and sensors to model architectures. Given their diversity and non-standardized representations, managing these resources becomes challenging as TinyML systems scale up. We present semantic management for the joint management of models and devices at scale. We demonstrate our methods through a basic regression example and then assess them in three real-world TinyML applications: handwritten character image classification, keyword audio classification, and smart building presence detection, confirming our approaches' effectiveness.

TinyMetaFed: Efficient Federated Meta-Learning for TinyML

Jul 13, 2023

Abstract: The field of Tiny Machine Learning (TinyML) has made substantial advancements in democratizing machine learning on low-footprint devices, such as microcontrollers. The prevalence of these miniature devices raises the question of whether aggregating their knowledge can benefit TinyML applications. Federated meta-learning is a promising answer to this question, as it addresses the scarcity of labeled data and heterogeneous data distribution across devices in the real world. However, deploying TinyML hardware faces unique resource constraints, making existing methods impractical due to energy, privacy, and communication limitations. We introduce TinyMetaFed, a model-agnostic meta-learning framework suitable for TinyML. TinyMetaFed facilitates collaborative training of a neural network initialization that can be quickly fine-tuned on new devices. It offers communication savings and privacy protection through partial local reconstruction and Top-P% selective communication, computational efficiency via online learning, and robustness to client heterogeneity through few-shot learning. The evaluations on three TinyML use cases demonstrate that TinyMetaFed can significantly reduce energy consumption and communication overhead, accelerate convergence, and stabilize the training process.
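The abstract does not define how Top-P% selective communication chooses what to transmit. One plausible reading, sketched below, is that a client sends only the P percent of weights whose values changed most during local training, and the receiver overwrites just those entries. The selection criterion (largest absolute change), the function names, and the example values are assumptions, not taken from TinyMetaFed.

```python
def top_p_update(old, new, p):
    """Return {index: new_value} for the p percent of weights that changed most."""
    if not old:
        return {}
    n_keep = max(1, round(len(old) * p / 100))
    ranked = sorted(range(len(old)),
                    key=lambda i: abs(new[i] - old[i]),
                    reverse=True)
    return {i: new[i] for i in ranked[:n_keep]}


def apply_sparse(weights, sparse):
    """Overwrite only the transmitted entries; all other weights stay unchanged."""
    merged = list(weights)
    for i, value in sparse.items():
        merged[i] = value
    return merged


# Hypothetical weights before and after local training on one device.
old = [0.0, 0.0, 0.0, 0.0]
new = [0.1, -0.5, 0.02, 0.3]

payload = top_p_update(old, new, p=50)
print(payload)                     # {1: -0.5, 3: 0.3}
print(apply_sparse(old, payload))  # [0.0, -0.5, 0.0, 0.3]
```

With P = 50, only half of the weights cross the network in this sketch, which illustrates where the communication savings would come from.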

TinyReptile: TinyML with Federated Meta-Learning

Apr 11, 2023

Abstract: Tiny machine learning (TinyML) is a rapidly growing field aiming to democratize machine learning (ML) for resource-constrained microcontrollers (MCUs). Given the pervasiveness of these tiny devices, it is natural to ask whether TinyML applications can benefit from aggregating their knowledge. Federated learning (FL) enables decentralized agents to jointly learn a global model without sharing sensitive local data. However, a common global model may not work for all devices due to the complexity of the actual deployment environment and the heterogeneity of the data available on each device. In addition, the deployment of TinyML hardware has significant computational and communication constraints, which traditional ML fails to address. Considering these challenges, we propose TinyReptile, a simple but efficient algorithm inspired by meta-learning and online learning, to collaboratively learn a solid initialization for a neural network (NN) across tiny devices that can be quickly adapted to a new device with respect to its data. We demonstrate TinyReptile on Raspberry Pi 4 and Cortex-M4 MCU with only 256-KB RAM. The evaluations on various TinyML use cases confirm a resource reduction and training time saving by a factor of at least two compared with baseline algorithms with comparable performance.
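The combination of meta-learning and online learning described above can be sketched with the standard Reptile update, in which a shared initialization is moved a fraction of the way toward the weights a device reaches after local adaptation. The sketch below visits one device per round and adapts on a stream one sample at a time; the linear model, learning rates, and toy data are assumptions for illustration and are not TinyReptile's actual configuration.

```python
def local_online_sgd(weights, stream, lr):
    """Adapt a copy of [w, b] for y = w*x + b, seeing each sample exactly once."""
    w, b = weights
    for x, y in stream:
        err = (w * x + b) - y  # prediction error on the current sample
        w -= lr * err * x      # gradient step for the squared error
        b -= lr * err
    return [w, b]


def reptile_step(theta, phi, eps):
    """Reptile meta-update: move theta a fraction eps toward the adapted phi."""
    return [t + eps * (p - t) for t, p in zip(theta, phi)]


# Two hypothetical devices with different local data distributions.
devices = [
    [(1.0, 2.0), (2.0, 4.0)],  # device A: y = 2x
    [(1.0, 3.0), (2.0, 6.0)],  # device B: y = 3x
]

theta = [0.0, 0.0]  # shared initialization
for round_idx in range(50):
    stream = devices[round_idx % len(devices)]    # one device per round
    phi = local_online_sgd(theta, stream, lr=0.05)
    theta = reptile_step(theta, phi, eps=0.5)

print(theta)  # an initialization lying between the two device-specific solutions
```

Processing samples one at a time means a device never has to buffer a dataset, which matches the memory limits the abstract mentions for MCUs.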

SeLoC-ML: Semantic Low-Code Engineering for Machine Learning Applications in Industrial IoT

Jul 18, 2022

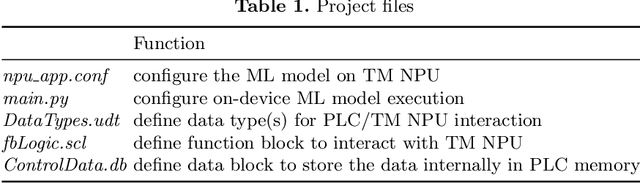

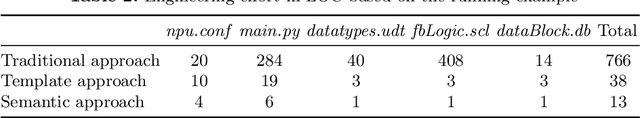

Abstract: The Internet of Things (IoT) is transforming industry by bridging the gap between Information Technology (IT) and Operational Technology (OT). Machines are being integrated with connected sensors and managed by intelligent analytics applications, accelerating digital transformation and business operations. Bringing Machine Learning (ML) to industrial devices is an advancement aiming to promote the convergence of IT and OT. However, developing an ML application in industrial IoT (IIoT) presents various challenges, including hardware heterogeneity, non-standardized representations of ML models, device and ML model compatibility issues, and slow application development. Successful deployment in this area requires a deep understanding of hardware, algorithms, software tools, and applications. Therefore, this paper presents a framework called Semantic Low-Code Engineering for ML Applications (SeLoC-ML), built on a low-code platform to support the rapid development of ML applications in IIoT by leveraging Semantic Web technologies. SeLoC-ML enables non-experts to easily model, discover, reuse, and matchmake ML models and devices at scale. The project code can be automatically generated for deployment on hardware based on the matching results. Developers can benefit from semantic application templates, called recipes, to rapidly prototype end-user applications. The evaluations confirm an engineering effort reduction by a factor of at least three compared to traditional approaches on an industrial ML classification case study, showing the efficiency and usefulness of SeLoC-ML. We share the code and welcome any contributions.

How to Manage Tiny Machine Learning at Scale: An Industrial Perspective

Feb 18, 2022

Abstract: Tiny machine learning (TinyML) has gained widespread popularity where machine learning (ML) is democratized on ubiquitous microcontrollers, processing sensor data everywhere in real-time. To manage TinyML in the industry, where mass deployment happens, we consider the hardware and software constraints, ranging from available onboard sensors and memory size to ML-model architectures and runtime platforms. However, Internet of Things (IoT) devices are typically tailored to specific tasks and are subject to heterogeneity and limited resources. Moreover, TinyML models have been developed with different structures and are often distributed without a clear understanding of their working principles, leading to a fragmented ecosystem. Considering these challenges, we propose a framework using Semantic Web technologies to enable the joint management of TinyML models and IoT devices at scale, from modeling information to discovering possible combinations and benchmarking, and eventually facilitate TinyML component exchange and reuse. We present an ontology (semantic schema) for neural network models aligned with the World Wide Web Consortium (W3C) Thing Description, which semantically describes IoT devices. Furthermore, a Knowledge Graph of 23 publicly available ML models and six IoT devices was used to demonstrate our concept in three case studies, and we shared the code and examples to enhance reproducibility: https://github.com/Haoyu-R/How-to-Manage-TinyML-at-Scale
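Discovering possible combinations of models and devices amounts to checking each model's requirements against each device's capabilities. The paper does this with Semantic Web technologies over a Knowledge Graph; the plain-Python sketch below only illustrates the underlying constraint check. All field names, model and device names, and numbers are hypothetical and do not come from the paper's ontology or dataset.

```python
def compatible(model, device):
    """A model fits a device if memory suffices and the required sensor exists."""
    return (model["ram_kb"] <= device["ram_kb"]
            and model["flash_kb"] <= device["flash_kb"]
            and model["sensor"] in device["sensors"])


def matchmake(models, devices):
    """Return every (model name, device name) pair that satisfies the constraints."""
    return [(m["name"], d["name"])
            for m in models
            for d in devices
            if compatible(m, d)]


# Hypothetical descriptions, standing in for entries in a Knowledge Graph.
models = [
    {"name": "keyword-spotter", "ram_kb": 40, "flash_kb": 200, "sensor": "microphone"},
    {"name": "person-detector", "ram_kb": 300, "flash_kb": 900, "sensor": "camera"},
]
devices = [
    {"name": "board-a", "ram_kb": 256, "flash_kb": 1024, "sensors": {"microphone", "imu"}},
    {"name": "board-b", "ram_kb": 512, "flash_kb": 2048, "sensors": {"camera"}},
]

print(matchmake(models, devices))
# [('keyword-spotter', 'board-a'), ('person-detector', 'board-b')]
```

A semantic approach expresses the same constraints as queries over shared vocabularies, so new models and devices can be matched without changing code.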

The Synergy of Complex Event Processing and Tiny Machine Learning in Industrial IoT

May 04, 2021

Abstract: Focusing on comprehensive networking, big data, and artificial intelligence, the Industrial Internet-of-Things (IIoT) facilitates efficiency and robustness in factory operations. Various sensors and field devices play a central role, as they generate a vast amount of real-time data that can provide insights into manufacturing. The synergy of complex event processing (CEP) and machine learning (ML) has been actively developed in recent years in IIoT to identify patterns in heterogeneous data streams and fuse raw data into tangible facts. In a traditional compute-centric paradigm, the raw field data are continuously sent to the cloud and processed centrally. As IIoT devices become increasingly pervasive and ubiquitous, concerns are raised, since transmitting such amounts of data is energy-intensive, vulnerable to interception, and subject to high latency. The data-centric paradigm can essentially solve these problems by empowering IIoT to perform decentralized on-device ML and CEP, keeping data primarily on edge devices and minimizing communications. However, this is no mean feat because most IIoT edge devices are designed to be computationally constrained with low power consumption. This paper proposes a framework that exploits ML and CEP's synergy at the edge in distributed sensor networks. By leveraging tiny ML and micro CEP, we shift the computation from the cloud to the power-constrained IIoT devices and allow users to adapt the on-device ML model and the CEP reasoning logic flexibly on the fly without re-uploading the whole program. Lastly, we evaluate the proposed solution and show its effectiveness and feasibility using an industrial use case of machine safety monitoring.

TinyOL: TinyML with Online-Learning on Microcontrollers

Apr 10, 2021

Abstract: Tiny machine learning (TinyML) is a fast-growing research area committed to democratizing deep learning for all-pervasive microcontrollers (MCUs). Challenged by the constraints on power, memory, and computation, TinyML has achieved significant advancement in the last few years. However, the current TinyML solutions are based on batch/offline settings and support only the neural network's inference on MCUs. The neural network is first trained using a large amount of pre-collected data on a powerful machine and then flashed to MCUs. This results in a static model that is hard to adapt to new data and impossible to adjust for different scenarios, which impedes the flexibility of the Internet of Things (IoT). To address these problems, we propose a novel system called TinyOL (TinyML with Online-Learning), which enables incremental on-device training on streaming data. TinyOL is based on the concept of online learning and is suitable for constrained IoT devices. We experiment with TinyOL under supervised and unsupervised setups using an autoencoder neural network. Finally, we report the performance of the proposed solution and show its effectiveness and feasibility.
