Darius Sinha

Symmetry Perception by Deep Networks: Inadequacy of Feed-Forward Architectures and Improvements with Recurrent Connections

Dec 08, 2021

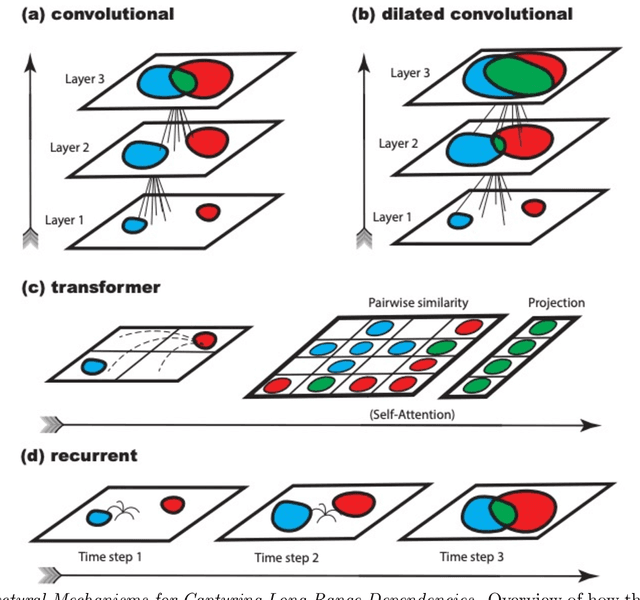

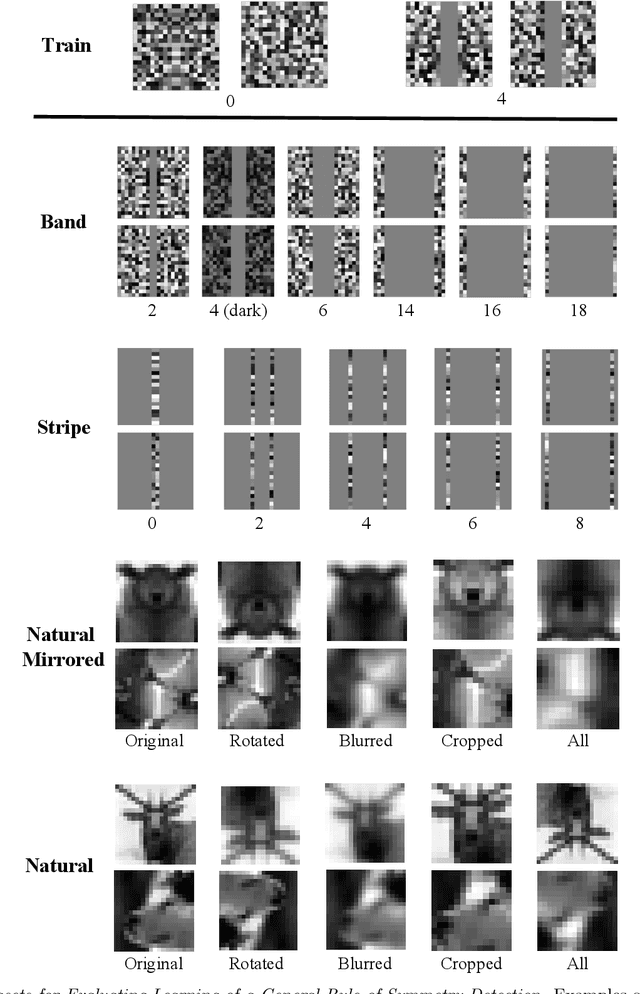

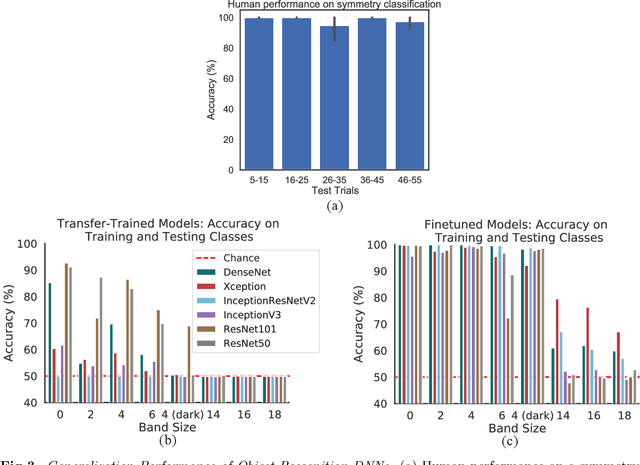

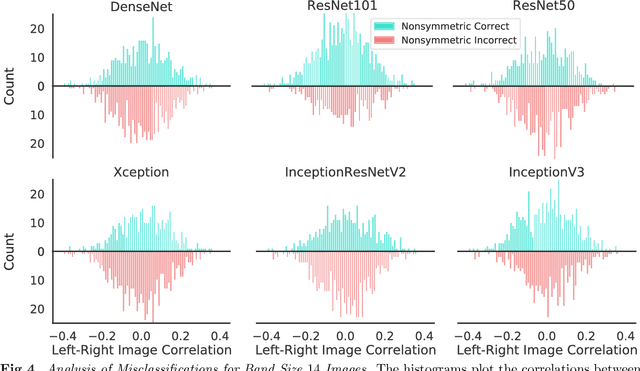

Abstract:Symmetry is omnipresent in nature and perceived by the visual system of many species, as it facilitates detecting ecologically important classes of objects in our environment. Symmetry perception requires abstraction of non-local spatial dependencies between image regions, and its underlying neural mechanisms remain elusive. In this paper, we evaluate Deep Neural Network (DNN) architectures on the task of learning symmetry perception from examples. We demonstrate that feed-forward DNNs that excel at modelling human performance on object recognition tasks, are unable to acquire a general notion of symmetry. This is the case even when the DNNs are architected to capture non-local spatial dependencies, such as through `dilated' convolutions and the recently introduced `transformers' design. By contrast, we find that recurrent architectures are capable of learning to perceive symmetry by decomposing the non-local spatial dependencies into a sequence of local operations, that are reusable for novel images. These results suggest that recurrent connections likely play an important role in symmetry perception in artificial systems, and possibly, biological ones too.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge