Daria Hemmerling

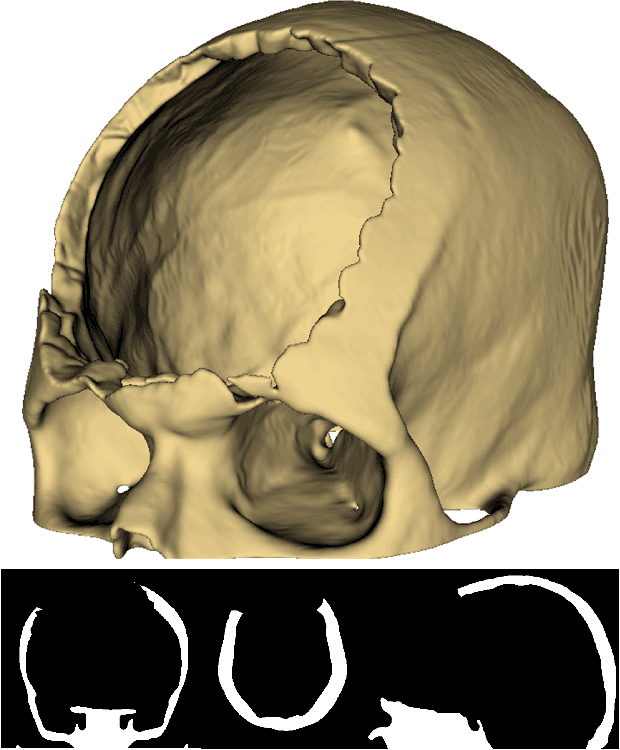

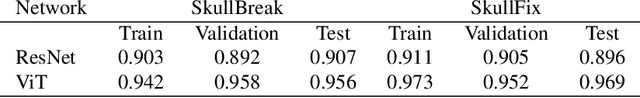

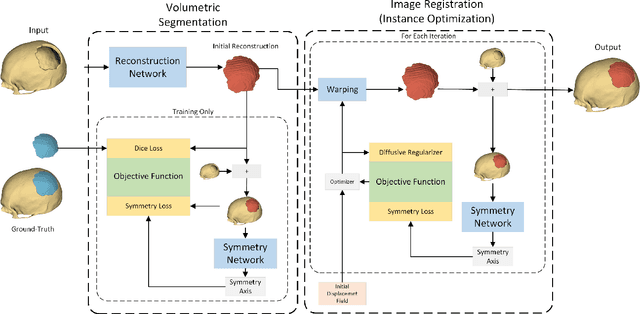

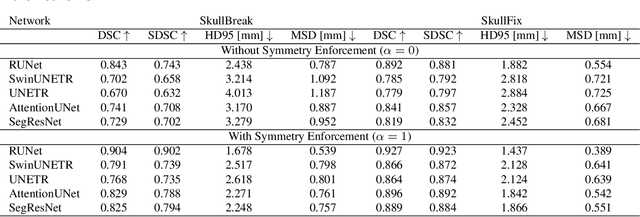

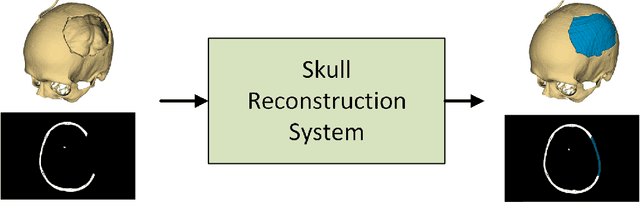

Automatic Skull Reconstruction by Deep Learnable Symmetry Enforcement

Nov 26, 2024

Abstract:Every year, thousands of people suffer from skull damage and require personalized implants to fill the cranial cavity. Unfortunately, the waiting time for reconstruction surgery can extend to several weeks or even months, especially in less developed countries. One factor contributing to the extended waiting period is the intricate process of personalized implant modeling. Currently, the preparation of these implants by experienced biomechanical experts is both costly and time-consuming. Recent advances in artificial intelligence, especially in deep learning, offer promising potential for automating the process. However, deep learning-based cranial reconstruction faces several challenges: (i) the limited size of training datasets, (ii) the high resolution of the volumetric data, and (iii) significant data heterogeneity. In this work, we propose a novel approach to address these challenges by enhancing the reconstruction through learnable symmetry enforcement. We demonstrate that it is possible to train a neural network dedicated to calculating skull symmetry, which can be utilized either as an additional objective function during training or as a post-reconstruction objective during the refinement step. We quantitatively evaluate the proposed method using open SkullBreak and SkullFix datasets, and qualitatively using real clinical cases. The results indicate that the symmetry-preserving reconstruction network achieves considerably better outcomes compared to the baseline (0.94/0.94/1.31 vs 0.84/0.76/2.43 in terms of DSC, bDSC, and HD95). Moreover, the results are comparable to the best-performing methods while requiring significantly fewer computational resources (< 500 vs > 100,000 GPU hours). The proposed method is a considerable contribution to the field of applied artificial intelligence in medicine and is a step toward automatic cranial defect reconstruction in clinical practice.
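As a rough illustration of what a symmetry measure for a skull volume could look like, the sketch below scores how well a binary volume overlaps its own mirror image across the mid-sagittal plane. This is a fixed, hand-written proxy and not the learnable symmetry network described in the abstract. The names `mirror_x` and `symmetry_score`, the voxel-set representation, and the assumption that the volume is already aligned so that the symmetry plane sits at the center of the x axis are all hypothetical.

```python
def mirror_x(voxels, width):
    """Mirror a set of (x, y, z) voxel coordinates across the center of the x axis.

    Assumes the volume is pre-aligned so the mid-sagittal plane lies at the
    middle of an x range of `width` voxels (an assumption, not from the paper).
    """
    return {(width - 1 - x, y, z) for (x, y, z) in voxels}


def symmetry_score(voxels, width):
    """Dice overlap between a volume and its mirror image (1.0 = perfectly symmetric)."""
    voxels = set(voxels)
    if not voxels:
        return 1.0  # an empty volume is trivially symmetric
    mirrored = mirror_x(voxels, width)
    return 2.0 * len(voxels & mirrored) / (len(voxels) + len(mirrored))


if __name__ == "__main__":
    symmetric = {(0, 0, 0), (3, 0, 0)}
    lopsided = {(0, 0, 0), (1, 0, 0)}
    print(symmetry_score(symmetric, width=4))
    print(symmetry_score(lopsided, width=4))
```

A score like this could in principle be reported next to DSC as a sanity check on a reconstruction. Using it as a training objective would need a differentiable formulation, which this sketch does not attempt.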

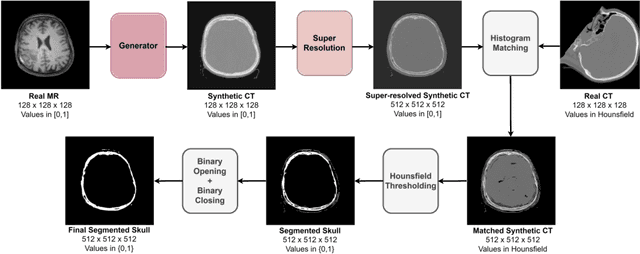

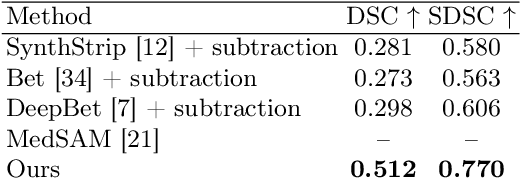

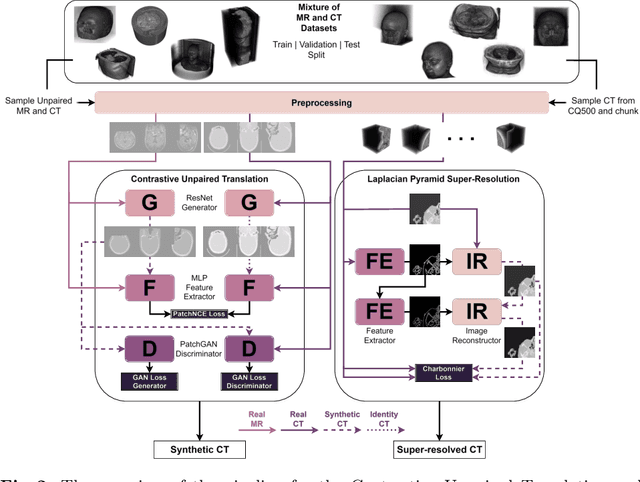

Unsupervised Skull Segmentation via Contrastive MR-to-CT Modality Translation

Oct 17, 2024

Abstract:Skull segmentation from CT scans can be considered an already solved problem. In MR, however, the task is considerably more complex because the images emphasize soft tissues rather than bones. Capturing bone structures from MR images of the head, where the main visualization objective is the brain, is very demanding. Approaches based on skull stripping are not well suited to this task and fail in many cases. On the other hand, supervised approaches require costly and time-consuming skull annotations. To overcome these difficulties, we propose a fully unsupervised approach: instead of segmenting the MR images directly, we generate synthetic CT data via MR-to-CT translation and perform the segmentation there. We address many issues associated with unsupervised skull segmentation, including the unpaired nature of MR and CT datasets (contrastive learning), low resolution and poor quality (super-resolution), and generalization capabilities. The research has significant value for downstream tasks requiring skull segmentation from MR volumes, such as craniectomy or surgery planning, and can be seen as an important step towards the utilization of synthetic data in medical imaging.

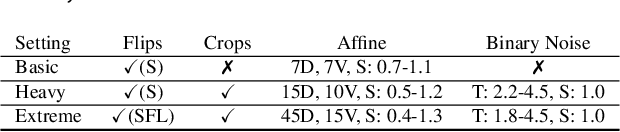

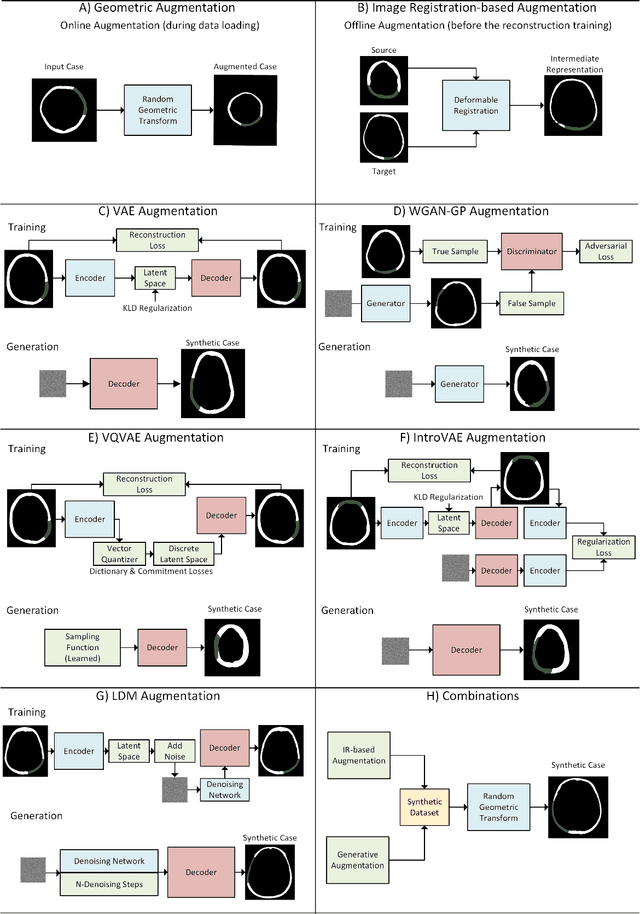

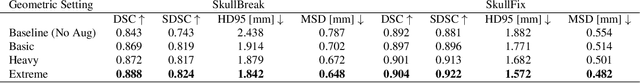

Improving Deep Learning-based Automatic Cranial Defect Reconstruction by Heavy Data Augmentation: From Image Registration to Latent Diffusion Models

Jun 10, 2024

Abstract:Modeling and manufacturing of personalized cranial implants are important research areas that may decrease the waiting time for patients suffering from cranial damage. The modeling of personalized implants may be partially automated by the use of deep learning-based methods. However, these methods generalize poorly to data from previously unseen distributions, which makes it difficult to use the research outcomes in real clinical settings. Because ground-truth annotations are difficult to acquire, different techniques to improve the heterogeneity of the datasets used for training the deep networks have to be considered and introduced. In this work, we present a large-scale study of several augmentation techniques, ranging from classical geometric transformations, image registration, variational autoencoders, and generative adversarial networks to the most recent advances in latent diffusion models. We show that the use of heavy data augmentation significantly improves both the quantitative and qualitative outcomes, resulting in an average Dice Score above 0.94 for the SkullBreak and above 0.96 for the SkullFix datasets. Moreover, we show that the synthetically augmented network successfully reconstructs real clinical defects. The work is a considerable contribution to the field of artificial intelligence in the automatic modeling of personalized cranial implants.

Automatic Cranial Defect Reconstruction with Self-Supervised Deep Deformable Masked Autoencoders

Apr 19, 2024

Abstract:Thousands of people suffer from cranial injuries every year. They require personalized implants that need to be designed and manufactured before the reconstruction surgery. Manual design is expensive and time-consuming, which motivates the search for algorithms that automate the process. The problem can be formulated as volumetric shape completion and solved by deep neural networks dedicated to supervised image segmentation. However, such an approach requires annotating the ground-truth defects, which is costly and time-consuming. Usually, the process is replaced with synthetic defect generation. However, even synthetic ground-truth generation is time-consuming and limits the data heterogeneity, and thus the deep models' generalizability. In our work, we propose a simple alternative: using a self-supervised masked autoencoder to solve the problem. This approach by design increases the heterogeneity of the training set and can be seen as a form of data augmentation. We compare the proposed method with several state-of-the-art deep neural networks and show both quantitative and qualitative improvement on the SkullBreak and SkullFix datasets. The proposed method can be used to efficiently reconstruct cranial defects in real time.
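To make the idea of masking as self-supervision more concrete, the sketch below builds one training pair from a healthy skull volume: a random cuboid is removed, the remaining voxels become the network input, and the removed voxels become the reconstruction target. This is only an assumed, simplified masking scheme. It does not reproduce the deformable masks named in the title, and `make_masked_pair`, the cuboid shape, and the voxel-set representation are hypothetical.

```python
import random


def make_masked_pair(voxels, shape, mask_size, rng):
    """Cut a random axis-aligned cuboid out of a volume.

    voxels:    iterable of (x, y, z) occupied coordinates
    shape:     (X, Y, Z) size of the volume
    mask_size: (mx, my, mz) size of the cuboid to remove (must fit in `shape`)
    rng:       random.Random instance, passed in for reproducibility
    Returns (masked_input, target), where target holds the removed voxels.
    """
    voxels = set(voxels)
    # Pick the cuboid's lower corner so that it lies fully inside the volume.
    starts = [rng.randint(0, dim - size) for dim, size in zip(shape, mask_size)]

    def inside(v):
        return all(s <= c < s + m for c, s, m in zip(v, starts, mask_size))

    target = {v for v in voxels if inside(v)}
    return voxels - target, target


if __name__ == "__main__":
    full = {(x, y, z) for x in range(4) for y in range(4) for z in range(4)}
    masked, target = make_masked_pair(full, (4, 4, 4), (2, 2, 2), random.Random(0))
    print(len(masked), len(target))
```

Because a new mask is drawn for every sample, each healthy skull yields many distinct input and target pairs, which is the sense in which masking acts as data augmentation.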

Eye-tracking in Mixed Reality for Diagnosis of Neurodegenerative Diseases

Apr 19, 2024

Abstract:Parkinson's disease ranks as the second most prevalent neurodegenerative disorder globally. This research aims to develop a system leveraging Mixed Reality capabilities for tracking and assessing eye movements. In this paper, we present a medical scenario and outline the development of an application designed to capture eye-tracking signals through Mixed Reality technology for the evaluation of neurodegenerative diseases. Additionally, we introduce a pipeline for extracting clinically relevant features from eye-gaze analysis, describing the capabilities of the proposed system from a medical perspective. The study involved a cohort of healthy control individuals and patients suffering from Parkinson's disease, showcasing the feasibility and potential of the proposed technology for non-intrusive monitoring of eye movement patterns for the diagnosis of neurodegenerative diseases. Clinical relevance - Developing a non-invasive biomarker for Parkinson's disease is urgently needed to accurately detect the disease's onset. This would allow for the timely introduction of neuroprotective treatment at the earliest stage and enable the continuous monitoring of intervention outcomes. The ability to detect subtle changes in eye movements allows for early diagnosis, offering a critical window for intervention before more pronounced symptoms emerge. Eye tracking provides objective and quantifiable biomarkers, ensuring reliable assessments of disease progression and cognitive function. The eye gaze analysis using Mixed Reality glasses is wireless, facilitating convenient assessments in both home and hospital settings. The approach offers the advantage of utilizing hardware that requires no additional specialized attachments, enabling examinations through personal eyewear.

High-Resolution Cranial Defect Reconstruction by Iterative, Low-Resolution, Point Cloud Completion Transformers

Aug 07, 2023

Abstract:Each year thousands of people suffer from various types of cranial injuries and require personalized implants whose manual design is expensive and time-consuming. Therefore, an automatic, dedicated system to increase the availability of personalized cranial reconstruction is highly desirable. The problem of automatic cranial defect reconstruction can be formulated as a shape completion task and solved using dedicated deep networks. Currently, the most common approach is to use the volumetric representation and apply deep networks dedicated to image segmentation. However, this approach has several limitations: it does not scale well to high-resolution volumes and does not take the data sparsity into account. In our work, we reformulate the problem into a point cloud completion task. We propose an iterative, transformer-based method to reconstruct the cranial defect at any resolution while also being fast and resource-efficient during training and inference. We compare the proposed method to the state-of-the-art volumetric approaches and show superior performance in terms of GPU memory consumption while maintaining high quality of the reconstructed defects.

Deep Learning-based Framework for Automatic Cranial Defect Reconstruction and Implant Modeling

Apr 13, 2022

Abstract:The goal of this work is to propose a robust, fast, and fully automatic method for personalized cranial defect reconstruction and implant modeling. We propose a two-step deep learning-based method using a modified U-Net architecture to perform the defect reconstruction, and a dedicated iterative procedure to improve the implant geometry, followed by automatic generation of models ready for 3-D printing. We propose a cross-case augmentation based on imperfect image registration combining cases from different datasets. We perform ablation studies regarding different augmentation strategies and compare them to other state-of-the-art methods. We evaluate the method on three datasets introduced during the AutoImplant 2021 challenge, organized jointly with the MICCAI conference. We perform the quantitative evaluation using the Dice and boundary Dice coefficients, and the Hausdorff distance. The average Dice coefficient, boundary Dice coefficient, and the 95th percentile of Hausdorff distance are 0.91, 0.94, and 1.53 mm respectively. We perform an additional qualitative evaluation by 3-D printing and visualization in mixed reality to confirm the implant's usefulness. We propose a complete pipeline that enables one to create the cranial implant model ready for 3-D printing. The described method is a greatly extended version of the method that scored 1st place in all AutoImplant 2021 challenge tasks. We freely release the source code, that together with the open datasets, makes the results fully reproducible. The automatic reconstruction of cranial defects may enable manufacturing personalized implants in a significantly shorter time, possibly allowing one to perform the 3-D printing process directly during a given intervention. Moreover, we show the usability of the defect reconstruction in mixed reality that may further reduce the surgery time.
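For readers unfamiliar with the reported metrics, the following minimal sketch computes a Dice coefficient and a 95th percentile Hausdorff distance between two small voxel sets. It is an illustration under stated assumptions, not the evaluation code released with the paper: distances are measured in voxel units over all voxels rather than over extracted surfaces, the percentile uses the nearest-rank rule, and HD95 is taken as the larger of the two directed percentiles, which is one of several variants in use. The function names are hypothetical.

```python
import math


def dice(a, b):
    """Dice coefficient between two sets of voxel coordinates."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty volumes agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))


def _percentile_nearest_rank(values, q):
    """q-th percentile (0 < q <= 100) using the nearest-rank rule."""
    ordered = sorted(values)
    rank = math.ceil(q / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]


def _directed_distances(src, dst):
    """Distance from every point in src to its nearest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def hd95(a, b):
    """95th percentile Hausdorff distance (max of the two directed percentiles)."""
    a, b = list(set(a)), list(set(b))
    if not a or not b:
        raise ValueError("hd95 is undefined for empty volumes")
    forward = _percentile_nearest_rank(_directed_distances(a, b), 95)
    backward = _percentile_nearest_rank(_directed_distances(b, a), 95)
    return max(forward, backward)


if __name__ == "__main__":
    prediction = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
    reference = [(1, 0, 0), (2, 0, 0), (3, 0, 0)]
    print(dice(prediction, reference))
    print(hd95(prediction, reference))
```

The brute-force nearest-neighbor search is quadratic in the number of voxels, so it only suits toy inputs. Converting the result to millimeters would additionally require the voxel spacing.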
