Daniel Stumper

Offline Object Extraction from Dynamic Occupancy Grid Map Sequences

Apr 11, 2018

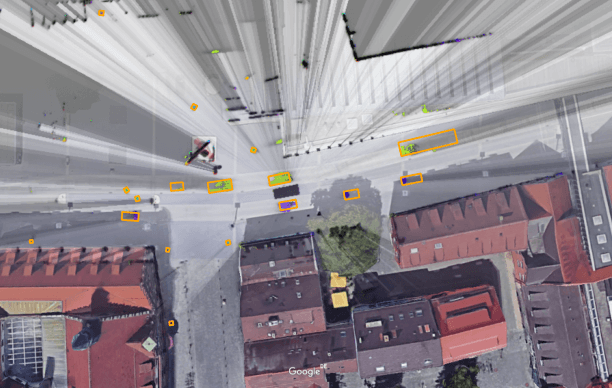

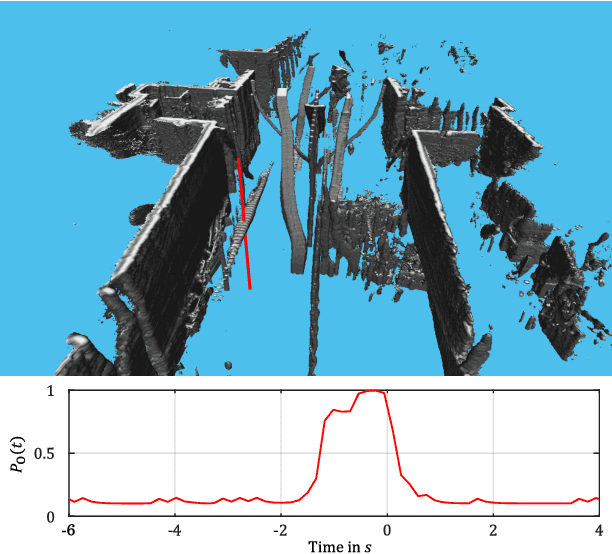

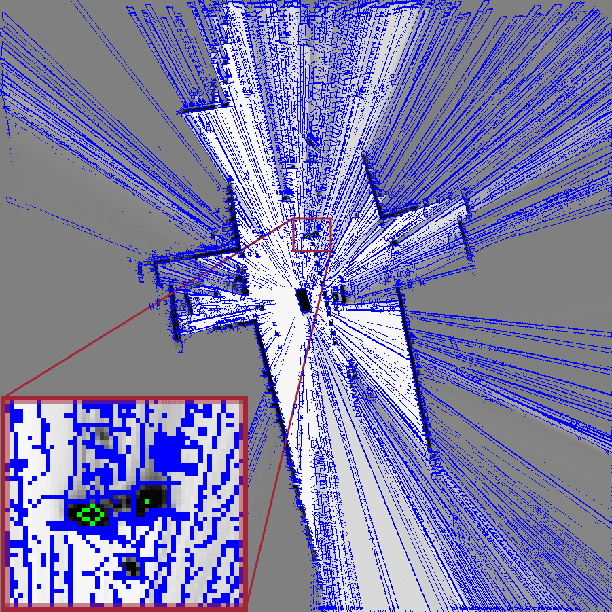

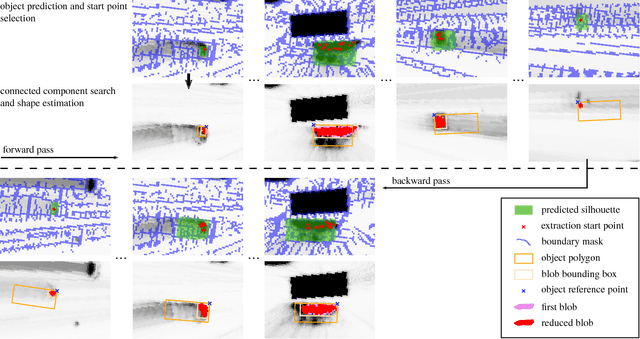

Abstract: A dynamic occupancy grid map (DOGMa) allows a fast, robust, and complete environment representation for automated vehicles. Dynamic objects in a DOGMa, however, are commonly represented as independent cells, whereas modeled objects with shape and pose are preferable. The evaluation of object extraction algorithms and the training and validation of learning algorithms rely on labeled ground truth data. Manually annotating objects in a DOGMa to obtain ground truth data is a time-consuming and expensive process. Additionally, the quality of labeled data depends strongly on the variation of the filtered input data. The presented work introduces an automatic labeling process in which a full sequence is used to extract the best possible object pose and shape in terms of temporal consistency. A two-direction temporal search traces single objects over a sequence, refining the best estimate of each object's extent and pose in every time step. Furthermore, the presented algorithm uses only statistical constraints of the cell clusters for object extraction instead of fixed heuristic parameters. Experimental results on real sensor data show that the automatic labeling algorithm performs well even in challenging scenarios.
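To make the idea of a two-direction temporal search with statistical gating more concrete, the following is a minimal sketch and not the authors' implementation. It assumes each frame is already given as a list of cell clusters (lists of `(x, y)` grid coordinates) and associates clusters across frames using the squared Mahalanobis distance derived from the current cluster's own spatial covariance. The function names, the data layout, and the chi-square gate are all assumptions made for illustration; the refinement of extent and pose described in the abstract is not shown.

```python
import math

def cluster_stats(cells):
    """Mean and covariance (sxx, sxy, syy) of a cluster of (x, y) cells."""
    n = len(cells)
    mx = sum(x for x, _ in cells) / n
    my = sum(y for _, y in cells) / n
    sxx = sum((x - mx) ** 2 for x, _ in cells) / n
    syy = sum((y - my) ** 2 for _, y in cells) / n
    sxy = sum((x - mx) * (y - my) for x, y in cells) / n
    return (mx, my), (sxx, sxy, syy)

def mahalanobis_sq(point, mean, cov):
    """Squared Mahalanobis distance of a 2D point to a cluster distribution."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    if det <= 1e-12:  # degenerate cluster (e.g. a single cell or a line)
        return math.inf
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    return (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det

def trace_one_direction(frames, start_frame, start_cluster, step, gate):
    """Follow one object through the sequence with step=+1 or step=-1."""
    track = {}
    current = frames[start_frame][start_cluster]
    t = start_frame + step
    while 0 <= t < len(frames):
        mean, cov = cluster_stats(current)
        best, best_d = None, gate
        for idx, candidate in enumerate(frames[t]):
            cand_mean, _ = cluster_stats(candidate)
            d = mahalanobis_sq(cand_mean, mean, cov)
            if d < best_d:  # keep the closest candidate inside the gate
                best, best_d = idx, d
        if best is None:  # no statistically plausible match: stop tracing
            break
        track[t] = best
        current = frames[t][best]
        t += step
    return track

def trace_object(frames, seed_frame, seed_cluster, confidence=0.99):
    """Trace a seed cluster forward and backward in time.

    The gate is the chi-square quantile for 2 degrees of freedom, which has
    the closed form -2 * ln(1 - p). This choice is an assumption of the sketch.
    """
    gate = -2.0 * math.log(1.0 - confidence)
    track = {seed_frame: seed_cluster}
    track.update(trace_one_direction(frames, seed_frame, seed_cluster, +1, gate))
    track.update(trace_one_direction(frames, seed_frame, seed_cluster, -1, gate))
    return dict(sorted(track.items()))
```

The returned dictionary maps each frame index to the index of the matched cluster in that frame. Because the gate scales with the covariance of the cluster itself, larger objects tolerate larger frame-to-frame displacement without a hand-tuned distance threshold, which is the spirit of the statistical constraints mentioned above.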
