Daniel R. McGowan

Quality Control for Radiology Report Generation Models via Auxiliary Auditing Components

Jul 31, 2024

Abstract:Automation of medical image interpretation could alleviate bottlenecks in diagnostic workflows, and has attracted particular interest in recent years due to advancements in natural language processing. Great strides have been made towards automated radiology report generation via AI, yet ensuring clinical accuracy in generated reports remains a significant challenge, hindering deployment of such methods in clinical practice. In this work we propose a quality control framework for assessing the reliability of AI-generated radiology reports with respect to semantics of diagnostic importance using modular auxiliary auditing components (ACs). Evaluating our pipeline on the MIMIC-CXR dataset, our findings show that incorporating ACs in the form of disease classifiers can enable auditing that identifies more reliable reports, resulting in higher F1 scores compared to unfiltered generated reports. Additionally, leveraging the confidence of the AC labels further improves the audit's effectiveness.
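The auditing idea in this abstract can be illustrated with a minimal sketch. It assumes, purely for illustration, that each generated report has already been reduced to binary disease labels and that the auxiliary classifier returns one probability per disease for the same image. The function name `audit_report`, the agreement rule, and the `min_confidence` parameter are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def audit_report(
    report_labels: Sequence[int],
    ac_probs: Sequence[float],
    threshold: float = 0.5,
    min_confidence: float = 0.0,
) -> bool:
    """Return True if the report passes the audit.

    Hypothetical rule: the report passes when its disease labels agree
    with the auxiliary classifier (AC) on every disease for which the
    AC is at least `min_confidence` sure of its own prediction.
    """
    for label, prob in zip(report_labels, ac_probs):
        # Confidence of a binary prediction: distance from total uncertainty.
        confidence = max(prob, 1.0 - prob)
        if confidence < min_confidence:
            continue  # AC is unsure about this disease; do not audit on it
        ac_label = prob >= threshold
        if ac_label != bool(label):
            return False  # report and AC disagree on a confident label
    return True


# Example: the report says disease 0 is present and disease 1 is absent.
passes = audit_report([1, 0], [0.9, 0.1])
```

Reports that fail such a check could be withheld or flagged for review, so that downstream metrics are computed only on the audited subset.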

Prediction of recurrence free survival of head and neck cancer using PET/CT radiomics and clinical information

Feb 28, 2024

Abstract:The 5-year survival rate of Head and Neck Cancer (HNC) has not improved over the past decade and one common cause of treatment failure is recurrence. In this paper, we built Cox proportional hazard (CoxPH) models that predict the recurrence free survival (RFS) of oropharyngeal HNC patients. Our models utilise both clinical information and multimodal radiomics features extracted from tumour regions in Computed Tomography (CT) and Positron Emission Tomography (PET). Furthermore, ours is among the first studies to explore the impact of segmentation accuracy on the predictive power of the extracted radiomics features, through an under- and over-segmentation study. Our models were trained using the HEad and neCK TumOR (HECKTOR) challenge data, and the best performing model achieved a concordance index (C-index) of 0.74 for the model utilising clinical information and multimodal CT and PET radiomics features, which compares favourably with the model that only used clinical information (C-index of 0.67). Our under- and over-segmentation study confirms that segmentation accuracy affects radiomics extraction; however, it affects PET and CT differently.
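For readers unfamiliar with the concordance index reported above, the following is a minimal sketch of Harrell's C-index for right-censored data. It is a plain-Python illustration rather than the evaluation code used in the paper, and the function and variable names are assumptions.

```python
from typing import Sequence


def concordance_index(
    times: Sequence[float],
    events: Sequence[int],
    risks: Sequence[float],
) -> float:
    """Harrell's C-index: fraction of comparable pairs ranked correctly.

    A pair (i, j) is comparable when subject i had an observed event
    strictly before subject j's recorded time. The pair is concordant
    when the model assigns i the higher risk; ties in risk count 0.5.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # censored subjects cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy data: higher predicted risk corresponds to earlier recurrence.
c = concordance_index(times=[1, 2, 3], events=[1, 1, 0], risks=[3.0, 2.0, 1.0])
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the reported 0.74 versus 0.67.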

Multimodal PET/CT Tumour Segmentation and Prediction of Progression-Free Survival using a Full-Scale UNet with Attention

Nov 06, 2021

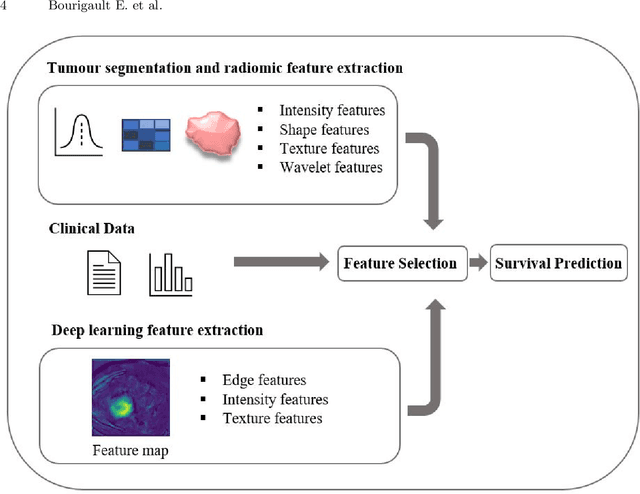

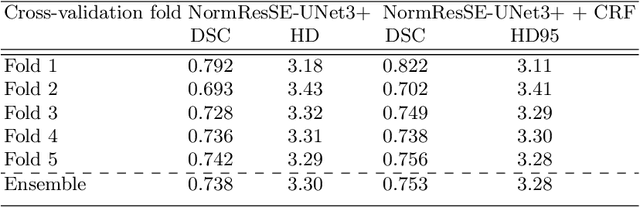

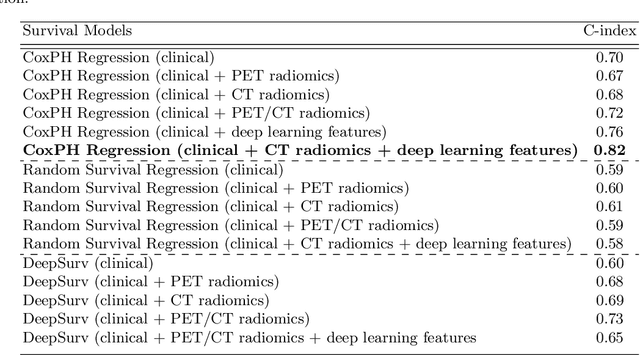

Abstract:Segmentation of head and neck (H&N) tumours and prediction of patient outcome are crucial for disease diagnosis and treatment monitoring. Current developments of robust deep learning models are hindered by the lack of large multi-centre, multi-modal data with quality annotations. The MICCAI 2021 HEad and neCK TumOR (HECKTOR) segmentation and outcome prediction challenge creates a platform for comparing segmentation methods of the primary gross target volume on fluoro-deoxyglucose (FDG)-PET and Computed Tomography images and prediction of progression-free survival in H&N oropharyngeal cancer. For the segmentation task, we proposed a new network based on an encoder-decoder architecture with full inter- and intra-skip connections to take advantage of low-level and high-level semantics at full scales. Additionally, we used Conditional Random Fields as a post-processing step to refine the predicted segmentation maps. We trained multiple neural networks for tumour volume segmentation, and these segmentations were ensembled, achieving an average Dice Similarity Coefficient of 0.75 in cross-validation, and 0.76 on the challenge testing data set. For the progression-free survival prediction task, we proposed a Cox proportional hazard regression combining clinical, radiomic, and deep learning features. Our survival prediction model achieved a concordance index of 0.82 in cross-validation, and 0.62 on the challenge testing data set.
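The Dice Similarity Coefficient quoted above measures overlap between a predicted and a reference segmentation mask. The sketch below computes it for flattened binary masks in plain Python. The function name and the convention of returning 1.0 when both masks are empty are assumptions made for this illustration, not details from the paper.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Toy masks: the prediction marks two voxels, one of which is correct.
score = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])
```

In practice the masks would be 3D volumes, flattened before being passed to a function like this one.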
