Daniel Lizotte

Look-Ahead Selective Plasticity for Continual Learning of Visual Tasks

Nov 02, 2023

Abstract: Contrastive representation learning has emerged as a promising technique for continual learning as it can learn representations that are robust to catastrophic forgetting and generalize well to unseen future tasks. Previous work in continual learning has addressed forgetting by using previous task data and trained models. Inspired by event models created and updated in the brain, we propose a new mechanism that takes place during task boundaries, i.e., when one task finishes and another starts. By observing the redundancy-inducing ability of contrastive loss on the output of a neural network, our method leverages the first few samples of the new task to identify and retain parameters contributing most to the transfer ability of the neural network, freeing up the remaining parts of the network to learn new features. We evaluate the proposed methods on benchmark computer vision datasets including CIFAR10 and TinyImagenet and demonstrate state-of-the-art performance in the task-incremental, class-incremental, and domain-incremental continual learning scenarios.

DRL-GAN: A Hybrid Approach for Binary and Multiclass Network Intrusion Detection

Jan 05, 2023

Abstract: Our increasingly connected world continues to face an ever-growing number of network-based attacks. Intrusion detection systems (IDS) are an essential security technology for detecting these attacks. Although numerous machine learning-based IDS have been proposed for the detection of malicious network traffic, the majority have difficulty properly detecting and classifying the more uncommon attack types. In this paper, we implement a novel hybrid technique that uses synthetic data produced by a Generative Adversarial Network (GAN) as input for training a Deep Reinforcement Learning (DRL) model. Our GAN model is trained with the NSL-KDD dataset for four attack categories as well as normal network flow. Ultimately, our findings demonstrate that training the DRL on specific synthetic datasets can result in better performance in correctly classifying minority classes than training on the true imbalanced dataset.

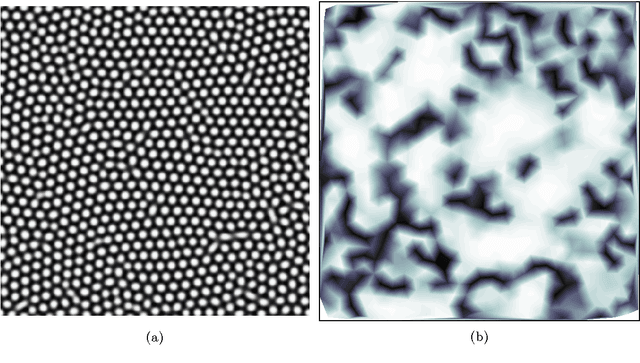

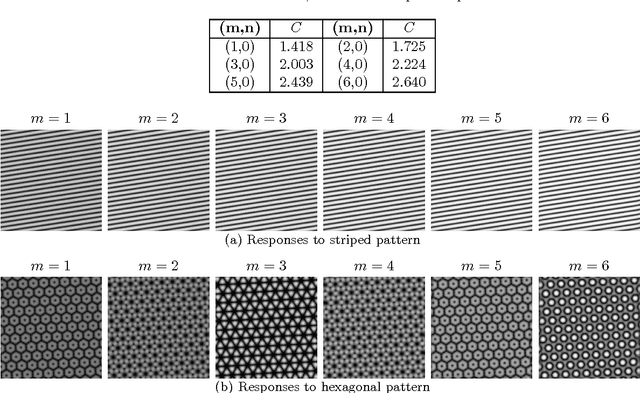

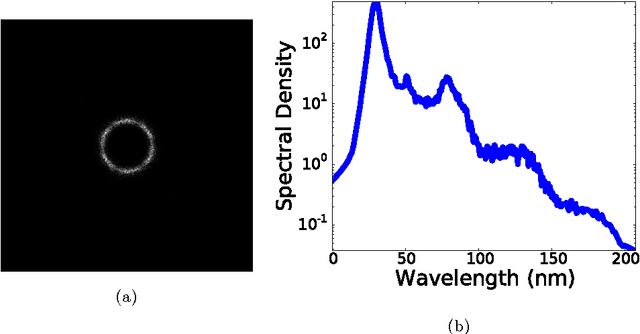

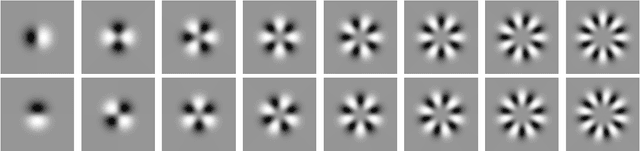

Theory and Application of Shapelets to the Analysis of Surface Self-assembly Imaging

Apr 02, 2014

Abstract: A method for quantitative analysis of local pattern strength and defects in surface self-assembly imaging is presented and applied to images of stripe and hexagonal ordered domains. The presented method uses "shapelet" functions which were originally developed for quantitative analysis of images of galaxies ($\propto 10^{20}\mathrm{m}$). In this work, they are used instead to quantify the presence of translational order in surface self-assembled films ($\propto 10^{-9}\mathrm{m}$) through reformulation into "steerable" filters. The resulting method is computationally efficient (with respect to the number of filter evaluations), robust to variation in pattern feature shape, and, unlike previous approaches, applicable to a wide variety of pattern types. An application of the method is presented which uses a nearest-neighbour analysis to distinguish between uniform (defect-free) and non-uniform (strained, defect-containing) regions within imaged self-assembled domains, with both striped and hexagonal patterns.
