Daniel Kumor

Sequential Causal Imitation Learning with Unobserved Confounders

Aug 12, 2022

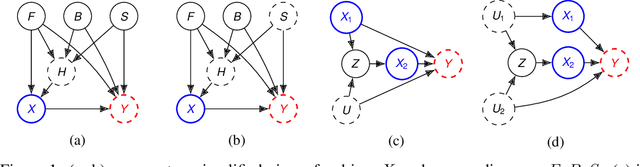

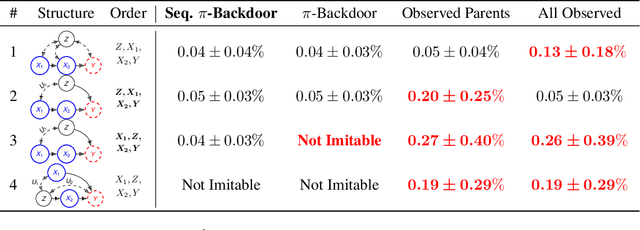

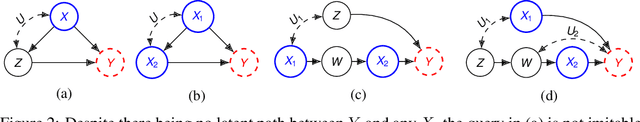

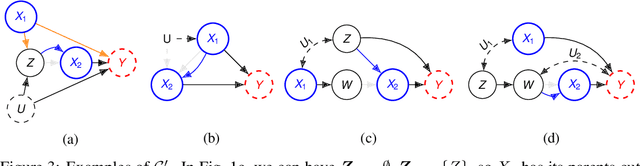

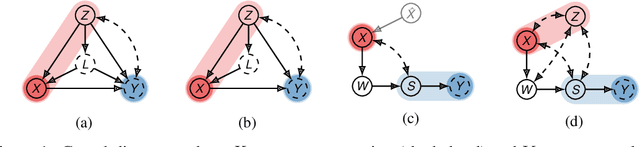

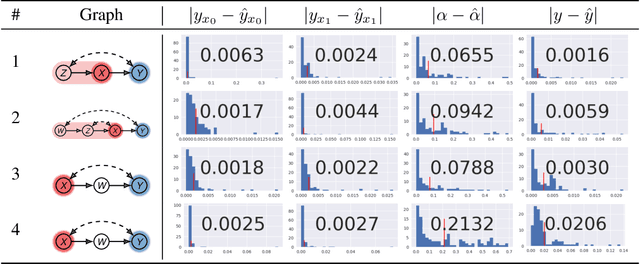

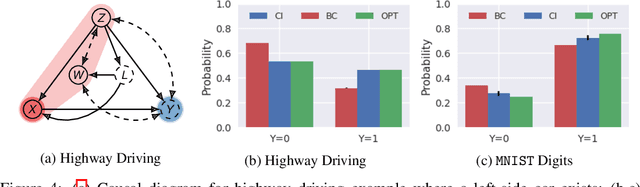

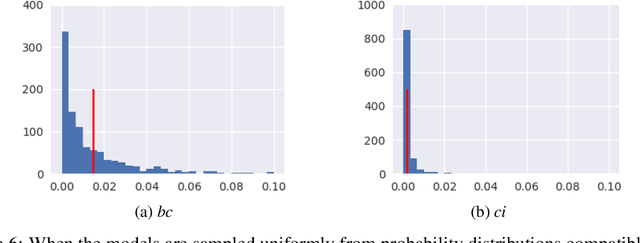

Abstract: "Monkey see monkey do" is an age-old adage, referring to naïve imitation without a deep understanding of a system's underlying mechanics. Indeed, if a demonstrator has access to information unavailable to the imitator (monkey), such as a different set of sensors, then no matter how perfectly the imitator models its perceived environment (See), attempting to reproduce the demonstrator's behavior (Do) can lead to poor outcomes. Imitation learning in the presence of a mismatch between demonstrator and imitator has been studied in the literature under the rubric of causal imitation learning (Zhang et al., 2020), but existing solutions are limited to single-stage decision-making. This paper investigates the problem of causal imitation learning in sequential settings, where the imitator must make multiple decisions per episode. We develop a graphical criterion that is necessary and sufficient for determining the feasibility of causal imitation, providing conditions under which an imitator can match a demonstrator's performance despite differing capabilities. Finally, we provide an efficient algorithm for determining imitability and corroborate our theory with simulations.
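The mismatch described in this abstract can be illustrated with a toy simulation. The sketch below is not taken from the paper: the environment, the `run` helper, and both policies are hypothetical, chosen only to show how an imitator that reproduces the demonstrator's action distribution can still earn a much lower reward when it cannot see the context the demonstrator used.

```python
import random

def run(policy, n=10000, seed=1):
    """Average reward of a policy in a hypothetical one-step environment."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n):
        u = rng.randint(0, 1)        # hidden context, visible only to the demonstrator
        x = policy(u, rng)           # action chosen by the policy
        total += 1 if x == u else 0  # reward is 1 only when the action matches the context
    return total / n

# Demonstrator: observes u and acts on it.
expert = lambda u, rng: u

# Naive imitator: matches the demonstrator's marginal P(X = 1) = 0.5,
# but has no access to u, so its action is independent of the context.
cloner = lambda u, rng: rng.randint(0, 1)

expert_reward = run(expert)
cloner_reward = run(cloner)
print(expert_reward, cloner_reward)
```

In this toy setup the demonstrator always earns the reward, while the imitator's average reward sits near one half even though its action frequencies match the demonstrator's.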

Causal Imitation Learning with Unobserved Confounders

Aug 12, 2022

Abstract: One of the common ways children learn is by mimicking adults. Imitation learning focuses on learning policies with suitable performance from demonstrations generated by an expert, with an unspecified performance measure, and unobserved reward signal. Popular methods for imitation learning start by either directly mimicking the behavior policy of an expert (behavior cloning) or by learning a reward function that prioritizes observed expert trajectories (inverse reinforcement learning). However, these methods rely on the assumption that covariates used by the expert to determine her/his actions are fully observed. In this paper, we relax this assumption and study imitation learning when sensory inputs of the learner and the expert differ. First, we provide a non-parametric, graphical criterion that is complete (both necessary and sufficient) for determining the feasibility of imitation from the combinations of demonstration data and qualitative assumptions about the underlying environment, represented in the form of a causal model. We then show that when such a criterion does not hold, imitation could still be feasible by exploiting quantitative knowledge of the expert trajectories. Finally, we develop an efficient procedure for learning the imitating policy from experts' trajectories.

Efficient Identification in Linear Structural Causal Models with Instrumental Cutsets

Oct 29, 2019

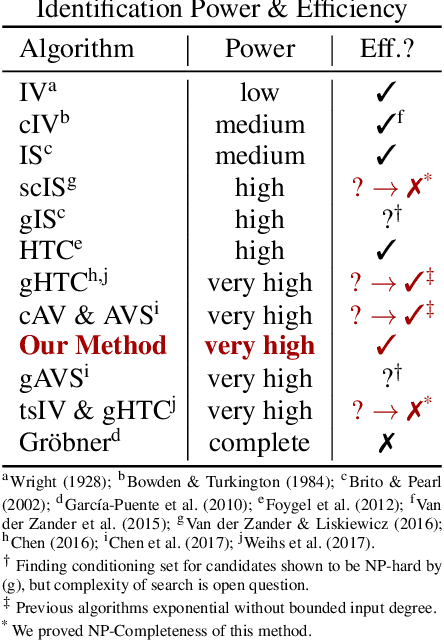

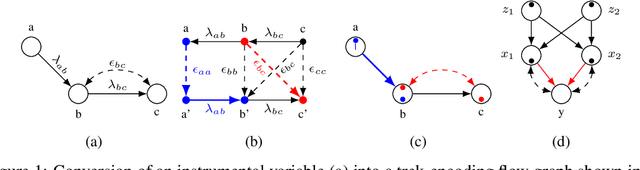

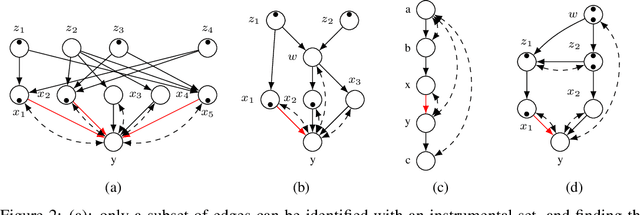

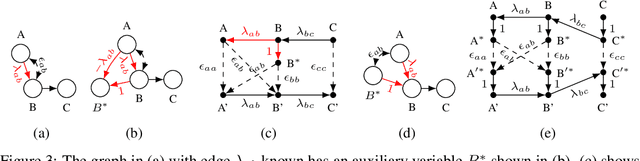

Abstract: One of the most common mistakes made when performing data analysis is attributing causal meaning to regression coefficients. Formally, a causal effect can only be computed if it is identifiable from a combination of observational data and structural knowledge about the domain under investigation (Pearl, 2000, Ch. 5). Building on the literature of instrumental variables (IVs), a plethora of methods has been developed to identify causal effects in linear systems. Almost invariably, however, the most powerful such methods rely on exponential-time procedures. In this paper, we investigate graphical conditions to allow efficient identification in arbitrary linear structural causal models (SCMs). In particular, we develop a method to efficiently find unconditioned instrumental subsets, which are generalizations of IVs that can be used to tame the complexity of many canonical algorithms found in the literature. Further, we prove that determining whether an effect can be identified with TSID (Weihs et al., 2017), a method more powerful than unconditioned instrumental sets and other efficient identification algorithms, is NP-Complete. Finally, building on the idea of flow constraints, we introduce a new and efficient criterion called Instrumental Cutsets (IC), which is able to solve for parameters missed by all other existing polynomial-time algorithms.
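The opening point of this abstract, that a regression coefficient need not equal a causal effect, can be checked on a small simulated linear SCM. The sketch below covers only the classic single instrumental variable estimator, not the Instrumental Cutsets criterion or any algorithm from the paper. The model, its coefficients, and the helper names `simulate` and `cov` are assumptions made for illustration.

```python
import random

def simulate(n=20000, beta=2.0, seed=0):
    """Draw samples from a hypothetical linear SCM with a hidden confounder u."""
    rng = random.Random(seed)
    zs, xs, ys = [], [], []
    for _ in range(n):
        u = rng.gauss(0, 1)                       # unobserved confounder of x and y
        z = rng.gauss(0, 1)                       # instrument: affects y only through x
        x = z + u + rng.gauss(0, 1)               # treatment
        y = beta * x + 3.0 * u + rng.gauss(0, 1)  # outcome; true causal effect is beta
        zs.append(z)
        xs.append(x)
        ys.append(y)
    return zs, xs, ys

def cov(a, b):
    """Sample covariance of two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / len(a)

zs, xs, ys = simulate()
ols = cov(xs, ys) / cov(xs, xs)  # regression coefficient of y on x (biased by u)
iv = cov(zs, ys) / cov(zs, xs)   # instrumental variable estimate of beta
print(ols, iv)
```

Under these assumed coefficients the regression slope converges to about 3, because the confounder contributes Cov(x, 3u) / Var(x) = 3 / 3 = 1 on top of the true effect, while the IV ratio converges to the true value of 2.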
