Daniel Hunter

SqueezeNAS: Fast neural architecture search for faster semantic segmentation

Aug 08, 2019

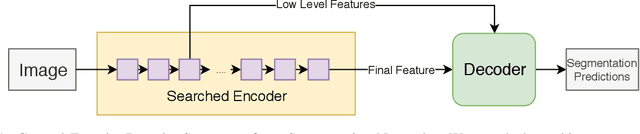

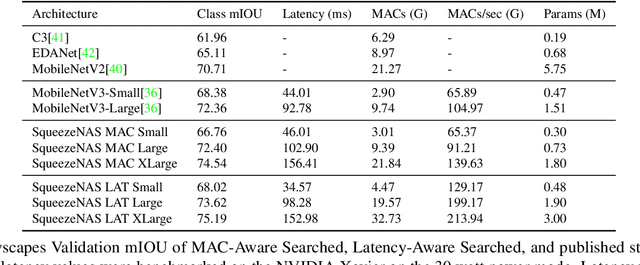

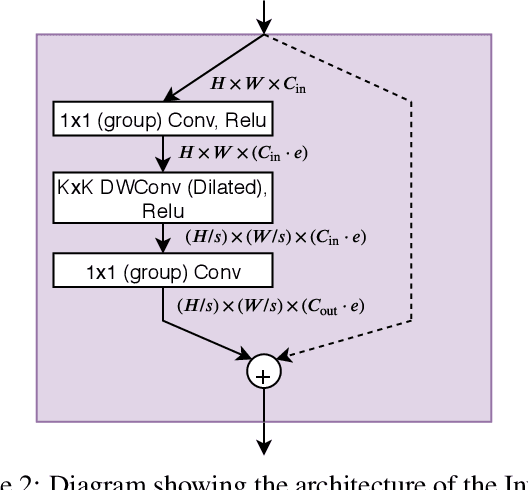

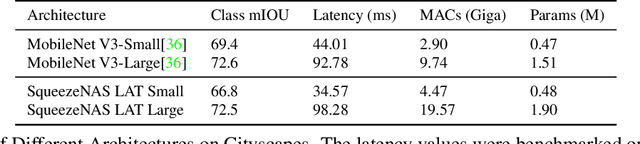

Abstract: For real-time applications that use deep neural networks (DNNs), it is critical that models achieve both high accuracy on the target task and low-latency inference on the target computing platform. While neural architecture search (NAS) has been used effectively to develop low-latency networks for image classification, relatively little effort has gone into using NAS to optimize DNN architectures for other vision tasks. In this work, we present what we believe to be the first proxyless, hardware-aware search targeted at dense semantic segmentation. With this approach, we advance the state-of-the-art accuracy for latency-optimized networks on the Cityscapes semantic segmentation dataset. Our latency-optimized small SqueezeNAS network achieves 68.02% validation class mIOU with inference times under 35 ms on the NVIDIA AGX Xavier. Our latency-optimized large SqueezeNAS network achieves 73.62% class mIOU with inference times under 100 ms. We demonstrate that significant performance gains are possible by using NAS to find networks optimized for both the specific task and the inference hardware. We also present a detailed analysis comparing our networks to recent state-of-the-art architectures.
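
The accuracy figures above are reported as class mIOU: the intersection over union for each class, IoU = TP / (TP + FP + FN), averaged over classes. The sketch below computes it from flattened per-pixel label sequences. The function name `class_miou` and the ignore label 255 (a common convention for unlabeled Cityscapes pixels) are assumptions for illustration; this is not the paper's evaluation code.

```python
def class_miou(pred, target, num_classes, ignore_index=255):
    """Class mIoU from flattened per-pixel labels.

    IoU_c = TP_c / (TP_c + FP_c + FN_c); classes that never occur in either
    the prediction or the ground truth are left out of the mean.
    ignore_index=255 is an assumed "unlabeled" marker, not taken from the paper.
    """
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue            # unlabeled pixels are not scored
        if p == t:
            tp[t] += 1
        else:
            fp[p] += 1          # predicted class p where the truth was not p
            fn[t] += 1          # missed the true class t
    ious = [
        tp[c] / (tp[c] + fp[c] + fn[c])
        for c in range(num_classes)
        if tp[c] + fp[c] + fn[c] > 0
    ]
    if not ious:
        raise ValueError("no labeled pixels to score")
    return sum(ious) / len(ious)


pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 255]
score = class_miou(pred, target, num_classes=3)
```

Because the counts are accumulated over every pixel passed in, feeding the whole validation set at once gives a dataset-level score rather than an average of per-image scores.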

Uncertain Reasoning Using Maximum Entropy Inference

Mar 27, 2013

Abstract: The use of maximum entropy inference in reasoning with uncertain information is commonly justified by an information-theoretic argument. This paper discusses a possible objection to this information-theoretic justification and shows how it can be met. I then compare maximum entropy inference with certain other currently popular methods for uncertain reasoning. In making such a comparison, one must distinguish between static and dynamic theories of degrees of belief: a static theory concerns the consistency conditions for degrees of belief at a given time, whereas a dynamic theory concerns how one's degrees of belief should change in light of new information. It is argued that maximum entropy is a dynamic theory and that a complete theory of uncertain reasoning can be obtained by combining maximum entropy inference with probability theory, which is a static theory. This combined theory, I argue, is much better grounded than other theories of uncertain reasoning.
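
As a minimal illustration of maximum entropy inference (a textbook example, not taken from the paper), consider all distributions over the faces of a die whose mean is constrained to 4.5, and choose the one with the largest entropy. The maximizer has the exponential form p_i ∝ exp(λ x_i), so the search reduces to finding the single multiplier λ. The helper name `maxent_with_mean` and the bisection bounds are assumptions.

```python
import math


def maxent_with_mean(values, target_mean, lo=-50.0, hi=50.0, iters=200):
    """Maximum-entropy distribution over `values` subject to E[X] = target_mean.

    The solution has the form p_i proportional to exp(lam * x_i); lam is found
    by bisection, using the fact that the mean increases with lam.
    """
    values = list(values)

    def dist(lam):
        m = max(lam * x for x in values)   # subtract the largest exponent for stability
        weights = [math.exp(lam * x - m) for x in values]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(lam):
        return sum(p * x for p, x in zip(dist(lam), values))

    if not min(values) < target_mean < max(values):
        raise ValueError("target mean must lie strictly between the min and max value")
    for _ in range(iters):
        mid = (lo + hi) / 2
        if mean(mid) < target_mean:
            lo = mid   # mean too small: tilt further toward large values
        else:
            hi = mid
    return dist((lo + hi) / 2)


faces = [1, 2, 3, 4, 5, 6]
p = maxent_with_mean(faces, 4.5)
```

With an uninformative constraint (mean 3.5) the result is the uniform distribution; raising the target mean shifts probability toward the higher faces while keeping the distribution as spread out as the constraint allows.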

Dempster-Shafer vs. Probabilistic Logic

Mar 27, 2013

Abstract: The combination of evidence in Dempster-Shafer theory is compared with the combination of evidence in probabilistic logic. Sufficient conditions are stated for these two methods to agree. It is then shown that these conditions are minimal in the sense that disagreement can occur when any one of them is removed. An example is given in which the traditional assumption of conditional independence of evidence on hypotheses holds and a uniform prior is assumed, but probabilistic logic and Dempster's rule give radically different results for the combination of two evidence events.
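
For reference, Dempster's rule multiplies the masses of every pair of focal sets, assigns each product to the intersection of the two sets, and renormalizes away the mass that falls on the empty set (the conflict). The sketch below assumes mass functions are represented as dicts from `frozenset` to mass; the function name is hypothetical, and the probabilistic-logic side of the comparison is not modeled.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset of hypotheses -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, x), (b, y) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + x * y
        else:
            conflict += x * y   # product mass that would land on the empty set
    if not combined:
        raise ValueError("total conflict: Dempster's rule is undefined")
    # Renormalize so the surviving masses sum to 1.
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


frame = frozenset({"h1", "h2", "h3"})
m1 = {frozenset({"h1"}): 0.6, frame: 0.4}
m2 = {frozenset({"h2"}): 0.5, frame: 0.5}
m12 = dempster_combine(m1, m2)
```

Here the conflict is 0.6 × 0.5 = 0.3, so the remaining products are divided by 0.7, giving 3/7 on {h1}, 2/7 on {h2}, and 2/7 on the whole frame.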

Parallel Belief Revision

Mar 27, 2013

Abstract: This paper describes a formal system of belief revision developed by Wolfgang Spohn and shows that this system has a parallel implementation that can be derived from an influence diagram in a manner similar to that in which Bayesian networks are derived. The proof rests upon completeness results for an axiomatization of the notion of conditional independence, with the Spohn system being used as a semantics for the relation of conditional independence.
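
Spohn's system represents belief with ranking functions: each possible world gets a natural-number degree of disbelief, a proposition's rank κ(A) is the minimum rank of its worlds, and the believed worlds are those of rank 0. Revision by (A, n)-conditionalization shifts the A-worlds so that κ(A) = 0 and the non-A-worlds so that κ(not-A) = n. The sketch below shows only this revision step, not the parallel influence-diagram implementation the paper derives; the world labels and function names are hypothetical.

```python
def rank(kappa, prop):
    """kappa(A): the lowest disbelief rank among the worlds in A."""
    return min(kappa[w] for w in prop)


def conditionalize(kappa, prop, n):
    """Spohn's (A, n)-conditionalization of ranking `kappa` on proposition `prop`.

    Afterwards A is believed with firmness n: kappa'(A) = 0 and kappa'(not A) = n.
    Ranks within A, and within not-A, keep their relative differences.
    """
    prop = set(prop)
    not_prop = set(kappa) - prop
    if not prop or not not_prop or n < 0:
        raise ValueError("A must be a non-empty proper subset of the worlds and n >= 0")
    ka, kna = rank(kappa, prop), rank(kappa, not_prop)
    return {w: (r - ka if w in prop else r - kna + n) for w, r in kappa.items()}


# Hypothetical worlds: r/~r = rain or not, w/~w = street wet or not.
kappa = {"~r~w": 0, "rw": 1, "~rw": 2, "r~w": 3}
rain = {"rw", "r~w"}
revised = conditionalize(kappa, rain, 2)
believed = {w for w, r in revised.items() if r == 0}
```

Before the update the agent believes it is not raining (κ(rain) = 1); after conditionalizing on rain with firmness 2, the only rank-0 world is "rw".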

Non-monotonic Reasoning and the Reversibility of Belief Change

Mar 20, 2013

Abstract: Traditional approaches to non-monotonic reasoning fail to satisfy a number of plausible axioms for belief revision and suffer from conceptual difficulties as well. Recent work on ranked preferential models (RPMs) promises to overcome some of these difficulties. Here we show that RPMs are not adequate to handle iterated belief change. Specifically, we show that RPMs do not always allow for the reversibility of belief change. This result indicates the need for numerical strengths of belief.
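
To make reversibility concrete, here is a hedged sketch using Spohn-style numerical ranks (an assumption; the paper's own numerical representation may differ). A change is described by a signed firmness n = κ(not-A) - κ(A); re-imposing the firmness recorded before the change undoes it exactly, provided the starting ranking is normalized (some world has rank 0). This illustrates what numerical strengths can provide; it does not reproduce the paper's argument about RPMs, and all names are hypothetical.

```python
def rank(kappa, prop):
    """kappa(A): the lowest disbelief rank among the worlds in A."""
    return min(kappa[w] for w in prop)


def firmness(kappa, prop):
    """Signed firmness of A: kappa(not A) - kappa(A); positive means A is believed."""
    not_prop = set(kappa) - set(prop)
    return rank(kappa, not_prop) - rank(kappa, prop)


def revise(kappa, prop, n):
    """Shift ranks so that firmness(result, prop) == n (n may be negative).

    Relative differences inside A and inside not-A are preserved, which is
    what makes the change undoable.
    """
    prop = set(prop)
    not_prop = set(kappa) - prop
    if not prop or not not_prop:
        raise ValueError("A must be a non-empty proper subset of the worlds")
    ka, kna = rank(kappa, prop), rank(kappa, not_prop)
    return {
        w: (r - ka + max(0, -n) if w in prop else r - kna + max(0, n))
        for w, r in kappa.items()
    }


# Hypothetical normalized ranking (minimum rank is 0) over four worlds.
original = {"~r~w": 0, "rw": 1, "~rw": 2, "r~w": 3}
rain = {"rw", "r~w"}

old = firmness(original, rain)          # record the firmness before the change
changed = revise(original, rain, 3)     # come to believe A with firmness 3
restored = revise(changed, rain, old)   # re-impose the old firmness
```

The reversal works because the revision only shifts the A-worlds and the non-A-worlds as two rigid blocks; a purely ordinal ranking has no block offsets to record, so there is nothing comparable to restore.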
