Dan Yamins

The Neural Representation Benchmark and its Evaluation on Brain and Machine

Jan 25, 2013

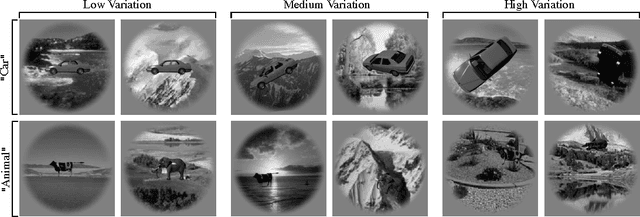

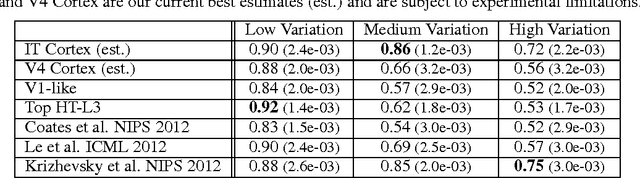

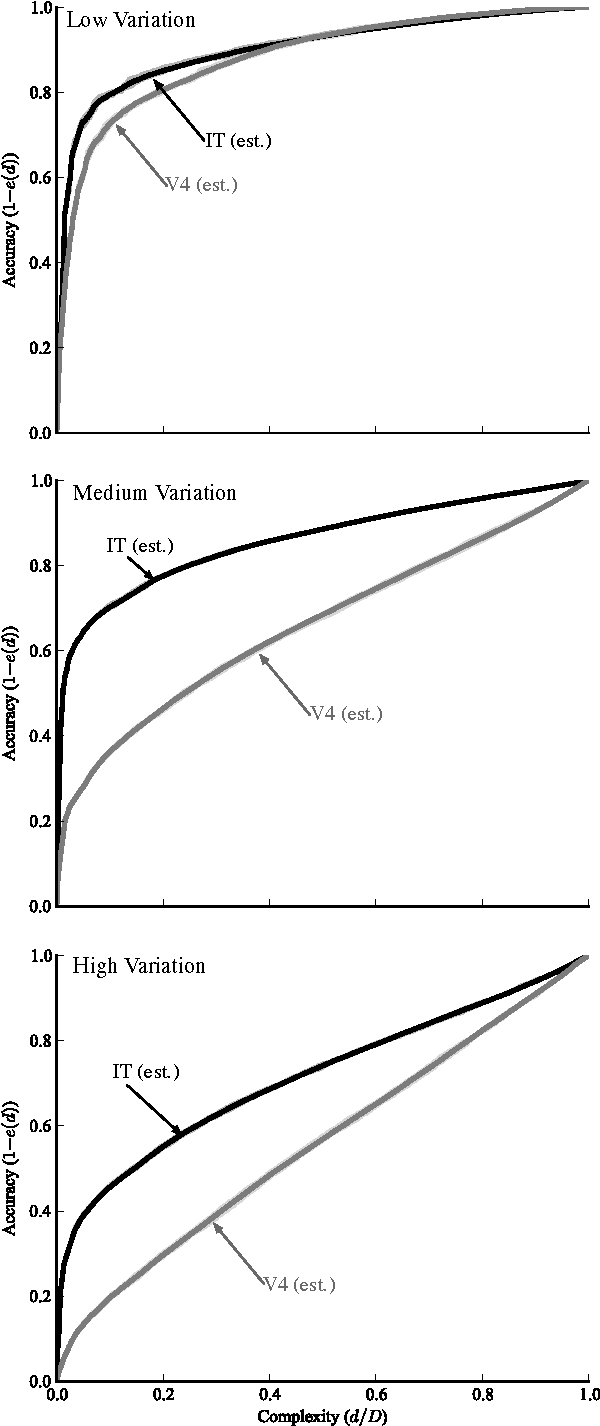

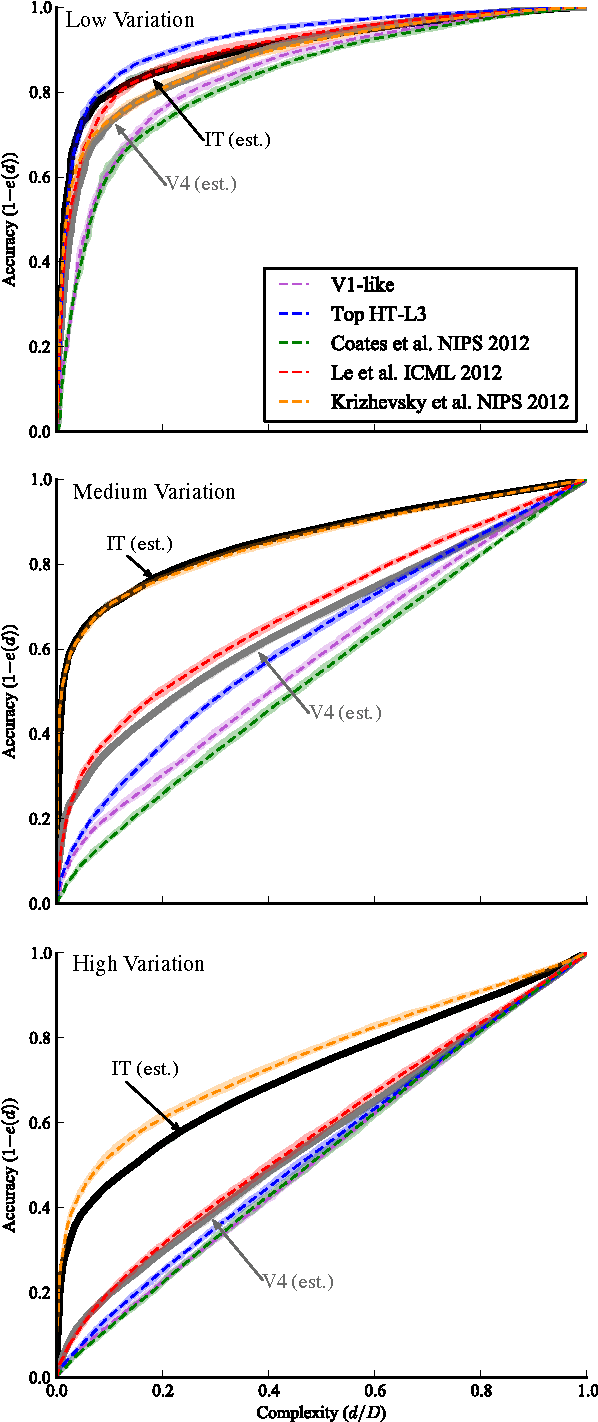

Abstract:A key requirement for the development of effective learning representations is their evaluation and comparison to representations we know to be effective. In natural sensory domains, the community has viewed the brain as a source of inspiration and as an implicit benchmark for success. However, it has not been possible to directly test representational learning algorithms directly against the representations contained in neural systems. Here, we propose a new benchmark for visual representations on which we have directly tested the neural representation in multiple visual cortical areas in macaque (utilizing data from [Majaj et al., 2012]), and on which any computer vision algorithm that produces a feature space can be tested. The benchmark measures the effectiveness of the neural or machine representation by computing the classification loss on the ordered eigendecomposition of a kernel matrix [Montavon et al., 2011]. In our analysis we find that the neural representation in visual area IT is superior to visual area V4. In our analysis of representational learning algorithms, we find that three-layer models approach the representational performance of V4 and the algorithm in [Le et al., 2012] surpasses the performance of V4. Impressively, we find that a recent supervised algorithm [Krizhevsky et al., 2012] achieves performance comparable to that of IT for an intermediate level of image variation difficulty, and surpasses IT at a higher difficulty level. We believe this result represents a major milestone: it is the first learning algorithm we have found that exceeds our current estimate of IT representation performance. We hope that this benchmark will assist the community in matching the representational performance of visual cortex and will serve as an initial rallying point for further correspondence between representations derived in brains and machines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge