Daksh Dhingra

VisuoTactile 6D Pose Estimation of an In-Hand Object using Vision and Tactile Sensor Data

Jan 04, 2026

Abstract:Knowledge of the 6D pose of an object can benefit in-hand object manipulation. In-hand 6D object pose estimation is challenging because of heavy occlusion produced by the robot's grippers, which can have an adverse effect on methods that rely on vision data only. Many robots are equipped with tactile sensors at their fingertips that could be used to complement vision data. In this paper, we present a method that uses both tactile and vision data to estimate the pose of an object grasped in a robot's hand. To address challenges such as sensor fusion and the lack of a standard representation for tactile data, we propose the use of point clouds to represent object surfaces in contact with the tactile sensor and present a network architecture based on pixel-wise dense fusion. We also extend NVIDIA's Deep Learning Dataset Synthesizer to produce synthetic photo-realistic vision data and corresponding tactile point clouds. Results suggest that using tactile data in addition to vision data improves the 6D pose estimate, and our network generalizes successfully from synthetic training to real physical robots.

* Accepted for publication in IEEE Robotics and Automation Letters (RA-L), January 2022. Presented at ICRA 2022. This is the author's version of the manuscript.

Modeling and LQR Control of Insect Sized Flapping Wing Robot

Jun 28, 2024

Abstract:Flying insects can perform rapid, sophisticated maneuvers like backflips, sharp banked turns, and in-flight collision recovery. To emulate these in aerial robots weighing less than a gram, known as flying insect robots (FIRs), a fast and responsive control system is essential. To date, these have largely been, at their core, elaborations of proportional-integral-derivative (PID)-type feedback control. Without exception, their gains have been painstakingly tuned by hand. Aggressive maneuvers have further required task-specific tuning. Optimal control has the potential to mitigate these issues, but has to date only been demonstrated using approximate models and receding horizon controllers (RHC) that are too computationally demanding to be carried out onboard the robot. Here we used a more accurate stroke-averaged model of forces and torques to implement the first demonstration of optimal control on an FIR that is computationally efficient enough to be performed by a microprocessor carried onboard. We took force and torque measurements from a 150 mg FIR, the UW Robofly, using a custom-built sensitive force-torque sensor, and validated them using motion capture data in free flight. We demonstrated stable hovering (RMS error of about 4 cm) and trajectory tracking maneuvers at translational velocities up to 25 cm/s using an optimal linear quadratic regulator (LQR). These results were enabled by a more accurate model and lay the foundation for future work that uses our improved model and optimal controller in conjunction with recent advances in low-power receding horizon control to perform accurate aggressive maneuvers without iterative, task-specific tuning.
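To illustrate why an LQR is cheap enough to run on a small onboard processor, the following is a minimal sketch of a discrete-time LQR on a toy one-axis double integrator (position and velocity driven by a commanded acceleration). It is not the stroke-averaged Robofly model from the paper: the plant, the time step `DT`, the weights `Q` and `R`, and the helper names `lqr_gain` and `simulate` are all assumptions chosen for illustration. The gain is computed offline by iterating the Riccati recursion; at run time the controller is a single dot product, `u = -K x`.

```python
def mat_mul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def lqr_gain(A, B, Q, r, iterations=2000):
    """Single-input discrete-time LQR gain via Riccati value iteration.

    Iterates P <- Q + A'P(A - BK) with K = (r + B'PB)^-1 B'PA.
    """
    n = len(A)
    At, Bt = transpose(A), transpose(B)
    P = [row[:] for row in Q]
    K = [[0.0] * n]
    for _ in range(iterations):
        BtP = mat_mul(Bt, P)                       # 1 x n
        s = r + mat_mul(BtP, B)[0][0]              # scalar r + B'PB
        K = [[v / s for v in mat_mul(BtP, A)[0]]]  # 1 x n feedback gain
        BK = mat_mul(B, K)                         # n x n
        A_cl = [[A[i][j] - BK[i][j] for j in range(n)] for i in range(n)]
        APA = mat_mul(mat_mul(At, P), A_cl)
        P = [[Q[i][j] + APA[i][j] for j in range(n)] for i in range(n)]
    return K


def simulate(A, B, K, x0, steps):
    """Run the closed loop x <- A x + B u with u = -K x; return the final state."""
    x = list(x0)
    n = len(x)
    for _ in range(steps):
        u = -sum(K[0][i] * x[i] for i in range(n))
        x = [sum(A[i][j] * x[j] for j in range(n)) + B[i][0] * u
             for i in range(n)]
    return x


# Hypothetical toy plant: state = [position (m), velocity (m/s)].
DT = 0.01                        # assumed control period, seconds
A = [[1.0, DT], [0.0, 1.0]]
B = [[0.5 * DT * DT], [DT]]
Q = [[100.0, 0.0], [0.0, 1.0]]   # assumed state weights
R = 0.1                          # assumed input weight

K = lqr_gain(A, B, Q, R)
final_state = simulate(A, B, K, x0=[0.04, 0.0], steps=500)
```

Changing the behavior of such a controller means changing the weights `Q` and `R` and recomputing `K`, rather than hand-tuning individual feedback gains.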

A flexured-gimbal 3-axis force-torque sensor reveals minimal cross-axis coupling in an insect-sized flapping-wing robot

Jun 28, 2024

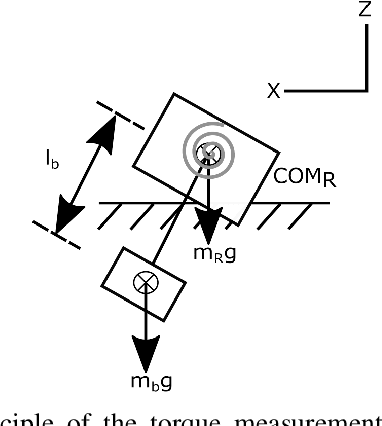

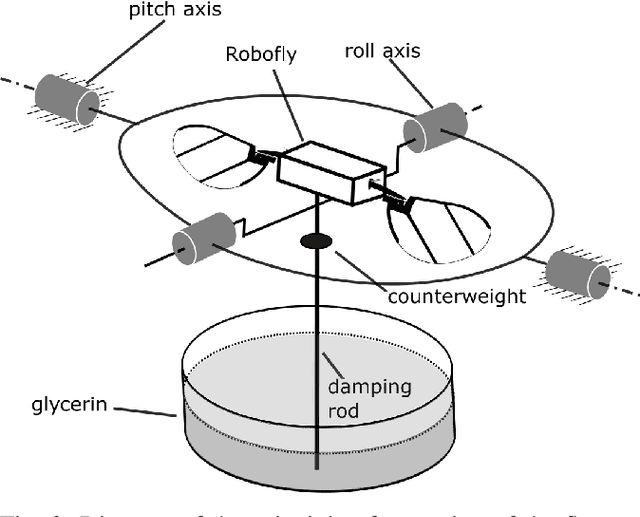

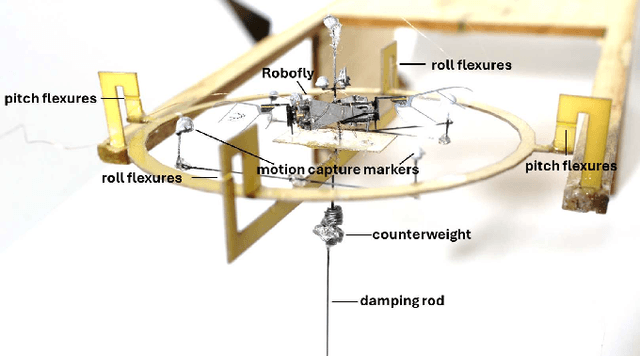

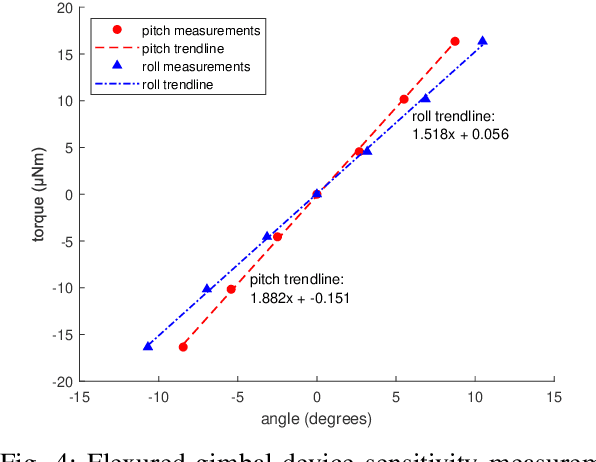

Abstract:The mechanical complexity of flapping wings, their unsteady aerodynamic flow, and the challenge of making measurements at the scale of a sub-gram flapping-wing flying insect robot (FIR) make its behavior hard to predict. Knowing the precise mapping from voltage input to torque output, however, can help improve the mechanical and flight controller design of such robots. To address this challenge, we created a sensitive force-torque sensor based on a flexured gimbal that only requires a standard motion capture system or accelerometer for readout. Our device precisely and accurately measures pitch and roll torques simultaneously, as well as thrust, on a tethered flapping-wing FIR in response to changing voltage input signals. With it, we were able to measure cross-axis coupling of both torque and thrust input commands on a 180 mg FIR, the UW Robofly. We validated these measurements using free-flight experiments. Our results showed that roll and pitch have maximum cross-axis coupling errors of 8.58% and 17.24%, respectively, relative to the range of torque that is possible. Similarly, varying the pitch and roll commands resulted in up to a 5.78% deviation from the commanded thrust, across the entire commanded torque range. Our system, the first to measure two torque axes simultaneously, shows that torque commands have a negligible cross-axis coupling on both torque and thrust.
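One plausible way to read a "cross-axis coupling error relative to the range of torque" is the largest unintended deviation on the off axis, divided by the torque range that axis can produce. The sketch below computes that quantity under this assumed definition; the paper's exact definition may differ, and the function name `coupling_error_percent` and the sample numbers are hypothetical, not measurements from the paper.

```python
def coupling_error_percent(off_axis_deviations, torque_range):
    """Largest off-axis deviation as a percentage of the achievable torque range.

    off_axis_deviations: deviations of the uncommanded axis from its baseline
        while the other axis is swept (same units as torque_range).
    torque_range: full span of torque the off axis can produce (must be > 0).
    """
    if torque_range <= 0:
        raise ValueError("torque_range must be positive")
    if not off_axis_deviations:
        raise ValueError("need at least one measurement")
    worst = max(abs(d) for d in off_axis_deviations)
    return 100.0 * worst / torque_range


# Hypothetical example: roll deviations recorded while sweeping pitch commands.
roll_deviations = [0.0, 0.1, -0.2, 0.15]   # made-up values, arbitrary units
roll_range = 2.0                           # made-up achievable roll range
roll_coupling = coupling_error_percent(roll_deviations, roll_range)
```

The same function could be applied to thrust by passing thrust deviations and the commanded thrust range.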
