Daesoo Lee

Norwegian University of Science and Technology

Closing the Gap Between Synthetic and Ground Truth Time Series Distributions via Neural Mapping

Jan 29, 2025

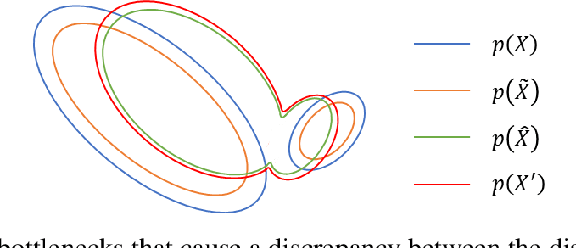

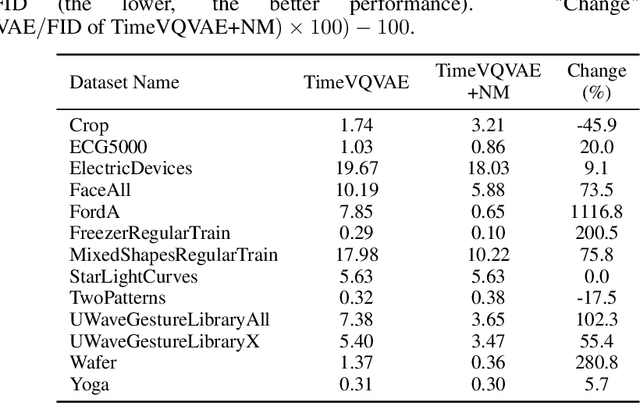

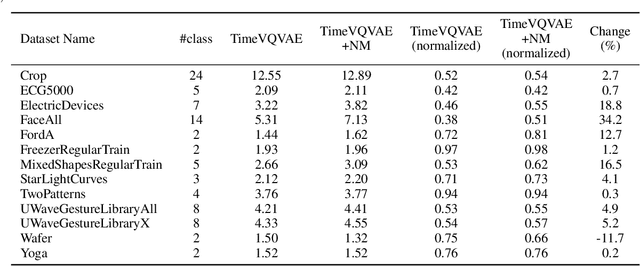

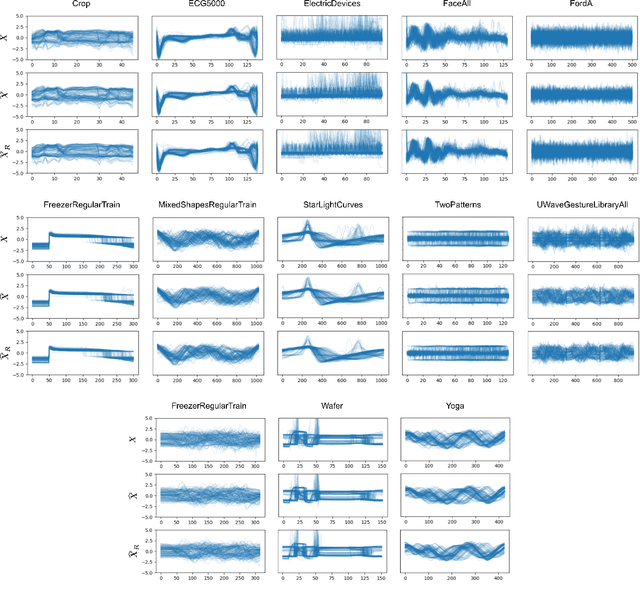

Abstract: In this paper, we introduce Neural Mapper for Vector Quantized Time Series Generator (NM-VQTSG), a novel method aimed at addressing fidelity challenges in vector quantized (VQ) time series generation. VQ-based methods, such as TimeVQVAE, have demonstrated success in generating time series but are hindered by two critical bottlenecks: information loss during compression into discrete latent spaces and deviations of the learned prior distribution from the ground truth distribution. These bottlenecks result in synthetic time series with compromised fidelity and distributional accuracy. To overcome these limitations, NM-VQTSG leverages a U-Net-based neural mapping model to bridge the distributional gap between synthetic and ground truth time series. Specifically, the model refines synthetic data by correcting artifacts introduced during generation, aligning the distribution of synthetic data with that of real data. Importantly, NM-VQTSG can be applied to synthetic time series generated by any VQ-based generative method. We evaluate NM-VQTSG across diverse datasets from the UCR Time Series Classification archive, demonstrating its capability to consistently enhance fidelity in both unconditional and conditional generation tasks. The gains are evidenced by significant improvements in FID, IS, and conditional FID, and are further supported by visual inspection in both data space and latent space. Our findings establish NM-VQTSG as a new method to improve the quality of synthetic time series. Our implementation is available at https://github.com/ML4ITS/TimeVQVAE.

Blending Low and High-Level Semantics of Time Series for Better Masked Time Series Generation

Aug 29, 2024

Abstract: State-of-the-art approaches in time series generation (TSG), such as TimeVQVAE, utilize vector quantization-based tokenization to effectively model complex distributions of time series. These approaches first learn to transform time series into a sequence of discrete latent vectors, and then a prior model is learned to model the sequence. The discrete latent vectors, however, only capture low-level semantics (e.g., shapes). We hypothesize that higher-fidelity time series can be generated by training a prior model on more informative discrete latent vectors that contain both low and high-level semantics (e.g., characteristic dynamics). In this paper, we introduce a novel framework, termed NC-VQVAE, to integrate self-supervised learning into those TSG methods to derive a discrete latent space where low and high-level semantics are captured. Our experimental results demonstrate that NC-VQVAE results in a considerable improvement in the quality of synthetic samples.

Explainable Anomaly Detection using Masked Latent Generative Modeling

Nov 22, 2023

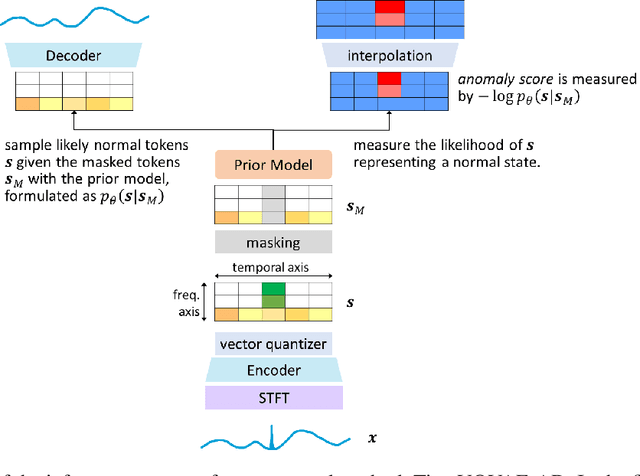

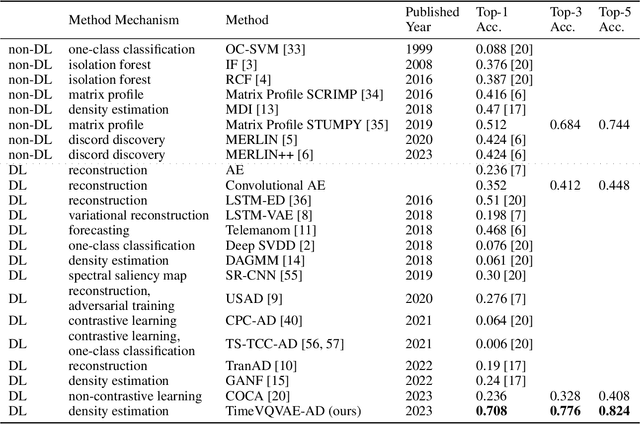

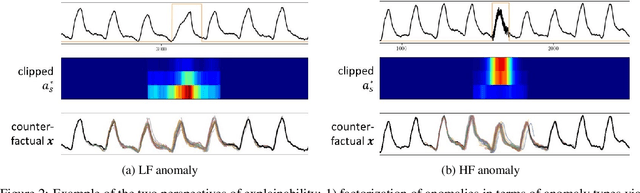

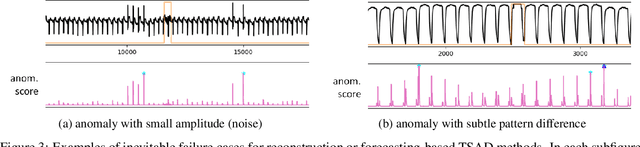

Abstract: We present a novel time series anomaly detection method that achieves excellent detection accuracy while offering a superior level of explainability. Our proposed method, TimeVQVAE-AD, leverages masked generative modeling adapted from the cutting-edge time series generation method known as TimeVQVAE. The prior model is trained on the discrete latent space of a time-frequency domain. Notably, the dimensional semantics of the time-frequency domain are preserved in the latent space, enabling us to compute anomaly scores across different frequency bands, which provides better insight into the detected anomalies. Additionally, the generative nature of the prior model allows for sampling likely normal states for detected anomalies, enhancing the explainability of the detected anomalies through counterfactuals. Our experimental evaluation on the UCR Time Series Anomaly archive demonstrates that TimeVQVAE-AD significantly surpasses the existing methods in terms of detection accuracy and explainability.

Latent Diffusion Model for Conditional Reservoir Facies Generation

Nov 03, 2023

Abstract: Creating accurate and geologically realistic reservoir facies based on limited measurements is crucial for field development and reservoir management, especially in the oil and gas sector. Traditional two-point geostatistics, while foundational, often struggle to capture complex geological patterns. Multi-point statistics offers more flexibility, but comes with its own challenges. With the rise of Generative Adversarial Networks (GANs) and their success in various fields, there has been a shift towards using them for facies generation. However, recent advances in the computer vision domain have shown the superiority of diffusion models over GANs. Motivated by this, a novel Latent Diffusion Model is proposed, which is specifically designed for conditional generation of reservoir facies. The proposed model produces high-fidelity facies realizations that rigorously preserve conditioning data. It significantly outperforms a GAN-based alternative.

Masked Generative Modeling with Enhanced Sampling Scheme

Sep 14, 2023

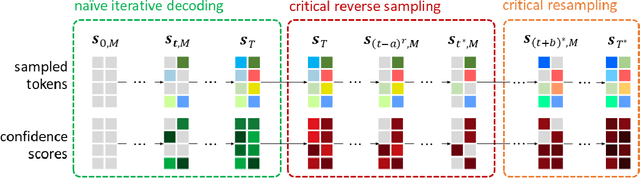

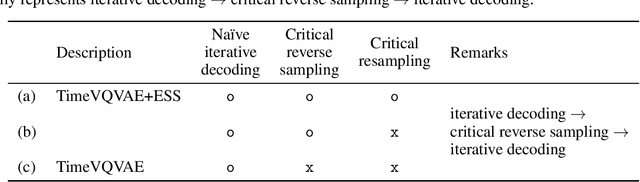

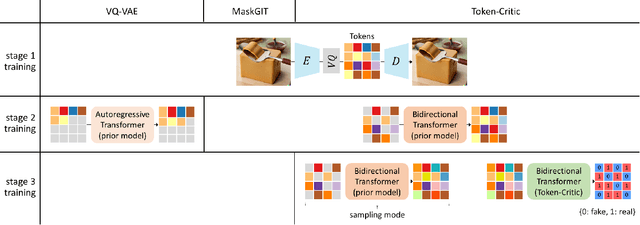

Abstract: This paper presents a novel sampling scheme for masked non-autoregressive generative modeling. We identify the limitations of TimeVQVAE, MaskGIT, and Token-Critic in their sampling processes, and propose the Enhanced Sampling Scheme (ESS) to overcome these limitations. ESS explicitly ensures both sample diversity and fidelity, and consists of three stages: Naive Iterative Decoding, Critical Reverse Sampling, and Critical Resampling. ESS starts by sampling a token set using the naive iterative decoding proposed in MaskGIT, ensuring sample diversity. Then, the token set undergoes critical reverse sampling, which masks tokens that lead to unrealistic samples. After that, critical resampling reconstructs the masked tokens until the final sampling step is reached, ensuring high fidelity. Critical resampling uses confidence scores obtained from a self-Token-Critic to better measure the realism of sampled tokens, while critical reverse sampling uses the structure of the quantized latent vector space to discover unrealistic sample paths. We demonstrate significant performance gains of ESS in both unconditional sampling and class-conditional sampling using all 128 datasets in the UCR Time Series archive.
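The first ESS stage, naive iterative decoding in the style of MaskGIT, can be illustrated with a minimal sketch. This is not the authors' implementation: `toy_predict` is a hypothetical stand-in for a trained prior model that returns random tokens and confidences, and the cosine masking schedule and all parameter names are assumptions made for illustration. The critical reverse sampling and critical resampling stages are not covered here.

```python
import math
import random

MASK = -1  # sentinel for a masked position (assumption for this sketch)


def toy_predict(tokens, vocab_size, rng):
    """Hypothetical stand-in for a trained prior model.

    Returns one (token, confidence) pair per position, drawn at random.
    """
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def iterative_decode(num_tokens, vocab_size, steps, seed=0):
    """Fill a fully masked sequence over several steps.

    At each step every masked position receives a sampled token, and the
    least confident of the newly sampled tokens are masked again so that
    they can be re-predicted in the next step.
    """
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    for t in range(steps):
        preds = toy_predict(tokens, vocab_size, rng)
        newly_sampled = []  # (confidence, position) of tokens filled this step
        for i, (tok, conf) in enumerate(preds):
            if tokens[i] == MASK:
                tokens[i] = tok
                newly_sampled.append((conf, i))
        # Cosine schedule: how many positions remain masked after this step.
        # It reaches zero at the final step, so the result is fully decoded.
        ratio = math.cos(math.pi / 2 * (t + 1) / steps)
        n_mask = min(math.floor(num_tokens * ratio), len(newly_sampled))
        for _, i in sorted(newly_sampled)[:n_mask]:
            tokens[i] = MASK
    return tokens


decoded = iterative_decode(num_tokens=8, vocab_size=4, steps=5)
print(decoded)
```

Tokens accepted in earlier steps are never revisited in this sketch, which is the limitation that the later ESS stages are described as addressing.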

Vector Quantized Time Series Generation with a Bidirectional Prior Model

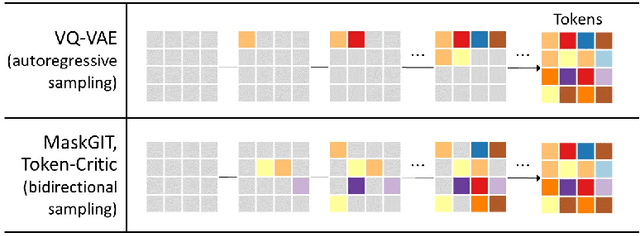

Mar 15, 2023

Abstract: Time series generation (TSG) studies have mainly focused on the use of Generative Adversarial Networks (GANs) combined with recurrent neural network (RNN) variants. However, the fundamental limitations and challenges of training GANs still remain. In addition, the RNN family typically has difficulties with temporal consistency between distant timesteps. Motivated by the successes in the image generation (IMG) domain, we propose TimeVQVAE, the first work, to our knowledge, that uses vector quantization (VQ) techniques to address the TSG problem. Moreover, the priors of the discrete latent spaces are learned with bidirectional transformer models that can better capture global temporal consistency. We also propose VQ modeling in a time-frequency domain, separated into low-frequency (LF) and high-frequency (HF). This allows us to retain important characteristics of the time series and, in turn, generate new synthetic signals that are of better quality, with sharper changes in modularity, than competing TSG methods. Our experimental evaluation is conducted on all datasets from the UCR archive, using well-established metrics in the IMG literature, such as Fréchet inception distance and inception scores. Our implementation is available on GitHub: https://github.com/ML4ITS/TimeVQVAE.
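The core vector quantization step that such methods build on can be shown with a small, generic sketch: each continuous latent vector is replaced by its nearest entry in a codebook, and the entry's index becomes the discrete token that a prior model is later trained on. The function name `quantize`, the toy codebook, and the squared Euclidean distance are illustrative assumptions and are not taken from the TimeVQVAE code base.

```python
def quantize(latents, codebook):
    """Map each latent vector to its nearest codebook entry.

    Nearness is measured with squared Euclidean distance. Returns the
    chosen code indices (the discrete tokens) and the quantized vectors.
    """
    indices = []
    quantized = []
    for z in latents:
        dists = [sum((a - b) ** 2 for a, b in zip(z, e)) for e in codebook]
        k = min(range(len(codebook)), key=dists.__getitem__)
        indices.append(k)
        quantized.append(list(codebook[k]))
    return indices, quantized


# Toy example: three 2-D latent vectors and a codebook with three entries.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]
latents = [[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]]
indices, quantized = quantize(latents, codebook)
print(indices)    # [0, 1, 2]
print(quantized)  # [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]
```

The gap between each latent vector and its chosen code is the compression loss inherent to quantization; a finer codebook reduces it at the cost of a larger token vocabulary for the prior model.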

Better Reasoning Behind Classification Predictions with BERT for Fake News Detection

Jul 23, 2022

Abstract: Fake news detection has become a major task as the amount of fake news on the internet has increased in recent years. Although many classification models based on statistical learning methods have been proposed and show good results, the reasoning behind their classification performance may not be sufficiently explained. Studies in self-supervised learning have highlighted that the quality of the representation (embedding) space matters and directly affects downstream task performance. In this study, the quality of the representation space is analyzed visually and analytically in terms of linear separability between classes on a real and fake news dataset. To further add interpretability to a classification model, a modification of Class Activation Mapping (CAM) is proposed. The modified CAM provides a CAM score for each word token, where the CAM score denotes the level of focus placed on that word token in making the prediction. Finally, it is shown that a naive BERT model topped with a learnable linear layer is enough to achieve robust performance while being compatible with CAM.

VNIbCReg: VICReg with Neighboring-Invariance and better-Covariance Evaluated on Non-stationary Seismic Signal Time Series

Apr 08, 2022

Abstract: One of the latest self-supervised learning (SSL) methods, VICReg, showed strong performance in both linear evaluation and fine-tuning evaluation. However, VICReg was proposed for computer vision: it learns by pulling together representations of random crops of an image while maintaining the representation space through variance and covariance losses. As a result, VICReg would be ineffective on non-stationary time series, where different parts/crops of the input should be encoded differently to account for the non-stationarity. Another recent SSL proposal, Temporal Neighborhood Coding (TNC), is effective for encoding non-stationary time series. This study shows that a combination of a VICReg-style method and TNC is very effective for SSL on non-stationary time series, where a non-stationary seismic signal time series is used as an evaluation dataset.

Reinforcement Learning-Based Automatic Berthing System

Dec 03, 2021

Abstract: Previous studies on automatic berthing systems based on artificial neural networks (ANNs) showed strong berthing performance when the ANN was trained on ship berthing data. However, because an ANN requires a large amount of training data to yield robust performance, ANN-based automatic berthing systems are limited by the difficulty of obtaining berthing data. To overcome this difficulty, this study proposes an automatic berthing system based on proximal policy optimization (PPO), a reinforcement learning (RL) algorithm. RL algorithms learn an optimal control policy through trial and error by interacting with a given environment and do not require any pre-collected training data. In the proposed PPO-based system, the control policy controls the revolutions per second (RPS) and rudder angle of a ship. Finally, it is shown that the proposed PPO-based automatic berthing system eliminates the need for obtaining a training dataset and shows great potential for actual berthing applications.

VIbCReg: Variance-Invariance-better-Covariance Regularization for Self-Supervised Learning on Time Series

Sep 02, 2021

Abstract: Self-supervised learning for image representations has recently had many breakthroughs with respect to linear evaluation and fine-tuning evaluation. These approaches rely on both cleverly crafted loss functions and training setups to avoid the feature collapse problem. In this paper, we improve on the recently proposed VICReg, which introduced a loss function that does not rely on specialized training loops to converge to useful representations. Our method improves on the covariance term proposed in VICReg, and in addition we augment the head of the architecture with an IterNorm layer that greatly accelerates convergence of the model. Our model achieves superior performance on linear evaluation and fine-tuning evaluation on a subset of the UCR time series classification archive and the PTB-XL ECG dataset.
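For context, the variance and covariance regularizers of the original VICReg can be sketched as follows. This shows only the baseline terms as commonly stated for VICReg (a hinge on the per-dimension standard deviation, and the sum of squared off-diagonal covariance entries divided by the embedding dimension). It does not reproduce the modified covariance term or the IterNorm layer of VIbCReg, whose details are not given in the abstract. The function names and the default values of `gamma` and `eps` are assumptions.

```python
import math


def variance_term(z, gamma=1.0, eps=1e-4):
    """Hinge loss that pushes the std of each embedding dimension above gamma.

    z is a batch of embeddings given as a list of equal-length lists.
    """
    n, d = len(z), len(z[0])
    total = 0.0
    for j in range(d):
        col = [row[j] for row in z]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / (n - 1)
        total += max(0.0, gamma - math.sqrt(var + eps))
    return total / d


def covariance_term(z):
    """Sum of squared off-diagonal covariance entries, divided by the dimension."""
    n, d = len(z), len(z[0])
    means = [sum(row[j] for row in z) / n for j in range(d)]
    total = 0.0
    for i in range(d):
        for j in range(d):
            if i != j:
                cov = sum(
                    (row[i] - means[i]) * (row[j] - means[j]) for row in z
                ) / (n - 1)
                total += cov ** 2
    return total / d


collapsed = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]  # every embedding identical
correlated = [[1.0, 1.0], [-1.0, -1.0]]           # two fully correlated dimensions
print(variance_term(collapsed))     # large penalty: no variance in any dimension
print(covariance_term(correlated))  # large penalty: the dimensions are redundant
```

The variance term penalizes collapse to a constant embedding, while the covariance term penalizes redundant dimensions; together they are what allows training without specialized mechanisms for avoiding collapse.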
