Dániel Szilágyi

Hamiltonian Monte Carlo for efficient Gaussian sampling: long and random steps

Sep 26, 2022

Abstract: Hamiltonian Monte Carlo (HMC) is a Markov chain algorithm for sampling from a high-dimensional distribution with density $e^{-f(x)}$, given access to the gradient of $f$. A particular case of interest is that of a $d$-dimensional Gaussian distribution with covariance matrix $\Sigma$, in which case $f(x) = x^\top \Sigma^{-1} x$. We show that HMC can sample from a distribution that is $\varepsilon$-close in total variation distance using $\widetilde{O}(\sqrt{\kappa} d^{1/4} \log(1/\varepsilon))$ gradient queries, where $\kappa$ is the condition number of $\Sigma$. Our algorithm uses long and random integration times for the Hamiltonian dynamics. This contrasts with (and was motivated by) recent results that give an $\widetilde\Omega(\kappa d^{1/2})$ query lower bound for HMC with fixed integration times, even for the Gaussian case.
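To make the idea of random integration times concrete, here is a minimal sketch of leapfrog HMC on a Gaussian target, using $f(x) = x^\top \Sigma^{-1} x$ as in the abstract (so the density $e^{-f(x)}$ has covariance $\Sigma/2$). It is not the paper's algorithm or its parameter schedule: the uniform distribution over integration times, the Metropolis accept/reject step, and the names `leapfrog`, `hmc_gaussian`, `step`, and `max_time` are all assumptions made for illustration.

```python
import numpy as np


def leapfrog(x, p, grad_f, step, n_steps):
    """Integrate H(x, p) = f(x) + |p|^2 / 2 with the leapfrog scheme."""
    x = x.copy()
    p = p - 0.5 * step * grad_f(x)  # initial half step for the momentum
    for i in range(n_steps):
        x = x + step * p  # full step for the position
        # Full momentum step, except a half step at the very end.
        scale = step if i < n_steps - 1 else 0.5 * step
        p = p - scale * grad_f(x)
    return x, p


def hmc_gaussian(sigma_inv, n_samples, step, max_time, rng):
    """Sample from the density proportional to exp(-x^T sigma_inv x).

    Each iteration draws a fresh integration time uniformly from
    [0, max_time] (an illustrative choice, not the paper's schedule).
    """
    d = sigma_inv.shape[0]

    def f(x):
        return x @ sigma_inv @ x

    def grad_f(x):
        return 2.0 * (sigma_inv @ x)

    x = np.zeros(d)
    samples = np.empty((n_samples, d))
    for k in range(n_samples):
        p = rng.standard_normal(d)  # resample the momentum
        t = rng.uniform(0.0, max_time)  # random integration time
        n_steps = max(1, int(np.ceil(t / step)))
        x_new, p_new = leapfrog(x, p, grad_f, step, n_steps)
        # Metropolis correction for the leapfrog discretization error.
        h_old = f(x) + 0.5 * (p @ p)
        h_new = f(x_new) + 0.5 * (p_new @ p_new)
        if np.log(rng.uniform()) < h_old - h_new:
            x = x_new
        samples[k] = x
    return samples
```

For example, calling `hmc_gaussian(np.linalg.inv(sigma), 4000, 0.2, 6.0, np.random.default_rng(0))` with `sigma = np.diag([1.0, 4.0])` should give samples whose empirical covariance is roughly `sigma / 2`. Each leapfrog step costs one gradient query, which is the quantity the bounds in the abstract count.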

Quantum algorithms for Second-Order Cone Programming and Support Vector Machines

Aug 23, 2019

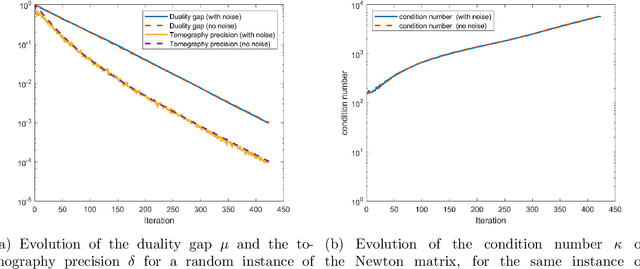

Abstract: Second-order cone programs (SOCPs) are a class of structured convex optimization problems that generalize linear programs. We present a quantum algorithm for SOCPs based on a quantum variant of the interior point method. Our algorithm outputs a classical solution to the SOCP with objective value $\epsilon$-close to the optimum in time $\widetilde{O} \left( n\sqrt{r} \frac{\zeta \kappa}{\delta^2} \log \left(1/\epsilon\right) \right)$, where $r$ is the rank and $n$ the dimension of the SOCP, $\delta$ bounds the distance from strict feasibility for the intermediate solutions, $\zeta$ is a parameter bounded by $\sqrt{n}$, and $\kappa$ is an upper bound on the condition number of matrices arising in the classical interior point method for SOCPs. We present applications to the support vector machine (SVM) problem in machine learning, which reduces to an SOCP. We provide experimental evidence that the quantum algorithm achieves an asymptotic speedup over classical SVM algorithms, with a running time of $\widetilde{O}(n^{2.557})$ for random SVM instances. The best known classical algorithms for such instances have complexity $\widetilde{O} \left( n^{\omega+0.5}\log(1/\epsilon) \right)$, where $\omega$ is the matrix multiplication exponent, which has a theoretical value of around $2.373$ but is closer to $3$ in practice.
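For reference, one common way to write an SOCP in standard form is sketched below. The symbols $c$, $A$, $b$ and the block sizes $n_i$ follow the usual textbook convention and are assumed here, not taken from the abstract; under this convention the rank $r$ is the number of cone blocks and the dimension is $n = \sum_{i=1}^{r} n_i$.

$$
\min_{x} \; c^\top x
\quad \text{s.t.} \quad
Ax = b, \quad
x = (x_1; \dots; x_r), \quad
x_i \in \mathcal{L}^{n_i},
\qquad
\mathcal{L}^{k} = \left\{ (t; u) \in \mathbb{R} \times \mathbb{R}^{k-1} : \|u\|_2 \le t \right\}.
$$

When every block has size $n_i = 1$, each cone constraint reduces to $x_i \ge 0$ and the problem is a linear program, which is the sense in which SOCPs generalize linear programs.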
