Cristopher Gómez

Visual SLAM-based Localization and Navigation for Service Robots: The Pepper Case

Nov 20, 2018

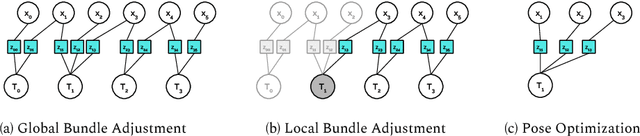

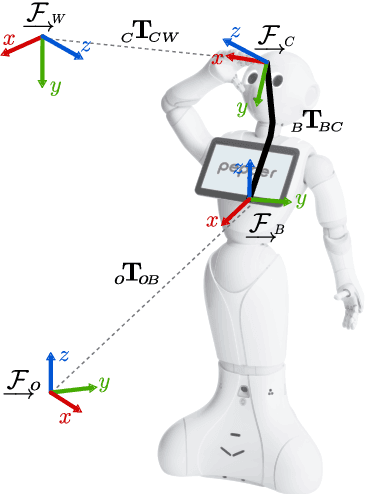

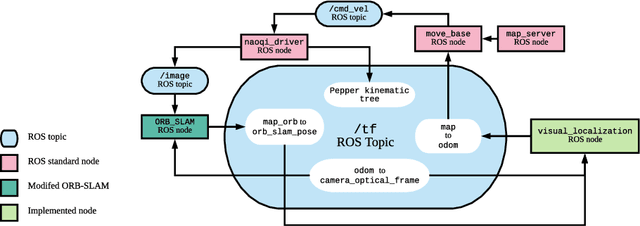

Abstract: We propose a visual SLAM-based localization and navigation system for service robots. Our system is built on top of the monocular ORB-SLAM system, extended to include wheel odometry in the estimation procedures. As a case study, the proposed system is validated on the Pepper robot, whose short-range LIDARs and RGB-D camera do not allow it to self-localize in large environments. The localization system is tested in navigation tasks with Pepper in two different environments: a medium-sized laboratory and a large hall.

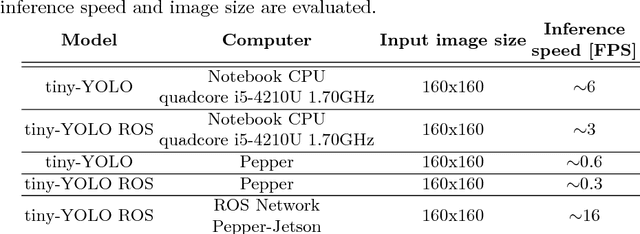

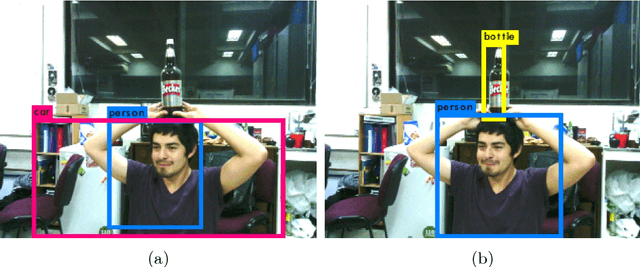

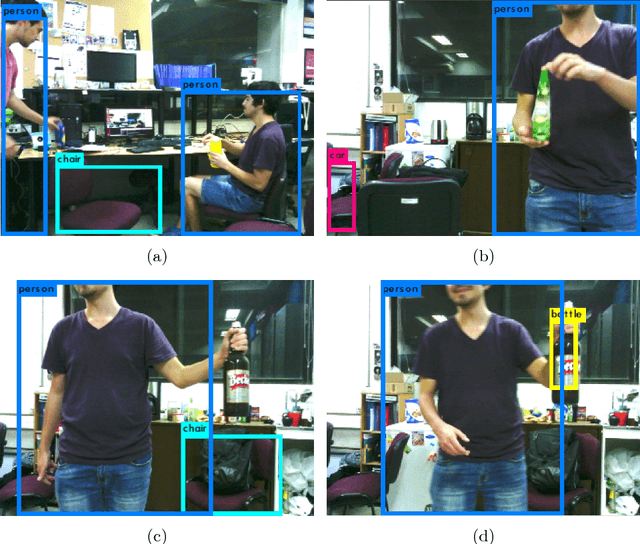

Near Real-Time Object Recognition for Pepper based on Deep Neural Networks Running on a Backpack

Nov 20, 2018

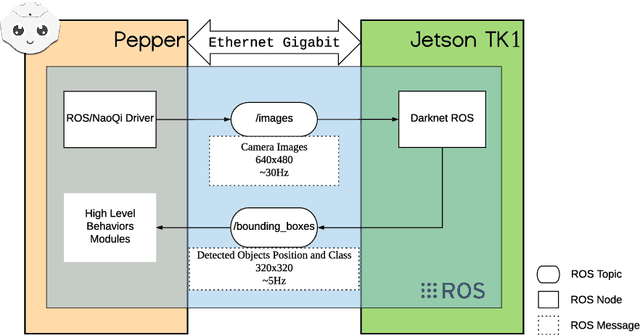

Abstract: The main goal of the paper is to provide Pepper with a near real-time object recognition system based on deep neural networks. The proposed system is based on YOLO (You Only Look Once), a deep neural network that detects and recognizes objects robustly and at high speed. Because YOLO cannot run on Pepper's internal computer in near real-time, we propose a backpack for Pepper that holds a Jetson TK1 card and a battery. Using this card, Pepper is able to robustly detect and recognize objects in images of 320x320 pixels at about 5 frames per second.

* Proceedings of the 22nd RoboCup International Symposium, Montreal, Canada, 2018
