Cristian Millán-Arias

Reinforcement Learning for UAV control with Policy and Reward Shaping

Dec 06, 2022

Abstract: In recent years, unmanned aerial vehicle (UAV) related technology has expanded knowledge in the area, bringing to light new problems and challenges that require solutions. Furthermore, because the technology allows processes usually carried out by people to be automated, it is in great demand in industrial sectors. The automation of these vehicles has been addressed in the literature, applying different machine learning strategies. Reinforcement learning (RL) is an automation framework that is frequently used to train autonomous agents. RL is a machine learning paradigm wherein an agent interacts with an environment to solve a given task. However, learning autonomously can be time-consuming, computationally expensive, and may not be practical in highly complex scenarios. Interactive reinforcement learning allows an external trainer to provide advice to an agent while it is learning a task. In this study, we set out to teach an RL agent to control a drone using reward-shaping and policy-shaping techniques simultaneously. Two simulated scenarios were proposed for the training: one without obstacles and one with obstacles. We also studied the influence of each technique. The results show that an agent trained simultaneously with both techniques obtains a lower reward than an agent trained using only a policy-based approach. Nevertheless, the agent achieves lower execution times and less dispersion during training.

* 9 pages, 9 figures, Preprint accepted at the 41st International Conference of the Chilean Computer Science Society, SCCC 2022, Santiago, Chile, 2022

Learning Proxemic Behavior Using Reinforcement Learning with Cognitive Agents

Aug 08, 2021

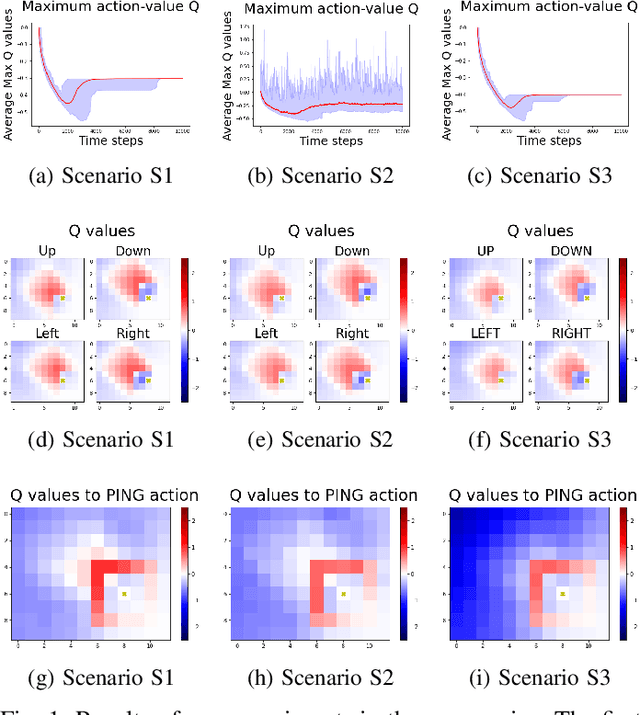

Abstract: Proxemics is a branch of non-verbal communication concerned with studying the spatial behavior of people and animals. This behavior is an essential part of the communication process because it delimits the acceptable distance for interacting with another being. With increasing research on human-agent interaction, new alternatives are needed that allow optimal communication while preventing agents from feeling uncomfortable. Several works consider proxemic behavior with cognitive agents, where human-robot interaction techniques and machine learning are implemented. However, these environments assume a fixed personal space that the agent knows in advance. In this work, we study how agents behave in environments based on proxemic behavior and propose a modified gridworld for that purpose. This environment considers an issuer with proxemic behavior that provides a disagreement signal to the agent. Our results show that the learning agent can identify the proxemic space when the issuer gives feedback about the agent's performance.
