Constance Beguier

SecureFedYJ: a safe feature Gaussianization protocol for Federated Learning

Oct 04, 2022

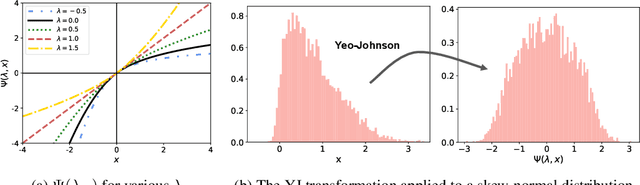

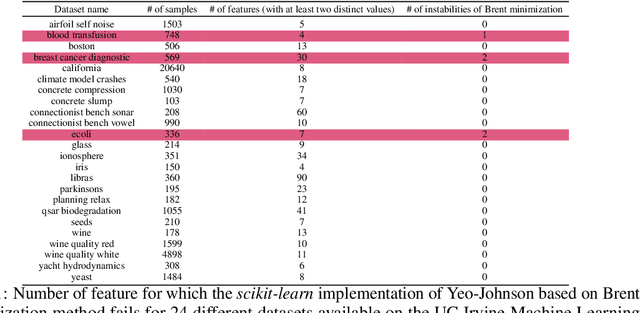

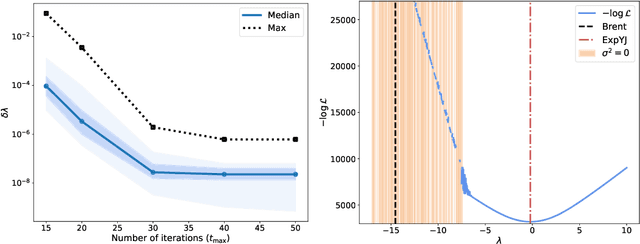

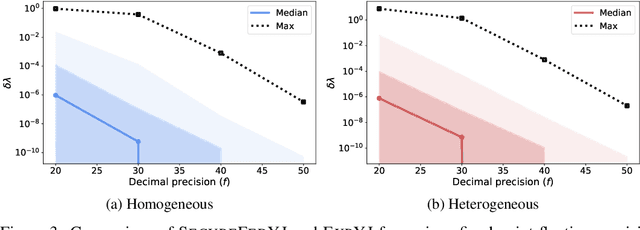

Abstract: The Yeo-Johnson (YJ) transformation is a standard parametrized per-feature unidimensional transformation often used to Gaussianize features in machine learning. In this paper, we investigate the problem of applying the YJ transformation in a cross-silo Federated Learning setting under privacy constraints. For the first time, we prove that the YJ negative log-likelihood is in fact convex, which allows us to optimize it with exponential search. We numerically show that the resulting algorithm is more stable than the state-of-the-art approach based on the Brent minimization method. Building on this simple algorithm and Secure Multiparty Computation routines, we propose SecureFedYJ, a federated algorithm that performs a pooled-equivalent YJ transformation without leaking more information than the final fitted parameters do. Quantitative experiments on real data demonstrate that, in addition to being secure, our approach reliably normalizes features across silos as well as if data were pooled, making it a viable approach for safe federated feature Gaussianization.
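The idea of fitting the YJ parameter by exponential search on a convex negative log-likelihood can be illustrated with a minimal, non-federated sketch. It is not the SecureFedYJ protocol: there is no secure computation, the slope sign is estimated by a finite difference, and the names `yeo_johnson`, `yj_nll`, `slope_sign`, and `fit_lambda`, as well as the tolerances, are assumptions made for illustration.

```python
import math

def yeo_johnson(x, lam):
    """Yeo-Johnson transform of a single value x with parameter lam."""
    if x >= 0:
        if abs(lam) < 1e-12:
            return math.log1p(x)
        return ((x + 1.0) ** lam - 1.0) / lam
    if abs(lam - 2.0) < 1e-12:
        return -math.log1p(-x)
    return -((1.0 - x) ** (2.0 - lam) - 1.0) / (2.0 - lam)

def yj_nll(xs, lam):
    """Negative log-likelihood of lam (up to an additive constant)."""
    ys = [yeo_johnson(x, lam) for x in xs]
    mean = sum(ys) / len(ys)
    var = sum((y - mean) ** 2 for y in ys) / len(ys)
    # Jacobian term: sum of sign(x) * log(|x| + 1)
    log_jac = sum(math.copysign(math.log1p(abs(x)), x) for x in xs)
    return 0.5 * len(xs) * math.log(var) - (lam - 1.0) * log_jac

def slope_sign(xs, lam, eps=1e-4):
    """Sign of the NLL slope at lam, estimated by a central difference (assumption)."""
    d = yj_nll(xs, lam + eps) - yj_nll(xs, lam - eps)
    return (d > 0) - (d < 0)

def fit_lambda(xs, tol=1e-3, max_doublings=6):
    """Bracket the minimizer by doubling the step, then bisect on the slope sign."""
    s = slope_sign(xs, 0.0)
    if s == 0:
        return 0.0
    inner, outer = 0.0, -float(s)  # step downhill from lam = 0
    for _ in range(max_doublings):
        if slope_sign(xs, outer) != s:  # slope sign flipped: minimum is bracketed
            break
        inner, outer = outer, 2.0 * outer
    while abs(outer - inner) > tol:
        mid = 0.5 * (inner + outer)
        if slope_sign(xs, mid) == s:
            inner = mid
        else:
            outer = mid
    return 0.5 * (inner + outer)
```

Because the search only ever uses the sign of the slope, convexity is what guarantees that the bracket contains the minimizer; a non-convex objective would give no such guarantee.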

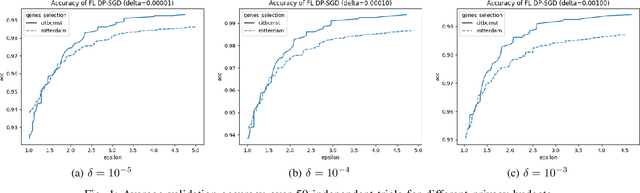

Differentially Private Federated Learning for Cancer Prediction

Jan 08, 2021

Abstract: Since 2014, the NIH-funded iDASH (integrating Data for Analysis, Anonymization, SHaring) National Center for Biomedical Computing has hosted yearly competitions on the topic of private computing for genomic data. For one track of the 2020 iteration of this competition, participants were challenged to produce an approach to federated learning (FL) training of genomic cancer prediction models using differential privacy (DP), with submissions ranked according to held-out test accuracy for a given set of DP budgets. More precisely, in this track, we are tasked with training a supervised model for the prediction of breast cancer occurrence from genomic data split between two virtual centers while ensuring data privacy with respect to model transfer via DP. In this article, we present our third-place submission to this competition. During the competition, we encountered two main challenges discussed in this article: i) ensuring correctness of the privacy budget evaluation and ii) achieving an acceptable trade-off between prediction performance and privacy budget.

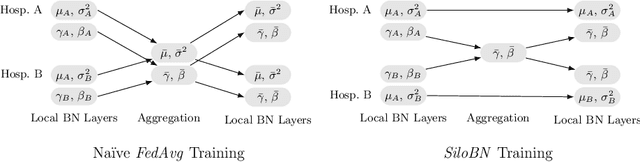

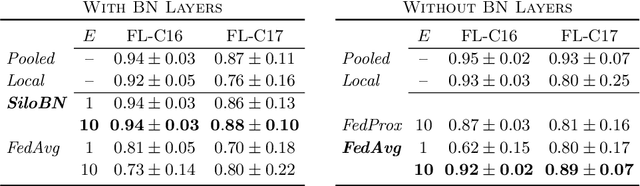

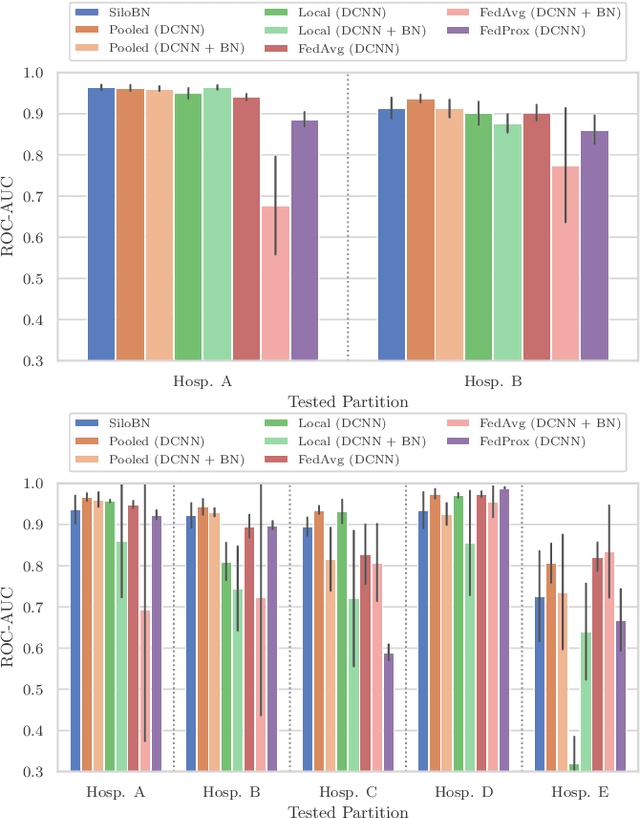

Siloed Federated Learning for Multi-Centric Histopathology Datasets

Aug 17, 2020

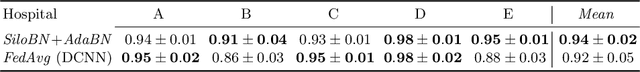

Abstract: While federated learning is a promising approach for training deep learning models over distributed sensitive datasets, it presents new challenges for machine learning, especially when applied in the medical domain where multi-centric data heterogeneity is common. Building on previous domain adaptation works, this paper proposes a novel federated learning approach for deep learning architectures via the introduction of local-statistic batch normalization (BN) layers, resulting in collaboratively trained, yet center-specific models. This strategy improves robustness to data heterogeneity while also reducing the potential for information leaks by not sharing the center-specific layer activation statistics. We benchmark the proposed method on the classification of tumorous histopathology image patches extracted from the Camelyon16 and Camelyon17 datasets. We show that our approach compares favorably to previous state-of-the-art methods, especially for transfer learning across datasets.
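A minimal sketch of what keeping BN statistics local could look like in one aggregation round: every parameter is averaged across centers except the BN activation statistics, which each center retains. The `running_mean` / `running_var` key suffixes (borrowed from a common deep learning naming convention), the plain unweighted average, the choice to share the learned BN scale and shift, and the function names are all assumptions for illustration, not details taken from the paper.

```python
# Hypothetical naming convention for BN activation statistics.
LOCAL_SUFFIXES = ("running_mean", "running_var")

def is_local(name):
    """True for entries that stay on the center and are never averaged."""
    return name.endswith(LOCAL_SUFFIXES)

def federated_round(center_states):
    """Average shared parameters across centers; keep BN statistics per center.

    center_states: list of dicts mapping parameter name -> list of floats.
    Returns one updated dict per center.
    """
    n_centers = len(center_states)
    shared_names = [name for name in center_states[0] if not is_local(name)]
    averaged = {
        name: [sum(vals) / n_centers
               for vals in zip(*(state[name] for state in center_states))]
        for name in shared_names
    }
    # Each center gets its own copy of the averaged parameters and keeps
    # its own local statistics untouched.
    return [
        {**state, **{name: list(vals) for name, vals in averaged.items()}}
        for state in center_states
    ]
```

After such a round the centers hold identical shared weights but different normalization statistics, which is one way to obtain models that are jointly trained yet center-specific.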

SAFER: Sparse secure Aggregation for FEderated leaRning

Jul 29, 2020

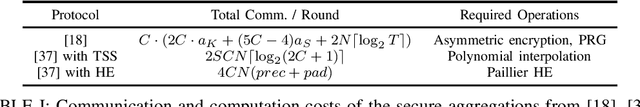

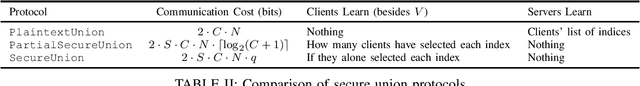

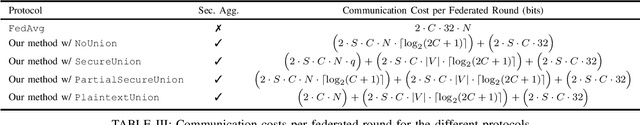

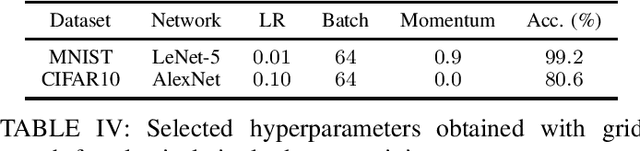

Abstract: Federated learning enables one to train a common machine learning model across separate, privately held datasets via distributed model training. During federated model training, only intermediate model parameters are transmitted to a central server which aggregates these models to create a new common model, thus exposing only intermediate model parameters rather than the training data itself. However, some attacks (e.g. membership inference) are able to infer properties of private data from these intermediate model parameters. Hence, performing the aggregation of these client-specific model parameters in a secure way is required. Additionally, communication cost is often the bottleneck of federated systems, especially for large neural networks. Limiting the number and the size of communications is therefore necessary to efficiently train large neural architectures. In this article, we present an efficient and secure protocol for performing secure aggregations over compressed model updates in the context of collaborative, few-party federated learning, a context common in the medical, healthcare, and biotechnical use cases of federated systems. By making compression-based federated techniques amenable to secure computation, we develop a secure aggregation protocol between multiple servers with very low communication and computation costs and without preprocessing overhead. Our experiments demonstrate the efficiency of this new approach for secure federated training of deep convolutional neural networks.
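To make the notion of secure aggregation between multiple servers concrete, here is a minimal sketch based on plain additive secret sharing. It is a generic textbook construction, not the SAFER protocol: it does not handle compressed or sparse updates, and `MODULUS`, `SCALE`, and all function names are hypothetical choices made for illustration.

```python
import secrets

MODULUS = 2 ** 61 - 1  # hypothetical ring size for the shares
SCALE = 10 ** 6        # hypothetical fixed-point precision

def encode(value):
    """Map a float to a fixed-point element of the ring."""
    return round(value * SCALE) % MODULUS

def decode(element):
    """Map a ring element back to a float, treating the upper half as negative."""
    if element > MODULUS // 2:
        element -= MODULUS
    return element / SCALE

def share(vector, n_servers):
    """Split a vector into n_servers additive shares that sum to its encoding."""
    shares = [[secrets.randbelow(MODULUS) for _ in vector]
              for _ in range(n_servers - 1)]
    last = [(encode(v) - sum(s[i] for s in shares)) % MODULUS
            for i, v in enumerate(vector)]
    return shares + [last]

def aggregate(client_updates, n_servers=2):
    """Sum client updates so that no single server sees an individual update."""
    dim = len(client_updates[0])
    server_totals = [[0] * dim for _ in range(n_servers)]
    for update in client_updates:
        # Each server receives one share per client and accumulates locally.
        for totals, client_share in zip(server_totals, share(update, n_servers)):
            for i, value in enumerate(client_share):
                totals[i] = (totals[i] + value) % MODULUS
    # Only the per-server totals are combined, revealing just the global sum.
    combined = [sum(column) % MODULUS for column in zip(*server_totals)]
    return [decode(c) for c in combined]
```

Each individual share is uniformly random, so a server learns nothing about a client's update unless all servers collude; only the aggregated sum is reconstructed.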
