Connor W. Herron

Joint-Space Control of a Structurally Elastic Humanoid Robot

Nov 18, 2024

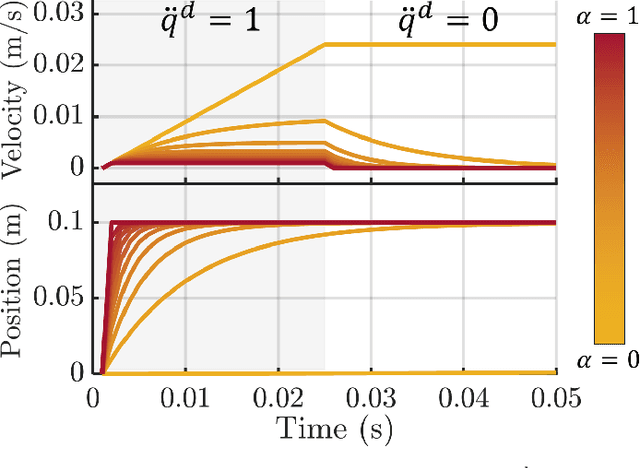

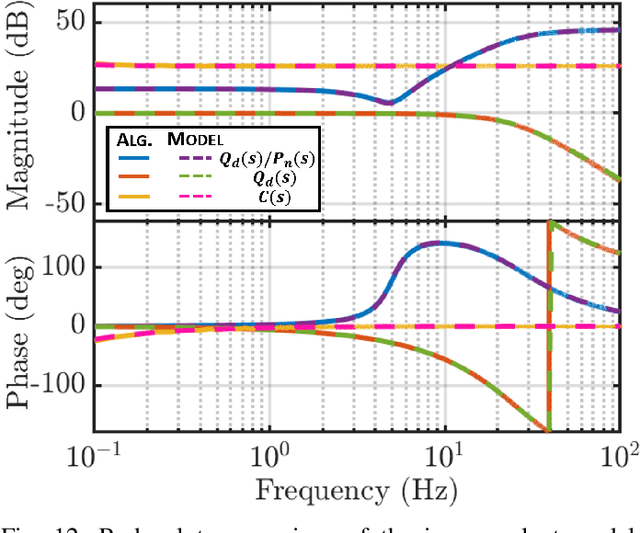

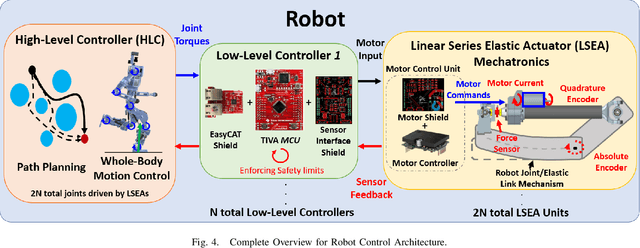

Abstract: This work presents the joint-control strategy for the humanoid robot PANDORA, whose structural components are designed to be compliant. As opposed to contemporary approaches, which place the elasticity inside the actuator housing, PANDORA's structural components are designed to be compliant under load or, in other words, structurally elastic. To preserve the rapid-design benefit of additive manufacturing, the joint-control strategy employs a disturbance observer (DOB) modeled on an ideal elastic actuator. This robust controller treats the model variation introduced by the structurally elastic components as a disturbance and eliminates the need for system identification of the 3D-printed parts. Mechanical design engineers can therefore iterate on the 3D-printed linkages without repeatedly retuning the joint controller. Two sets of hardware results are presented to validate the controller. The first set is collected on an ideal elastic actuator testbed that drives an unmodeled, 1 DoF weighted pendulum with a 10 kg mass. These results support the claim that the DOB can handle significant model variation. The second set comes from a robust balancing experiment conducted on the 12 DoF lower body of PANDORA. The robot maintains balance while an operator applies 50 N pushes to the pelvis, and actuator tracking results are presented for the left leg.
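The idea of lumping model variation into a single estimated disturbance can be illustrated with a minimal sketch. This is not PANDORA's controller: it assumes a generic first-order inertia plant `J * dv/dt = u + d` rather than an elastic actuator model, a first-order low-pass Q-filter, and forward-Euler integration. The function name `simulate_dob` and all parameter names and values are hypothetical.

```python
def simulate_dob(j_true, j_nominal=1.0, cutoff=50.0, disturbance=3.0,
                 u_cmd=0.0, dt=0.001, steps=2000):
    """Simulate a first-order disturbance observer (illustrative only).

    Plant (assumed):    j_true * dv/dt = u + disturbance
    Nominal model:      j_nominal * dv/dt = u
    Observer:           d_hat = Q(s) * (j_nominal * s * v - u),
                        with Q(s) = cutoff / (s + cutoff)
    The derivative is avoided by rewriting the observer as
        d_hat = cutoff * j_nominal * v - z,
        dz/dt = cutoff * (cutoff * j_nominal * v + u - z).
    Returns the final velocity and the final disturbance estimate.
    """
    v = 0.0      # measured velocity
    z = 0.0      # internal Q-filter state
    d_hat = 0.0  # estimated lumped disturbance
    for _ in range(steps):
        # Estimate the lumped disturbance from the current measurement.
        d_hat = cutoff * j_nominal * v - z
        # Cancel the estimate so the plant behaves like the nominal model.
        u = u_cmd - d_hat
        # Forward-Euler update of the filter state and the true plant.
        z += dt * cutoff * (cutoff * j_nominal * v + u - z)
        v += dt * (u + disturbance) / j_true
    return v, d_hat


# Matched model: the estimate converges to the applied disturbance.
v_matched, d_matched = simulate_dob(j_true=1.0)

# Inertia doubled relative to the nominal model: no retuning is done,
# and the observer still converges, only more slowly.
v_mismatch, d_mismatch = simulate_dob(j_true=2.0)
```

In this toy setting the nominal inertia is never updated, yet the constant disturbance is rejected in both cases, which mirrors (in a much simpler system) the claim that the observer tolerates model variation without re-identification.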

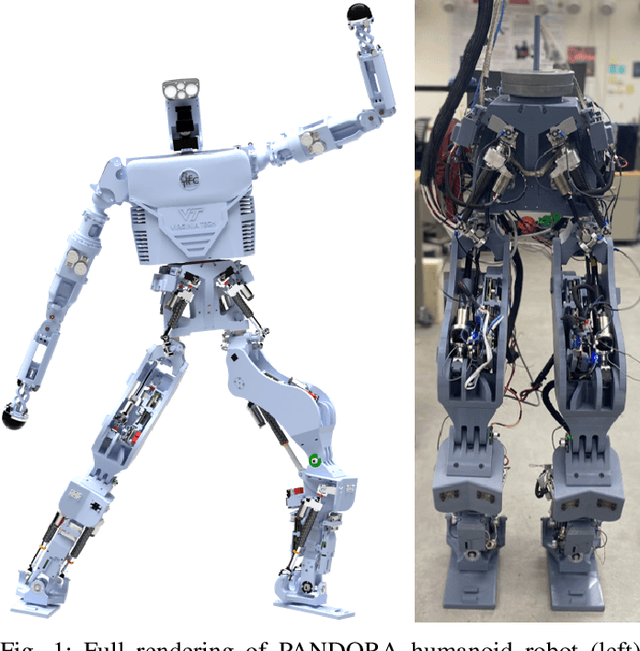

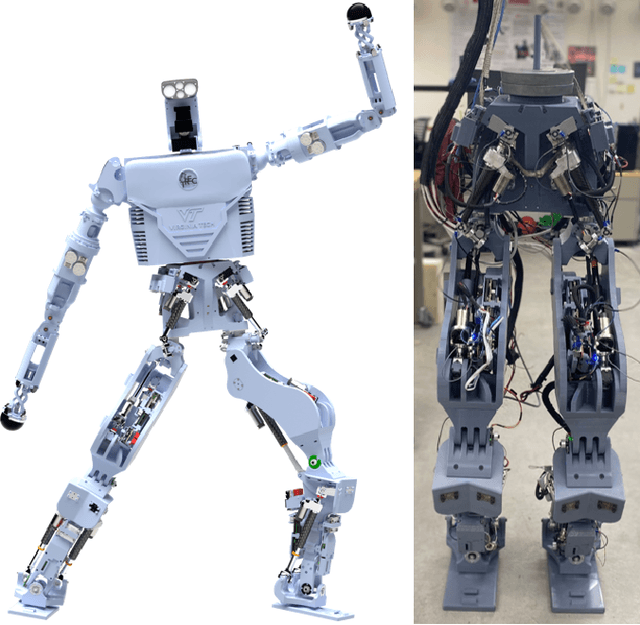

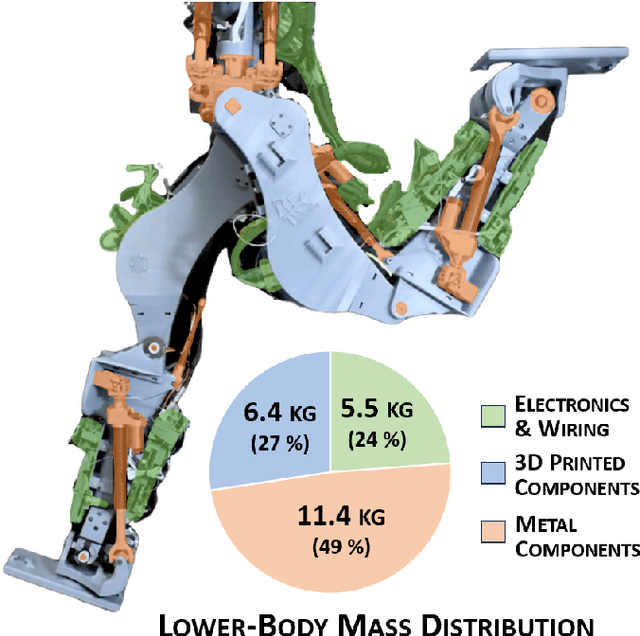

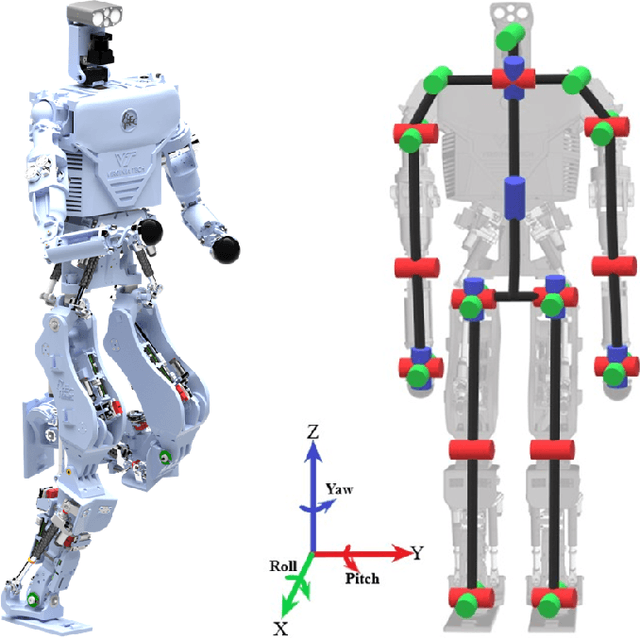

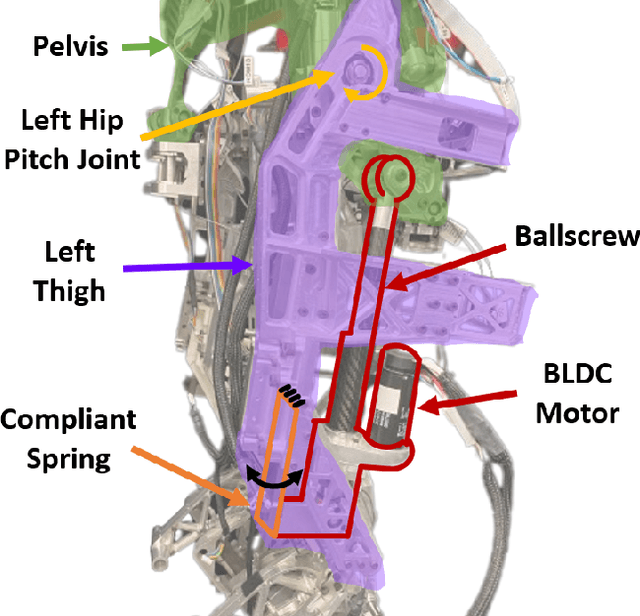

PANDORA: The Open-Source, Structurally Elastic Humanoid Robot

Jul 26, 2024

Abstract: This work presents the novel, open-source humanoid robot PANDORA, in which a majority of the structural elements are manufactured from 3D-printed compliant materials. As opposed to contemporary approaches that incorporate the elastic element into the actuator mechanisms, PANDORA is designed to be compliant under load or, in other words, structurally elastic. This design approach lowers manufacturing cost and time, design complexity, and assembly time, while introducing controls challenges in state estimation and in joint and whole-body control. This work gives an in-depth description of the mechanical and electrical subsystems, including the benefits and drawbacks of additive manufacturing, the usage and placement of sensors, and the networking between devices. In addition, the design of the structurally elastic components and their effects on overall performance are discussed from an estimation and control perspective. Finally, results are presented that demonstrate the robot completing a robust balancing objective in the presence of disturbances, as well as stepping behaviors.

Software Implementation of Digital Filtering via Tustin's Bilinear Transform

Jan 05, 2024

Abstract: The purpose of this work is to provide some notes on a software implementation for digital filtering via Tustin's Bilinear Transform. The first section discusses how to solve for the input and output coefficients by hand using a generalized approach called Horner's method. The second section presents some results of this generalized digital filtering approach using the IHMC Open Robotics Software stack and Simulation Construction Set 2. This generalized approach can solve for the digital coefficients for any causal transfer function.
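As a rough illustration of what such a coefficient solver computes, the sketch below substitutes `s = (2/T)(z - 1)/(z + 1)` into a continuous transfer function and expands the result by direct polynomial multiplication. It is not the Horner's-method procedure described in the notes and does not use the IHMC software stack; the function names `tustin` and `apply_filter` are hypothetical.

```python
def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (descending powers)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out


def poly_pow(p, n):
    """Raise polynomial p to the non-negative integer power n."""
    out = [1.0]
    for _ in range(n):
        out = poly_mul(out, p)
    return out


def tustin(num_s, den_s, dt):
    """Discretize num_s(s)/den_s(s) with the bilinear (Tustin) transform.

    Coefficients are in descending powers of s. Returns (b, a), the input
    and output coefficients in ascending powers of z^-1, with a[0] == 1.
    """
    order = len(den_s) - 1
    if len(num_s) - 1 > order:
        raise ValueError("transfer function must be causal (proper)")
    k = 2.0 / dt

    def substitute(coeffs):
        # Each s^p becomes k^p (z - 1)^p (z + 1)^(order - p) after
        # multiplying numerator and denominator by (z + 1)^order.
        total = [0.0] * (order + 1)
        degree = len(coeffs) - 1
        for idx, c in enumerate(coeffs):
            p = degree - idx
            term = poly_mul(poly_pow([1.0, -1.0], p),
                            poly_pow([1.0, 1.0], order - p))
            for j, t in enumerate(term):
                total[j] += c * k ** p * t
        return total

    b, a = substitute(num_s), substitute(den_s)
    return [x / a[0] for x in b], [x / a[0] for x in a]


def apply_filter(b, a, samples):
    """Run the difference equation y[n] = sum(b*x) - sum(a[1:]*y)."""
    x_hist = [0.0] * len(b)
    y_hist = [0.0] * (len(a) - 1)
    out = []
    for x in samples:
        x_hist = [x] + x_hist[:-1]
        y = sum(bi * xi for bi, xi in zip(b, x_hist))
        y -= sum(ai * yi for ai, yi in zip(a[1:], y_hist))
        y_hist = [y] + y_hist[:-1]
        out.append(y)
    return out


# Example: first-order low-pass 10 / (s + 10) sampled at 100 Hz.
b, a = tustin([10.0], [1.0, 10.0], dt=0.01)
step_response = apply_filter(b, a, [1.0] * 2000)
```

For the first-order low-pass example, the result can be checked by hand: with `wc*T = 0.1`, both input coefficients equal `0.1/2.1` and the output coefficient is `-1.9/2.1`.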

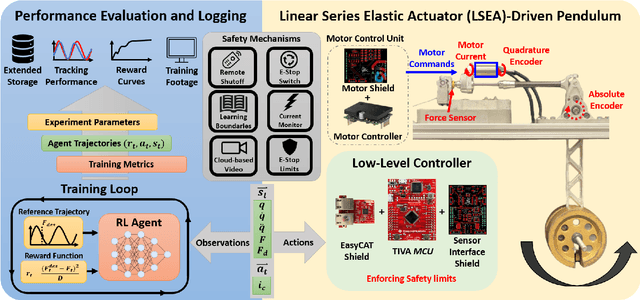

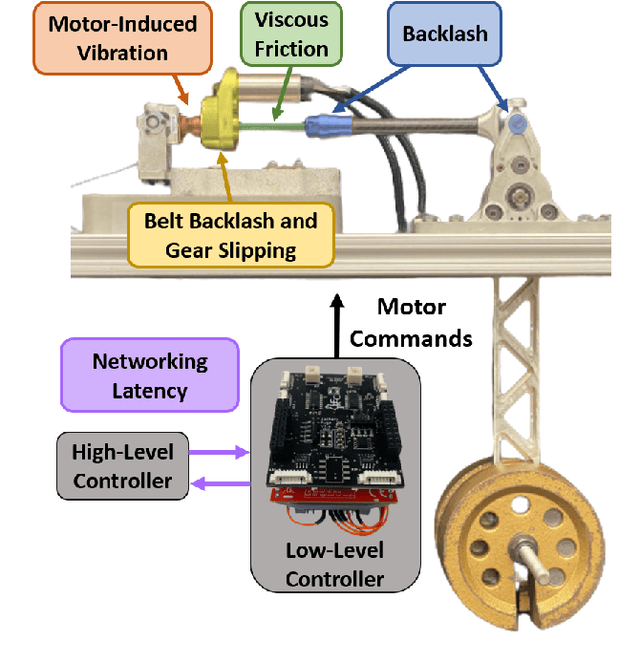

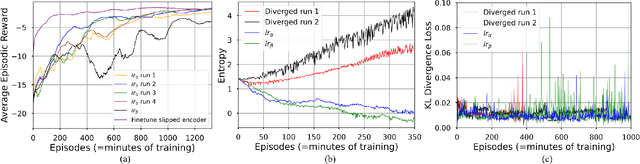

Real-Time Model-Free Deep Reinforcement Learning for Force Control of a Series Elastic Actuator

Apr 11, 2023

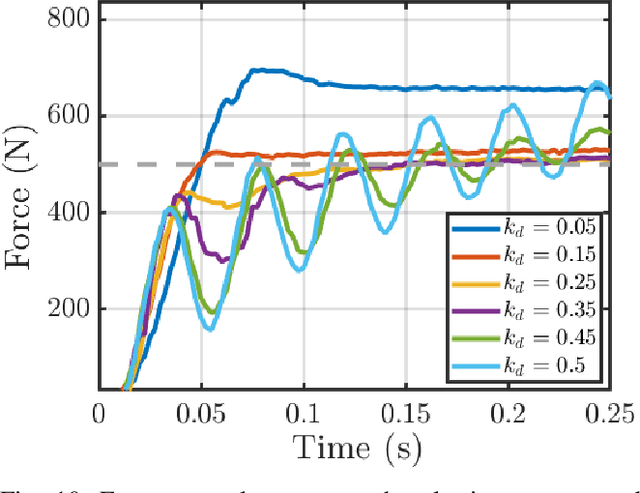

Abstract: Many state-of-the-art robotic applications utilize series elastic actuators (SEAs) with closed-loop force control to achieve complex tasks such as walking, lifting, and manipulation. Model-free PID control methods are more prone to instability due to nonlinearities in the SEA, whereas cascaded model-based robust controllers can remove these effects to achieve stable force control. However, these model-based methods require detailed investigations to characterize the system accurately. Deep reinforcement learning (DRL) has proved to be an effective model-free method for continuous control tasks, but few works deal with learning on hardware. This paper describes the process of training a DRL policy on the hardware of an SEA pendulum system to track force control trajectories from 0.05 to 0.35 Hz at 50 N amplitude using the Proximal Policy Optimization (PPO) algorithm. Safety mechanisms are developed and used to train the policy for 12 hours (overnight) without an operator present, within the full 21-hour training period. The tracking performance is evaluated, showing an improvement of 25 N in mean absolute error when comparing the first 18 min. of training to the full 21 hours for a 50 N amplitude, 0.1 Hz sinusoidal desired force trajectory. Finally, the DRL policy exhibits better tracking and stability margins than a model-free PID controller for a 50 N chirp force trajectory.
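The tracking metric quoted above, mean absolute error against a sinusoidal desired force, can be sketched as follows. Only the 50 N amplitude and 0.1 Hz frequency come from the abstract; the sample period, duration, and function names are assumptions, and no measured data from the paper is reproduced here.

```python
import math


def sinusoid_reference(amplitude_n, frequency_hz, duration_s, dt):
    """Desired force trajectory F(t) = A * sin(2*pi*f*t), sampled every dt."""
    n = int(round(duration_s / dt))
    return [amplitude_n * math.sin(2.0 * math.pi * frequency_hz * i * dt)
            for i in range(n)]


def mean_absolute_error(desired, measured):
    """Average of |desired - measured| over equally long, non-empty signals."""
    if not desired or len(desired) != len(measured):
        raise ValueError("signals must be non-empty and of equal length")
    return sum(abs(d - m) for d, m in zip(desired, measured)) / len(desired)


# 50 N amplitude, 0.1 Hz sinusoid (from the abstract); 10 s at 100 Hz is assumed.
desired_force = sinusoid_reference(50.0, 0.1, duration_s=10.0, dt=0.01)

# Placeholder "measurement" with a constant 2 N bias, for illustration only.
measured_force = [f + 2.0 for f in desired_force]
mae = mean_absolute_error(desired_force, measured_force)
```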
