Colin M. Potts

Potential-Based Reward Shaping For Intrinsic Motivation

Feb 12, 2024

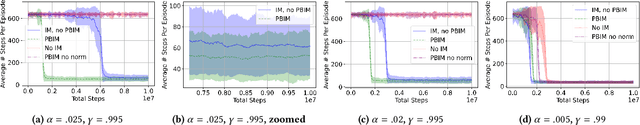

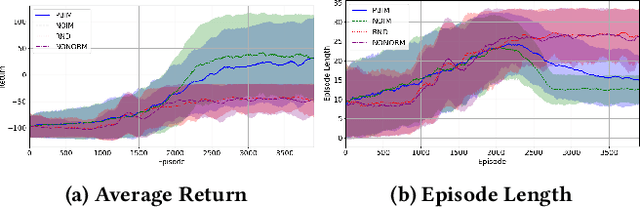

Abstract:Recently there has been a proliferation of intrinsic motivation (IM) reward-shaping methods to learn in complex and sparse-reward environments. These methods can often inadvertently change the set of optimal policies in an environment, leading to suboptimal behavior. Previous work on mitigating the risks of reward shaping, particularly through potential-based reward shaping (PBRS), has not been applicable to many IM methods, as they are often complex, trainable functions themselves, and therefore dependent on a wider set of variables than the traditional reward functions that PBRS was developed for. We present an extension to PBRS that we prove preserves the set of optimal policies under a more general set of functions than has been previously proven. We also present {\em Potential-Based Intrinsic Motivation} (PBIM), a method for converting IM rewards into a potential-based form that is useable without altering the set of optimal policies. Testing in the MiniGrid DoorKey and Cliff Walking environments, we demonstrate that PBIM successfully prevents the agent from converging to a suboptimal policy and can speed up training.

Modeling Risk in Reinforcement Learning: A Literature Mapping

Dec 08, 2023

Abstract:Safe reinforcement learning deals with mitigating or avoiding unsafe situations by reinforcement learning (RL) agents. Safe RL approaches are based on specific risk representations for particular problems or domains. In order to analyze agent behaviors, compare safe RL approaches, and effectively transfer techniques between application domains, it is necessary to understand the types of risk specific to safe RL problems. We performed a systematic literature mapping with the objective to characterize risk in safe RL. Based on the obtained results, we present definitions, characteristics, and types of risk that hold on multiple application domains. Our literature mapping covers literature from the last 5 years (2017-2022), from a variety of knowledge areas (AI, finance, engineering, medicine) where RL approaches emphasize risk representation and management. Our mapping covers 72 papers filtered systematically from over thousands of papers on the topic. Our proposed notion of risk covers a variety of representations, disciplinary differences, common training exercises, and types of techniques. We encourage researchers to include explicit and detailed accounts of risk in future safe RL research reports, using this mapping as a starting point. With this information, researchers and practitioners could draw stronger conclusions on the effectiveness of techniques on different problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge