Cindy Tseng

Peeking Into The Future For Contextual Biasing

Dec 19, 2025

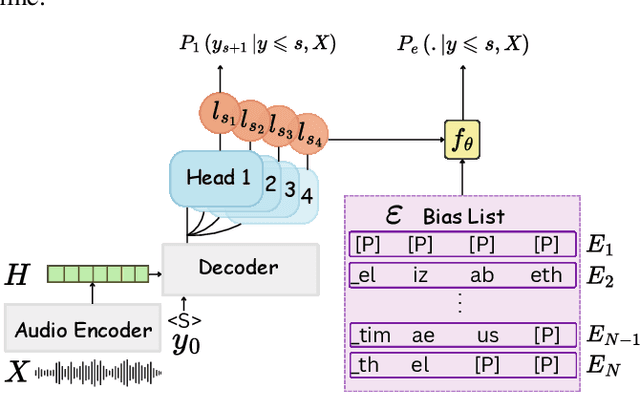

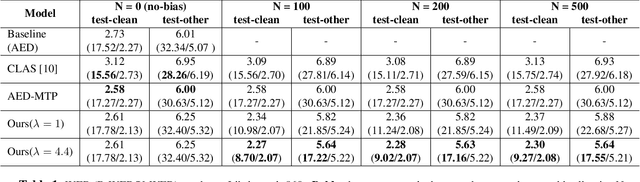

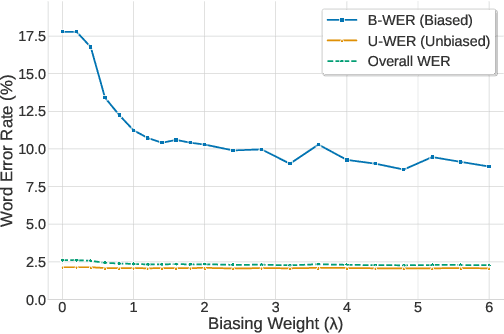

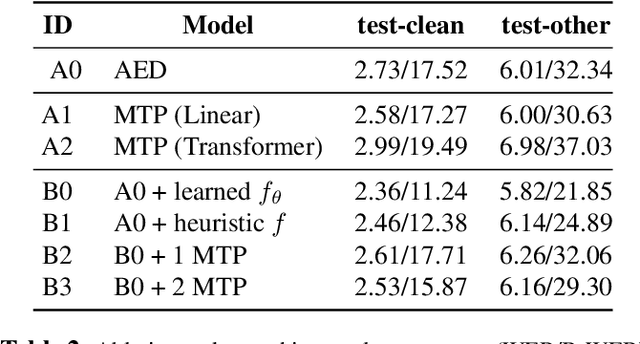

Abstract: While end-to-end (E2E) automatic speech recognition (ASR) models excel at general transcription, they struggle to recognize rare or unseen named entities (e.g., contact names, locations), which are critical for downstream applications like virtual assistants. In this paper, we propose a contextual biasing method for attention-based encoder-decoder (AED) models using a list of candidate named entities. Instead of predicting only the next token, we simultaneously predict multiple future tokens, enabling the model to "peek into the future" and score potential candidate entities in the entity list. Moreover, our approach leverages the multi-token prediction logits directly, without requiring additional entity encoders or cross-attention layers, significantly reducing architectural complexity. Experiments on Librispeech demonstrate that our approach achieves up to 50.34% relative improvement in named entity word error rate compared to the baseline AED model.
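
The idea of scoring entity candidates from multi-token prediction logits can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the toy logits, and the length-normalised sum of log-probabilities are all assumptions made for illustration, and the abstract does not state the actual scoring rule.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one list of vocabulary logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def score_entities(future_logits, entities):
    """Score each candidate entity against multi-token prediction logits.

    future_logits[k] holds the (hypothetical) vocabulary logits for the
    token k steps ahead. entities maps a name to its token ids.
    The score is an assumed rule: the mean log-probability of the
    entity's tokens at the matching future positions.
    """
    log_probs = [log_softmax(row) for row in future_logits]
    scores = {}
    for name, token_ids in entities.items():
        n = min(len(token_ids), len(log_probs))
        if n == 0:
            continue  # nothing to score for an empty entity
        total = sum(log_probs[k][token_ids[k]] for k in range(n))
        scores[name] = total / n
    return scores


# Toy example: vocabulary of 4 tokens, 2 future positions.
future_logits = [
    [2.0, 0.1, 0.1, 0.1],  # next token: token 0 is most likely
    [0.1, 0.1, 3.0, 0.1],  # token after that: token 2 is most likely
]
entities = {"alice": [0, 2], "bob": [1, 3]}
scores = score_entities(future_logits, entities)
best = max(scores, key=scores.get)
```

Because the scores are read directly from the prediction logits, a sketch like this needs no separate entity encoder, which matches the motivation given in the abstract.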

Chunk Based Speech Pre-training with High Resolution Finite Scalar Quantization

Sep 19, 2025

Abstract: Low-latency human-machine speech communication has become increasingly necessary as speech technology has advanced quickly over the last decade. One of the primary factors behind this advancement is self-supervised learning. Most self-supervised learning algorithms are designed under a full-utterance assumption, and compromises have to be made when partial utterances are presented, which is common in streaming applications. In this work, we propose a chunk-based self-supervised learning (Chunk SSL) algorithm as a unified solution for both streaming and offline speech pre-training. Chunk SSL is optimized with the masked prediction loss, and the acoustic encoder is encouraged to restore the indices of the masked speech frames with help from unmasked frames in the same chunk and preceding chunks. A copy-and-append data augmentation approach is proposed to conduct efficient chunk-based pre-training. Chunk SSL utilizes a finite scalar quantization (FSQ) module to discretize input speech features, and our study shows that a high-resolution FSQ codebook, i.e., a codebook with a vocabulary size of up to a few million, is beneficial for transferring knowledge from the pre-training task to the downstream tasks. A group masked prediction loss is employed during pre-training to alleviate the high memory and computation cost introduced by the large codebook. The proposed approach is examined in two speech-to-text tasks, i.e., speech recognition and speech translation. Experimental results on the Librispeech and Must-C datasets show that the proposed method achieves very competitive results for speech-to-text tasks in both streaming and offline modes.
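
To make the FSQ step concrete, here is a simplified sketch of finite scalar quantization in general, not the Chunk SSL module itself. The `levels` list is hypothetical (the abstract does not give the paper's configuration), and the bounding rule used here (tanh mapped onto `0..L-1`) is a simplification of the usual FSQ formulation.

```python
import math


def fsq_quantize(z, levels):
    """Quantize each dimension of z to one of levels[i] integer values.

    Each value is squashed into (0, 1) with tanh and then rounded onto
    the grid 0..levels[i]-1. This is a simplified bounding rule.
    """
    digits = []
    for value, n_levels in zip(z, levels):
        unit = (math.tanh(value) + 1.0) / 2.0
        digits.append(round(unit * (n_levels - 1)))
    return digits


def fsq_index(digits, levels):
    """Combine per-dimension digits into one codebook index (mixed radix)."""
    index, base = 0, 1
    for digit, n_levels in zip(digits, levels):
        index += digit * base
        base *= n_levels
    return index


# Hypothetical configuration: the implicit codebook size is the product
# of the per-dimension level counts, so it grows quickly with dimension.
levels = [8, 8, 8, 5, 5, 5]
codebook_size = math.prod(levels)  # 64000

digits = fsq_quantize([0.3, -1.2, 2.0, 0.8, -0.4, 1.5], levels)
index = fsq_index(digits, levels)
```

Since the codebook is implicit in the level counts, a vocabulary in the millions only requires a few more dimensions or levels, which is why a grouped loss over subsets of dimensions can reduce the cost of the output layer.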

Transducer Consistency Regularization for Speech to Text Applications

Oct 09, 2024

Abstract: Consistency regularization is a commonly used practice that encourages a model to generate consistent representations from distorted input features and thereby improves generalization. It yields significant improvements in various speech applications that are optimized with the cross-entropy criterion. However, it is not straightforward to apply consistency regularization to transducer-based approaches, which are widely adopted for speech applications due to their competitive performance and streaming capability. The main challenge comes from the vast alignment space of the transducer optimization criterion: not all alignments within that space contribute equally to model optimization. In this study, we present Transducer Consistency Regularization (TCR), a consistency regularization method for transducer models. We apply distortions such as spec augmentation and dropout to create different data views and minimize the difference between their output distributions. We utilize occupational probabilities to weight the transducer output distributions, so that only alignments close to oracle alignments contribute to model learning. Our experiments show that the proposed method is superior to other consistency regularization implementations and reduces word error rate (WER) by 4.3% relative to a strong baseline on the Librispeech dataset.
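
A weighted consistency loss of the kind described above might look like the following sketch. It is an illustration under stated assumptions, not the TCR implementation: the symmetric KL divergence, the flat list of lattice nodes, and the precomputed `weights` (standing in for occupational probabilities) are all hypothetical choices.

```python
import math


def kl(p, q):
    """KL(p || q) for two discrete distributions with strictly positive q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def weighted_consistency_loss(view_a, view_b, weights):
    """Weighted symmetric KL between two views of the output distributions.

    view_a and view_b hold one distribution per lattice node, produced
    from two distorted copies of the same input. weights holds one
    assumed weight per node; nodes with weight 0 are ignored.
    """
    total = 0.0
    for p, q, w in zip(view_a, view_b, weights):
        total += w * 0.5 * (kl(p, q) + kl(q, p))
    return total


# Two lattice nodes: the views agree on the first and differ on the second.
view_a = [[0.7, 0.3], [0.9, 0.1]]
view_b = [[0.7, 0.3], [0.5, 0.5]]

loss_uniform = weighted_consistency_loss(view_a, view_b, [1.0, 1.0])
loss_masked = weighted_consistency_loss(view_a, view_b, [1.0, 0.0])
```

With the second node weighted to zero, its disagreement no longer contributes, which mirrors the stated goal of letting only nodes near the oracle alignment drive learning.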
