Chung Hyuk Park

DXM-TransFuse U-net: Dual Cross-Modal Transformer Fusion U-net for Automated Nerve Identification

Feb 27, 2022

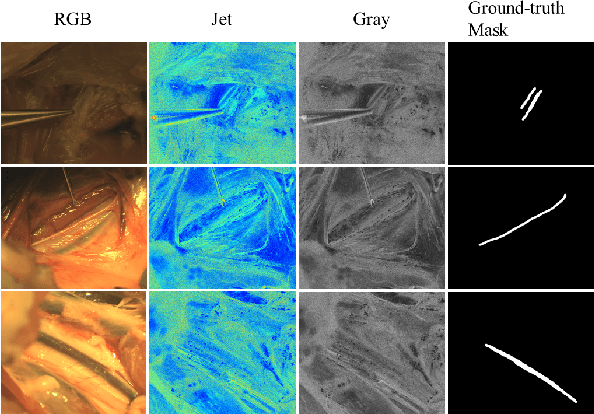

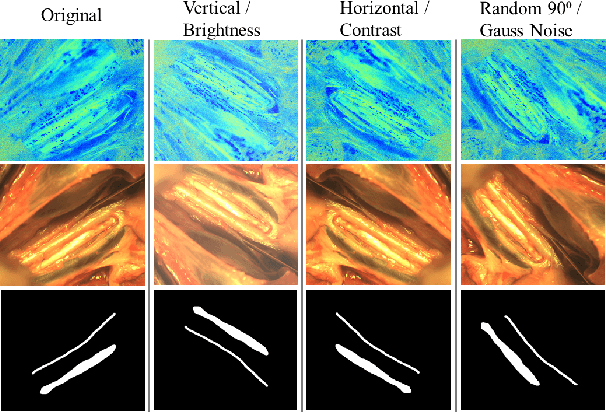

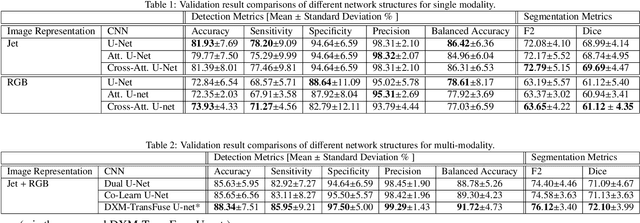

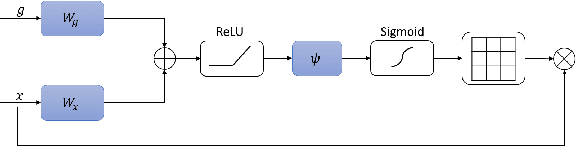

Abstract: Accurate nerve identification is critical during surgical procedures for preventing damage to nerve tissue. Nerve injuries can lead to long-term detrimental effects for patients as well as financial burdens. In this study, we develop a deep-learning framework based on the U-Net architecture, with a Transformer-block-based fusion module at the bottleneck, to identify nerve tissue from a multi-modal optical imaging system. By extracting the feature maps of each modality independently and using each modality's information for cross-modal interactions, we aim to provide a solution that further increases the effectiveness of such imaging systems in enabling noninvasive intraoperative nerve identification.

A MultiModal Social Robot Toward Personalized Emotion Interaction

Oct 08, 2021

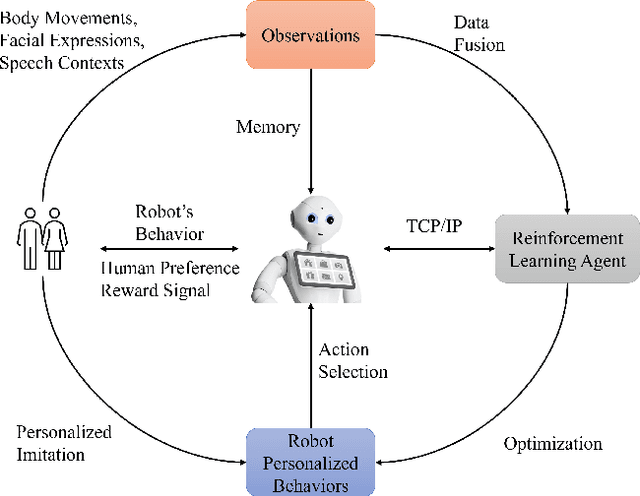

Abstract: Human emotions are expressed through multiple modalities, including verbal and non-verbal information. Moreover, the affective state of a human user can indicate the level of engagement and the success of an interaction, making it suitable for the robot to use as a reward factor when optimizing its behaviors through interaction. This study demonstrates a multimodal human-robot interaction (HRI) framework that uses reinforcement learning to enhance the robotic interaction policy and personalize emotional interaction for a human user. The goal is to apply this framework in social scenarios so that robots can produce more natural and engaging HRI.

An Interactive Robotic Framework to Facilitate Sensory Experiences for Children with ASD

Jan 03, 2019

Abstract: The diagnosis of Autism Spectrum Disorder (ASD) in children is commonly accompanied by a diagnosis of sensory processing disorders. Abnormalities are usually reported in multiple sensory processing domains, showing a higher prevalence of unusual responses, particularly to tactile, auditory and visual stimuli. This paper discusses a novel robot-based framework designed to target sensory difficulties faced by children with ASD in a controlled setting. The setup consists of a number of sensory stations, together with robotic agents that navigate the stations and interact with the stimuli as they are presented. These stimuli are designed to resemble real-world scenarios that form a common part of one's everyday experiences. Given the strong interest of children with ASD in technology in general and robots in particular, we attempt to use our robotic platform to demonstrate socially acceptable responses to the stimuli in an interactive, pedagogical setting that encourages the child's social, motor and vocal skills, while providing a diverse sensory experience. A user study was conducted to evaluate the efficacy of the proposed framework, with a total of 18 participants (5 with ASD and 13 typically developing) between the ages of 4 and 12 years. We describe our methods of data collection, the coding of video data, and the analysis of the results obtained from the study. We also discuss the limitations of the current work and detail our plans for future work to improve the validity of the obtained results.
