Baijun Xie

DXM-TransFuse U-net: Dual Cross-Modal Transformer Fusion U-net for Automated Nerve Identification

Feb 27, 2022

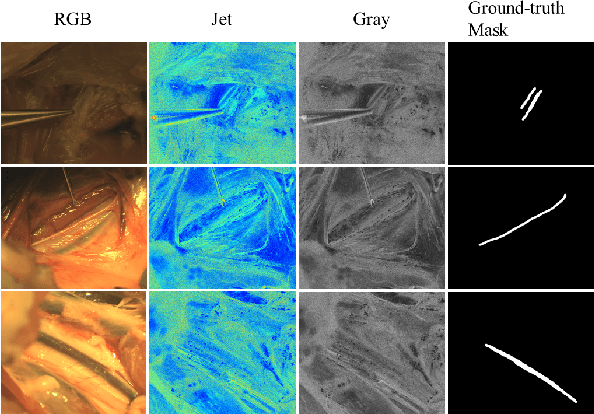

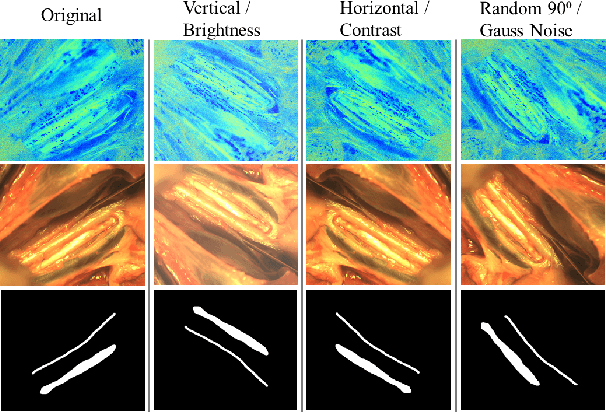

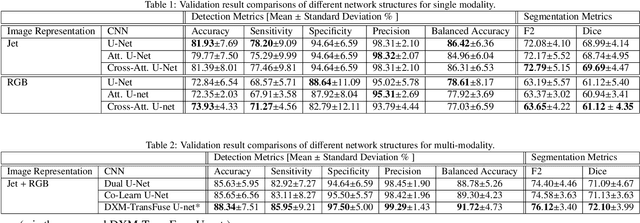

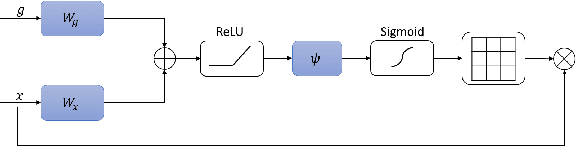

Abstract: Accurate nerve identification is critical during surgical procedures to prevent damage to nerve tissue. Nerve injuries can lead to long-term detrimental effects for patients as well as financial burdens. In this study, we develop a deep-learning framework based on the U-Net architecture, with a Transformer-block-based fusion module at the bottleneck, to identify nerve tissue from a multi-modal optical imaging system. By extracting the feature maps of each modality independently and using each modality's information for cross-modal interactions, we aim to provide a solution that further increases the effectiveness of such imaging systems for noninvasive intraoperative nerve identification.

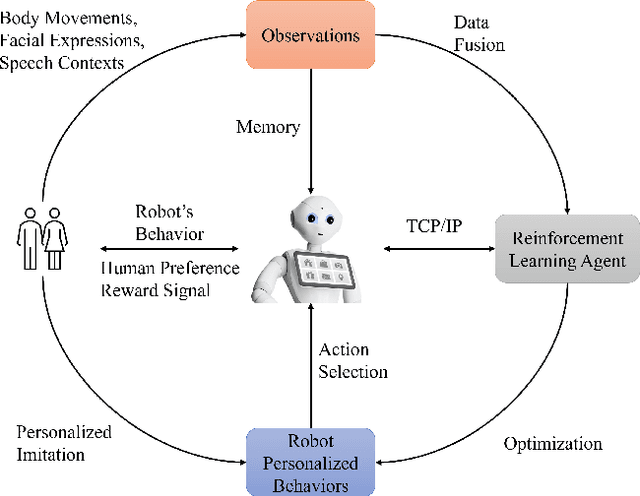

A MultiModal Social Robot Toward Personalized Emotion Interaction

Oct 08, 2021

Abstract: Human emotions are expressed through multiple modalities, including verbal and non-verbal information. Moreover, the affective states of human users can indicate the level of engagement and the success of an interaction, which makes them suitable as a reward signal for optimizing robotic behaviors through interaction. This study demonstrates a multimodal human-robot interaction (HRI) framework with reinforcement learning to enhance the robotic interaction policy and personalize emotional interaction for a human user. The goal is to apply this framework in social scenarios so that robots can produce more natural and engaging interactions.
