A MultiModal Social Robot Toward Personalized Emotion Interaction

Oct 08, 2021

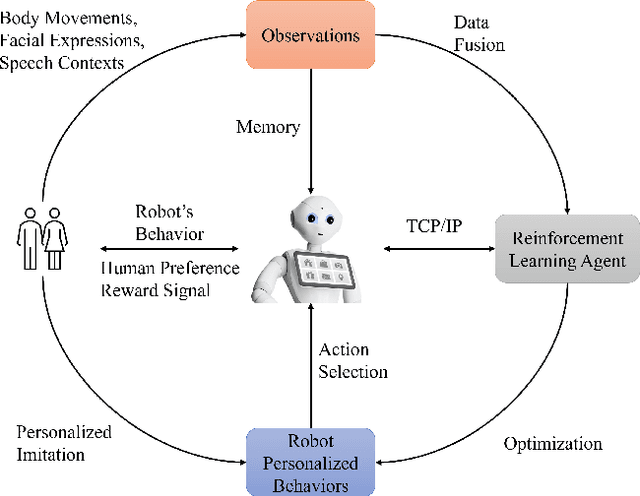

Human emotions are expressed through multiple modalities, carrying both verbal and non-verbal information. Moreover, a user's affective state can serve as an indicator of engagement and of how successful an interaction is, which makes it suitable as a reward signal for the robot to optimize its behavior through interaction. This study demonstrates a multimodal human-robot interaction (HRI) framework that uses reinforcement learning to improve the robot's interaction policy and to personalize emotional interaction for a human user. The goal is to apply this framework in social scenarios so that robots can produce more natural and engaging HRI.

* Presented at AI-HRI symposium as part of AAAI-FSS 2021

(arXiv:2109.10836)
