Christos Verikoukis

XAI-Driven Client Selection for Federated Learning in Scalable 6G Network Slicing

Mar 16, 2025

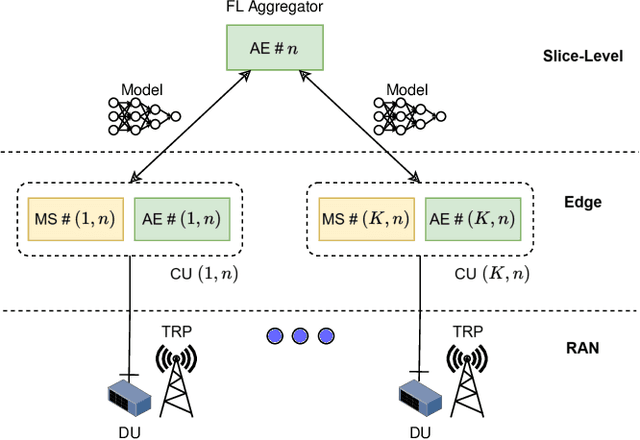

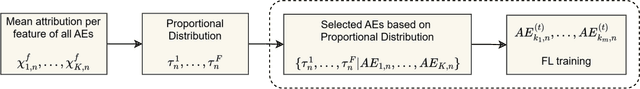

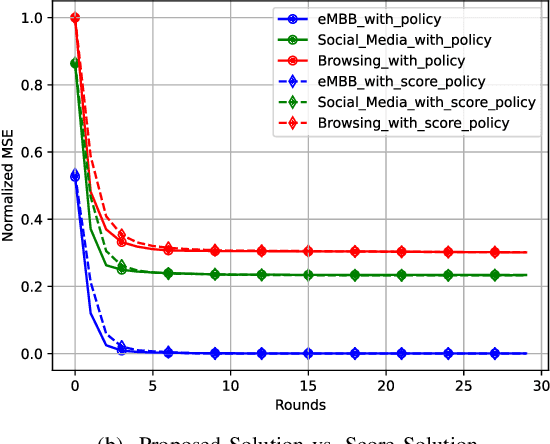

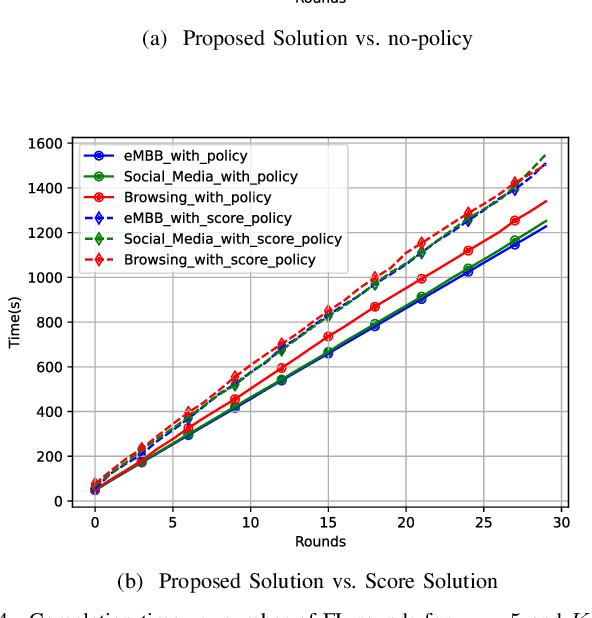

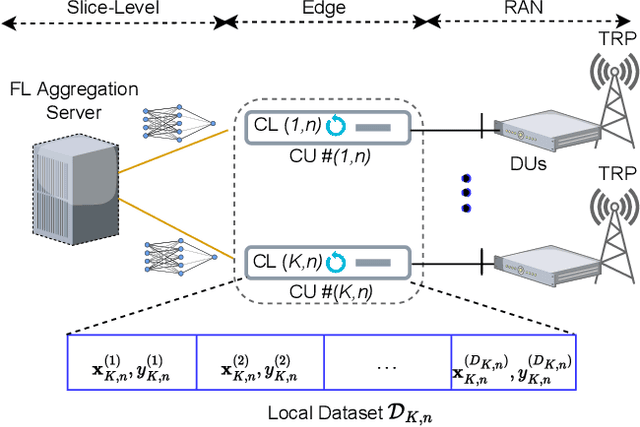

Abstract: In recent years, network slicing has embraced artificial intelligence (AI) models to manage the growing complexity of communication networks. In this setting, AI-driven zero-touch network automation should offer a high degree of flexibility and viability, especially when deployed in live production networks. However, centralized controllers suffer from high data communication overhead due to the vast amount of user data, and most network slices are reluctant to share private data. In federated learning systems, selecting trustworthy clients to participate in training is critical for ensuring system performance and reliability. This paper proposes a new client selection approach that leverages an XAI method to guarantee scalable and fast operation of federated learning-based analytic engines that implement slice-level resource provisioning at the RAN-Edge in a non-IID scenario. Attributions from XAI are used to guide the selection of devices participating in training. This approach enhances network trustworthiness for users and addresses the black-box nature of neural network models. In simulations, the proposed approach outperformed the standard approach in terms of both convergence time and computational cost, while also demonstrating high scalability.
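The abstract says that XAI attributions guide which devices join training, but it does not give the selection rule. The sketch below is a hypothetical illustration and not the paper's method. It assumes each client reports a per-feature attribution vector, for example mean absolute attributions over its local data. The server then keeps the k clients whose attributions align most closely, by cosine similarity, with the global model's attributions. The names `select_clients` and `global_attr` are illustrative.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two attribution vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_clients(client_attr: Dict[str, List[float]],
                   global_attr: List[float],
                   k: int) -> List[str]:
    """Hypothetical rule: keep the k clients whose attributions best match the global model's."""
    ranked = sorted(client_attr.items(),
                    key=lambda item: cosine(item[1], global_attr),
                    reverse=True)
    return [client_id for client_id, _ in ranked[:k]]


# Example: three hypothetical edge clients reporting attributions over three features.
global_attr = [0.5, 0.3, 0.2]
clients = {
    "a": [0.5, 0.3, 0.2],
    "b": [0.1, 0.1, 0.8],
    "c": [0.4, 0.4, 0.2],
}
chosen = select_clients(clients, global_attr, k=2)
```

In this example, client `b` concentrates its attributions on the third feature, so it scores lowest and sits out the round. Cosine similarity is only one possible alignment criterion. A divergence between normalized attribution distributions could serve the same role.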

Enhancing AI Transparency: XRL-Based Resource Management and RAN Slicing for 6G ORAN Architecture

Jan 17, 2025

Abstract: This research introduces an advanced Explainable Artificial Intelligence (XAI) framework designed to elucidate the decision-making processes of Deep Reinforcement Learning (DRL) agents in ORAN architectures. By offering network-oriented explanations, the proposed scheme addresses the critical challenge of understanding and optimizing the control actions of DRL agents for resource management and allocation. Traditional XAI methods, whether model-agnostic or model-specific, fail to address the unique challenges that arise in the dynamic and complex environment of RAN slicing. This paper transcends these limitations by incorporating intent-based action steering, allowing for precise embedding and configuration across various operational timescales. This is particularly evident in its integration with xApps and rApps hosted at the near-real-time and non-real-time RICs, respectively, which enhances the system's adaptability and performance. Our findings demonstrate the framework's significant impact on improving Key Performance Indicator (KPI)-based rewards, facilitated by the DRL agent's ability to make informed multimodal decisions involving multiple control parameters. Thus, our work marks a significant step forward in the practical application and effectiveness of XAI in optimizing ORAN resource management strategies.

NetROS-5G: Enhancing Personalization through 5G Network Slicing and Edge Computing in Human-Robot Interactions

Dec 11, 2023

Abstract: Robots are increasingly used in a variety of applications, from manufacturing and healthcare to education and customer service. However, the mobility, power, and price constraints of these robots often mean that they lack sufficient onboard computing power to run modern personalization algorithms for human-robot interaction at the desired rates. This can limit both the effectiveness of the interaction and the potential applications of these robots. 5G connectivity addresses this problem by offering high data rates, high bandwidth, and low latency that can facilitate robotics services. In addition, the widespread availability of cloud computing provides easy access to almost unlimited computing power at low cost. Edge computing, which places compute resources closer to the action, can offer even lower latency than cloud computing. In this paper, we explore the potential of combining 5G, edge, and cloud computing to improve personalization in human-robot interaction. We design, develop, and demonstrate a new framework, NetROS-5G, to show how the performance gained from these technologies can overcome network latency and significantly enhance personalization in robotics. Our results show that integrating 5G network slicing, edge computing, and cloud computing can collectively deliver cost-efficient and superior personalization in a modern human-robot interaction scenario.

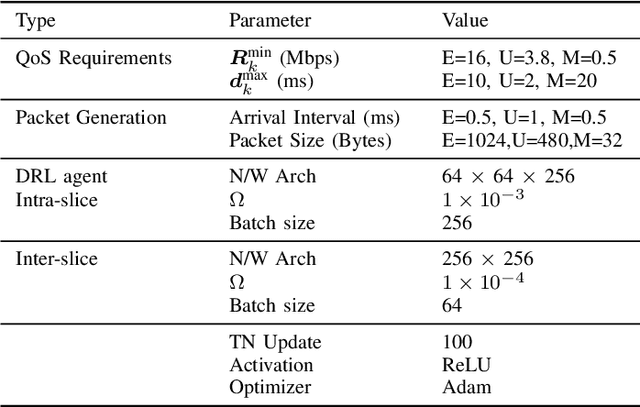

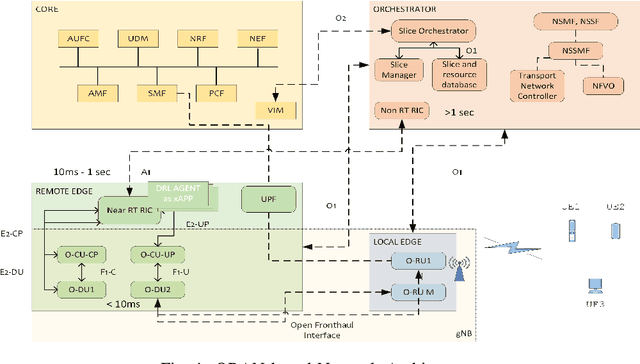

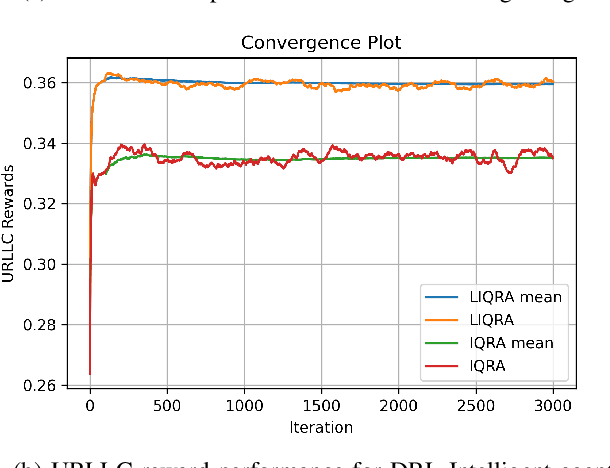

AIaaS for ORAN-based 6G Networks: Multi-time scale slice resource management with DRL

Nov 20, 2023

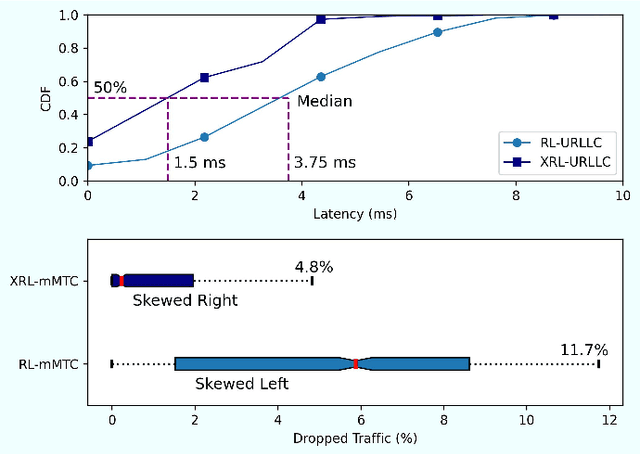

Abstract: This paper addresses how to manage slice resources for 6G networks at different time scales in an architecture based on the open radio access network (ORAN). The proposed solution places artificial intelligence (AI) at the edge of the network and applies two-level control loops to achieve better performance than other techniques. ORAN provides a programmable network architecture that supports such multi-time-scale management using AI approaches. The proposed algorithms derive the maximum resource utilization from slice performance to make decisions at the inter-slice level. Inter-slice intelligent agents operate at the non-real-time level to reconfigure resources within the various slices. Beyond meeting the slice requirements, the intra-slice objective must also include minimizing the maximum resource utilization. This enables smart use of the resources within each slice without affecting slice performance. Here, each xApp acts as an intra-slice agent that aims to deliver optimal QoS to its users, but some inter-slice objectives should also be included to coordinate the intra- and inter-slice agents. This is done without penalizing the main intra-slice objective. All intelligent agents use deep reinforcement learning (DRL) algorithms to meet their objectives. We present results for the enhanced mobile broadband (eMBB), ultra-reliable low-latency communication (URLLC), and massive machine-type communication (mMTC) slice categories.
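One way to read the requirement that the intra-slice objective also minimize maximum resource utilization is as a small penalty added to each intra-slice DRL reward. The formulation below is an illustrative assumption and not the paper's reward. Here $r_s^{\mathrm{QoS}}(t)$ is a QoS-driven reward for slice $s$ at step $t$, $\rho_{s,k}(t) \in [0,1]$ is the utilization of resource $k$ in the set $\mathcal{K}_s$ of that slice's resources, and a small weight $\lambda$ keeps the inter-slice term from overriding the main intra-slice objective.

$$
r_s(t) = r_s^{\mathrm{QoS}}(t) \;-\; \lambda \max_{k \in \mathcal{K}_s} \rho_{s,k}(t), \qquad 0 \le \lambda \ll 1
$$

With $\lambda = 0$, the agent optimizes QoS alone. As $\lambda$ grows, allocations that leave headroom on the most loaded resource are favored, which gives the inter-slice agents spare capacity to redistribute.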

Intelligent QoS aware slice resource allocation with user association parameterization for beyond 5G ORAN based architecture using DRL

Nov 02, 2023

Abstract: The diverse requirements of beyond-5G services increase design complexity and demand dynamic adjustment of network parameters. This can be achieved with slicing and programmable network architectures such as the open radio access network (ORAN), which allows network components to be tuned exactly to the demands of envisioned future applications and brings intelligence to the edge of the network. Artificial intelligence (AI) has recently drawn considerable interest for its potential to solve challenging problems in wireless communication. Because of the non-deterministic, random, and complex behavior of the models and parameters involved, radio resource management is one of the topics that needs to be addressed with such techniques. This paper proposes quality of service (QoS)-aware intra-slice resource allocation that outperforms baseline and state-of-the-art strategies. The slice-dedicated intelligent agents learn to handle resources at near-RT RIC time granularities while optimizing various key performance indicators (KPIs) and meeting the QoS requirements of each end user. To improve KPIs and system performance with various reward functions, the study discusses Markov decision processes (MDPs) and deep reinforcement learning (DRL) techniques, notably the deep Q-network (DQN). Simulations evaluate the efficacy of the algorithm under dynamic conditions and various network characteristics. Results and analysis demonstrate improved network performance for the enhanced mobile broadband (eMBB) and ultra-reliable low-latency communication (URLLC) slice categories.
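A DQN like the one mentioned above is trained by regressing Q-values toward a bootstrapped Bellman target. The sketch below shows that target, the squared TD loss, and an epsilon-greedy action choice in plain Python. It is a generic DQN illustration. The action space (for example, discrete resource-block allocations for a slice's users) and all names are hypothetical and not taken from the paper.

```python
import random
from typing import List, Sequence


def dqn_targets(rewards: Sequence[float],
                next_q_values: Sequence[Sequence[float]],
                dones: Sequence[bool],
                gamma: float = 0.99) -> List[float]:
    """Bellman targets y = r + gamma * max_a' Q_target(s', a'); no bootstrap at terminal states."""
    targets = []
    for r, q_next, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(r + bootstrap)
    return targets


def td_loss(q_taken: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared TD error between Q(s, a) for the actions taken and their targets."""
    return sum((q - y) ** 2 for q, y in zip(q_taken, targets)) / len(targets)


def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Explore uniformly with probability epsilon, otherwise take the highest-Q action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Example: a two-transition minibatch in which the second transition ends the episode.
targets = dqn_targets([1.0, 0.5], [[0.2, 0.8], [1.0, 0.0]], [False, True], gamma=0.9)
# targets is approximately [1.72, 0.5]
```

In a full DQN, `next_q_values` would come from a periodically synchronized target network, and the loss would be minimized by gradient descent on the online network's parameters.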

Towards Bridging the FL Performance-Explainability Trade-Off: A Trustworthy 6G RAN Slicing Use-Case

Jul 24, 2023

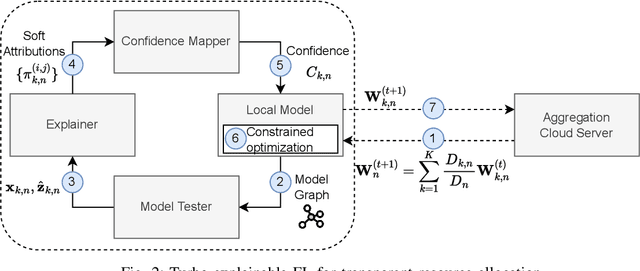

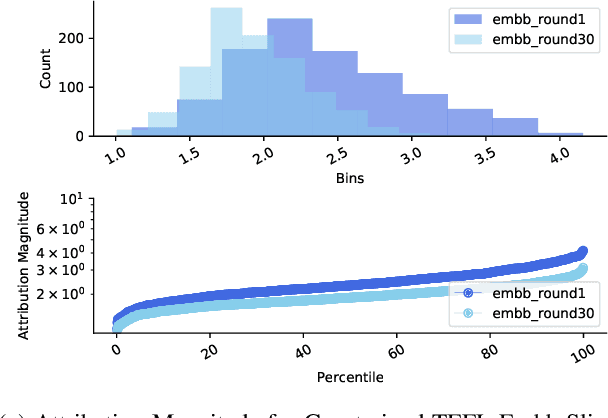

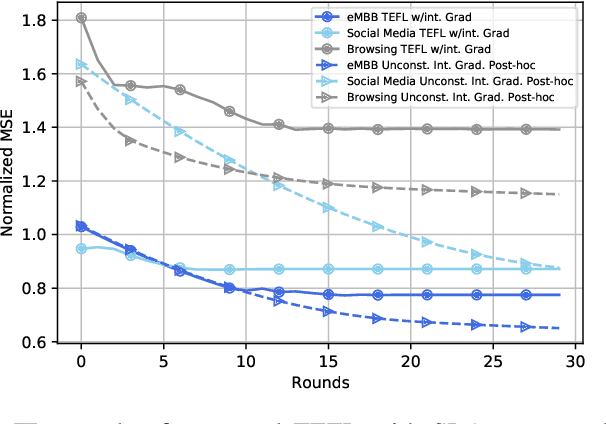

Abstract: In sixth-generation (6G) networks, where diverse network slices coexist, the adoption of AI-driven zero-touch management and orchestration (MANO) becomes crucial. However, ensuring the trustworthiness of AI black boxes in real deployments is challenging. Explainable AI (XAI) tools can play a vital role in establishing transparency among the stakeholders in the slicing ecosystem. There is, however, a trade-off between AI performance and explainability. This trade-off poses a dilemma for trustworthy 6G network slicing, because stakeholders require both high-performing AI models for efficient resource allocation and explainable decision-making to ensure fairness, accountability, and compliance. To balance this trade-off, and inspired by closed-loop automation and XAI methodologies, this paper presents a novel explanation-guided in-hoc federated learning (FL) approach. In this approach, a constrained resource allocation model and an explainer exchange soft attributions of the features, as well as inference predictions, in a closed-loop (CL) fashion. The goal is transparent 6G network slicing resource management in a RAN-Edge setup under non-independent and identically distributed (non-IID) datasets. In particular, we quantitatively validate the faithfulness of the explanations via the so-called attribution-based confidence metric, which is included as a constraint to guide the overall training process in the run-time FL optimization task. Integrated Gradients (IG), Input $\times$ Gradient, and SHAP are used to generate the attributions for the proposed in-hoc scheme. Simulation results under the different methods confirm its success in tackling the performance-explainability trade-off and its superiority over the unconstrained Integrated-Gradient post-hoc FL baseline.
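Integrated Gradients and Input × Gradient, two of the attribution methods named above, can be illustrated on a toy logistic model whose gradient is known in closed form. This is a generic sketch of the two techniques, not the paper's resource allocation model. The weights, inputs, and all-zero baseline are arbitrary assumptions, and SHAP is not shown.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def model(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Toy logistic model f(x) = sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def gradient(x: Sequence[float], w: Sequence[float], b: float) -> List[float]:
    """Closed-form gradient: df/dx_i = f(x) * (1 - f(x)) * w_i."""
    s = model(x, w, b)
    return [s * (1.0 - s) * wi for wi in w]


def input_x_gradient(x: Sequence[float], w: Sequence[float], b: float) -> List[float]:
    """Input x Gradient attribution: x_i * df/dx_i at the input itself."""
    return [xi * gi for xi, gi in zip(x, gradient(x, w, b))]


def integrated_gradients(x: Sequence[float], baseline: Sequence[float],
                         w: Sequence[float], b: float, steps: int = 200) -> List[float]:
    """IG_i = (x_i - x'_i) * integral over a in [0, 1] of df/dx_i(x' + a (x - x')), midpoint Riemann sum."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        totals = [t + g for t, g in zip(totals, gradient(point, w, b))]
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, totals)]


w, b = [1.5, -2.0, 0.5], 0.1
x, baseline = [1.0, 0.5, 2.0], [0.0, 0.0, 0.0]
ig = integrated_gradients(x, baseline, w, b)
ixg = input_x_gradient(x, w, b)
# Completeness: IG attributions sum to f(x) - f(baseline), up to discretization error.
gap = abs(sum(ig) - (model(x, w, b) - model(baseline, w, b)))
```

The completeness check reflects a known property of Integrated Gradients: the attributions sum, up to discretization error, to the difference between the model output at the input and at the baseline. Input × Gradient offers no such guarantee for nonlinear models. This is one reason the two methods can rank features differently.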

Explanation-Guided Fair Federated Learning for Transparent 6G RAN Slicing

Jul 18, 2023

Abstract: Future zero-touch artificial intelligence (AI)-driven 6G network automation requires building trust in AI black boxes via explainable artificial intelligence (XAI). AI faithfulness is expected to become a quantifiable service-level agreement (SLA) metric alongside telecommunications key performance indicators (KPIs). This entails exploiting XAI outputs to generate transparent and unbiased deep neural networks (DNNs). Motivated by closed-loop (CL) automation and explanation-guided learning (EGL), we design an explanation-guided federated learning (EGFL) scheme that ensures trustworthy predictions. The scheme exploits the model explanations produced by XAI strategies during training run time via the Jensen-Shannon (JS) divergence. Specifically, we predict the per-slice RAN dropped-traffic probability to exemplify the proposed concept while respecting fairness goals formulated in terms of the recall metric, which is included as a constraint in the optimization task. Finally, the comprehensiveness score is adopted to quantitatively measure and validate the faithfulness of the explanations. Simulation results show that the proposed EGFL-JS scheme achieves more than a $50\%$ increase in comprehensiveness compared to different baselines from the literature, especially the EGFL-KL variant based on the Kullback-Leibler divergence. It also improves the recall score by more than $25\%$ relative to unconstrained EGFL.
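The abstract contrasts a Jensen-Shannon (JS) variant with a Kullback-Leibler (KL) variant and scores faithfulness with comprehensiveness. The sketch below shows generic definitions only. It assumes attributions become probability distributions by normalizing their magnitudes. It computes comprehensiveness, following a common definition, as the drop in model output after the k most-attributed features are replaced with a fill value. How EGFL embeds these quantities in its training objective is not reproduced here.

```python
import math
from typing import Callable, List, Sequence


def to_distribution(attributions: Sequence[float]) -> List[float]:
    """Normalize attribution magnitudes into a probability distribution (uniform if all zero)."""
    mags = [abs(a) for a in attributions]
    total = sum(mags)
    if total == 0.0:
        return [1.0 / len(mags)] * len(mags)
    return [m / total for m in mags]


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q); eps guards against zero entries in q. Asymmetric and unbounded."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0.0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """JS(p, q) = 0.5 KL(p || m) + 0.5 KL(q || m), m = (p + q) / 2; symmetric and at most ln 2."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def comprehensiveness(predict: Callable[[List[float]], float], x: Sequence[float],
                      attributions: Sequence[float], k: int, fill: float = 0.0) -> float:
    """Output drop after replacing the k features with the largest |attribution| by `fill`."""
    ranked = sorted(range(len(x)), key=lambda i: abs(attributions[i]), reverse=True)
    top = set(ranked[:k])
    x_removed = [fill if i in top else xi for i, xi in enumerate(x)]
    return predict(list(x)) - predict(x_removed)


# Example: compare two hypothetical attribution profiles over three features.
p = to_distribution([0.7, -0.2, 0.1])
q = to_distribution([0.4, 0.4, 0.2])
js_pq = js_divergence(p, q)
```

A larger comprehensiveness score means that the features the explainer ranks highest actually drive the prediction. The symmetry and boundedness of the JS divergence are one plausible reason to prefer it over KL as a training signal, although the abstract reports only the empirical comparison.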

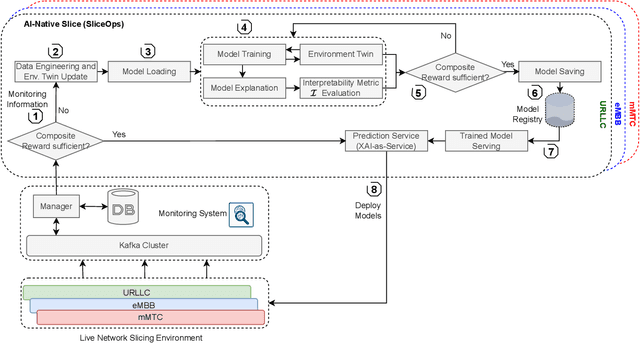

SliceOps: Explainable MLOps for Streamlined Automation-Native 6G Networks

Jul 04, 2023

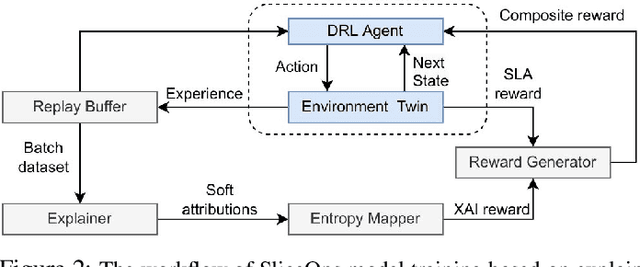

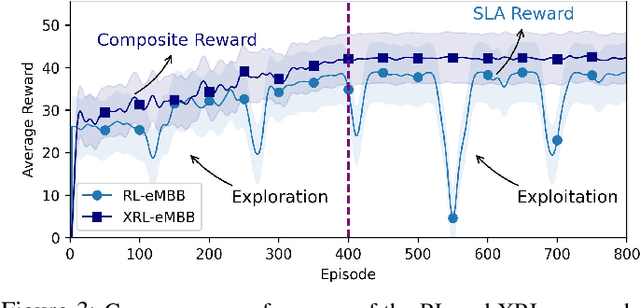

Abstract: Sixth-generation (6G) network slicing is the backbone of future communications systems. It inaugurates the era of extreme ultra-reliable and low-latency communication (xURLLC) and underpins the digitalization of various immersive vertical use cases. Since 6G natively supports artificial intelligence (AI), we propose a systematic and standalone slice termed SliceOps that is natively embedded in the 6G architecture. SliceOps gathers and manages the whole AI lifecycle by monitoring, re-training, and deploying machine learning (ML) models as a service for the 6G slices. By leveraging machine learning operations (MLOps) in conjunction with eXplainable AI (XAI), SliceOps strives to cope with the opaqueness of black-box AI. It uses explanation-guided reinforcement learning (XRL) to fulfill transparency, trustworthiness, and interpretability in the network slicing ecosystem. This article starts by elaborating on the architectural and algorithmic aspects of SliceOps. Then, the operation of a deployed cloud-native SliceOps is illustrated with a latency-aware resource allocation problem. The deep RL (DRL)-based SliceOps agents within slices provide AI services that aim to allocate optimal radio resources and prevent service quality degradation. Simulation results demonstrate the effectiveness of SliceOps-driven slicing. The article then discusses the challenges and limitations of SliceOps. Finally, the key open research directions corresponding to the proposed approach are identified.

TEFL: Turbo Explainable Federated Learning for 6G Trustworthy Zero-Touch Network Slicing

Oct 18, 2022

Abstract: Sixth-generation (6G) networks are expected to intelligently support a massive number of coexisting and heterogeneous slices associated with various vertical use cases. Such a context urges the adoption of artificial intelligence (AI)-driven zero-touch management and orchestration (MANO) of end-to-end (E2E) slices under stringent service level agreements (SLAs). Specifically, the trustworthiness of AI black boxes in real deployments can be achieved by explainable AI (XAI) tools. These tools build transparency between the interacting actors in the slicing ecosystem, such as tenants, infrastructure providers, and operators. Inspired by the turbo principle, this paper presents a novel iterative explainable federated learning (FL) approach. In this approach, a constrained resource allocation model and an explainer exchange soft attributions of the features, as well as inference predictions, in a closed-loop (CL) fashion. The goal is transparent and SLA-aware zero-touch service management (ZSM) of 6G network slices in a RAN-Edge setup under non-independent and identically distributed (non-IID) datasets. In particular, we quantitatively validate the faithfulness of the explanations via the so-called attribution-based confidence metric, which is included as a constraint in the run-time FL optimization task. Integrated Gradients (IG), Input $\times$ Gradient, and SHAP are used to generate the attributions for turbo explainable FL (TEFL). Simulation results under the different methods confirm its superiority over an unconstrained Integrated-Gradient post-hoc FL baseline.
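TEFL trains the resource allocation model with federated learning across RAN-Edge clients. The abstract does not state the server-side aggregation rule, so the sketch below assumes the widely used FedAvg weighting, in which each client's parameters count in proportion to its local dataset size. The confidence-metric constraint and the explainer loop are not modeled, and the client sizes are hypothetical.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of flattened client parameter vectors with weights n_k / sum(n)."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one dataset size per client")
    total = float(sum(client_sizes))
    n_params = len(client_params[0])
    aggregated = [0.0] * n_params
    for params, size in zip(client_params, client_sizes):
        if len(params) != n_params:
            raise ValueError("all clients must share the same parameter shape")
        weight = size / total
        for j in range(n_params):
            aggregated[j] += weight * params[j]
    return aggregated


# Example: two hypothetical edge clients holding 100 and 300 local samples.
global_params = fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300])
```

Plain size-weighted averaging is known to degrade under non-IID data, because clients with large but skewed datasets pull the global model toward their local distribution. This degradation is one motivation for adding mechanisms such as the explanation-based constraint described above.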
