Christopher Schlett, Fabian Bamberg

Deep Shape Analysis on Abdominal Organs for Diabetes Prediction

Aug 06, 2018

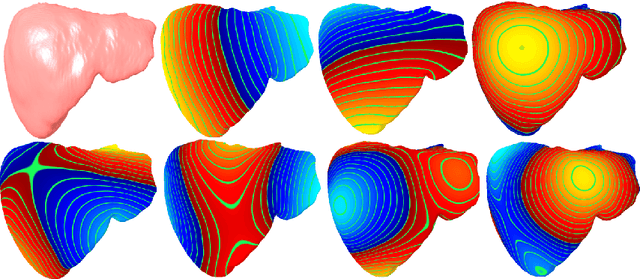

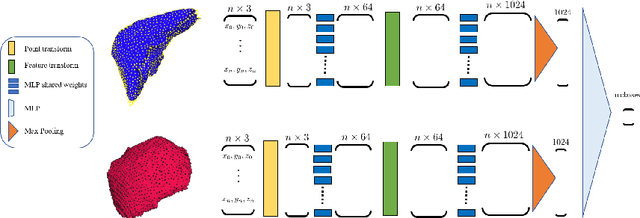

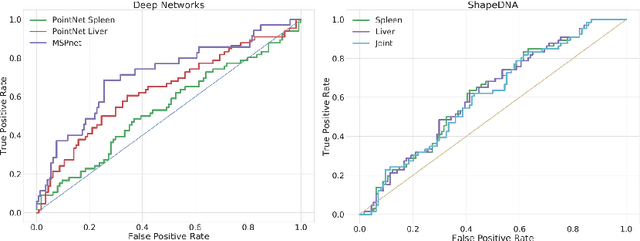

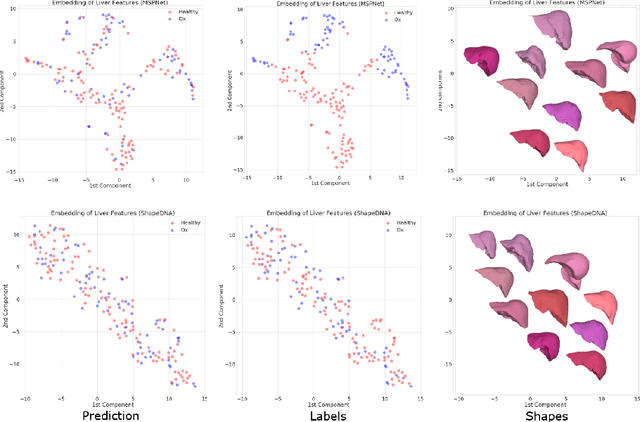

Abstract: Morphological analysis of organs based on images is a key task in medical image computing. Several approaches have been proposed for the quantitative assessment of morphological changes, and they have been widely used to analyze the effects of aging, disease, and other factors on organ morphology. In this work, we propose a deep neural network for predicting diabetes from the shapes of abdominal organs. The network operates directly on raw point clouds without requiring mesh processing or shape alignment. Instead of relying on hand-crafted shape descriptors, an optimal representation is learned during end-to-end training of the network. For comparison, we extend the state-of-the-art shape descriptor BrainPrint to abdominal organs, yielding AbdomenPrint. Our results demonstrate that the network learns shape representations that better separate healthy and diabetic individuals than traditional representations.
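To illustrate what operating directly on a raw point cloud can look like, the following is a minimal sketch and not the architecture used in this work, which the abstract does not specify. It assumes a PointNet-style encoder: the same small MLP is applied to every point, and the per-point features are max-pooled into one shape descriptor, so the result does not depend on point order and needs no mesh connectivity. The weights are random and untrained, and the names `make_layer`, `dense_relu`, and `encode_shape` are hypothetical.

```python
import random


def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one dense layer (untrained, illustrative)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense_relu(x, layer):
    """Apply one dense layer followed by ReLU to a single feature vector."""
    weights, biases = layer
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def encode_shape(points, layers):
    """Shared per-point MLP followed by max pooling over all points."""
    per_point_features = []
    for point in points:
        h = list(point)
        for layer in layers:
            h = dense_relu(h, layer)
        per_point_features.append(h)
    # Max over points for each feature dimension: independent of point order.
    return [max(column) for column in zip(*per_point_features)]


rng = random.Random(0)
layers = [make_layer(3, 16, rng), make_layer(16, 32, rng)]

# Synthetic stand-in for points sampled from an organ surface (assumption).
organ_points = [(rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
shuffled_points = organ_points[:]
rng.shuffle(shuffled_points)

descriptor = encode_shape(organ_points, layers)
shuffled_descriptor = encode_shape(shuffled_points, layers)
print(len(descriptor), descriptor == shuffled_descriptor)
```

In an end-to-end setting, a descriptor like this would feed a classifier head and all weights would be trained jointly on the diabetes labels. The sketch only demonstrates order invariance; it does not by itself make the descriptor invariant to rotation or translation.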
