Christoph Wehner

Anwendung von Causal-Discovery-Algorithmen zur Root-Cause-Analyse in der Fahrzeugmontage (Application of Causal Discovery Algorithms to Root Cause Analysis in Vehicle Assembly)

Jul 23, 2024

Abstract:Root Cause Analysis (RCA) is a quality management method that aims to systematically investigate and identify the cause-and-effect relationships between problems and their underlying causes. Traditional methods are based on the analysis of problems by subject matter experts. In modern production processes, large amounts of data are collected. For this reason, computer-aided and data-driven methods are increasingly used for RCA. One class of such methods is Causal Discovery Algorithms (CDAs). This publication demonstrates the application of CDAs to data from the assembly of a leading automotive manufacturer. Based on representative data, the algorithms used learn the causal structure between the characteristics of the manufactured vehicles, the ergonomics and the temporal scope of the involved assembly processes, and quality-relevant product features. This publication compares various CDAs in terms of their suitability in the context of quality management. For this purpose, the causal structures learned by the algorithms as well as their runtimes are compared. This publication contributes to quality management and demonstrates how CDAs can be used for RCA in assembly processes.

Knowledge Graph Enhanced Retrieval-Augmented Generation for Failure Mode and Effects Analysis

Jun 26, 2024

Abstract:Failure mode and effects analysis (FMEA) is a critical tool for mitigating potential failures, particularly during ramp-up phases of new products. However, its effectiveness is often limited by the missing reasoning capabilities of FMEA tools, which are usually structured as tables. Meanwhile, large language models (LLMs) offer novel prospects for fine-tuning on custom datasets for reasoning within FMEA contexts. However, LLMs face challenges in tasks that require factual knowledge, a gap that retrieval-augmented generation (RAG) approaches aim to fill. RAG retrieves information from a non-parametric data store and uses a language model to generate responses. Building on this idea, we propose to advance the non-parametric data store with a knowledge graph (KG). By enhancing the RAG framework with a KG, our objective is to leverage analytical and semantic question-answering capabilities on FMEA data. This paper contributes by presenting a new ontology for FMEA observations, an algorithm for creating vector embeddings from the FMEA KG, and a KG-enhanced RAG framework. Our approach is validated through a human study, and we measure the recall and precision of the context retrieval.
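As a rough illustration of the context retrieval metrics named in the abstract above, the following sketch computes precision and recall for one query. It uses the standard set-based definitions; the function name, the item identifiers, and the example data are hypothetical and are not taken from the paper, whose exact evaluation protocol is not described here.

```python
def retrieval_precision_recall(retrieved, relevant):
    """Set-based precision and recall for a single query.

    retrieved: identifiers of the context items returned by the retriever
    relevant:  identifiers of the items judged relevant (ground truth)
    Both arguments are hypothetical inputs chosen for illustration.
    """
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = retrieved_set & relevant_set

    # Precision: share of retrieved items that are relevant.
    precision = len(hits) / len(retrieved_set) if retrieved_set else 0.0
    # Recall: share of relevant items that were retrieved.
    recall = len(hits) / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Made-up example: four retrieved items, three relevant items, two in common.
precision, recall = retrieval_precision_recall(
    retrieved=["fm_01", "fm_02", "fm_03", "fm_04"],
    relevant=["fm_01", "fm_03", "fm_09"],
)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

In this made-up case, two of the four retrieved items are relevant and two of the three relevant items are found, so precision is 2/4 and recall is 2/3.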

From Latent to Lucid: Transforming Knowledge Graph Embeddings into Interpretable Structures

Jun 03, 2024

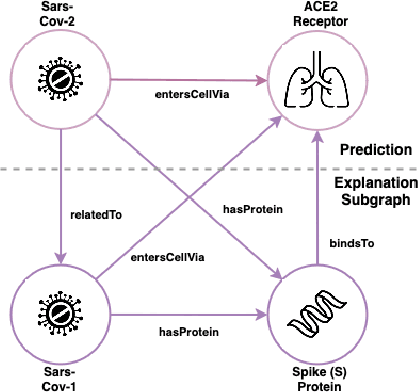

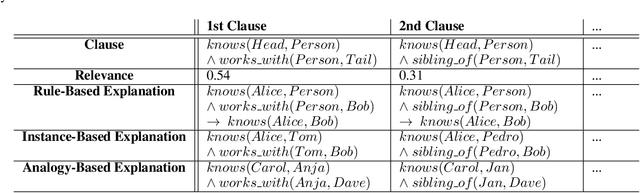

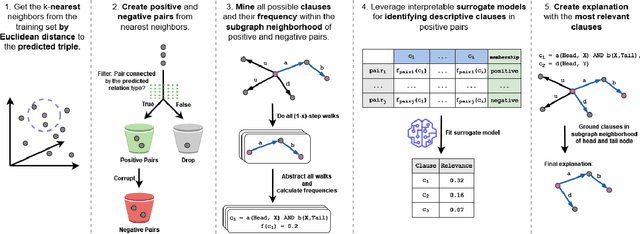

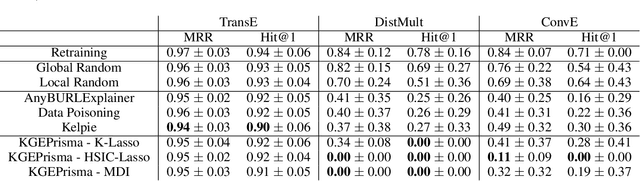

Abstract:This paper introduces a post-hoc explainable AI method tailored for Knowledge Graph Embedding models. These models are essential to Knowledge Graph Completion yet criticized for their opaque, black-box nature. Despite their significant success in capturing the semantics of knowledge graphs through high-dimensional latent representations, their inherent complexity poses substantial challenges to explainability. Unlike existing methods, our approach directly decodes the latent representations encoded by Knowledge Graph Embedding models, leveraging the principle that similar embeddings reflect similar behaviors within the Knowledge Graph. By identifying distinct structures within the subgraph neighborhoods of similarly embedded entities, our method identifies the statistical regularities on which the models rely and translates these insights into human-understandable symbolic rules and facts. This bridges the gap between the abstract representations of Knowledge Graph Embedding models and their predictive outputs, offering clear, interpretable insights. Key contributions include a novel post-hoc explainable AI method for Knowledge Graph Embedding models that provides immediate, faithful explanations without retraining, facilitating real-time application even on large-scale knowledge graphs. The method's flexibility enables the generation of rule-based, instance-based, and analogy-based explanations, meeting diverse user needs. Extensive evaluations show our approach's effectiveness in delivering faithful and well-localized explanations, enhancing the transparency and trustworthiness of Knowledge Graph Embedding models.

Comprehensible Artificial Intelligence on Knowledge Graphs: A survey

Apr 04, 2024

Abstract:Artificial Intelligence applications gradually move outside the safe walls of research labs and invade our daily lives. This is also true for Machine Learning methods on Knowledge Graphs, whose application has increased steadily since the beginning of the 21st century. However, in many applications, users require an explanation of the Artificial Intelligence's decision. This has led to increased demand for Comprehensible Artificial Intelligence. Knowledge Graphs are fertile soil for Comprehensible Artificial Intelligence, due to their ability to display connected data, i.e. knowledge, in a human- as well as machine-readable way. This survey gives a short history of Comprehensible Artificial Intelligence on Knowledge Graphs. Furthermore, we contribute by arguing that the concept of Explainable Artificial Intelligence is overloaded and overlaps with Interpretable Machine Learning. By introducing the parent concept Comprehensible Artificial Intelligence, we provide a clear-cut distinction between the two concepts while accounting for their similarities. Thus, we provide in this survey a case for Comprehensible Artificial Intelligence on Knowledge Graphs consisting of Interpretable Machine Learning on Knowledge Graphs and Explainable Artificial Intelligence on Knowledge Graphs. This leads to the introduction of a novel taxonomy for Comprehensible Artificial Intelligence on Knowledge Graphs. In addition, a comprehensive overview of the research on Comprehensible Artificial Intelligence on Knowledge Graphs is presented and put into the context of the taxonomy. Finally, research gaps in the field of Comprehensible Artificial Intelligence on Knowledge Graphs are identified for future research.

Interactive and Intelligent Root Cause Analysis in Manufacturing with Causal Bayesian Networks and Knowledge Graphs

Jan 20, 2024

Abstract:Root Cause Analysis (RCA) in the manufacturing of electric vehicles is the process of identifying fault causes. Traditionally, RCA is conducted manually, relying on process expert knowledge. Meanwhile, sensor networks collect significant amounts of data in the manufacturing process. Using this data for RCA makes it more efficient. However, purely data-driven methods like Causal Bayesian Networks have problems scaling to large-scale, real-world manufacturing processes due to the vast number of potential cause-effect relationships (CERs). Furthermore, purely data-driven methods can leave out already known CERs or learn spurious CERs. The paper contributes by proposing an interactive and intelligent RCA tool that combines expert knowledge of an electric vehicle manufacturing process with a data-driven machine learning method. It uses reasoning over a large-scale Knowledge Graph of the manufacturing process while learning a Causal Bayesian Network. In addition, an Interactive User Interface enables a process expert to give feedback on the root cause graph by adding information to and removing information from the Knowledge Graph. The interactive and intelligent RCA tool reduces the learning time of the Causal Bayesian Network while decreasing the number of spurious CERs. Thus, the interactive and intelligent RCA tool closes the feedback loop between expert and machine learning method.

Explainable Online Lane Change Predictions on a Digital Twin with a Layer Normalized LSTM and Layer-wise Relevance Propagation

Apr 04, 2022

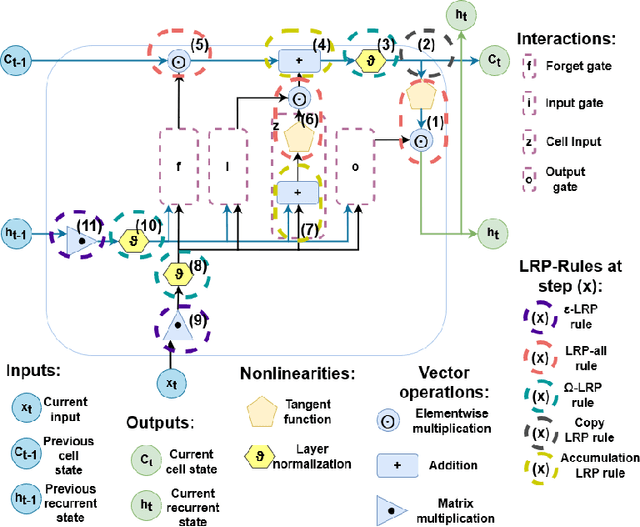

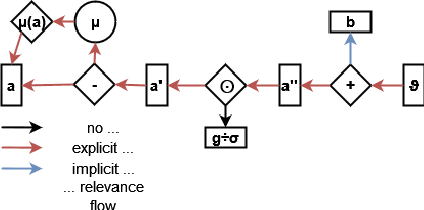

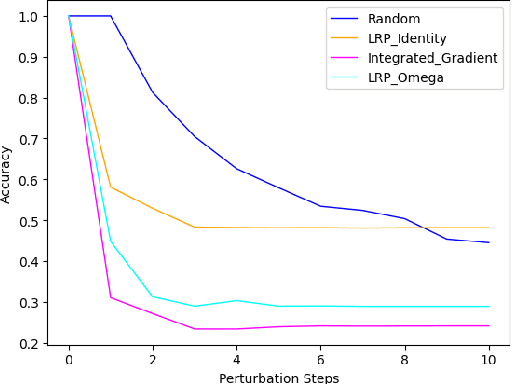

Abstract:Artificial Intelligence and Digital Twins play an integral role in driving innovation in the domain of intelligent driving. Long short-term memory (LSTM) is a leading driver in the field of lane change prediction for manoeuvre anticipation. However, the decision-making process of such models is complex and non-transparent, which reduces the trustworthiness of the smart solution. This work presents an innovative approach and a technical implementation for explaining lane change predictions of layer normalized LSTMs using Layer-wise Relevance Propagation (LRP). The core implementation includes consuming live data from a digital twin on a German highway, live predictions and explanations of lane changes by extending LRP to layer normalized LSTMs, and an interface for communicating and explaining the predictions to a human user. We aim to demonstrate faithful, understandable, and adaptable explanations of lane change prediction to increase the adoption and trustworthiness of AI systems that involve humans. Our research also emphasizes that explainability and state-of-the-art performance of ML models for manoeuvre anticipation go hand in hand without negatively affecting predictive effectiveness.