Christof Büskens

Let Hybrid A* Path Planner Obey Traffic Rules: A Deep Reinforcement Learning-Based Planning Framework

Jul 01, 2024

Abstract: Deep reinforcement learning (DRL) allows a system to interact with its environment and take actions by training an efficient policy that maximizes self-defined rewards. In autonomous driving, it can be used as a strategy for high-level decision making, whereas low-level algorithms such as hybrid A* path planning have proven their ability to solve the local trajectory planning problem. In this work, we combine the two methods: the DRL agent makes high-level decisions such as lane change commands. After obtaining the lane change command, the hybrid A* planner generates a collision-free trajectory to be executed by a model predictive controller (MPC). In addition, the DRL algorithm is able to keep the lane change command consistent within a chosen time period. Traffic rules are implemented using linear temporal logic (LTL), which is then used as a reward function in DRL. Furthermore, we validate the proposed method on a real system to demonstrate its feasibility from simulation to implementation on real hardware.
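As an illustration of how a temporal-logic rule can feed a reward signal, the following is a minimal sketch, not the authors' implementation. It checks a rule of the form G(premise -> conclusion) over a finite trace of states and maps the result to a reward term. The state keys (`lane`, `overtaking`), the example rule, and the reward values are all hypothetical.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Predicate = Callable[[State], bool]


def always_implies(trace: List[State], premise: Predicate, conclusion: Predicate) -> bool:
    """Finite-trace check of the LTL pattern G(premise -> conclusion)."""
    return all((not premise(s)) or conclusion(s) for s in trace)


def rule_reward(trace: List[State], premise: Predicate, conclusion: Predicate,
                bonus: float = 0.0, penalty: float = -1.0) -> float:
    """Return `bonus` if the rule holds on the whole trace, else `penalty`."""
    return bonus if always_implies(trace, premise, conclusion) else penalty


# Hypothetical rule: whenever the ego vehicle is in the left lane (lane == 1),
# it must currently be overtaking.
def in_left_lane(s: State) -> bool:
    return s["lane"] == 1


def is_overtaking(s: State) -> bool:
    return s["overtaking"] == 1


compliant = [{"lane": 0, "overtaking": 0}, {"lane": 1, "overtaking": 1}]
violating = [{"lane": 0, "overtaking": 0}, {"lane": 1, "overtaking": 0}]

reward_ok = rule_reward(compliant, in_left_lane, is_overtaking)
reward_bad = rule_reward(violating, in_left_lane, is_overtaking)
```

In a training loop, a term like this would typically be added to the task reward at each step or episode; how the paper weights or shapes it is not stated in the abstract.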

Indoor Localization for an Autonomous Model Car: A Marker-Based Multi-Sensor Fusion Framework

Oct 08, 2023

Abstract: Global navigation satellite systems readily provide accurate position information when localizing a robot outdoors. However, an analogous standard solution does not yet exist for mobile robots operating indoors. This paper presents an integrated framework for indoor localization and experimental validation of an autonomous driving system based on an advanced driver-assistance system (ADAS) model car. The global pose of the model car is obtained by fusing information from fiducial markers, inertial sensors and wheel odometry. In order to achieve robust localization, we investigate and compare two extensions to the Extended Kalman Filter: the first with adaptive noise tuning and the second with a chi-squared test for measurement outlier detection. An efficient and low-cost ground truth measurement method using a single LiDAR sensor is also proposed to validate the results. The performance of the localization algorithms is tested on a complete autonomous driving system with trajectory planning and model predictive control.
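The chi-squared test mentioned above is commonly realized as a gate on the squared Mahalanobis distance of the filter innovation. The following is a minimal sketch of that general technique for a 2D position measurement, assuming a 2x2 innovation covariance `S` and a 95% confidence level. The function names and numeric values are illustrative and are not taken from the paper.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def chi2_threshold_2dof(confidence: float) -> float:
    """Chi-squared quantile for 2 degrees of freedom (closed form)."""
    return -2.0 * math.log(1.0 - confidence)


def mahalanobis_sq_2d(innovation: Vec2, S: Sequence[Sequence[float]]) -> float:
    """Squared Mahalanobis distance y^T S^-1 y for a 2x2 covariance S."""
    (a, b), (c, d) = S
    det = a * d - b * c
    if a <= 0.0 or det <= 0.0:
        raise ValueError("innovation covariance must be positive definite")
    y0, y1 = innovation
    # S^-1 = (1 / det) * [[d, -b], [-c, a]]
    return (y0 * (d * y0 - b * y1) + y1 * (-c * y0 + a * y1)) / det


def accept_measurement(z: Vec2, z_pred: Vec2, S: Sequence[Sequence[float]],
                       confidence: float = 0.95) -> bool:
    """Gate a measurement: accept it only if the innovation passes the test."""
    innovation = (z[0] - z_pred[0], z[1] - z_pred[1])
    return mahalanobis_sq_2d(innovation, S) <= chi2_threshold_2dof(confidence)


S = [[0.04, 0.0], [0.0, 0.04]]   # assumed innovation covariance (0.2 m std dev)
z_pred = (1.0, 2.0)              # predicted marker-based position

inlier_ok = accept_measurement((1.1, 2.1), z_pred, S)
outlier_ok = accept_measurement((2.0, 2.0), z_pred, S)
```

A rejected measurement would simply skip the Kalman update step for that sensor reading, while the prediction step proceeds as usual.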

Time-Dependent Hybrid-State A* and Optimal Control for Autonomous Vehicles in Arbitrary and Dynamic Environment

Nov 08, 2019

Abstract: The development of driving functions for autonomous vehicles in urban environments is still a challenging task. In comparison with driving on motorways, a wide variety of moving road users, such as pedestrians or cyclists, but also the strongly varying and sometimes very narrow road layout pose special challenges. The ability to make fast decisions about exact maneuvers and to execute them by applying sophisticated control commands is one of the key requirements for autonomous vehicles in such situations. In this context we present an algorithmic concept of three correlated methods. Its basis is a novel technique for the automated generation of a free-space polygon, providing a generic representation of the currently drivable area. We then develop a time-dependent hybrid-state A* algorithm as a model-based planner for the efficient and precise computation of possible driving maneuvers in arbitrary dynamic environments. While on the one hand its results can be used as a basis for making short-term decisions, we also show their applicability as an initial guess for a subsequent trajectory optimization in order to compute applicable control signals. Finally, we provide numerical results for a variety of simulated situations demonstrating the efficiency and robustness of the proposed methods.

Controlling an Autonomous Vehicle with Deep Reinforcement Learning

Sep 24, 2019

Abstract: We present a control approach for autonomous vehicles based on deep reinforcement learning. A neural network agent is trained to map its estimated state to acceleration and steering commands given the objective of reaching a specific target state while considering detected obstacles. Learning is performed using state-of-the-art proximal policy optimization in combination with a simulated environment. Training from scratch takes five to nine hours. The resulting agent is evaluated within simulation and subsequently applied to control a full-size research vehicle. For this, the autonomous exploration of a parking lot is considered, including turning maneuvers and obstacle avoidance. Altogether, this work is among the first examples to successfully apply deep reinforcement learning to a real vehicle.
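For readers unfamiliar with proximal policy optimization, its core is the clipped surrogate objective, which limits how far a single update can move the policy. The sketch below shows that standard objective for one sample in plain Python. The clipping parameter `eps = 0.2` is a common default and an assumption here; the abstract does not state the hyperparameters used.

```python
def clip(x: float, lo: float, hi: float) -> float:
    """Clamp x to the interval [lo, hi]."""
    return max(lo, min(hi, x))


def ppo_clipped_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Per-sample PPO surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    `ratio` is pi_new(a|s) / pi_old(a|s); `advantage` is the advantage estimate.
    """
    unclipped = ratio * advantage
    clipped = clip(ratio, 1.0 - eps, 1.0 + eps) * advantage
    return min(unclipped, clipped)


# A large ratio with positive advantage is capped at (1 + eps) * A.
capped_gain = ppo_clipped_objective(ratio=1.5, advantage=1.0)
# A small ratio with negative advantage is evaluated at (1 - eps) * A.
capped_loss = ppo_clipped_objective(ratio=0.5, advantage=-1.0)
```

In practice this value is averaged over a batch and maximized by gradient ascent on the policy network parameters.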

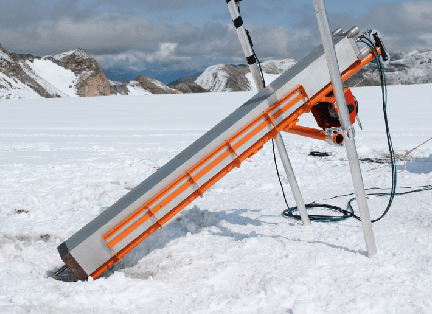

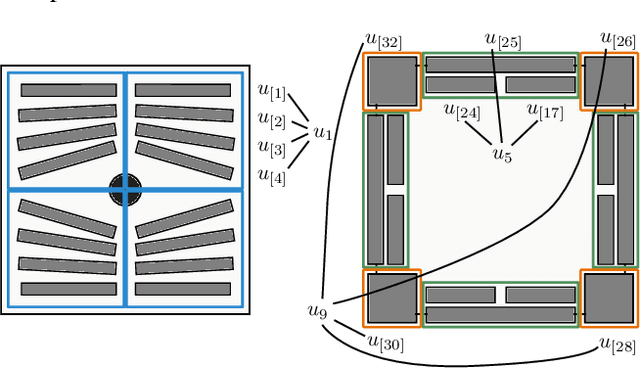

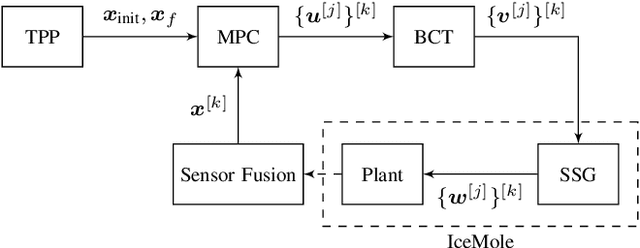

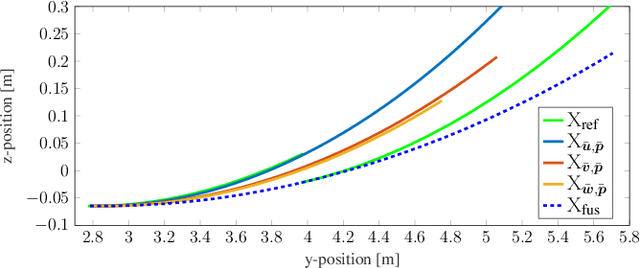

Optimization Strategies for Real-Time Control of an Autonomous Melting Probe

Apr 17, 2018

Abstract: We present an optimization-based approach for trajectory planning and control of a maneuverable melting probe with a high number of binary control variables. The dynamics of the system are modeled by a set of ordinary differential equations with a priori knowledge of system parameters of the melting process. The original planning problem is handled as an optimal control problem. Then, optimal control is used for reference trajectory planning as well as in an MPC-like algorithm. Finally, to determine binary control variables, a MINLP fitting approach is presented. The proposed strategy has recently been tested during experiments on the Langenferner glacier. The data obtained is used for model improvement by means of automated parameter identification.

* to be published in Proceedings of the 2018 American Control Conference, 7 pages, 6 figures
