Christian Sigg

Deep learning meets tree phenology modeling: PhenoFormer vs. process-based models

Oct 30, 2024

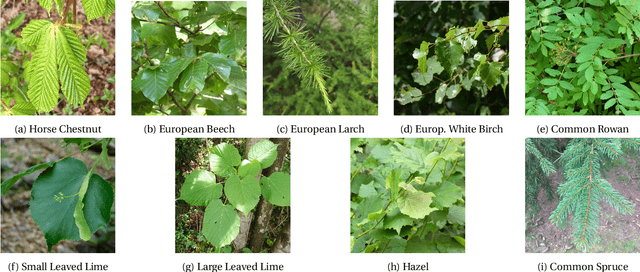

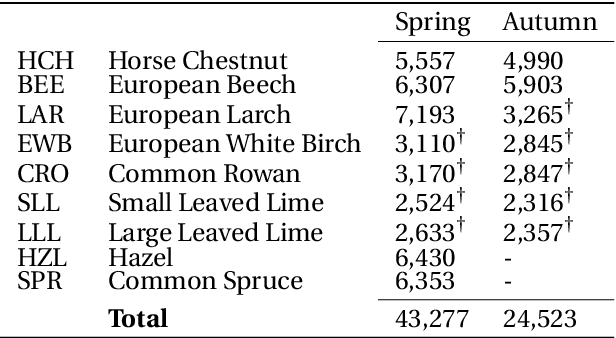

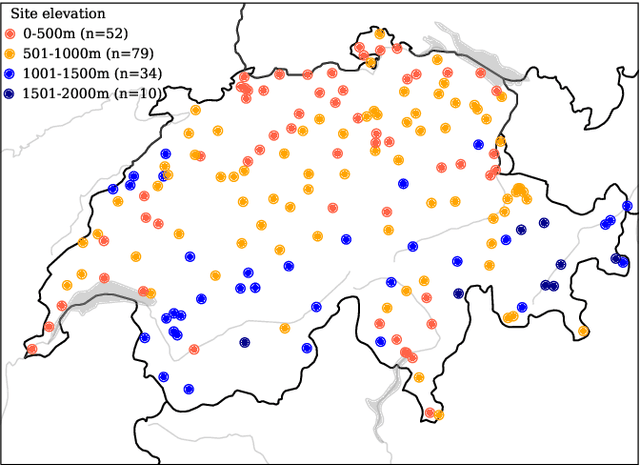

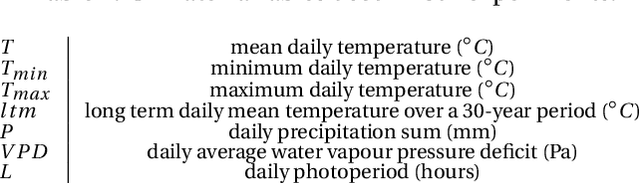

Abstract: Phenology, the timing of cyclical plant life events such as leaf emergence and coloration, is crucial in the bio-climatic system. Climate change drives shifts in these phenological events, impacting ecosystems and the climate itself. Accurate phenology models are essential to predict the occurrence of these phases under changing climatic conditions. Existing methods include hypothesis-driven process models and data-driven statistical approaches. Process models account for dormancy stages and various phenology drivers, while statistical models typically rely on linear or traditional machine learning techniques. Research shows that process models often outperform statistical methods when predicting under climate conditions outside historical ranges, especially with climate change scenarios. However, deep learning approaches remain underexplored in climate phenology modeling. We introduce PhenoFormer, a neural architecture better suited than traditional statistical methods to predicting phenology under shifts in the climate data distribution, while also significantly improving on, or performing on par with, the best-performing process-based models. Our numerical experiments on a 70-year dataset of 70,000 phenological observations from 9 woody species in Switzerland show that PhenoFormer outperforms traditional machine learning methods by an average of 13% R² and 1.1 days RMSE for spring phenology, and 11% R² and 0.7 days RMSE for autumn phenology, while matching or exceeding the best process-based models. Our results demonstrate that deep learning has the potential to be a valuable methodological tool for accurate climate-phenology prediction, and that PhenoFormer is a promising first step in improving phenological predictions before a complete understanding of the underlying physiological mechanisms is available.
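The two metrics quoted in the abstract can be illustrated with a minimal sketch. It uses the textbook definitions of RMSE and the coefficient of determination; the function names and the day-of-year values are hypothetical and are not taken from the paper or its dataset.

```python
import math


def rmse(observed, predicted):
    """Root-mean-square error, in the same unit as the inputs (here: days)."""
    squared_errors = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r2(observed, predicted):
    """Coefficient of determination: 1 - residual SS / total SS."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Hypothetical day-of-year values for a spring phase (illustration only).
observed = [100, 110, 120, 130]
predicted = [102, 108, 123, 129]

print(f"RMSE: {rmse(observed, predicted):.2f} days")
print(f"R2:   {r2(observed, predicted):.3f}")
```

RMSE is expressed in days, so a difference of 1.1 days between two models is directly interpretable, whereas R² measures the share of variance in the observed dates that the predictions explain.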

Photographic Visualization of Weather Forecasts with Generative Adversarial Networks

Mar 29, 2022

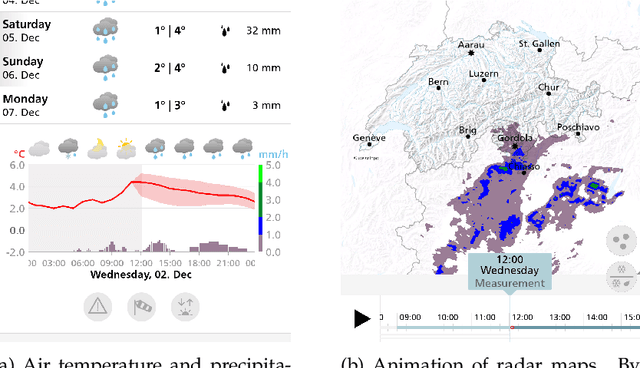

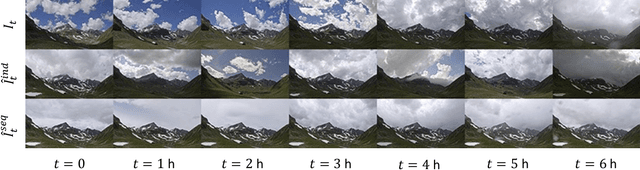

Abstract: Outdoor webcam images are an information-dense yet accessible visualization of past and present weather conditions, and are consulted by meteorologists and the general public alike. Weather forecasts, however, are still communicated as text, pictograms or charts. We therefore introduce a novel method that uses photographic images to also visualize future weather conditions. This is challenging, because photographic visualizations of weather forecasts should look real, be free of obvious artifacts, and match the predicted weather conditions. The transition from observation to forecast should be seamless, and there should be visual continuity between images for consecutive lead times. We use conditional Generative Adversarial Networks to synthesize such visualizations. The generator network, conditioned on the analysis and the forecasting state of the numerical weather prediction (NWP) model, transforms the present camera image into the future. The discriminator network judges whether a given image is the real image of the future, or whether it has been synthesized. Training the two networks against each other results in a visualization method that scores well on all four evaluation criteria. We present results for three camera sites across Switzerland that differ in climatology and terrain. We show that users find it challenging to distinguish real from generated images, performing not much better than if they guessed randomly. The generated images match the atmospheric, ground and illumination conditions of the COSMO-1 NWP model forecast in at least 89% of the examined cases. Nowcasting sequences of generated images achieve a seamless transition from observation to forecast and attain visual continuity.
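The adversarial training described above can be written, as a rough sketch, in the form of the standard conditional GAN objective. This is the generic textbook formulation and not necessarily the exact loss used in the paper. The symbols are assumptions introduced here: $x$ is the present camera image, $c$ the NWP analysis and forecast state, $y$ the real future image, $G$ the generator and $D$ the discriminator. Whether the discriminator also receives $x$ and $c$ is likewise an assumption, and any noise input to $G$ is omitted.

```latex
\min_G \max_D \;
  \mathbb{E}_{x, c, y}\bigl[\log D(y \mid x, c)\bigr]
  + \mathbb{E}_{x, c}\bigl[\log\bigl(1 - D(G(x, c) \mid x, c)\bigr)\bigr]
```

The first term rewards the discriminator for accepting real future images, and the second rewards it for rejecting synthesized ones, while the generator is trained to make the second term small.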
