Chitta Ranjan

Large Multistream Data Analytics for Monitoring and Diagnostics in Manufacturing Systems

Dec 26, 2018

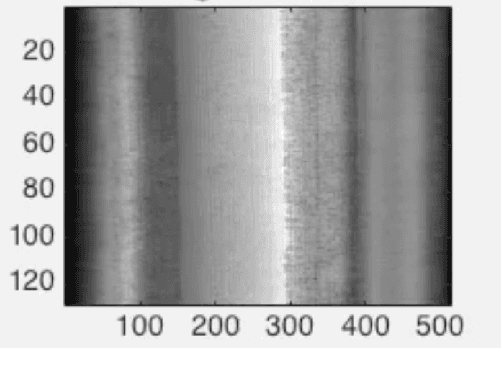

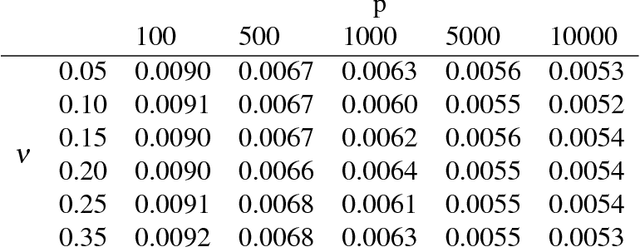

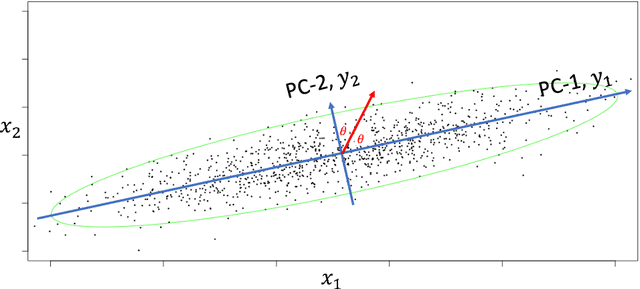

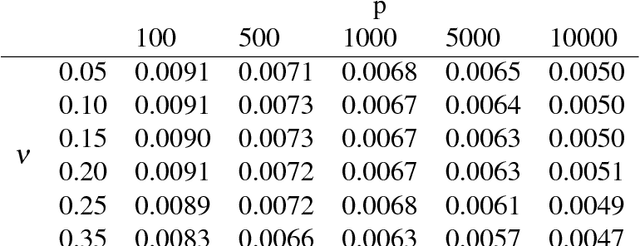

Abstract: The high dimensionality and volume of large-scale multistream data have inhibited significant research progress in developing an integrated monitoring and diagnostics (M&D) approach. Such data, also categorized as big data, are becoming common in manufacturing plants. In this paper, we propose an integrated M&D approach for large-scale streaming data. We develop a novel monitoring method, Adaptive Principal Component monitoring (APC), which adaptively chooses the principal components (PCs) that are most likely to vary due to the change, enabling early detection. Importantly, we integrate a novel diagnostic approach, Principal Component Signal Recovery (PCSR), to enable streamlined statistical process control (SPC). This diagnostic approach draws inspiration from compressed sensing and uses the adaptive lasso to identify the sparse change in the process. We theoretically motivate our approaches and evaluate the performance of our integrated M&D method through simulations and case studies.

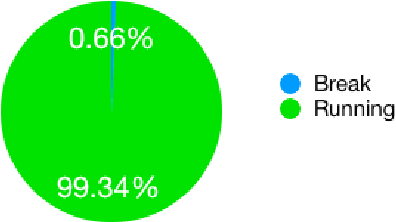

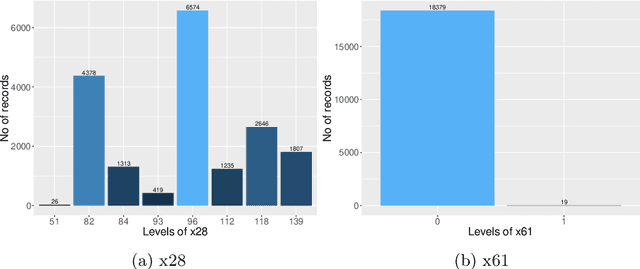

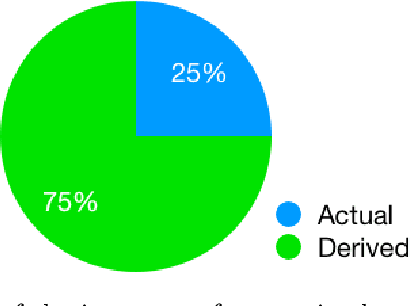

Dataset: Rare Event Classification in Multivariate Time Series

Oct 01, 2018

Abstract: A real-world dataset is provided from the pulp-and-paper manufacturing industry. The dataset comes from a multivariate time series process. The data contain a rare event, a paper break, that commonly occurs in the industry. The data consist of sensor readings at regular time intervals (x's) and the event label (y). The data are primarily intended for building a classification model for early prediction of the rare event. However, they can also be used for multivariate time series data exploration and for building other supervised and unsupervised models.
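To illustrate what "early prediction" could mean for a label column like y, here is a minimal sketch that marks the rows shortly before each event as positive. The function name, the `lead` parameter, and the toy label vector are assumptions made for illustration; the abstract does not prescribe this labeling scheme.

```python
from typing import List


def shift_labels_for_early_prediction(y: List[int], lead: int) -> List[int]:
    """Mark the `lead` rows before each event as positive.

    y[t] == 1 means the event occurs at row t. The returned list has 1 at
    row t when an event occurs in rows t+1 .. t+lead, so a classifier
    trained on it is asked to flag the event ahead of time. Rows where the
    event itself occurs are left at 0 here; dropping them instead is an
    equally plausible choice.
    """
    if lead < 1:
        raise ValueError("lead must be at least 1")
    shifted = [0] * len(y)
    for t, label in enumerate(y):
        if label == 1:
            # Rows t-lead .. t-1, clipped at the start of the series.
            for s in range(max(0, t - lead), t):
                shifted[s] = 1
    return shifted


# Toy example (hypothetical labels): events at rows 3 and 6.
labels = [0, 0, 0, 1, 0, 0, 1, 0]
early_labels = shift_labels_for_early_prediction(labels, lead=2)
```

A larger `lead` gives the model more warning time but makes the positive class less tightly tied to the event, so the value is a modeling choice rather than a property of the data.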

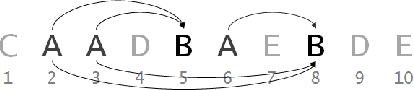

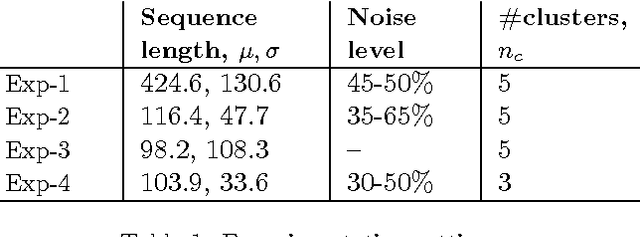

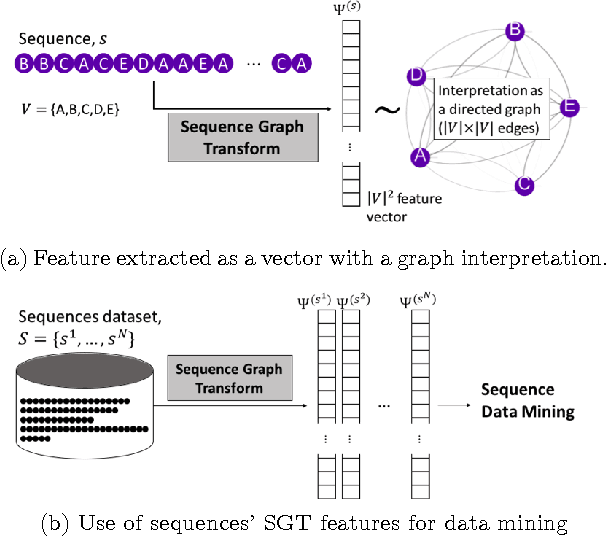

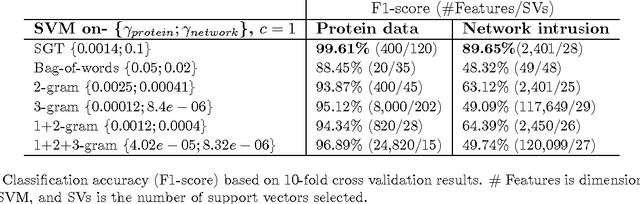

Sequence Graph Transform (SGT): A Feature Extraction Function for Sequence Data Mining (Extended Version)

Apr 30, 2017

Abstract: The ubiquitous presence of sequence data across fields such as the web, healthcare, bioinformatics, and text mining has made sequence mining a vital research area. However, sequence mining is particularly challenging because it is difficult to find a (dis)similarity or distance between sequences. A distance measure between sequences is not obvious because sequences are unstructured: they are arbitrary strings of arbitrary length. Feature representations, such as n-grams, are often used, but they either fail to extract both short- and long-term sequence patterns or are computationally expensive. We propose a new function, Sequence Graph Transform (SGT), that extracts the short- and long-term sequence features and embeds them in a finite-dimensional feature space. Importantly, SGT is computationally cheap and can extract any amount of short- to long-term patterns without any increase in computation, which is also proved theoretically in this paper. As a result, SGT yields superior results, with significantly higher accuracy and lower computation than existing methods. We show this through several experiments and through SGT's real-world applications to clustering, classification, search, and visualization.
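The trade-off attributed to n-grams above can be made concrete with a small sketch of the baseline. This is not SGT; it only shows plain n-gram counting, and the helper names and the toy sequence are assumptions for illustration.

```python
from collections import Counter
from typing import Sequence


def ngram_counts(sequence: Sequence[str], n: int) -> Counter:
    """Count every run of n consecutive symbols in the sequence."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return Counter(
        tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)
    )


def ngram_feature_space_size(alphabet_size: int, n: int) -> int:
    """Number of distinct n-grams possible over the alphabet."""
    return alphabet_size ** n


# Toy sequence (hypothetical): bigrams capture only adjacent symbols.
bigrams = ngram_counts("ABAB", 2)
```

Capturing longer-range patterns requires a larger n, and the number of possible features grows as the alphabet size raised to the power n (for example, 8000 possible 3-grams over a 20-symbol alphabet), which is the cost the abstract refers to.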

The Impact of Estimation: A New Method for Clustering and Trajectory Estimation in Patient Flow Modeling

Jan 30, 2017

Abstract: The ability to accurately forecast and control inpatient census, and thereby workloads, is a critical and longstanding problem in hospital management. The majority of the current literature focuses on optimal scheduling of inpatients but largely ignores accurate estimation of the trajectory of patients throughout the treatment and recovery process. As a result, current scheduling models optimize based on inaccurate input data. We developed a Clustering and Scheduling Integrated (CSI) approach to capture patient flows through a network of hospital services. CSI clusters patients into groups based on similarity of trajectory, using a novel Semi-Markov model (SMM)-based clustering scheme proposed in this paper, as opposed to clustering by admit type or condition as in previous literature. The methodology is validated by simulation and then applied to real patient data from a partner hospital, where it outperforms current methods. Further, we demonstrate that extant optimization methods achieve significantly better results on key hospital performance measures under CSI than under traditional estimation approaches: elective admissions increase by 97% and utilization by 22%, compared to 30% and 8% using traditional estimation techniques. From a theoretical standpoint, SMM-based clustering is a novel approach applicable to any temporal-spatial stochastic data, which are prevalent in many industries and application areas.
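As a rough illustration of the ingredients of a semi-Markov description of patient flow, the sketch below estimates transition probabilities between services and mean lengths of stay from observed trajectories. It fits a single model to all patients and does not reproduce the paper's clustering scheme; the unit names, the `(unit, stay)` data layout, and the function name are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Visit = Tuple[str, float]  # (service unit, length of stay in days)


def estimate_semi_markov(
    trajectories: List[List[Visit]],
) -> Tuple[Dict[str, Dict[str, float]], Dict[str, float]]:
    """Estimate transition probabilities and mean stay per unit."""
    transition_counts = defaultdict(lambda: defaultdict(int))
    stay_totals = defaultdict(float)
    stay_counts = defaultdict(int)

    for trajectory in trajectories:
        for index, (unit, stay) in enumerate(trajectory):
            stay_totals[unit] += stay
            stay_counts[unit] += 1
            # Count a transition only when a next visit exists.
            if index + 1 < len(trajectory):
                next_unit = trajectory[index + 1][0]
                transition_counts[unit][next_unit] += 1

    transition_probs = {}
    for unit, next_units in transition_counts.items():
        total = sum(next_units.values())
        transition_probs[unit] = {
            nxt: count / total for nxt, count in next_units.items()
        }
    mean_stay = {
        unit: stay_totals[unit] / stay_counts[unit] for unit in stay_totals
    }
    return transition_probs, mean_stay


# Two hypothetical patient trajectories.
patients = [
    [("ER", 1.0), ("ICU", 3.0), ("Ward", 2.0)],
    [("ER", 2.0), ("Ward", 4.0)],
]
probs, stays = estimate_semi_markov(patients)
```

Summarizing the sojourn time by its mean is a simplification; a semi-Markov model generally allows an arbitrary sojourn-time distribution per state.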

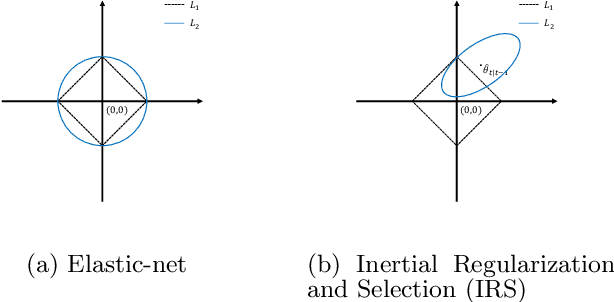

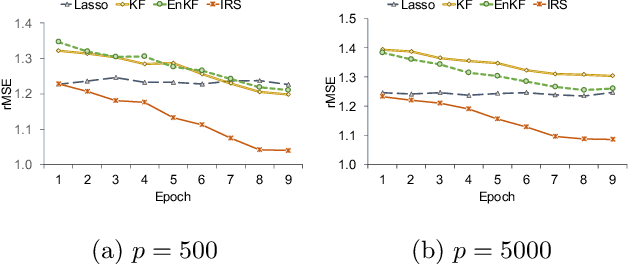

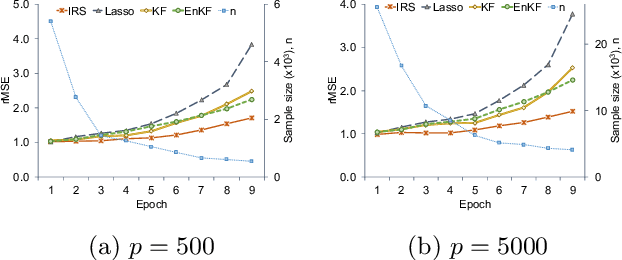

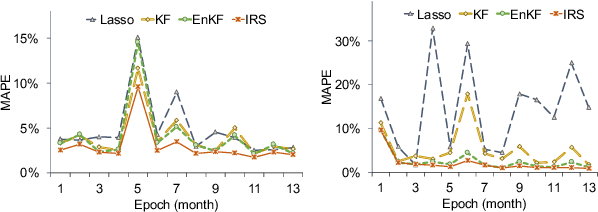

Inertial Regularization and Selection (IRS): Sequential Regression in High-Dimension and Sparsity

Jan 10, 2017

Abstract: In this paper, we develop a new sequential regression modeling approach for data streams. Data streams are common; for example, in a retail enterprise, sales data are collected continuously every day. A demand forecasting model is an important outcome of such data and needs to be continuously updated as new data arrive. The main challenge in such modeling arises when there is a) high dimensionality and sparsity, b) a need for adaptive use of prior knowledge, and/or c) structural change in the system. The proposed approach addresses these challenges by incorporating an adaptive L1 penalty and inertia terms in the loss function, and is thus called Inertial Regularization and Selection (IRS). The former term performs model selection to handle the first challenge, while the latter is shown to address the last two challenges. A recursive estimation algorithm is developed and shown to outperform commonly used state-space models, such as Kalman filters, in experimental studies and on real data.
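One way to picture a loss that combines an L1 penalty with an inertia term is the sketch below. It minimizes the sum of squared errors plus `inertia_weight` times the squared distance to the previous coefficients plus `l1_weight` times the L1 norm, using coordinate descent. This exact loss, the fixed (non-adaptive) L1 weight, the solver, and all names are assumptions for illustration, not the paper's recursive algorithm.

```python
from typing import List


def soft_threshold(value: float, threshold: float) -> float:
    """Shrink value toward zero by threshold, clipping at zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def inertial_l1_fit(
    X: List[List[float]],
    y: List[float],
    previous: List[float],
    l1_weight: float,
    inertia_weight: float,
    sweeps: int = 100,
) -> List[float]:
    """Coordinate descent on
    sum_i (y_i - x_i . b)^2 + inertia_weight * ||b - previous||^2
    + l1_weight * ||b||_1.
    """
    n_features = len(previous)
    coef = list(previous)  # warm start from the previous estimate
    for _ in range(sweeps):
        for j in range(n_features):
            curvature = inertia_weight
            correlation = inertia_weight * previous[j]
            for row, target in zip(X, y):
                # Prediction from every feature except j.
                partial = sum(
                    row[k] * coef[k] for k in range(n_features) if k != j
                )
                curvature += row[j] ** 2
                correlation += row[j] * (target - partial)
            if curvature > 0:
                coef[j] = soft_threshold(correlation, l1_weight / 2) / curvature
            else:
                coef[j] = 0.0
    return coef


# Toy batch (hypothetical): the second feature has a weak signal.
X_batch = [[1.0, 0.0], [0.0, 1.0]]
y_batch = [2.0, 0.1]
no_inertia = inertial_l1_fit(X_batch, y_batch, [0.0, 0.0], 1.0, 0.0)
with_inertia = inertial_l1_fit(X_batch, y_batch, [2.0, 0.0], 1.0, 1.0)
```

In this toy batch the L1 term sets the weak second coefficient to zero, while the inertia term pulls the first coefficient toward its previous value of 2.0.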
