Cheol-Hwan Yoo

Effective Shortcut Technique for GAN

Jan 27, 2022

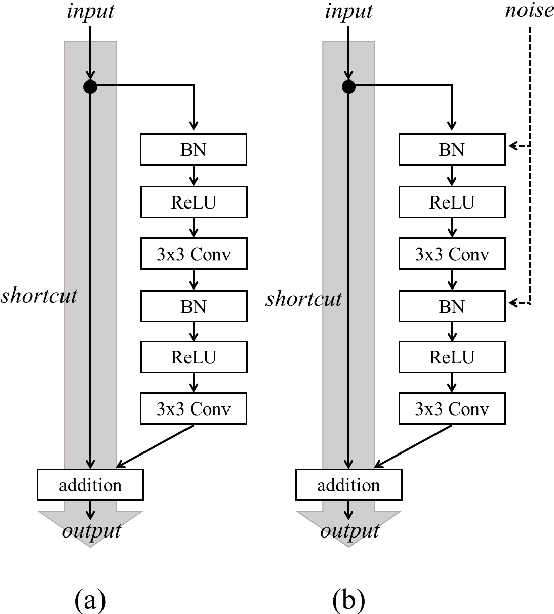

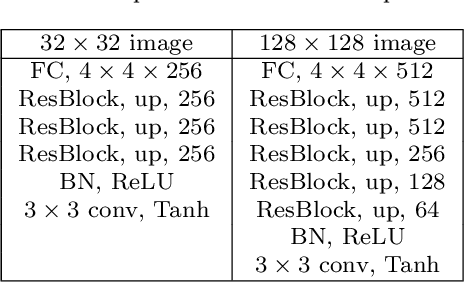

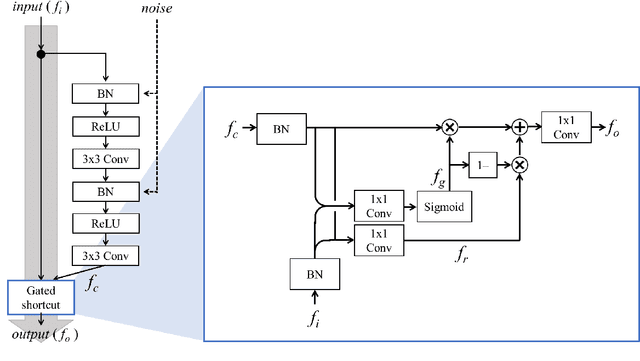

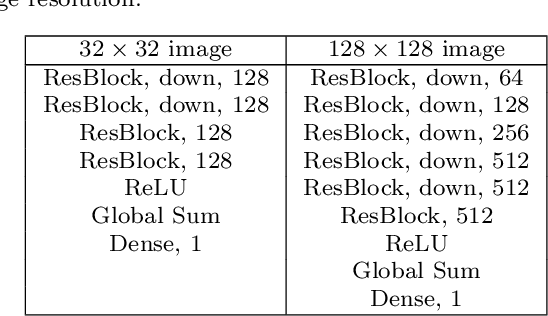

Abstract: In recent years, generative adversarial network (GAN)-based image generation techniques have designed their generators by stacking multiple residual blocks. A residual block generally contains a shortcut, i.e., a skip connection, which effectively supports information propagation through the network. In this paper, we propose a novel shortcut method, called the gated shortcut, which not only retains the strengths of the residual block but also further boosts GAN performance. More specifically, based on a gating mechanism, the proposed method leads the residual block to keep information that is relevant to the image being generated and to remove information that is irrelevant. To demonstrate that the proposed method brings significant improvements in GAN performance, this paper provides extensive experimental results on standard datasets such as CIFAR-10, CIFAR-100, LSUN, and tiny-ImageNet. Quantitative evaluations show that the gated shortcut achieves strong GAN performance in terms of Fréchet inception distance (FID) and Inception score (IS). For instance, on the tiny-ImageNet dataset the proposed method improves the FID from 35.13 to 27.90 and the IS from 20.23 to 23.42.
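The abstract does not give the exact form of the gated shortcut, so the following is only a minimal sketch of the general idea of gating a skip connection. It assumes an element-wise sigmoid gate computed from the block input; the names `gated_shortcut_block`, `gate_weights`, and `gate_bias` are hypothetical, and the paper's actual formulation may differ.

```python
import math


def sigmoid(z):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def gated_shortcut_block(x, residual_fn, gate_weights, gate_bias):
    """Illustrative gated residual block on a flat feature vector.

    Assumption (not taken from the paper): the gate is an element-wise
    sigmoid of an affine function of the input, and it scales the
    shortcut path before the residual branch is added:

        out_i = gate_i * x_i + residual_fn(x)_i
    """
    gate = [sigmoid(w * xi + b) for w, xi, b in zip(gate_weights, x, gate_bias)]
    residual = residual_fn(x)
    return [g * xi + r for g, xi, r in zip(gate, x, residual)]


def zero_residual(x):
    """Stand-in residual branch that contributes nothing."""
    return [0.0] * len(x)


# A gate close to 1 passes the shortcut through; a gate close to 0 removes it.
kept = gated_shortcut_block([1.0, -2.0], zero_residual, [0.0, 0.0], [50.0, 50.0])
dropped = gated_shortcut_block([1.0, -2.0], zero_residual, [0.0, 0.0], [-50.0, -50.0])
```

In this sketch a plain residual block is the special case where every gate value equals 1, which is why a learned gate can, in principle, fall back to the ordinary shortcut.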

A Simple yet Effective Way for Improving the Performance of GANs

Dec 11, 2019

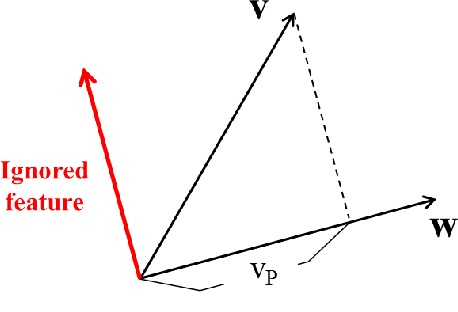

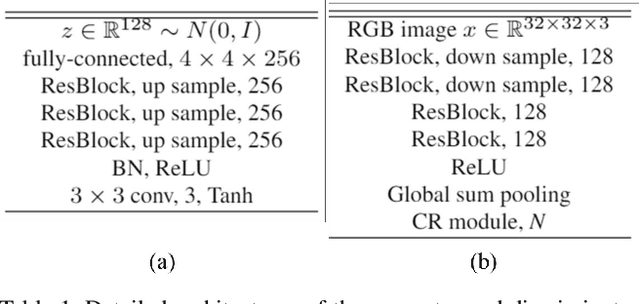

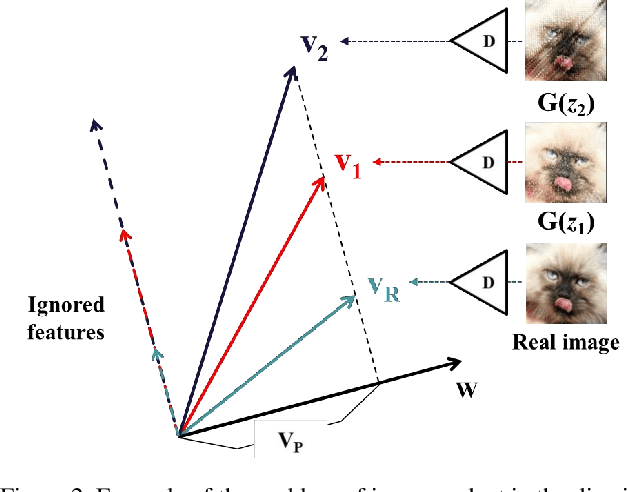

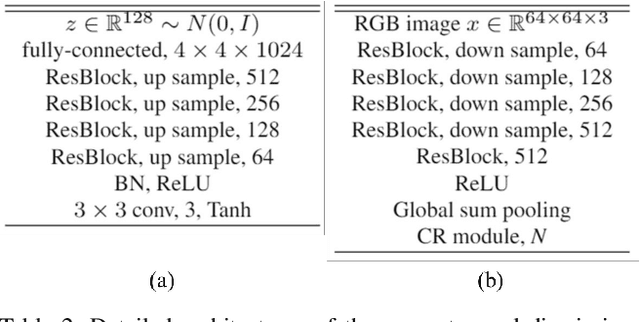

Abstract: This paper presents a simple but effective way to improve the performance of generative adversarial networks (GANs) without imposing training overhead or modifying the network architectures of existing methods. The proposed method employs a novel cascading rejection (CR) module for the discriminator, which iteratively extracts multiple non-overlapping features. The CR module helps the discriminator distinguish effectively between real and generated images, which results in a strong penalty on the generator. To deceive the robust discriminator containing the CR module, the generator must produce images that are more similar to real images. Since the proposed CR module requires only a few simple vector operations, it can be readily applied to existing frameworks with marginal training overhead. Quantitative evaluations on various datasets, including CIFAR-10, Celeb-HQ, LSUN, and tiny-ImageNet, confirm that the proposed method significantly improves the performance of GANs and conditional GANs in terms of Fréchet inception distance (FID), which reflects the diversity and visual appearance of the generated images.
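The abstract says only that the CR module uses a few simple vector operations to extract non-overlapping features iteratively. As a rough illustration, the sketch below uses standard vector rejection (removing the component of a vector that lies along a given direction) applied in a cascade. The function names, the choice of `directions`, and the use of a dot product as the per-stage score are all assumptions for illustration, not the paper's stated design.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def reject(v, w):
    """Vector rejection: the part of v orthogonal to w (w must be nonzero)."""
    scale = dot(v, w) / dot(w, w)
    return [vi - scale * wi for vi, wi in zip(v, w)]


def cascading_rejection(feature, directions):
    """Hypothetical cascade: score the current feature against each
    direction, then remove that direction before the next stage, so
    later stages see only information not already used."""
    scores = []
    residual = list(feature)
    for w in directions:
        scores.append(dot(residual, w))
        residual = reject(residual, w)
    return scores, residual


scores, residual = cascading_rejection(
    [3.0, 4.0, 5.0],
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
)
```

After each stage the remaining feature is orthogonal to the direction just used, which is one way to read "non-overlapping" in the abstract.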
