Chengfang Li

Anomaly Detection and Generation with Diffusion Models: A Survey

Jun 11, 2025

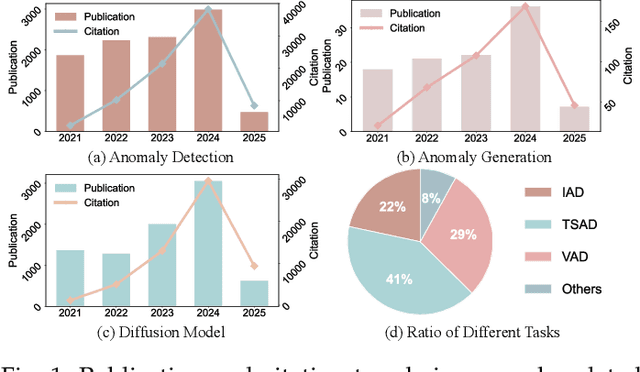

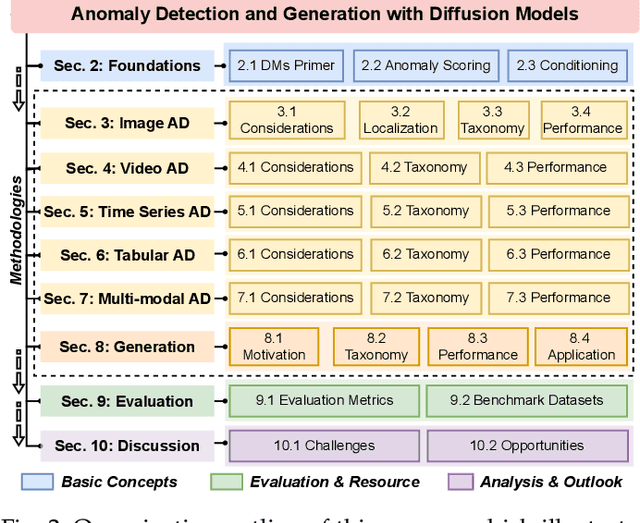

Abstract: Anomaly detection (AD) plays a pivotal role across diverse domains, including cybersecurity, finance, healthcare, and industrial manufacturing, by identifying unexpected patterns that deviate from established norms in real-world data. Recent advancements in deep learning, specifically diffusion models (DMs), have sparked significant interest due to their ability to learn complex data distributions and generate high-fidelity samples, offering a robust framework for unsupervised AD. In this survey, we comprehensively review anomaly detection and generation with diffusion models (ADGDM), presenting a tutorial-style analysis of the theoretical foundations and practical implementations that spans images, videos, time series, tabular, and multimodal data. Crucially, unlike existing surveys that often treat anomaly detection and generation as separate problems, we highlight their inherent synergistic relationship. We reveal how DMs enable a reinforcing cycle where generation techniques directly address the fundamental challenge of anomaly data scarcity, while detection methods provide critical feedback to improve generation fidelity and relevance, advancing both capabilities beyond their individual potential. A detailed taxonomy categorizes ADGDM methods based on anomaly scoring mechanisms, conditioning strategies, and architectural designs, analyzing their strengths and limitations. We finally discuss key challenges, including scalability and computational efficiency, and outline promising future directions such as efficient architectures, conditioning strategies, and integration with foundation models (e.g., visual-language models and large language models). By synthesizing recent advances and outlining open research questions, this survey aims to guide researchers and practitioners in leveraging DMs for innovative AD solutions across diverse applications.

Rethinking Saliency Map: An Context-aware Perturbation Method to Explain EEG-based Deep Learning Model

May 30, 2022

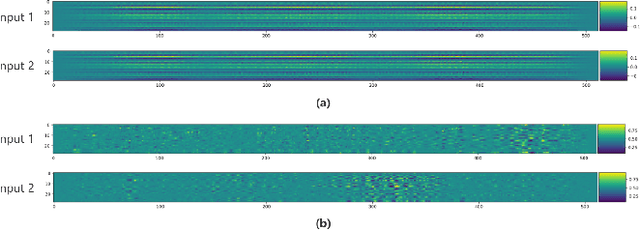

Abstract: Deep learning is widely used to decode the electroencephalogram (EEG) signal. However, there have been few attempts to specifically investigate how to explain EEG-based deep learning models. We conduct a review to summarize the existing work on explaining EEG-based deep learning models. Unfortunately, we find that there is no appropriate method to explain them. Based on the characteristics of EEG data, we suggest a context-aware perturbation method to generate a saliency map from the perspective of the raw EEG signal. Moreover, we also justify that the context information can be used to suppress the artifacts in the EEG-based deep learning model. In practice, some users might want a simple version of the explanation, which only indicates a few features as salient points. To this end, we propose an optional area limitation strategy to restrict the highlighted region. To validate our idea and compare it with other methods, we select three representative EEG-based models and run experiments on the emotional EEG dataset DEAP. The results of the experiments support the advantages of our method.
