Cheng-Yu Lin

Think or Remember? Detecting and Directing LLMs Towards Memorization or Generalization

Dec 24, 2024

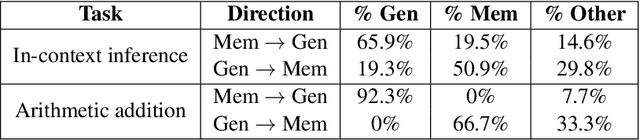

Abstract: In this paper, we explore the foundational mechanisms of memorization and generalization in Large Language Models (LLMs), inspired by the functional specialization observed in the human brain. Our investigation is a case study that uses specially designed datasets and experimental-scale LLMs to lay the groundwork for understanding these behaviors. Specifically, we first train LLMs on the designed datasets so that they exhibit both memorization and generalization, and then (a) examine whether LLMs exhibit neuron-level spatial differentiation between memorization and generalization, (b) predict these behaviors from the models' internal representations, and (c) steer the behaviors through inference-time interventions. Our findings show that neuron-wise differentiation of memorization and generalization is observable in LLMs and that targeted interventions can successfully direct their behavior.
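
The abstract does not specify how behaviors are predicted or steered. One common pattern for items (b) and (c) is a difference-of-means direction in hidden-state space, used both as a crude linear probe and as an additive steering vector at inference time. The sketch below illustrates only that generic pattern under stated assumptions; the function names, the toy activations, and the use of a single mean-difference direction are hypothetical and are not taken from the paper.

```python
# Hypothetical sketch of difference-of-means probing and steering.
# NOT the paper's method: names and the single-direction assumption are illustrative.
from typing import List

Vector = List[float]


def mean_vector(rows: List[Vector]) -> Vector:
    """Element-wise mean of equal-length activation vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def steering_direction(mem_acts: List[Vector], gen_acts: List[Vector]) -> Vector:
    """Direction from the mean 'generalization' activation to the mean 'memorization' activation."""
    mu_mem = mean_vector(mem_acts)
    mu_gen = mean_vector(gen_acts)
    return [m - g for m, g in zip(mu_mem, mu_gen)]


def steer(hidden: Vector, direction: Vector, alpha: float) -> Vector:
    """Inference-time intervention: shift a hidden state along the direction.

    alpha > 0 pushes toward the memorization mean, alpha < 0 toward generalization.
    """
    return [h + alpha * d for h, d in zip(hidden, direction)]


def probe_score(hidden: Vector, direction: Vector, threshold: float) -> bool:
    """Crude linear probe: True if the projection onto the direction exceeds threshold."""
    return sum(h * d for h, d in zip(hidden, direction)) > threshold
```

With toy two-dimensional activations, `steering_direction([[1.0, 0.0], [3.0, 0.0]], [[0.0, 1.0], [0.0, 3.0]])` gives `[2.0, -2.0]`; in a real model the vectors would come from a chosen layer's hidden states, and the layer, scale `alpha`, and probe threshold would all need to be tuned.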

Frequency Reversal Alamouti Code-Based FBMC with Resilience to Inter-Antenna Frequency Offsets

Sep 14, 2022

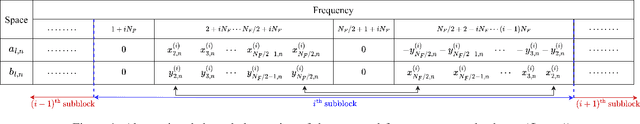

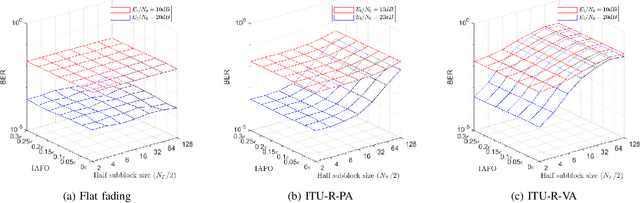

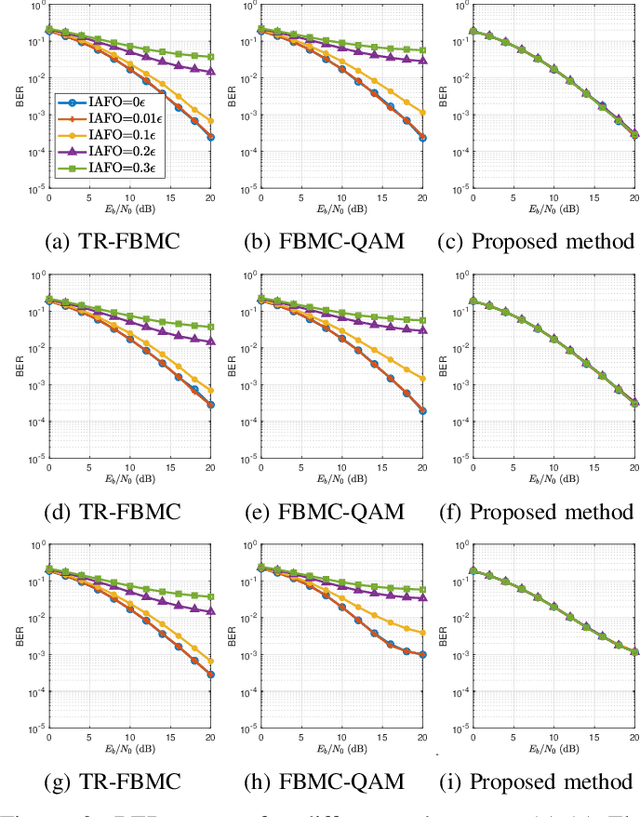

Abstract: Transmit diversity schemes for filter bank multicarrier (FBMC) systems are known to be challenging to design. No existing scheme accounts for inter-antenna frequency offset (IAFO), which degrades performance. In this letter, we propose a new transmit scheme built on a frequency reversal Alamouti code (FRAC) structure to address IAFO, and we prove that it inherently cancels inter-antenna inter-carrier interference (ICI) while preserving spatial diversity. Moreover, the proposed FRAC structure is applicable to frequency-selective channels. Numerical results show that the proposed scheme suffers negligible bit error rate (BER) degradation even with considerable IAFOs.
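
For readers unfamiliar with the Alamouti code that FRAC builds on, the sketch below shows the classic two-transmit-antenna, one-receive-antenna Alamouti scheme over a noise-free flat-fading channel that stays constant across two slots. It is a minimal illustration of the standard code only; it does not model FBMC, frequency reversal, or IAFO, and how FRAC maps the Alamouti pairing onto FBMC subcarriers is not described in the abstract. The function names are hypothetical.

```python
# Classic 2x1 Alamouti space-time block code, NOT the FRAC-FBMC scheme.
# Assumes a noise-free flat-fading channel constant over two symbol slots.
from typing import Tuple

Pair = Tuple[complex, complex]


def alamouti_encode(s1: complex, s2: complex) -> Tuple[Pair, Pair]:
    """Return (antenna-1 symbols, antenna-2 symbols) for slots 1 and 2."""
    ant1 = (s1, -s2.conjugate())
    ant2 = (s2, s1.conjugate())
    return ant1, ant2


def flat_fading_channel(ant1: Pair, ant2: Pair, h1: complex, h2: complex) -> Pair:
    """Received samples r1, r2 at a single receive antenna (no noise)."""
    r1 = h1 * ant1[0] + h2 * ant2[0]
    r2 = h1 * ant1[1] + h2 * ant2[1]
    return r1, r2


def alamouti_combine(r1: complex, r2: complex, h1: complex, h2: complex) -> Pair:
    """Linear combining with known channel gains.

    The cross terms cancel, leaving (|h1|^2 + |h2|^2) * s_k, which is then
    normalized so the estimates equal the transmitted symbols in the noise-free case.
    """
    gain = abs(h1) ** 2 + abs(h2) ** 2
    s1_hat = (h1.conjugate() * r1 + h2 * r2.conjugate()) / gain
    s2_hat = (h2.conjugate() * r1 - h1 * r2.conjugate()) / gain
    return s1_hat, s2_hat
```

The combining gain $|h_1|^2 + |h_2|^2$ is what provides second-order spatial diversity; the FRAC design described in the abstract aims to keep this property while also cancelling the inter-antenna ICI that IAFO introduces.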