Cheng-Fu Chou

M-ErasureBench: A Comprehensive Multimodal Evaluation Benchmark for Concept Erasure in Diffusion Models

Dec 28, 2025Abstract:Text-to-image diffusion models may generate harmful or copyrighted content, motivating research on concept erasure. However, existing approaches primarily focus on erasing concepts from text prompts, overlooking other input modalities that are increasingly critical in real-world applications such as image editing and personalized generation. These modalities can become attack surfaces, where erased concepts re-emerge despite defenses. To bridge this gap, we introduce M-ErasureBench, a novel multimodal evaluation framework that systematically benchmarks concept erasure methods across three input modalities: text prompts, learned embeddings, and inverted latents. For the latter two, we evaluate both white-box and black-box access, yielding five evaluation scenarios. Our analysis shows that existing methods achieve strong erasure performance against text prompts but largely fail under learned embeddings and inverted latents, with Concept Reproduction Rate (CRR) exceeding 90% in the white-box setting. To address these vulnerabilities, we propose IRECE (Inference-time Robustness Enhancement for Concept Erasure), a plug-and-play module that localizes target concepts via cross-attention and perturbs the associated latents during denoising. Experiments demonstrate that IRECE consistently restores robustness, reducing CRR by up to 40% under the most challenging white-box latent inversion scenario, while preserving visual quality. To the best of our knowledge, M-ErasureBench provides the first comprehensive benchmark of concept erasure beyond text prompts. Together with IRECE, our benchmark offers practical safeguards for building more reliable protective generative models.

DiffQRCoder: Diffusion-based Aesthetic QR Code Generation with Scanning Robustness Guided Iterative Refinement

Sep 10, 2024

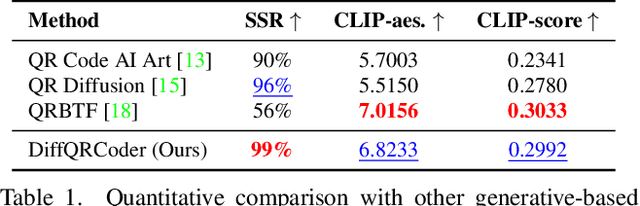

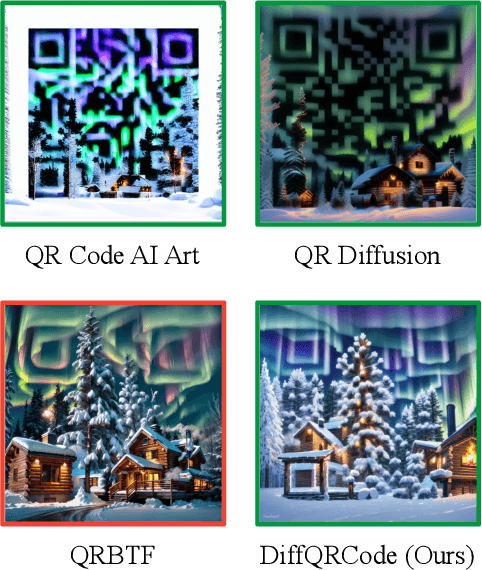

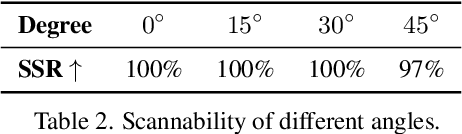

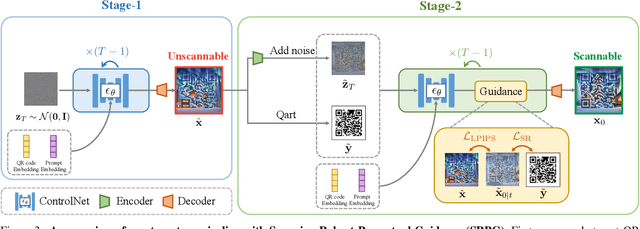

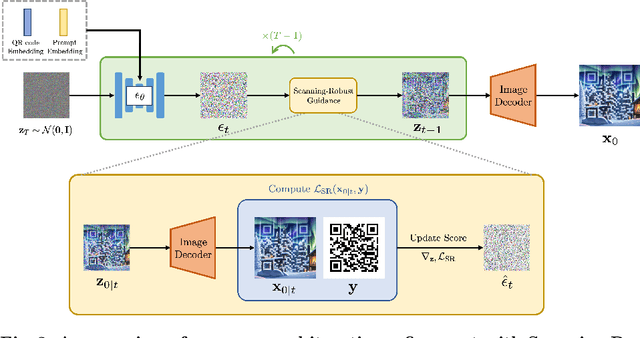

Abstract:With the success of Diffusion Models for image generation, the technologies also have revolutionized the aesthetic Quick Response (QR) code generation. Despite significant improvements in visual attractiveness for the beautified codes, their scannabilities are usually sacrificed and thus hinder their practical uses in real-world scenarios. To address this issue, we propose a novel Diffusion-based QR Code generator (DiffQRCoder) to effectively craft both scannable and visually pleasing QR codes. The proposed approach introduces Scanning-Robust Perceptual Guidance (SRPG), a new diffusion guidance for Diffusion Models to guarantee the generated aesthetic codes to obey the ground-truth QR codes while maintaining their attractiveness during the denoising process. Additionally, we present another post-processing technique, Scanning Robust Manifold Projected Gradient Descent (SR-MPGD), to further enhance their scanning robustness through iterative latent space optimization. With extensive experiments, the results demonstrate that our approach not only outperforms other compared methods in Scanning Success Rate (SSR) with better or comparable CLIP aesthetic score (CLIP-aes.) but also significantly improves the SSR of the ControlNet-only approach from 60% to 99%. The subjective evaluation indicates that our approach achieves promising visual attractiveness to users as well. Finally, even with different scanning angles and the most rigorous error tolerance settings, our approach robustly achieves over 95% SSR, demonstrating its capability for real-world applications.

Pixel Is Not A Barrier: An Effective Evasion Attack for Pixel-Domain Diffusion Models

Aug 21, 2024Abstract:Diffusion Models have emerged as powerful generative models for high-quality image synthesis, with many subsequent image editing techniques based on them. However, the ease of text-based image editing introduces significant risks, such as malicious editing for scams or intellectual property infringement. Previous works have attempted to safeguard images from diffusion-based editing by adding imperceptible perturbations. These methods are costly and specifically target prevalent Latent Diffusion Models (LDMs), while Pixel-domain Diffusion Models (PDMs) remain largely unexplored and robust against such attacks. Our work addresses this gap by proposing a novel attacking framework with a feature representation attack loss that exploits vulnerabilities in denoising UNets and a latent optimization strategy to enhance the naturalness of protected images. Extensive experiments demonstrate the effectiveness of our approach in attacking dominant PDM-based editing methods (e.g., SDEdit) while maintaining reasonable protection fidelity and robustness against common defense methods. Additionally, our framework is extensible to LDMs, achieving comparable performance to existing approaches.

Diffusion-based Aesthetic QR Code Generation via Scanning-Robust Perceptual Guidance

Mar 23, 2024

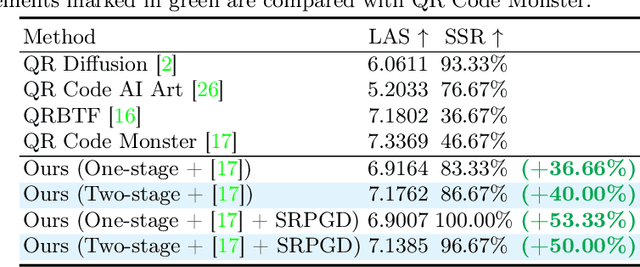

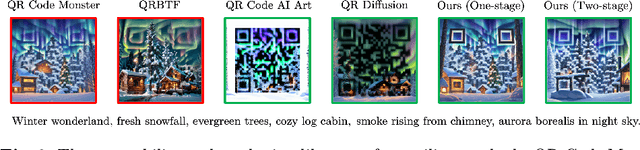

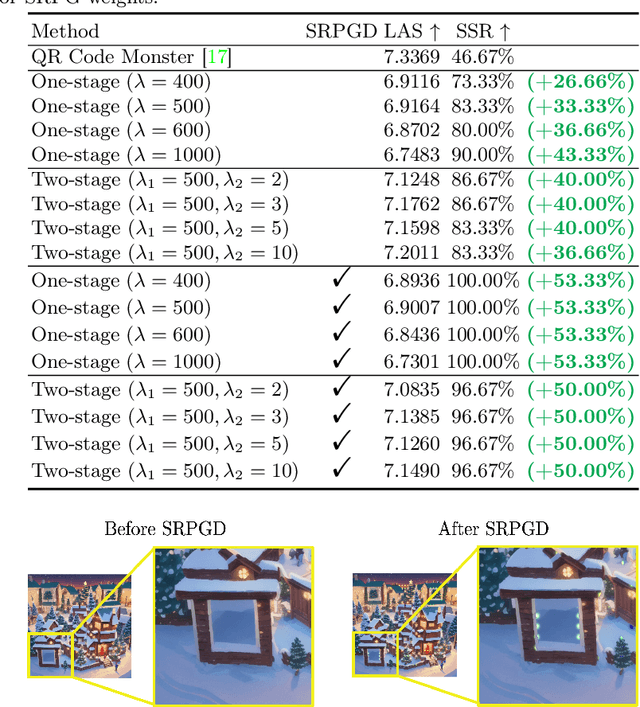

Abstract:QR codes, prevalent in daily applications, lack visual appeal due to their conventional black-and-white design. Integrating aesthetics while maintaining scannability poses a challenge. In this paper, we introduce a novel diffusion-model-based aesthetic QR code generation pipeline, utilizing pre-trained ControlNet and guided iterative refinement via a novel classifier guidance (SRG) based on the proposed Scanning-Robust Loss (SRL) tailored with QR code mechanisms, which ensures both aesthetics and scannability. To further improve the scannability while preserving aesthetics, we propose a two-stage pipeline with Scanning-Robust Perceptual Guidance (SRPG). Moreover, we can further enhance the scannability of the generated QR code by post-processing it through the proposed Scanning-Robust Projected Gradient Descent (SRPGD) post-processing technique based on SRL with proven convergence. With extensive quantitative, qualitative, and subjective experiments, the results demonstrate that the proposed approach can generate diverse aesthetic QR codes with flexibility in detail. In addition, our pipelines outperforming existing models in terms of Scanning Success Rate (SSR) 86.67% (+40%) with comparable aesthetic scores. The pipeline combined with SRPGD further achieves 96.67% (+50%). Our code will be available https://github.com/jwliao1209/DiffQRCode.

A Hybrid Approach for Binary Classification of Imbalanced Data

Jul 07, 2022

Abstract:Binary classification with an imbalanced dataset is challenging. Models tend to consider all samples as belonging to the majority class. Although existing solutions such as sampling methods, cost-sensitive methods, and ensemble learning methods improve the poor accuracy of the minority class, these methods are limited by overfitting problems or cost parameters that are difficult to decide. We propose HADR, a hybrid approach with dimension reduction that consists of data block construction, dimentionality reduction, and ensemble learning with deep neural network classifiers. We evaluate the performance on eight imbalanced public datasets in terms of recall, G-mean, and AUC. The results show that our model outperforms state-of-the-art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge