Charles Kervrann

INRIA

On normalization-equivariance properties of supervised and unsupervised denoising methods: a survey

Feb 23, 2024

Abstract:Image denoising is probably the oldest and still one of the most active research topics in image processing. Many methodological concepts have been introduced in the past decades and have improved performance significantly in recent years, especially with the emergence of convolutional neural networks and supervised deep learning. In this paper, we propose a survey and guided tour of supervised and unsupervised learning methods for image denoising, classifying the main principles elaborated during this evolution, with particular attention given to recent developments in supervised learning. It is conceived as a tutorial that organizes current approaches in a comprehensive framework. We give insights into the rationales and limitations of the best-performing methods in the literature, and we highlight the features common to many of them. Finally, we focus on the normalization-equivariance property, which is surprisingly not guaranteed by most supervised methods. It is of paramount importance that intensity shifting or scaling applied to the input image results in a corresponding change in the denoiser output.

A unified framework of non-local parametric methods for image denoising

Feb 21, 2024

Abstract:We propose a unified view of non-local methods for single-image denoising, for which BM3D is the most popular representative, that operate by gathering noisy patches together according to their similarities in order to process them collaboratively. Our general estimation framework is based on the minimization of the quadratic risk, which is approximated in two steps, and adapts to photon and electronic noise. Relying on unbiased risk estimation (URE) for the first step and on "internal adaptation", a concept borrowed from deep learning theory, for the second, we show that our approach makes it possible to reinterpret and reconcile previous state-of-the-art non-local methods. Within this framework, we propose a novel denoiser called NL-Ridge that exploits linear combinations of patches. While conceptually simpler, we show that NL-Ridge can outperform well-established state-of-the-art single-image denoisers.

Normalization-Equivariant Neural Networks with Application to Image Denoising

Jun 08, 2023

Abstract:In many information processing systems, it may be desirable to ensure that any change of the input, whether by shifting or scaling, results in a corresponding change in the system response. While deep neural networks are gradually replacing all traditional automatic processing methods, they surprisingly do not guarantee such a normalization-equivariance (scale + shift) property, which can be detrimental in many applications. To address this issue, we propose a methodology for adapting existing neural networks so that normalization-equivariance holds by design. Our main claim is that not only ordinary convolutional layers, but also all activation functions, including the ReLU (rectified linear unit), which are applied element-wise to the pre-activated neurons, should be completely removed from neural networks and replaced by better-conditioned alternatives. To this end, we introduce affine-constrained convolutions and channel-wise sort pooling layers as surrogates and show that these two architectural modifications do preserve normalization-equivariance without loss of performance. Experimental results in image denoising show that normalization-equivariant neural networks, in addition to their better conditioning, also provide much better generalization across noise levels.
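The property described in this abstract can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes that "affine-constrained" means bias-free filters whose weights sum to one, and that "sort pooling" sorts consecutive pairs of channels. The function names (`project_sum_to_one`, `affine_conv`, `sort_pool`, `tiny_net`, `relu_net`) and the 1-D toy setting are hypothetical choices made for illustration. Under these assumptions, the toy network satisfies f(a·x + b) = a·f(x) + b for a > 0, while a ReLU counterpart with unconstrained weights generally does not.

```python
import numpy as np

def project_sum_to_one(w):
    # Assumed form of the affine constraint: orthogonal projection of the
    # weights onto the hyperplane {w : sum(w) = 1}, along the last axis.
    return w - (w.sum(axis=-1, keepdims=True) - 1.0) / w.shape[-1]

def windows_of(x, k):
    # All length-k sliding windows of a 1-D signal, shape (n - k + 1, k).
    return np.stack([x[i:i + k] for i in range(len(x) - k + 1)])

def affine_conv(x, filters):
    # Bias-free "valid" correlation with sum-to-one filters.
    # x: (n,), filters: (c, k) -> output: (c, n - k + 1).
    return project_sum_to_one(filters) @ windows_of(x, filters.shape[1]).T

def sort_pool(h):
    # Sort each consecutive pair of channels (min first, max second).
    # h: (c, m) with c even.
    c, m = h.shape
    return np.sort(h.reshape(c // 2, 2, m), axis=1).reshape(c, m)

def tiny_net(x, filters, mix):
    # Affine conv -> sort pooling -> sum-to-one channel mixing.
    return project_sum_to_one(mix) @ sort_pool(affine_conv(x, filters))

def relu_net(x, filters, mix):
    # Same layout with unconstrained weights and a ReLU, for comparison.
    return mix @ np.maximum(filters @ windows_of(x, filters.shape[1]).T, 0.0)

rng = np.random.default_rng(0)
x = rng.normal(size=32)
filters = rng.normal(size=(4, 5))
mix = rng.normal(size=4)
a, b = 3.0, -7.0  # positive scale, arbitrary shift

equivariant = np.allclose(tiny_net(a * x + b, filters, mix),
                          a * tiny_net(x, filters, mix) + b)
relu_equivariant = np.allclose(relu_net(a * x + b, filters, mix),
                               a * relu_net(x, filters, mix) + b)
print("sort-pool net equivariant:", equivariant)
print("ReLU net equivariant:", relu_equivariant)
```

The check relies on two facts: a bias-free filter whose weights sum to one maps a·x + b to a·(filtered x) + b, and sorting commutes with any increasing affine map, which is why the scale a is taken positive here.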

Unsupervised Linear and Iterative Combinations of Patches for Image Denoising

Dec 01, 2022

Abstract:We introduce a parametric view of non-local two-step denoisers, for which BM3D is a major representative, where quadratic risk minimization is leveraged for unsupervised optimization. Within this paradigm, we propose to extend the underlying mathematical parametric formulation by iteration. This generalization can be expected to further improve the denoising performance, which is somewhat curbed by the impracticality of repeating the second stage for all two-step denoisers. The resulting formulation involves estimating an even larger number of parameters in an unsupervised manner, which is all the more challenging. Focusing on the parameterized form of NL-Ridge, the simplest but also most efficient non-local two-step denoiser, we propose a progressive scheme to approximate the parameters minimizing the risk. In the end, the denoised images are made up of iterative linear combinations of patches. Experiments on artificially noisy images as well as on real-world noisy images demonstrate that our method compares favorably with the very best unsupervised denoisers such as WNNM, outperforming the recent deep-learning-based approaches, while being much faster.

Towards a unified view of unsupervised non-local methods for image denoising: the NL-Ridge approach

Mar 01, 2022

Abstract:We propose a unified view of unsupervised non-local methods for image denoising that linearly combine noisy image patches. The best methods, established in different modeling and estimation frameworks, are two-step algorithms. Leveraging Stein's unbiased risk estimate (SURE) for the first step and "internal adaptation", a concept borrowed from deep learning theory, for the second one, we show that our NL-Ridge approach makes it possible to reconcile several patch aggregation methods for image denoising. In the second step, our closed-form aggregation weights are computed through multivariate Ridge regressions. Experiments on artificially noisy images demonstrate that NL-Ridge may outperform well-established state-of-the-art unsupervised denoisers such as BM3D and NL-Bayes, as well as recent unsupervised deep learning methods, while being conceptually simpler.
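To see how a ridge-type closed form can arise from quadratic risk minimization, here is a short derivation sketch under assumptions that the abstract does not state: $k$ similar patches of $n$ pixels are stacked as the columns of $Y = X + W \in \mathbb{R}^{n \times k}$, where $W$ is i.i.d. Gaussian noise of variance $\sigma^2$, and the estimate is a linear combination $\hat{X} = Y\Theta$ with $\Theta \in \mathbb{R}^{k \times k}$. The notation is chosen here for illustration and may differ from the paper's.

```latex
% Quadratic risk of the linear combination \hat{X} = Y\Theta
\mathbb{E}\,\|Y\Theta - X\|_F^2
  = \|X\Theta - X\|_F^2 + n\sigma^2 \|\Theta\|_F^2 .

% Minimizing over \Theta gives a multivariate ridge regression solution,
% which depends on the unknown clean patches X:
\Theta^{\star} = \left(X^\top X + n\sigma^2 I_k\right)^{-1} X^\top X .

% An unbiased estimate of the same risk that only involves Y (SURE):
\mathrm{SURE}(\Theta)
  = \|Y\Theta - Y\|_F^2 + 2n\sigma^2 \operatorname{tr}(\Theta) - nk\sigma^2 ,

% whose minimizer, when Y^\top Y is invertible, is
\Theta_{\mathrm{SURE}} = I_k - n\sigma^2 \left(Y^\top Y\right)^{-1} .
```

In a two-step scheme of this kind, a first estimate obtained from the SURE minimizer can stand in for the unknown $X$ in the ridge formula; whether this matches the paper's exact second step is an assumption of this sketch.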

DCT2net: an interpretable shallow CNN for image denoising

Jul 31, 2021

Abstract:This work tackles the issue of noise removal from images, focusing on the well-known DCT image denoising algorithm. The latter, stemming from signal processing, has been well studied over the years. Though very simple, it is still used in crucial parts of state-of-the-art "traditional" denoising algorithms such as BM3D. In the past few years, however, deep convolutional neural networks (CNN) have outperformed their traditional counterparts, making signal processing methods less attractive. In this paper, we demonstrate that a DCT denoiser can be seen as a shallow CNN and thereby its original linear transform can be tuned through gradient descent in a supervised manner, considerably improving its performance. This gives birth to a fully interpretable CNN called DCT2net. To deal with remaining artifacts induced by DCT2net, an original hybrid solution between DCT and DCT2net is proposed, combining the best that these two methods can offer: DCT2net is selected to process non-stationary image patches while DCT is optimal for piecewise smooth patches. Experiments on artificially noisy images demonstrate that the two-layer DCT2net provides comparable results to BM3D and is as fast as the DnCNN algorithm, which is composed of more than a dozen layers.
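For readers unfamiliar with the classical DCT denoiser that this abstract starts from, the following is a minimal sketch of one common variant: every overlapping patch is transformed with an orthonormal 2-D DCT, small coefficients are hard-thresholded, and the reconstructed patches are averaged. The patch size of 8, the threshold of 3σ and the function names (`dct_matrix`, `dct_denoise`) are assumptions made for illustration, not details taken from the paper. The matrix `D` plays the role of the linear transform that, according to the abstract, DCT2net tunes by gradient descent.

```python
import numpy as np

def dct_matrix(p):
    # Orthonormal DCT-II matrix of size p x p (rows are basis vectors).
    k = np.arange(p)[:, None]
    n = np.arange(p)[None, :]
    D = np.sqrt(2.0 / p) * np.cos(np.pi * (2 * n + 1) * k / (2 * p))
    D[0, :] = np.sqrt(1.0 / p)
    return D

def dct_denoise(noisy, sigma, p=8, thresh_factor=3.0):
    # Patch-wise DCT hard thresholding with uniform aggregation.
    # p and thresh_factor are illustrative defaults (assumptions).
    D = dct_matrix(p)
    height, width = noisy.shape
    acc = np.zeros((height, width), dtype=float)  # sum of patch estimates
    cnt = np.zeros((height, width), dtype=float)  # number of estimates per pixel
    for i in range(height - p + 1):
        for j in range(width - p + 1):
            patch = noisy[i:i + p, j:j + p]
            coef = D @ patch @ D.T                             # forward 2-D DCT
            coef[np.abs(coef) < thresh_factor * sigma] = 0.0   # hard threshold
            acc[i:i + p, j:j + p] += D.T @ coef @ D            # inverse 2-D DCT
            cnt[i:i + p, j:j + p] += 1.0
    return acc / cnt

rng = np.random.default_rng(0)
ramp = np.linspace(0.0, 1.0, 32)
clean = 100.0 + 50.0 * np.add.outer(ramp, ramp)   # smooth synthetic image
sigma = 20.0
noisy = clean + sigma * rng.normal(size=clean.shape)
denoised = dct_denoise(noisy, sigma)

mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((denoised - clean) ** 2)
print("MSE noisy:", mse_noisy, "MSE denoised:", mse_denoised)
```

Because both the forward and inverse transforms are fixed linear maps applied to every patch, they can be written as convolutions with a nonlinearity in between, which is the sense in which such a denoiser resembles a shallow CNN.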

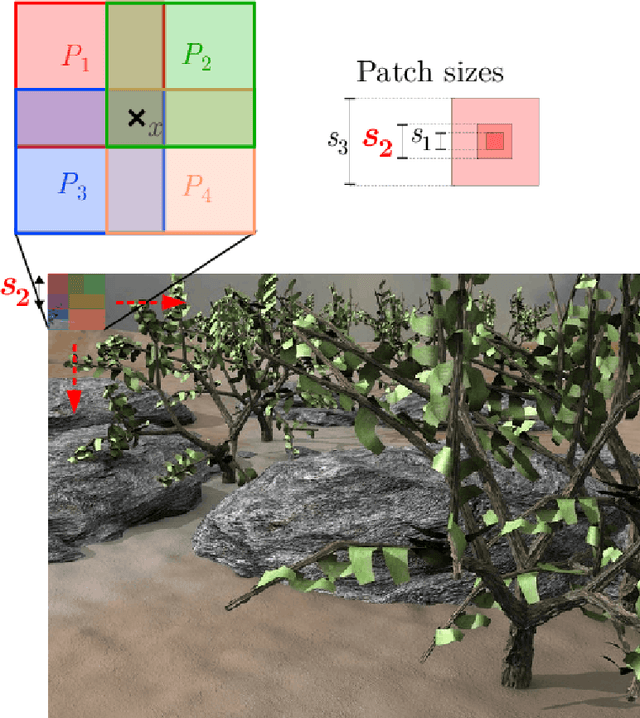

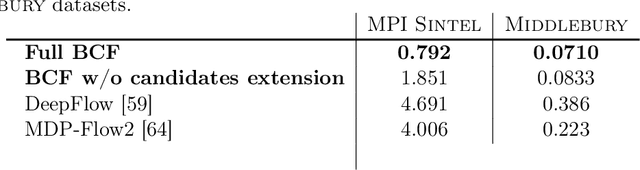

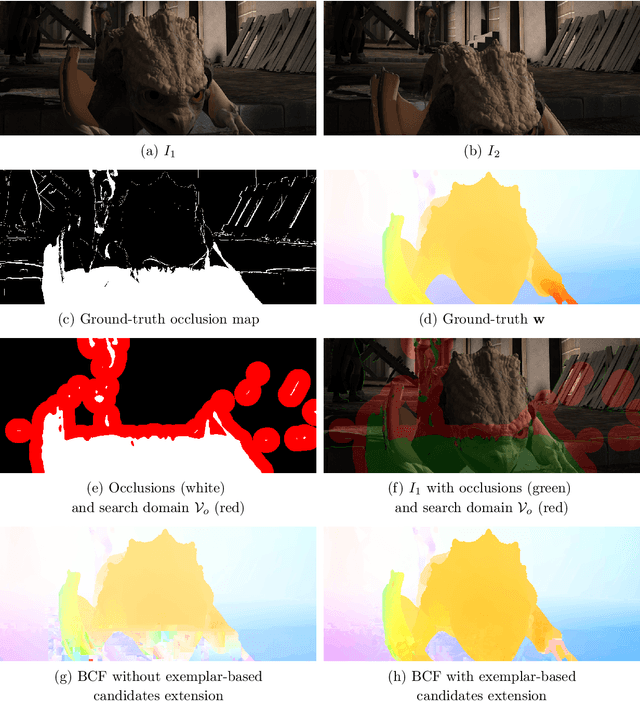

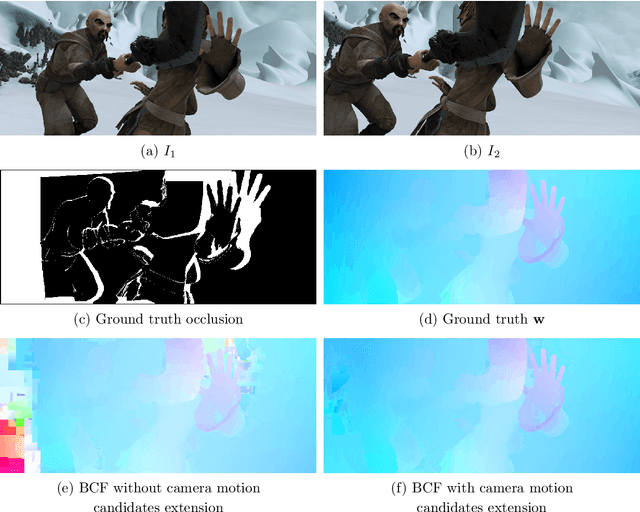

Aggregation of local parametric candidates with exemplar-based occlusion handling for optical flow

Jul 22, 2014

Abstract:Jointly handling large displacements, motion details and occlusions remains an open issue for reliable computation of optical flow in a video sequence. We propose a two-step aggregation paradigm to address this problem. The idea is to supply local motion candidates at every pixel in a first step, and then to combine them to determine the global optical flow field in a second step. We exploit local parametric estimations combined with patch correspondences and we experimentally demonstrate that they are sufficient to produce highly accurate motion candidates. The aggregation step is designed as the discrete optimization of a global regularized energy. The occlusion map is estimated jointly with the flow field throughout the two steps. We propose a generic exemplar-based approach for occlusion filling with motion vectors. We achieve state-of-the-art results in computer vision benchmarks, with particularly significant improvements in the case of large displacements and occlusions.
