Chandhini Suresh

Artificial intelligence for abnormality detection in high volume neuroimaging: a systematic review and meta-analysis

May 09, 2024

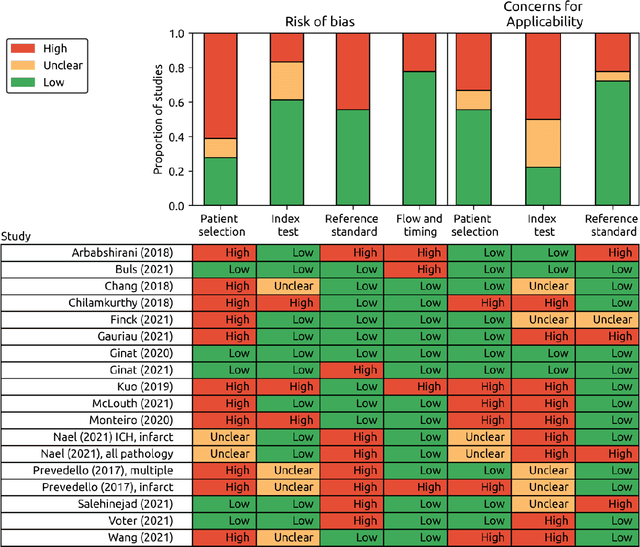

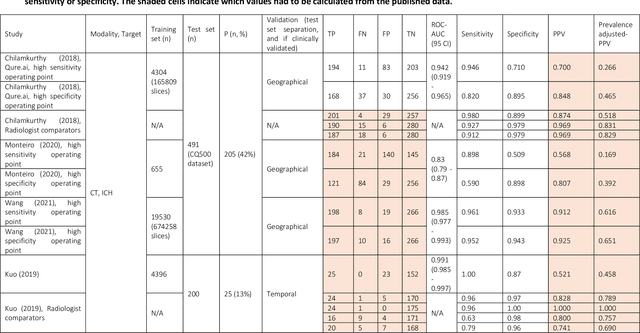

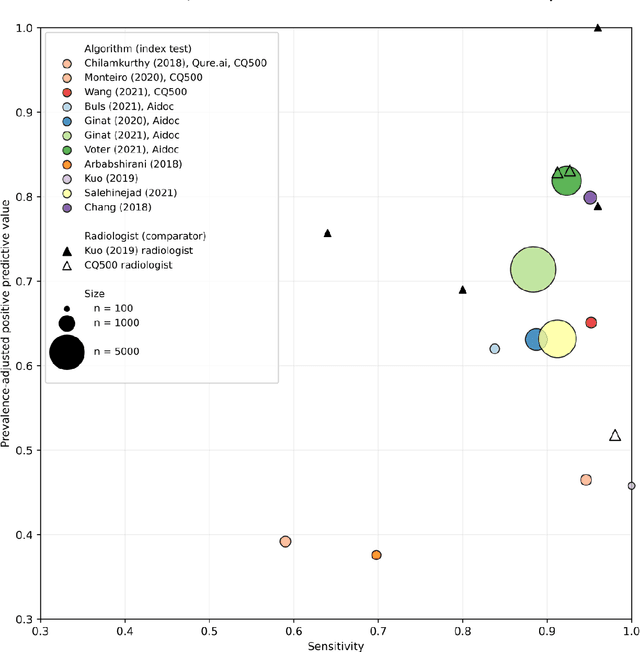

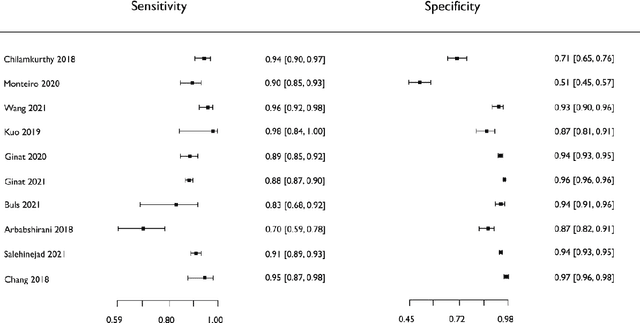

Abstract:

Purpose: Most studies evaluating artificial intelligence (AI) models that detect abnormalities in neuroimaging either test the models on unrepresentative patient cohorts or validate them insufficiently, leading to poor generalisability to real-world tasks. The aim was to determine the diagnostic test accuracy of AI models performing first-line, high-volume neuroimaging tasks and to summarise the evidence supporting their use.

Methods: Medline, Embase, Cochrane Library and Web of Science were searched until September 2021 for studies that temporally or externally validated AI capable of detecting abnormalities in first-line CT or MR neuroimaging. A bivariate random-effects model was used for meta-analysis where appropriate. PROSPERO: CRD42021269563.

Results: Only 16 studies were eligible for inclusion. Included studies were not compromised by unrepresentative datasets or inadequate validation methodology. Direct comparison with radiologists was available in 4/16 studies. 15/16 studies had a high risk of bias. Meta-analysis was only suitable for intracranial haemorrhage detection in CT imaging (10/16 studies), where AI systems had a pooled sensitivity of 0.90 (95% CI 0.85 to 0.94) and a pooled specificity of 0.90 (95% CI 0.83 to 0.95). The other AI studies, using CT and MRI, detected target conditions other than haemorrhage (2/16) or multiple target conditions (4/16). Only 3/16 studies implemented AI in clinical pathways, either for pre-read triage or as post-read discrepancy identifiers.

Conclusion: The paucity of eligible studies reflects that most abnormality detection AI studies were not adequately validated in representative clinical cohorts. The few studies describing how abnormality detection AI could impact patients and clinicians did not explore the full ramifications of clinical implementation.
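To make the pooling step in the Methods more concrete, the following is a minimal sketch of random-effects pooling of a proportion such as sensitivity. It is not the authors' analysis: the review used a bivariate model, which pools sensitivity and specificity jointly and accounts for their correlation, whereas this sketch applies the simpler univariate DerSimonian-Laird estimator on the logit scale to a single measure. The function name `pool_random_effects` and the counts in the usage lines are hypothetical and do not come from the included studies.

```python
import math


def inv_logit(x):
    """Map a logit value back to a proportion in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def pool_random_effects(events, totals):
    """Pool per-study proportions with a univariate DerSimonian-Laird model.

    events[i] / totals[i] is one study's proportion, e.g. true positives
    over all diseased cases for sensitivity. This is a simplification of
    the bivariate model named in the abstract (assumption, for illustration).
    Returns (pooled estimate, lower 95% bound, upper 95% bound).
    """
    y, v = [], []
    for e, n in zip(events, totals):
        # A 0.5 continuity correction keeps the logit finite when e == 0 or e == n.
        a, b = e + 0.5, n - e + 0.5
        y.append(math.log(a / b))      # logit of the corrected proportion
        v.append(1.0 / a + 1.0 / b)    # approximate within-study variance

    # Fixed-effect (inverse-variance) weights and Cochran's Q.
    w = [1.0 / vi for vi in v]
    sum_w = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum_w
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))

    # Method-of-moments estimate of the between-study variance tau^2.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance.
    w_star = [1.0 / (vi + tau2) for vi in v]
    mu = sum(ws * yi for ws, yi in zip(w_star, y)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))

    return inv_logit(mu), inv_logit(mu - 1.96 * se), inv_logit(mu + 1.96 * se)


# Hypothetical counts: detected haemorrhages and total haemorrhage cases per study.
true_positives = [88, 45, 190, 61]
diseased_cases = [100, 50, 200, 75]
estimate, lower, upper = pool_random_effects(true_positives, diseased_cases)
print(f"pooled sensitivity {estimate:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Pooling on the logit scale keeps the confidence bounds inside the interval from 0 to 1. A bivariate analysis would instead fit the logit sensitivity and logit specificity of each study together, which this sketch does not attempt.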
