Chamika Mihiranga Liyanagedera

Event-based Temporally Dense Optical Flow Estimation with Sequential Neural Networks

Oct 03, 2022

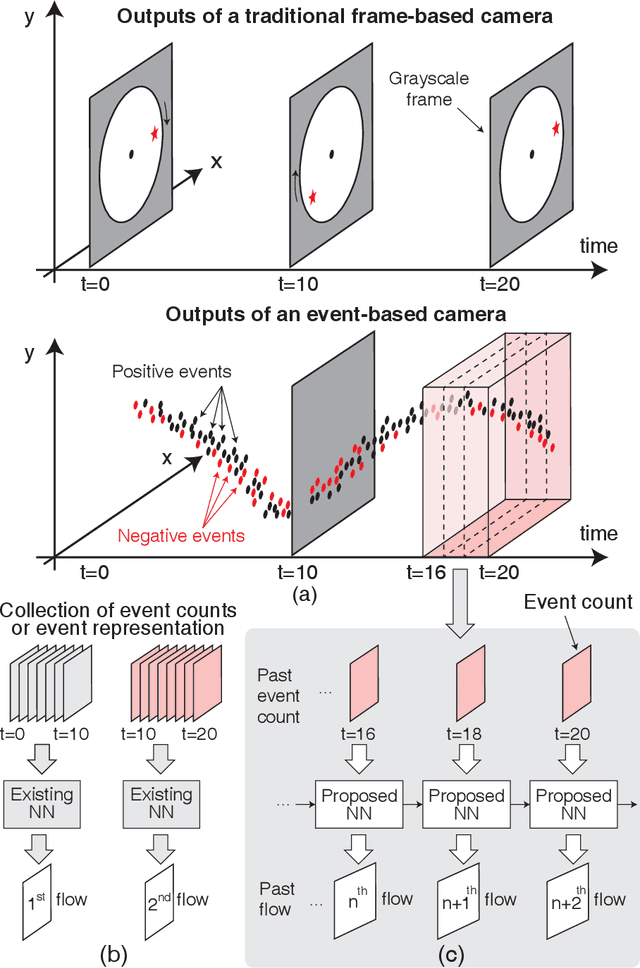

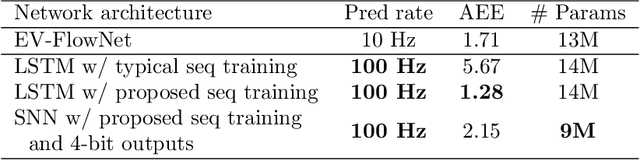

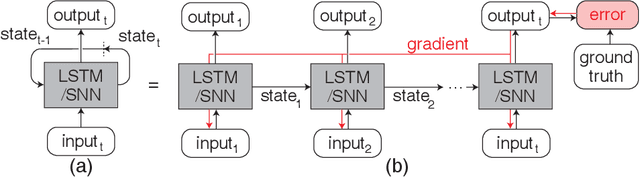

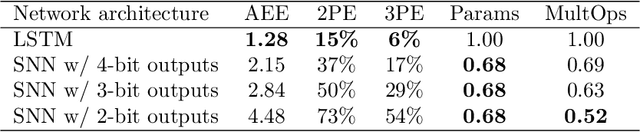

Abstract: Prior works on event-based optical flow estimation have investigated several gradient-based learning methods to train neural networks for predicting optical flow. However, they do not exploit the fast data rate of event streams; instead, they rely on a spatio-temporal representation constructed from events collected over a fixed period of time (often the interval between two grayscale frames). As a result, optical flow is evaluated at a frequency much lower than the rate at which an event-based camera produces data, leading to temporally sparse optical flow estimation. To predict temporally dense optical flow, we cast the problem as a sequential learning task and propose a training methodology for sequential networks that predict continuously on an event stream. We propose two types of networks: one focused on performance and another focused on compute efficiency. We first train long short-term memory networks (LSTMs) on the DSEC dataset and demonstrate 10x temporally denser optical flow estimation than existing flow estimation approaches. Having memory to draw on long-range temporal correlations gives LSTMs a 19.7% improvement in flow prediction accuracy over similar networks with no memory elements. We subsequently show that the inherent recurrence of spiking neural networks (SNNs) enables them to learn and estimate temporally dense optical flow with 31.8% fewer parameters than LSTMs, but with slightly increased error. This demonstrates the potential for energy-efficient implementation of fast optical flow prediction using SNNs.
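As a rough illustration of what a temporally dense input could look like, the sketch below splits one fixed window of events into several shorter polarity-count frames that a sequential network could consume one step at a time. It is not the authors' representation or code: the function name `bin_events`, the `(timestamp_us, x, y, polarity)` tuple layout, and the integer microsecond timestamps are all assumptions made for this example.

```python
from typing import List, Tuple

# Assumed event layout: (timestamp in integer microseconds, x, y, polarity in {0, 1}).
Event = Tuple[int, int, int, int]


def bin_events(
    events: List[Event],
    t_start: int,
    t_end: int,
    num_bins: int,
    height: int,
    width: int,
) -> List[List[List[List[int]]]]:
    """Split events in [t_start, t_end) into num_bins polarity-count frames.

    frames[b][p][y][x] is the number of events with polarity p that
    occurred at pixel (x, y) during time bin b.
    """
    frames = [
        [[[0] * width for _ in range(height)] for _ in range(2)]
        for _ in range(num_bins)
    ]
    duration = t_end - t_start
    for t, x, y, p in events:
        if not (t_start <= t < t_end):
            continue  # ignore events outside the window
        # Integer arithmetic keeps the bin index exact for integer timestamps.
        b = (t - t_start) * num_bins // duration
        frames[b][p][y][x] += 1
    return frames


# Hypothetical 100 ms window split into 10 bins of 10 ms each.
events = [(0, 1, 0, 1), (40_000, 1, 0, 1), (50_000, 2, 1, 0), (99_900, 0, 0, 1)]
frames = bin_events(events, t_start=0, t_end=100_000, num_bins=10, height=2, width=3)
```

Each of the ten frames would then be one time step for a recurrent model, so a prediction can be produced per bin rather than once per window.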

DOTIE -- Detecting Objects through Temporal Isolation of Events using a Spiking Architecture

Oct 03, 2022

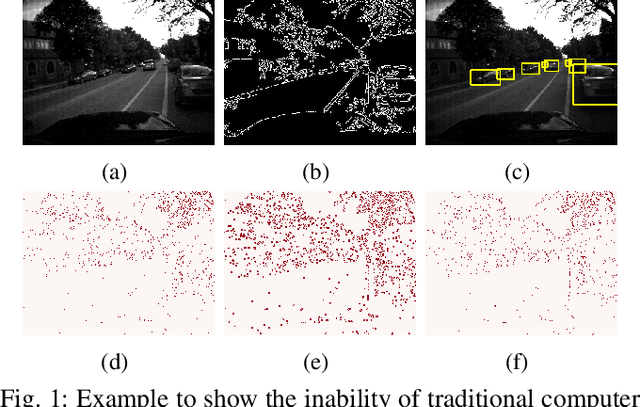

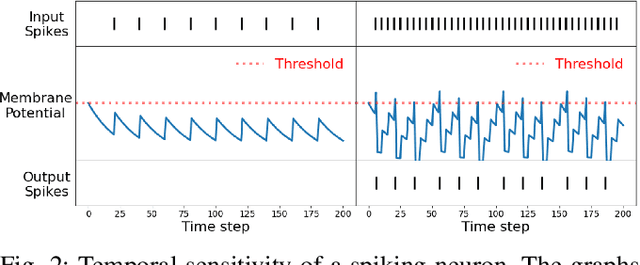

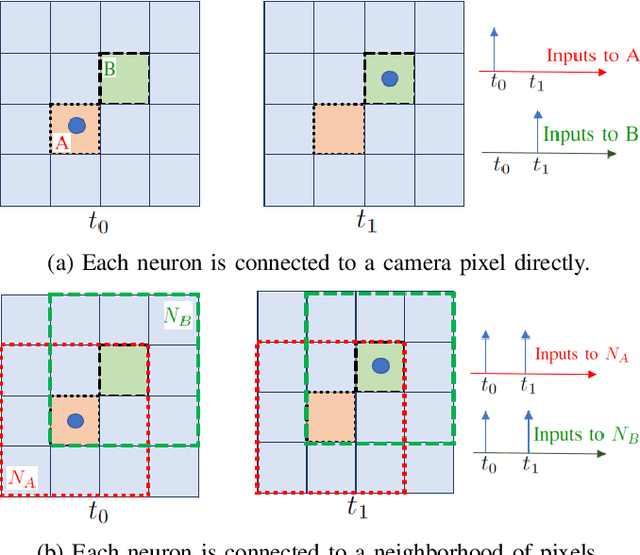

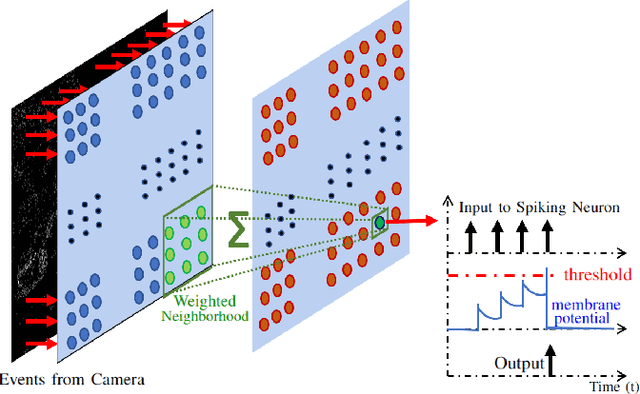

Abstract: Vision-based autonomous navigation systems rely on fast and accurate object detection algorithms to avoid obstacles. Algorithms and sensors designed for such systems need to be computationally efficient, due to the limited energy of the hardware used for deployment. Biologically inspired event cameras are a good candidate as a vision sensor for such systems due to their speed, energy efficiency, and robustness to varying lighting conditions. However, traditional computer vision algorithms fail to work on event-based outputs, as these outputs lack photometric features such as light intensity and texture. In this work, we propose a novel technique that utilizes the temporal information inherently present in the events to efficiently detect moving objects. Our technique consists of a lightweight spiking neural architecture that is able to separate events based on the speed of the corresponding objects. These separated events are then further grouped spatially to determine object boundaries. This method of object detection is both asynchronous and robust to camera noise. In addition, it shows good performance in scenarios with events generated by static objects in the background, where existing event-based algorithms fail. We show that by utilizing our architecture, autonomous navigation systems can have minimal latency and energy overheads for performing object detection.
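One way to picture how a spiking neuron can separate events by speed is a leaky integrate-and-fire (LIF) unit per pixel: events that arrive in quick succession push the membrane potential over the threshold, while sparse events leak away before they accumulate. The sketch below is a simplified illustration under that assumption, not the DOTIE architecture itself. The function name `lif_filter`, the per-pixel neuron layout, and the values of `weight`, `threshold`, and `tau_us` are hypothetical, and the subsequent spatial grouping step is not shown.

```python
import math
from typing import Dict, Iterable, List, Tuple

# Assumed event layout: (timestamp in microseconds, x, y), sorted by timestamp.
Event = Tuple[int, int, int]


def lif_filter(
    events: Iterable[Event],
    weight: float = 1.0,
    threshold: float = 1.5,
    tau_us: float = 5_000.0,
) -> List[Event]:
    """Keep only the events that make their pixel's LIF neuron spike."""
    # (x, y) -> (membrane potential, timestamp of the last update)
    state: Dict[Tuple[int, int], Tuple[float, int]] = {}
    passed: List[Event] = []
    for t, x, y in events:
        v, t_last = state.get((x, y), (0.0, t))
        # Leak since the last event at this pixel, then integrate the new event.
        v = v * math.exp(-(t - t_last) / tau_us) + weight
        if v >= threshold:
            passed.append((t, x, y))
            v = 0.0  # reset the membrane potential after a spike
        state[(x, y)] = (v, t)
    return passed


# Pixel (0, 0) receives events 1 ms apart (fast); pixel (5, 5) every 50 ms (slow).
events = [(0, 0, 0), (0, 5, 5), (1_000, 0, 0), (2_000, 0, 0), (50_000, 5, 5), (100_000, 5, 5)]
fast_events = lif_filter(events)
```

With these hypothetical parameters, only the densely timed events at pixel (0, 0) cause a spike; the widely spaced events at pixel (5, 5) decay between arrivals and are filtered out.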
