Carmel Baharav

Fair, Manipulation-Robust, and Transparent Sortition

Jun 26, 2024

Abstract: Sortition, the random selection of political representatives, is increasingly being used around the world to choose participants of deliberative processes like Citizens' Assemblies. Motivated by sortition's practical importance, there has been a recent flurry of research on sortition algorithms, whose task it is to select a panel from among a pool of volunteers. This panel must satisfy quotas enforcing representation of key population subgroups. Past work has contributed an algorithmic approach for fulfilling this task while ensuring that volunteers' chances of selection are maximally equal, as measured by any convex equality objective. The question, then, is: which equality objective is the right one? Past work has mainly studied the objectives Minimax and Leximin, which respectively minimize the maximum and maximize the minimum chance of selection given to any volunteer. Recent work showed that both of these objectives have key weaknesses: Minimax is highly robust to manipulation but is arbitrarily unfair; oppositely, Leximin is highly fair but arbitrarily manipulable. In light of this gap, we propose a new equality objective, Goldilocks, that aims to achieve these ideals simultaneously by ensuring that no volunteer receives too little or too much chance of selection. We theoretically bound the extent to which Goldilocks achieves these ideals, finding that in an important sense, Goldilocks recovers among the best available solutions in a given instance. We then extend our bounds to the case where the output of Goldilocks is transformed to achieve a third goal, Transparency. Our empirical analysis of Goldilocks in real data is even more promising: we find that this objective achieves nearly instance-optimal minimum and maximum selection probabilities simultaneously in most real instances -- an outcome not even guaranteed to be possible for any algorithm.

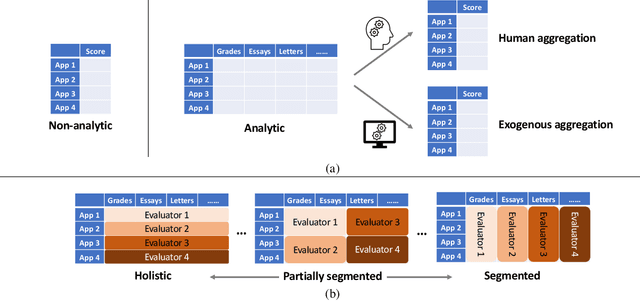

Allocation Schemes in Analytic Evaluation: Applicant-Centric Holistic or Attribute-Centric Segmented?

Sep 18, 2022

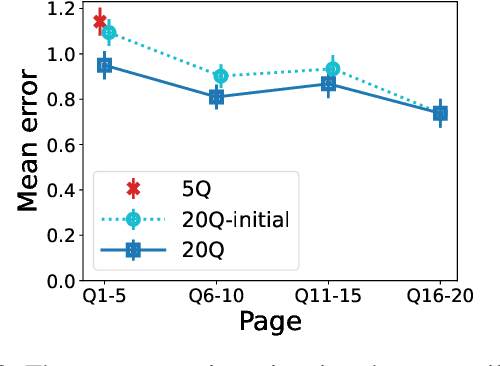

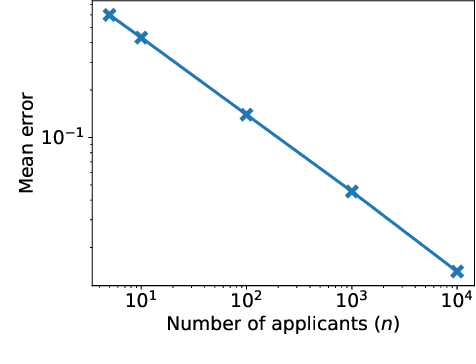

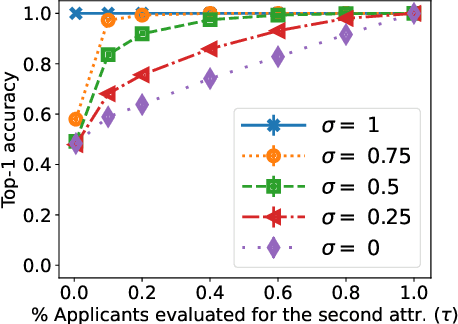

Abstract: Many applications such as hiring and university admissions involve evaluation and selection of applicants. These tasks are fundamentally difficult, and require combining evidence from multiple different aspects (what we term "attributes"). In these applications, the number of applicants is often large, and a common practice is to assign the task to multiple evaluators in a distributed fashion. Specifically, in the often-used holistic allocation, each evaluator is assigned a subset of the applicants, and is asked to assess all relevant information for their assigned applicants. However, such an evaluation process is subject to issues such as miscalibration (evaluators see only a small fraction of the applicants and may not get a good sense of relative quality), and discrimination (evaluators are influenced by irrelevant information about the applicants). We identify that such attribute-based evaluation allows alternative allocation schemes. Specifically, we consider assigning each evaluator more applicants but fewer attributes per applicant, termed segmented allocation. We compare segmented allocation to holistic allocation on several dimensions via theoretical and experimental methods. We establish various tradeoffs between these two approaches, and identify conditions under which one approach results in more accurate evaluation than the other.
