Carina Benz

Appropriate Reliance on AI Advice: Conceptualization and the Effect of Explanations

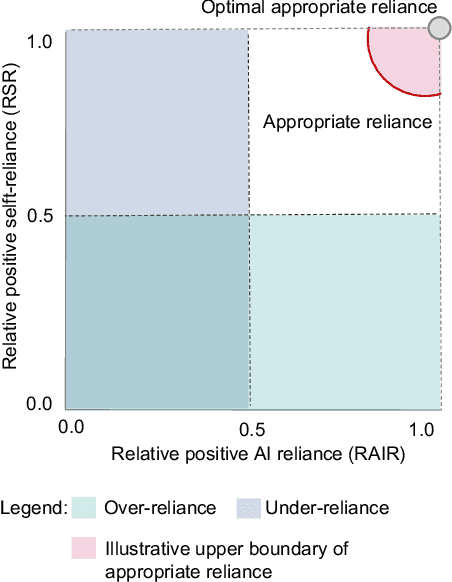

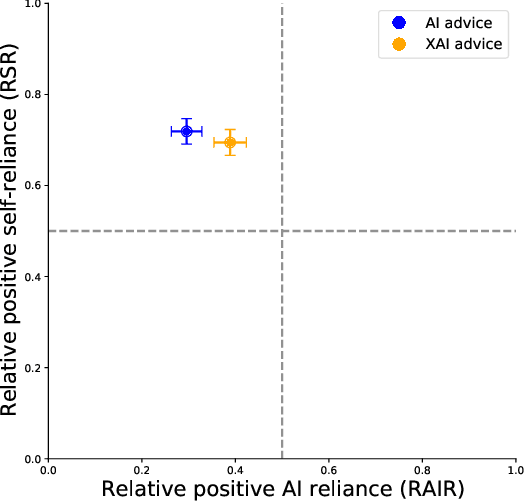

Feb 07, 2023Abstract:AI advice is becoming increasingly popular, e.g., in investment and medical treatment decisions. As this advice is typically imperfect, decision-makers have to exert discretion as to whether actually follow that advice: they have to "appropriately" rely on correct and turn down incorrect advice. However, current research on appropriate reliance still lacks a common definition as well as an operational measurement concept. Additionally, no in-depth behavioral experiments have been conducted that help understand the factors influencing this behavior. In this paper, we propose Appropriateness of Reliance (AoR) as an underlying, quantifiable two-dimensional measurement concept. We develop a research model that analyzes the effect of providing explanations for AI advice. In an experiment with 200 participants, we demonstrate how these explanations influence the AoR, and, thus, the effectiveness of AI advice. Our work contributes fundamental concepts for the analysis of reliance behavior and the purposeful design of AI advisors.

On the Influence of Explainable AI on Automation Bias

Apr 19, 2022

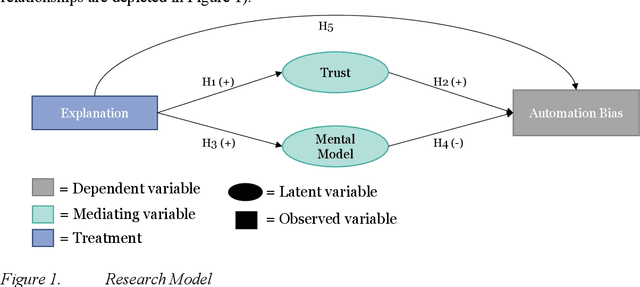

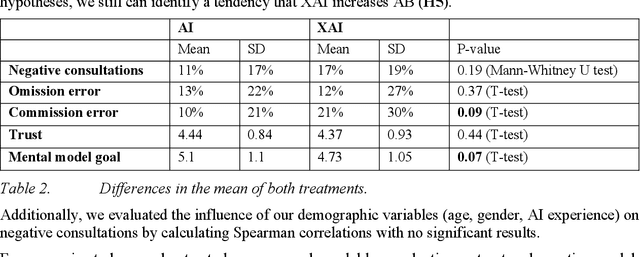

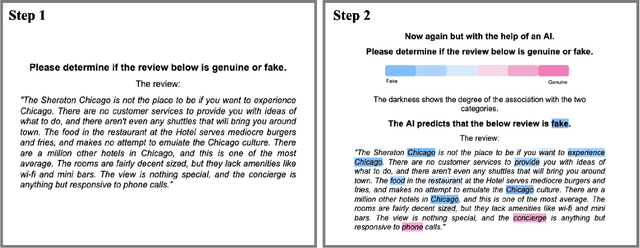

Abstract:Artificial intelligence (AI) is gaining momentum, and its importance for the future of work in many areas, such as medicine and banking, is continuously rising. However, insights on the effective collaboration of humans and AI are still rare. Typically, AI supports humans in decision-making by addressing human limitations. However, it may also evoke human bias, especially in the form of automation bias as an over-reliance on AI advice. We aim to shed light on the potential to influence automation bias by explainable AI (XAI). In this pre-test, we derive a research model and describe our study design. Subsequentially, we conduct an online experiment with regard to hotel review classifications and discuss first results. We expect our research to contribute to the design and development of safe hybrid intelligence systems.

Should I Follow AI-based Advice? Measuring Appropriate Reliance in Human-AI Decision-Making

Apr 14, 2022

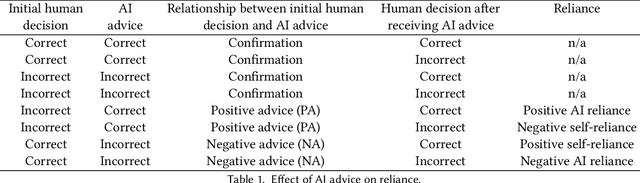

Abstract:Many important decisions in daily life are made with the help of advisors, e.g., decisions about medical treatments or financial investments. Whereas in the past, advice has often been received from human experts, friends, or family, advisors based on artificial intelligence (AI) have become more and more present nowadays. Typically, the advice generated by AI is judged by a human and either deemed reliable or rejected. However, recent work has shown that AI advice is not always beneficial, as humans have shown to be unable to ignore incorrect AI advice, essentially representing an over-reliance on AI. Therefore, the aspired goal should be to enable humans not to rely on AI advice blindly but rather to distinguish its quality and act upon it to make better decisions. Specifically, that means that humans should rely on the AI in the presence of correct advice and self-rely when confronted with incorrect advice, i.e., establish appropriate reliance (AR) on AI advice on a case-by-case basis. Current research lacks a metric for AR. This prevents a rigorous evaluation of factors impacting AR and hinders further development of human-AI decision-making. Therefore, based on the literature, we derive a measurement concept of AR. We propose to view AR as a two-dimensional construct that measures the ability to discriminate advice quality and behave accordingly. In this article, we derive the measurement concept, illustrate its application and outline potential future research.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge