Canh Hoang Nguyen

IMG2IMU: Applying Knowledge from Large-Scale Images to IMU Applications via Contrastive Learning

Sep 02, 2022

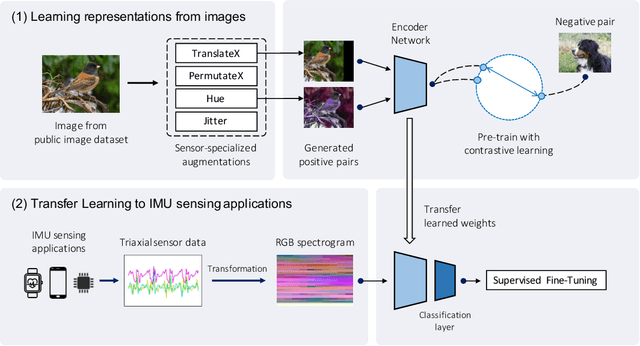

Abstract: Recent advances in machine learning have shown that representations pre-trained via self-supervised learning can achieve high accuracy on tasks with little training data. Unlike in the vision and natural language processing domains, such pre-training is challenging for IMU-based applications, as only a few publicly available datasets are large and diverse enough to learn generalizable representations. To overcome this problem, we propose IMG2IMU, a novel approach that adapts representations pre-trained on large-scale images to diverse few-shot IMU sensing tasks. We convert the sensor data into visually interpretable spectrograms so that the model can utilize the knowledge gained from vision. Further, we apply contrastive learning with an augmentation set we designed, in order to learn representations tailored to interpreting sensor data. Our extensive evaluations on five different IMU sensing tasks show that IMG2IMU consistently outperforms the baselines, illustrating that vision knowledge can be incorporated into a few-shot learning environment for IMU sensing tasks.
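The spectrogram conversion mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `imu_to_spectrogram_image`, the window and hop sizes, the log scaling, and the choice to map each IMU axis to one image channel are all assumptions made for illustration. The 50 Hz sampling rate in the usage lines is likewise hypothetical.

```python
import numpy as np


def imu_to_spectrogram_image(window, n_fft=64, hop=16):
    """Turn a (n_samples, n_axes) IMU window into an image-like array.

    Returns an array of shape (n_axes, n_fft // 2 + 1, n_frames) with values
    in [0, 1]. Mapping one sensor axis to one image channel is an assumption
    of this sketch, not a detail taken from the abstract.
    """
    window = np.asarray(window, dtype=np.float64)
    n_samples, n_axes = window.shape
    n_frames = 1 + (n_samples - n_fft) // hop
    taper = np.hanning(n_fft)  # Hann window to reduce spectral leakage

    channels = []
    for axis in range(n_axes):
        signal = window[:, axis]
        # Slice the signal into overlapping, tapered frames.
        frames = np.stack(
            [signal[i * hop : i * hop + n_fft] * taper for i in range(n_frames)]
        )
        # Magnitude of the short-time Fourier transform, one row per frame.
        magnitude = np.abs(np.fft.rfft(frames, axis=1))
        # Log-compress and transpose to (frequency, time).
        log_mag = np.log1p(magnitude).T
        # Min-max normalize each channel to [0, 1], like pixel intensities.
        span = log_mag.max() - log_mag.min()
        if span > 0:
            channels.append((log_mag - log_mag.min()) / span)
        else:
            channels.append(np.zeros_like(log_mag))
    return np.stack(channels)


# Synthetic 3-axis window: 256 samples at a hypothetical 50 Hz sampling rate.
rng = np.random.default_rng(0)
t = np.arange(256) / 50.0
window = np.stack([np.sin(2 * np.pi * f * t) for f in (1.0, 2.0, 5.0)], axis=1)
window = window + 0.05 * rng.standard_normal((256, 3))

image = imu_to_spectrogram_image(window)
print(image.shape)  # (3, 33, 13)
```

An array in this layout could then be resized and passed to an image encoder, which is one plausible way for a vision-pre-trained model to consume sensor data.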
